File:2016 MetaLearningwithMemoryAugmented Fig1.png

Original file (1,160 × 313 pixels, file size: 77 KB, MIME type: image/png)

Summary

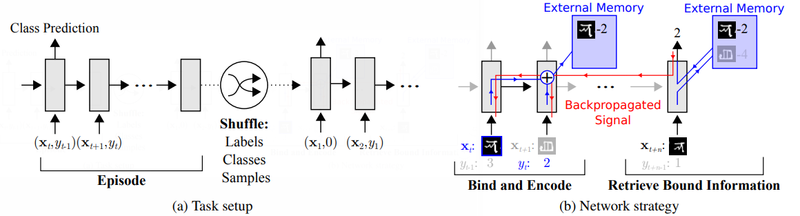

Figure 1. Task structure. (a) Omniglot images (or x-values for regression), $x_t$, are presented with time-offset labels (or function values), $y_{t-1}$, to prevent the network from simply mapping the class labels to the output. From episode to episode, the classes to be presented in the episode, their associated labels, and the specific samples are all shuffled. (b) A successful strategy would involve the use of an external memory to store bound sample representation-class label information, which can then be retrieved at a later point for successful classification when a sample from an already-seen class is presented. Specifically, sample data $x_t$ from a particular time step should be bound to the appropriate class label $y_t$, which is presented in the subsequent time step. Later, when a sample from this same class is seen, it should retrieve this bound information from the external memory to make a prediction. Backpropagated error signals from this prediction step will then shape the weight updates from the earlier steps in order to promote this binding strategy. In: Santoro et al. (2016).
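The episode construction described in panel (a) can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the code used by Santoro et al.: the function name `make_episode`, the `samples_by_class` dictionary, and the use of `None` as the placeholder label at the first time step are all hypothetical choices made for this sketch.

```python
import random

def make_episode(samples_by_class, num_classes, episode_len, rng):
    """Build one episode of (x_t, y_{t-1}) inputs and y_t targets (illustrative)."""
    # Shuffle which classes appear in this episode.
    classes = rng.sample(sorted(samples_by_class), num_classes)
    # Shuffle which label each chosen class receives, for this episode only.
    labels = list(range(num_classes))
    rng.shuffle(labels)
    label_of = dict(zip(classes, labels))

    xs, ys = [], []
    for _ in range(episode_len):
        cls = rng.choice(classes)
        xs.append(rng.choice(samples_by_class[cls]))  # specific sample is random too
        ys.append(label_of[cls])

    # Offset the labels by one step so that x_t is paired with y_{t-1}.
    # None is an assumed placeholder for the missing label at the first step.
    prev_labels = [None] + ys[:-1]
    inputs = list(zip(xs, prev_labels))
    return inputs, ys

# Toy stand-in for a dataset: class name -> list of samples.
toy = {"a": ["a0", "a1"], "b": ["b0", "b1"], "c": ["c0", "c1"]}
inputs, targets = make_episode(toy, num_classes=2, episode_len=5, rng=random.Random(0))
```

Because the class-to-label assignment is redrawn for every episode, a model cannot solve the task by memorizing a fixed mapping in its weights. It has to bind each sample to the label that arrives one step later and retrieve that binding when the class reappears.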

File history

| | Date/Time | Dimensions | User | Comment |
|---|---|---|---|---|
| current | 23:56, 18 November 2018 | 1,160 × 313 (77 KB) | Omoreira | '''Figure 1'''. Task structure. (a) Omniglot images (or x-values for regression), <math>x_t</math>, are presented with time-offset labels (or function values), <math>y_{t-1}</math>, to prevent the network from simp... |

File usage

The following 3 pages use this file: