Bidirectional LSTM-CNN (BLSTM-CNN) Training System

A Bidirectional LSTM-CNN (BLSTM-CNN) Training System is a biLSTM Training System that can train a BLSTM-CNN.

- Context:

- …

- Example(s):

- a Bidirectional LSTM-CNN-CRF Training System,

- a BLSTM-CNN Training System that uses a Softmax classifier.

- …

- Counter-Example(s):

- See: Bidirectional Neural Network, Convolutional Neural Network, Conditional Random Field, Bidirectional Recurrent Neural Network, Dynamic Neural Network.

References

2018

- (Reimers & Gurevych, 2018) ⇒ EMNLP 2017 BiLSTM-CNN-CRF repository: https://github.com/UKPLab/emnlp2017-bilstm-cnn-crf Retrieved: 2018-07-08.

- QUOTE: This code can be used to run the systems proposed in the following papers:

- Huang et al., Bidirectional LSTM-CRF Models for Sequence Tagging - You can choose between a softmax and a CRF classifier.

- Ma and Hovy, End-to-end Sequence Labeling via Bi-directional LSTM-CNNs-CRF - Character based word representations using CNNs is achieved by setting the parameter `charEmbeddings` to `CNN`.

- Lample et al, Neural Architectures for Named Entity Recognition - Character based word representations using LSTMs is achieved by setting the parameter `charEmbeddings` to `LSTM`.

- Sogard, Goldberg: Deep multi-task learning with low level tasks supervised at lower layers - Train multiple task and supervise them on different levels.
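As a rough illustration of the options named in the quote, the sketch below models a hyperparameter dictionary in plain Python. Only the `charEmbeddings` parameter, its `CNN` and `LSTM` values, and the softmax/CRF choice come from the quote. The `classifier` key, the `None` value, and the `validate_params` helper are hypothetical and should not be read as the repository's API.

```python
# Hypothetical sketch: this is NOT the repository's actual API.
# Only 'charEmbeddings' and its values 'CNN' / 'LSTM' are named in the quote.

ALLOWED = {
    "charEmbeddings": {None, "CNN", "LSTM"},  # None = no character features (assumption)
    "classifier": {"Softmax", "CRF"},         # key name is an assumption
}


def validate_params(params):
    """Return a copy of params, raising ValueError on an unsupported entry."""
    for key, value in params.items():
        if key not in ALLOWED:
            raise ValueError(f"unknown parameter: {key!r}")
        if value not in ALLOWED[key]:
            raise ValueError(f"unsupported value for {key!r}: {value!r}")
    return dict(params)


# Ma and Hovy style: CNN character representations with a CRF output layer.
blstm_cnn_crf = validate_params({"charEmbeddings": "CNN", "classifier": "CRF"})

# Lample et al. style: LSTM character representations instead of a CNN.
blstm_lstm_crf = validate_params({"charEmbeddings": "LSTM", "classifier": "CRF"})

# A BLSTM-CNN that uses a softmax classifier instead of a CRF.
blstm_cnn_softmax = validate_params({"charEmbeddings": "CNN", "classifier": "Softmax"})
```

Keeping the character-representation choice and the classifier choice as independent settings is what lets one training system cover several of the listed papers.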

2016

- (Ma & Hovy, 2016) ⇒ Xuezhe Ma, and Eduard Hovy (2016). "End-to-end Sequence Labeling via Bi-directional LSTM-CNNs-CRF". arXiv preprint arXiv:1603.01354.

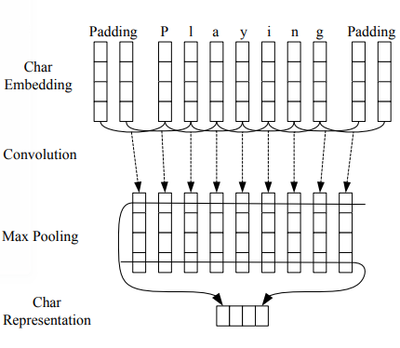

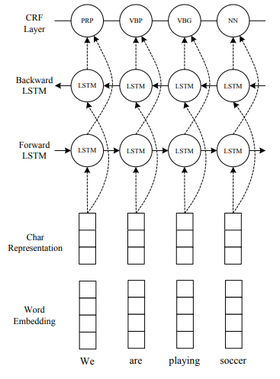

- QUOTE: Finally, we construct our neural network model by feeding the output vectors of BLSTM into a CRF layer. Figure 3 illustrates the architecture of our network in detail. For each word, the character-level representation is computed by the CNN in Figure 1 with character embeddings as inputs. Then the character-level representation vector is concatenated with the word embedding vector to feed into the BLSTM network. Finally, the output vectors of BLSTM are fed to the CRF layer to jointly decode the best label sequence. As shown in Figure 3, dropout layers are applied on both the input and output vectors of BLSTM.
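The first two steps of the quoted pipeline (a CNN over character embeddings, then concatenation with the word embedding) can be sketched in plain Python. This is a toy illustration, not the authors' implementation: the dimensions, the random weights, the zero padding, and the helper names `char_cnn` and `blstm_input` are all assumptions, and the dropout, BLSTM, and CRF layers are left out.

```python
import random

random.seed(0)

CHAR_DIM = 4      # character embedding size (illustrative)
NUM_FILTERS = 5   # number of convolution filters (illustrative)
WINDOW = 3        # convolution window over characters (illustrative)
WORD_DIM = 6      # word embedding size (illustrative)


def rand_vec(n):
    """Random vector standing in for learned parameters."""
    return [random.uniform(-0.5, 0.5) for _ in range(n)]


# Toy lookup table with random values; a real system would learn these.
char_emb = {c: rand_vec(CHAR_DIM) for c in "abcdefghijklmnopqrstuvwxyz"}
PAD = [0.0] * CHAR_DIM
# One weight vector per filter, spanning WINDOW stacked character embeddings.
filters = [rand_vec(WINDOW * CHAR_DIM) for _ in range(NUM_FILTERS)]


def char_cnn(word):
    """Convolve each filter over the characters, then max-pool over positions."""
    # Zero padding on both sides (an assumption) so short words still fill a window.
    chars = [PAD] * (WINDOW - 1) + [char_emb[c] for c in word] + [PAD] * (WINDOW - 1)
    pooled = []
    for f in filters:
        scores = []
        for start in range(len(chars) - WINDOW + 1):
            window = [x for emb in chars[start:start + WINDOW] for x in emb]
            scores.append(sum(w * x for w, x in zip(f, window)))
        pooled.append(max(scores))
    return pooled


def blstm_input(word, word_vec):
    """Concatenate the character-level vector with the word embedding."""
    return char_cnn(word) + list(word_vec)


word_vec = rand_vec(WORD_DIM)
x = blstm_input("tagging", word_vec)
print(len(x))  # length is NUM_FILTERS + WORD_DIM
```

Max-pooling over positions gives every word a fixed-size character vector regardless of its length, which is what makes the concatenation with the word embedding well defined.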

Figure 1: The convolution neural network for extracting character-level representations of words. Dashed arrows indicate a dropout layer applied before character embeddings are input to CNN.

Figure 3: The main architecture of our neural network. The character representation for each word is computed by the CNN in Figure 1. Then the character representation vector is concatenated with the word embedding before feeding into the BLSTM network. Dashed arrows indicate dropout layers applied on both the input and output vectors of BLSTM.
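The final step in the quoted description, where a CRF layer jointly decodes the best label sequence, is commonly done with Viterbi decoding over per-position scores and label-transition scores. The sketch below is a minimal plain-Python version under that assumption. The function name `viterbi_decode`, the label set, and all score values are hypothetical; in a trained system the emission scores would come from the BLSTM outputs and the transition scores would be learned.

```python
def viterbi_decode(emissions, transitions):
    """Return (best_score, best_path) for a linear-chain model.

    emissions[t][j]   : score of label j at position t
    transitions[i][j] : score of moving from label i to label j
    """
    num_labels = len(transitions)
    score = list(emissions[0])
    backpointers = []
    for t in range(1, len(emissions)):
        new_score, pointers = [], []
        for j in range(num_labels):
            # Best previous label for ending in label j at position t.
            best_i = max(range(num_labels), key=lambda i: score[i] + transitions[i][j])
            pointers.append(best_i)
            new_score.append(score[best_i] + transitions[best_i][j] + emissions[t][j])
        score = new_score
        backpointers.append(pointers)
    # Follow the backpointers from the best final label.
    last = max(range(num_labels), key=lambda j: score[j])
    path = [last]
    for pointers in reversed(backpointers):
        path.append(pointers[path[-1]])
    path.reverse()
    return score[last], path


LABELS = ["O", "B", "I"]  # illustrative tag set
# Made-up scores: picking the best label per position would give O then I.
emissions = [[1.0, 0.8, 0.0],
             [0.0, 0.1, 1.0]]
# Made-up transitions: heavily penalise O -> I, leave everything else neutral.
transitions = [[0.0, 0.0, -10.0],
               [0.0, 0.0, 0.0],
               [0.0, 0.0, 0.0]]

best_score, best_path = viterbi_decode(emissions, transitions)
print([LABELS[j] for j in best_path])  # ['B', 'I']
```

In this toy input, choosing the top label at each position independently would produce the sequence O, I, which the transition scores penalise. Decoding jointly picks B, I instead, which is the behaviour that separates a CRF output layer from a per-token softmax.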