Contract Understanding Atticus Dataset (CUAD) Benchmark

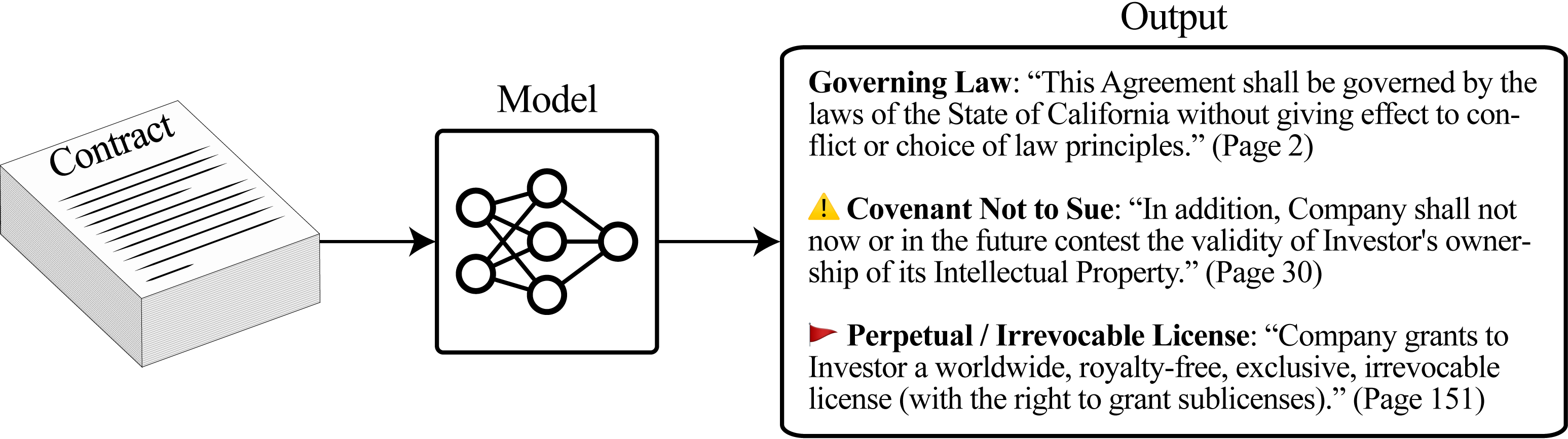

A Contract Understanding Atticus Dataset (CUAD) Benchmark is a legal NLP benchmark dataset that provides expert-annotated commercial contracts with 41 clause type labels for evaluating contract review automation systems through span-selection question answering.

- AKA: CUAD, CUAD Benchmark, CUAD Dataset, Contract Understanding Atticus Dataset, CUAD (Commercial Contract Dataset), CUAD (Contract Understanding Atticus Dataset), Atticus Contract Dataset, CUAD Legal Contract Corpus.

- Context:

- It can typically contain CUAD Commercial Legal Contracts with CUAD expert annotations for CUAD contract review tasks.

- It can typically identify CUAD 41 Legal Clause Types crucial for CUAD contract review, especially in CUAD corporate transactions such as CUAD mergers and acquisitions.

- It can typically provide CUAD 13,000+ Annotations across CUAD 510 contracts created by CUAD legal experts from The Atticus Project.

- It can typically support CUAD Contract Clause Extraction through CUAD salient portion highlighting.

- It can typically enable CUAD Transformer Model Training through CUAD labeled training data.

- It can typically formulate CUAD Span-Selection Question-Answering Tasks that follow the SQuAD 2.0 format, in which a question may have no answer in the given text.

- It can typically predict CUAD Start and End Token Positions of CUAD text segments for CUAD legal review.

- It can typically generate CUAD Questions that identify CUAD label categories with CUAD descriptive explanations.

- ...

- It can often facilitate CUAD NLP Research and CUAD NLP development focused on CUAD legal contract review.

- It can often serve as a CUAD Challenging Research Benchmark for the broader CUAD NLP community.

- It can often demonstrate CUAD Model Performance Variation based on CUAD model design and CUAD training dataset size.

- It can often require CUAD Expert Annotators for CUAD dataset creation and CUAD quality assurance.

- It can often employ CUAD Five Core Evaluation Metrics designed for CUAD class imbalance handling.

- It can often utilize CUAD Jaccard Similarity Coefficient for CUAD span matching validation.

- It can often address CUAD Needle-in-Haystack Characteristic where CUAD relevant clauses comprise only about 10% of CUAD contract content.

- It can often apply CUAD Sliding Window Techniques to process CUAD lengthy documents within CUAD transformer limitations.

- ...

- It can range from being a Simple CUAD Clause Detection Task to being a Complex CUAD Contract Understanding Task, depending on its CUAD task complexity.

- It can range from being a Binary CUAD Classification Task to being a Multi-Label CUAD Classification Task, depending on its CUAD label structure.

- It can range from being a Clause-Level CUAD Analysis Task to being a Document-Level CUAD Analysis Task, depending on its CUAD analysis granularity.

- It can range from being a Single-Domain CUAD Application to being a Multi-Domain CUAD Application, depending on its CUAD industry coverage.

- It can range from being a Single-Span CUAD Extraction Task to being a Multi-Span CUAD Recognition Task, depending on its CUAD span complexity.

- ...

- It can support CUAD Contract Review Automation through CUAD machine learning models.

- It can enable CUAD Legal AI Development through CUAD benchmark evaluations.

- It can facilitate CUAD Contract Risk Assessment through CUAD clause identification.

- It can guide CUAD Model Performance Comparison through CUAD standardized metrics.

- It can inform CUAD Legal Technology Advancement through CUAD research findings.

- It can measure CUAD Exact Match (EM) requiring CUAD character-level correspondence.

- It can calculate CUAD F1 Score using CUAD word-level harmonic mean.

- It can compute CUAD Area Under Precision-Recall Curve (AUPR) for CUAD class imbalance handling.

- It can evaluate CUAD Precision at 80% Recall for CUAD practical performance assessment.

- It can assess CUAD Precision at 90% Recall for CUAD stringent evaluation threshold.

- ...
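
The sliding-window and start/end position items above can be illustrated with a minimal sketch. It is not the code from the CUAD repository: the `Window` class, the `sliding_windows` function, and the `max_len` and `stride` parameters are hypothetical names chosen for illustration, and whitespace-separated words stand in for the subword tokens a real transformer tokenizer would produce.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Window:
    """One chunk of a contract, with the answer span in chunk-relative offsets."""
    start: int                          # offset of this window in the full token list
    tokens: List[str]                   # the tokens covered by this window
    answer: Optional[Tuple[int, int]]   # inclusive (start, end), or None if not fully inside


def sliding_windows(
    tokens: List[str],
    answer_span: Optional[Tuple[int, int]],
    max_len: int = 8,
    stride: int = 4,
) -> List[Window]:
    """Split `tokens` into overlapping windows of at most `max_len` tokens.

    `answer_span` is an inclusive (start, end) token range in the full document,
    or None when the question has no answer (as SQuAD 2.0 allows).
    A window keeps the answer only if it contains the whole span.
    """
    if not 0 < stride <= max_len:
        raise ValueError("stride must be between 1 and max_len")
    windows: List[Window] = []
    start = 0
    while True:
        end = min(start + max_len, len(tokens))
        local = None
        if answer_span is not None:
            a_start, a_end = answer_span
            if start <= a_start and a_end < end:
                # Re-express the document-level span relative to this window.
                local = (a_start - start, a_end - start)
        windows.append(Window(start, tokens[start:end], local))
        if end == len(tokens):
            break
        start += stride
    return windows
```

With an 18-word governing-law sentence and an answer at word positions 10 to 13, this sketch yields four windows, and only the window starting at offset 8 carries the answer. The other windows become "no answer" examples, which is one way the needle-in-haystack imbalance shows up during training.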

- Examples:

- CUAD Version Releases, such as:

- CUAD v1 with 13,000+ labels across 510 commercial contracts from various industries.

- CUAD GitHub Repository providing code implementations, model baselines, and evaluation scripts.

- CUAD Hugging Face Dataset enabling easy integration with transformer libraries.

- CUAD Clause Category Types, such as:

- CUAD Binary Classification Categories (33 types), such as:

- CUAD Agreement Date Clause for contract execution date identification.

- CUAD Parties Clause for contracting entity extraction.

- CUAD Termination Clause for contract end condition detection.

- CUAD Governing Law Clause for jurisdiction determination.

- CUAD Confidentiality Clause for information protection term identification.

- CUAD Indemnification Clause for liability allocation analysis.

- CUAD Limitation of Liability Clause for damage cap detection.

- CUAD Anti-Assignment Clause for transfer restriction identification.

- CUAD Non-Compete Provision for competition limitation extraction.

- CUAD Warranty Clause for guarantee term identification.

- CUAD Insurance Clause for coverage requirement detection.

- CUAD Arbitration Clause for dispute resolution mechanism extraction.

- CUAD Entity Extraction Categories (8 types), such as:

- CUAD Effective Date Extraction for contract commencement date.

- CUAD Expiration Date Extraction for contract termination date.

- CUAD Renewal Term Extraction for extension period identification.

- CUAD Liability Cap Extraction for maximum damage amount.

- CUAD Minimum Commitment Extraction for obligation threshold.

- CUAD Revenue Percentage Extraction for financial term analysis.

- CUAD Notice Period Extraction for notification requirement.

- CUAD Price Adjustment Extraction for cost escalation term.

- CUAD Model Performances, such as:

- CUAD RoBERTa-base Performance achieving baseline F1 scores across clause types.

- CUAD RoBERTa-large Performance demonstrating improved accuracy with larger model capacity.

- CUAD DeBERTa-xlarge Performance showing state-of-the-art results on complex clauses.

- CUAD GPT-3 Zero-Shot Performance testing generalization capability.

- CUAD Legal-BERT Performance leveraging domain-specific pretraining.

- CUAD Evaluation Metrics, such as:

- CUAD Exact Match (EM) requiring character-level correspondence for strict evaluation.

- CUAD F1 Score using word-level harmonic mean for balanced assessment.

- CUAD Area Under Precision-Recall Curve (AUPR) handling class imbalance issues.

- CUAD Precision at 80% Recall for practical deployment threshold.

- CUAD Precision at 90% Recall for high-recall applications.

- CUAD Application Domains, such as:

- M&A Due Diligence Application for acquisition target review.

- Commercial Contract Analysis Application for business agreement evaluation.

- Legal AI System Training Application for model development.

- Contract Risk Assessment Application for liability identification.

- Regulatory Compliance Application for requirement verification.

- ...
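
The span-matching and imbalance-aware metrics listed above can be sketched as follows. This is a simplified illustration, not the official CUAD evaluation script: the function names are hypothetical, spans are compared as lowercase word sets without punctuation handling, the 0.5 match threshold is an assumption of this sketch, and each matching prediction is assumed to correspond to a distinct gold span.

```python
from typing import List, Tuple


def jaccard(pred: str, gold: str) -> float:
    """Jaccard similarity between the lowercase word sets of two spans."""
    a, b = set(pred.lower().split()), set(gold.lower().split())
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def pr_curve(scored: List[Tuple[float, bool]], n_gold: int) -> List[Tuple[float, float]]:
    """Return (recall, precision) points, sweeping the confidence threshold downward.

    `scored` holds one (confidence, is_match) pair per predicted span;
    `n_gold` is the number of gold spans.
    """
    points = []
    tp = 0
    ranked = sorted(scored, key=lambda x: -x[0])
    for rank, (_, is_match) in enumerate(ranked, start=1):
        tp += int(is_match)
        points.append((tp / n_gold, tp / rank))
    return points


def precision_at_recall(points: List[Tuple[float, float]], target: float) -> float:
    """Best precision among operating points whose recall reaches `target` (0.0 if none)."""
    return max((p for r, p in points if r >= target), default=0.0)


def aupr(points: List[Tuple[float, float]]) -> float:
    """Step-wise area under the precision-recall curve."""
    area, prev_recall = 0.0, 0.0
    for recall, precision in points:
        area += (recall - prev_recall) * precision
        prev_recall = recall
    return area


# A predicted span counts as a match when its Jaccard score clears the threshold.
THRESHOLD = 0.5  # assumed value for this sketch
is_match = jaccard("laws of the State of New York", "the State of New York") >= THRESHOLD
```

Precision at a fixed recall level suits contract review because a reviewer usually cares first about missing few relevant clauses and only then about how many false alarms that costs.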

- Counter-Examples:

- MAUD (Merger Agreement Understanding Dataset), which focuses specifically on merger agreements rather than diverse commercial contracts.

- ContractNLI Dataset, which emphasizes natural language inference rather than clause extraction.

- LEDGAR Dataset, which classifies contract provisions drawn from SEC filings rather than extracting clause spans for contract review.

- Legal Judgment Prediction Dataset, which predicts case outcomes rather than identifying contract clauses.

- Clinical Trial Dataset, which contains medical protocols rather than legal contracts.

- SQuAD Dataset, which performs general question answering without legal domain specificity.

- GLUE Benchmark, which evaluates general language understanding rather than legal text comprehension.

- See: Legal Contract Review Benchmark Task, CUAD Clause Classification Task, Contract Understanding Atticus Dataset (CUAD) Clause Label, Contract Understanding Atticus Dataset (CUAD) Subtask, The Atticus Project, Legal Natural Language Inference (NLI) Task, Legal AI Benchmark, Contract-Focused AI System, Contract Review Fine-Tuned LLM, AI-based Contract Review System, Legal Document Classification Task, Legal Document Corpus, Natural Language Processing, Machine Learning in Legal Tech, Span Selection Question Answering Task, Legal NLP Evaluation Metric, Transformer-Based Legal Model, Contract Analysis Automation, Legal Technology Benchmark, Contract Intelligence System.
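
The Exact Match and word-level F1 metrics named on this page can be sketched in the style commonly used for SQuAD-format data. This is an illustrative assumption rather than the CUAD evaluation code: the `normalize`, `exact_match`, and `word_f1` helpers are hypothetical names, and the normalization steps (lowercasing, removing punctuation and English articles) follow the usual SQuAD convention, which may differ from what a given CUAD evaluation applies.

```python
import re
import string
from collections import Counter


def normalize(text: str) -> str:
    """Lowercase, drop punctuation and English articles, and collapse whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(pred: str, gold: str) -> bool:
    """True when the two spans are identical after normalization."""
    return normalize(pred) == normalize(gold)


def word_f1(pred: str, gold: str) -> float:
    """Harmonic mean of word-level precision and recall between two spans."""
    p, g = normalize(pred).split(), normalize(gold).split()
    if not p or not g:
        # Both empty counts as a correct "no answer"; one empty counts as a miss.
        return float(p == g)
    overlap = sum((Counter(p) & Counter(g)).values())
    if overlap == 0:
        return 0.0
    precision, recall = overlap / len(p), overlap / len(g)
    return 2 * precision * recall / (precision + recall)
```

For example, predicting "laws of the State of New York" against the gold span "State of New York" fails Exact Match but still earns partial credit from the word-level F1, which is why the two metrics are usually reported together.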

References

2023

- (Savelka, 2023) ⇒ Jaromir Savelka. (2023). “Unlocking Practical Applications in Legal Domain: Evaluation of GPT for Zero-Shot Semantic Annotation of Legal Texts.” In: Proceedings of the Nineteenth International Conference on Artificial Intelligence and Law. DOI:10.1145/3594536.3595161

- QUOTE: … selected semantic types from the Contract Understanding Atticus Dataset (CUAD) [16]. Wang et al. assembled and released the Merger Agreement Understanding Dataset (MAUD) [37]. …

2021

- (GitHub, 2021) ⇒ https://github.com/TheAtticusProject/cuad/

- QUOTE: This repository contains code for the Contract Understanding Atticus Dataset (CUAD), pronounced "kwad", a dataset for legal contract review curated by the Atticus Project. It is part of the associated paper CUAD: An Expert-Annotated NLP Dataset for Legal Contract Review by Dan Hendrycks, Collin Burns, Anya Chen, and Spencer Ball.

Contract review is a task about "finding needles in a haystack." We find that Transformer models have nascent performance on CUAD, but that this performance is strongly influenced by model design and training dataset size. Despite some promising results, there is still substantial room for improvement. As one of the only large, specialized NLP benchmarks annotated by experts, CUAD can serve as a challenging research benchmark for the broader NLP community.

- (Hendrycks et al., 2021) ⇒ Dan Hendrycks, Collin Burns, Anya Chen, and Spencer Ball. (2021). “CUAD: An Expert-Annotated NLP Dataset for Legal Contract Review.” In: arXiv preprint arXiv:2103.06268. doi:10.48550/arXiv.2103.06268

- ABSTRACT: Many specialized domains remain untouched by deep learning, as large labeled datasets require expensive expert annotators. We address this bottleneck within the legal domain by introducing the Contract Understanding Atticus Dataset (CUAD), a new dataset for legal contract review. CUAD was created with dozens of legal experts from The Atticus Project and consists of over 13,000 annotations. The task is to highlight salient portions of a contract that are important for a human to review. We find that Transformer models have nascent performance, but that this performance is strongly influenced by model design and training dataset size. Despite these promising results, there is still substantial room for improvement. As one of the only large, specialized NLP benchmarks annotated by experts, CUAD can serve as a challenging research benchmark for the broader NLP community.