Feed-Forward Neural Network (NNet) Training System

A Feed-Forward Neural Network (NNet) Training System is a NNet training system that implements a feed-forward neural network training algorithm to solve a Feed-Forward Neural Network Training Task.

- Context:

- It can range from being a Perceptron Training System to being a Deep Feed-Forward Neural Network Training System.

- It usually implements a Backpropagation Algorithm.

- …

- Example(s):

- a Stacked Autoencoding System,

- a Simple Recurrent Network,

- a Two-Hidden Layer Feedforward Network Training System such as PMML 4.0 Neural Network Model applied to the Iris dataset: http://dmg.org/pmml/pmml_examples/Iris.csv

- a CNN Training System.

- an RNN Training System.

- …

- Counter-Example(s):

- See: Feed-Forward Neural Network, Convolution Neural Network Training System, Stochastic Gradient Descent System, Artificial Neural Network, Neural Network Topology, Backpropagation, Machine Learning, Learning Curve, Radial Basis Function Network, Deep Learning, Deep Learning Artificial Neural Network, Pattern Recognition, Feedforward Neural Network, Recurrent Network, Adaptive Resonance Theory.

References

2017a

- (Munro, 2017) ⇒ Paul Munro (2017) "Backpropagation". In: Sammut & Webb. (2017).

- QUOTE: A feed-forward neural network is a mathematical function that is composed of constituent “semi-linear” functions constrained by a feed-forward network architecture, wherein the constituent functions correspond to nodes (often called units or artificial neurons) in a graph, as in Fig. 1. A feed-forward network architecture has a connectivity structure that is an acyclic graph; that is, there are no closed loops (...)

Several aspects of the feed-forward network must be defined prior to running a BP program, such as the configuration of the hidden units, the initial values of the weights, the functions they will compute, and the numerical representation of the input and target data. There are also parameters of the learning algorithm that must be chosen, such as the value of η and the form of the error function. The weight and bias parameters are set to their initial values (these are usually random within specified limits). BP is implemented as an iterative process as follows:

- QUOTE: A feed-forward neural network is a mathematical function that is composed of constituent “semi-linear” functions constrained by a feed-forward network architecture, wherein the constituent functions correspond to nodes (often called units or artificial neurons) in a graph, as in Fig. 1. A feed-forward network architecture has a connectivity structure that is an acyclic graph; that is, there are no closed loops (...)

- 1. A stimulus-target pair is drawn from the training set.

- 2. The activity values for the units in the network are computed for all the units in the network in a forward fashion from input to output (Fig. 2a).

- 3. The network output values are compared to the target and a delta (δ) value is computed for each output unit based on the difference between the target and the actual output response value.

- 4. The deltas are propagated backward through the network using the same weights that were used to compute the activity values (Fig. 2b).

- 5. Each weight is updated by an amount proportional to the product of the downstream delta value and the upstream activity (Fig. 2c).

- The procedure can be run either in an online mode or batch mode. In the online mode, the network parameters are updated for each stimulus-target pair. In the batch mode, the weight changes are computed and accumulated over several iterations without updating the weights until a large number (B) of stimulus-target pairs have been processed (often, the entire training set), at which the weights are updated by the accumulated amounts.

2017b

- (PMML, 2017) ⇒ "PMML 4.0 - Neural Network Models" http://dmg.org/pmml/v4-0-1/NeuralNetwork.html#Example%20model Retrieved:2017-12-17

- QUOTE: The description of neural network models assumes that the reader has a general knowledge of artificial neural network technology. A neural network has one or more input nodes and one or more neurons. Some neurons' outputs are the output of the network. The network is defined by the neurons and their connections, aka weights. All neurons are organized into layers; the sequence of layers defines the order in which the activations are computed. All output activations for neurons in some layer L are evaluated before computation proceeds to the next layer L+1. Note that this allows for recurrent networks where outputs of neurons in layer L+i can be used as input in layer L where L+i > L. The model does not define a specific evaluation order for neurons within a layer.

Each neuron receives one or more input values, each coming via a network connection, and sends only one output value. All incoming connections for a certain neuron are contained in the corresponding Neuron element. Each connection Con of the element Neuron stores the ID of a node it comes from and the weight. A bias weight coefficient or a width of a radial basis function unit may be stored as an attribute of Neuron element.

- QUOTE: The description of neural network models assumes that the reader has a general knowledge of artificial neural network technology. A neural network has one or more input nodes and one or more neurons. Some neurons' outputs are the output of the network. The network is defined by the neurons and their connections, aka weights. All neurons are organized into layers; the sequence of layers defines the order in which the activations are computed. All output activations for neurons in some layer L are evaluated before computation proceeds to the next layer L+1. Note that this allows for recurrent networks where outputs of neurons in layer L+i can be used as input in layer L where L+i > L. The model does not define a specific evaluation order for neurons within a layer.

2015

- (Schmidhuber, 2015) ⇒ Jurgen Schmidhuber (2015). "Deep learning in neural networks: An overview". Neural networks, 61, 85-117. DOI: 10.1016/j.neunet.2014.09.003 arxiv: 1404.7828

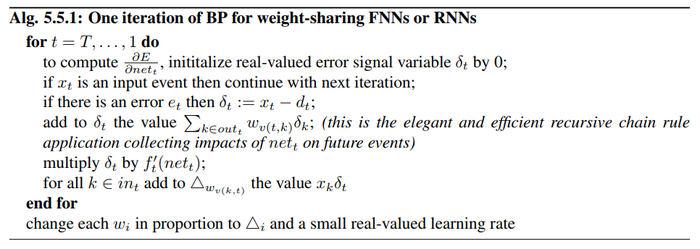

- QUOTE: Using the notation of Sec. 2 for weight-sharing FNNs or RNNs, after an episode of activation spreading through differentiable [math]\displaystyle{ f_t }[/math], a single iteration of gradient descent through BP computes changes of all [math]\displaystyle{ w_i }[/math] in proportion to [math]\displaystyle{ \frac{\partial E}{\partial w_i} = \sum_t \frac{\partial E}{\partial net_t}\frac{\partial net_t}{\partial w_i} }[/math] as in Algorithm 5.5.1 (for the additive case), where each weight [math]\displaystyle{ w_i }[/math] is associated with a real-valued variable [math]\displaystyle{ \Delta_i }[/math] initialized by 0. The computational costs of the backward (BP) pass are essentially those of the forward pass (Sec. 2). Forward and backward passes are re-iterated until sufficient performance is reached.

As of 2014, this simple BP method is still the central learning algorithm for FNNs and RNNs.

- QUOTE: Using the notation of Sec. 2 for weight-sharing FNNs or RNNs, after an episode of activation spreading through differentiable [math]\displaystyle{ f_t }[/math], a single iteration of gradient descent through BP computes changes of all [math]\displaystyle{ w_i }[/math] in proportion to [math]\displaystyle{ \frac{\partial E}{\partial w_i} = \sum_t \frac{\partial E}{\partial net_t}\frac{\partial net_t}{\partial w_i} }[/math] as in Algorithm 5.5.1 (for the additive case), where each weight [math]\displaystyle{ w_i }[/math] is associated with a real-valued variable [math]\displaystyle{ \Delta_i }[/math] initialized by 0. The computational costs of the backward (BP) pass are essentially those of the forward pass (Sec. 2). Forward and backward passes are re-iterated until sufficient performance is reached.