2017 UnsupervisedPretrainingforSeque

- (Ramachandran et al., 2017) ⇒ Prajit Ramachandran, Peter J. Liu, and Quoc V. Le. (2017). “Unsupervised Pretraining for Sequence to Sequence Learning.” In: Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing (EMNLP 2017). DOI: 10.18653/v1/D17-1039

Subject Headings: Sequence-to-Sequence Learning Task.

Notes

- Version(s) and URL(s):

- Resource(s):

Cited By

- Google Scholar: ~ 138 Citations. Retrieved: 2020-04-07.

- Semantic Scholar: ~ 141 Citations. Retrieved: 2020-04-07.

- MS Academic: ~ 115 Citations. Retrieved: 2020-04-07.

Quotes

Abstract

This work presents a general unsupervised learning method to improve the accuracy of sequence to sequence (seq2seq) models. In our method, the weights of the encoder and decoder of a seq2seq model are initialized with the pretrained weights of two language models and then fine-tuned with labeled data. We apply this method to challenging benchmarks in machine translation and abstractive summarization and find that it significantly improves the subsequent supervised models. Our main result is that pretraining improves the generalization of seq2seq models. We achieve state-of-the-art results on the WMT English → German task, surpassing a range of methods using both phrase-based machine translation and neural machine translation. Our method achieves a significant improvement of 1.3 BLEU over the previous best models on both WMT'14 and WMT'15 English → German. We also conduct human evaluations on abstractive summarization and find that our method outperforms a purely supervised learning baseline in a statistically significant manner.

1 Introduction

2 Methods

(...)

2.1 Basic Procedure

(...)

(...)

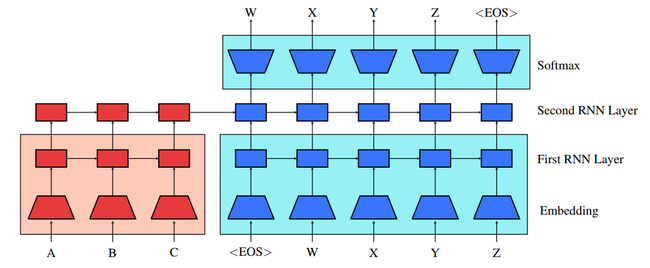

Therefore, the basic procedure of our approach is to pretrain both the seq2seq encoder and decoder networks with language models, which can be trained on large amounts of unlabeled text data. This can be seen in Figure 1, where the parameters in the shaded boxes are pretrained. In the following, we describe the method in detail, using machine translation as an example application.
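The basic procedure above (initialize the encoder and decoder from two pretrained language models, then fine-tune on labeled data) can be sketched in a few lines. This is a minimal, framework-free illustration under stated assumptions, not the authors' implementation: parameters are plain dicts of float lists, and the parameter names (`embedding`, `lstm1`, `softmax`, `attention`, and the `encoder.`/`decoder.` prefixes) and the helpers `make_params`, `init_from_lms`, and `sgd_step` are hypothetical. It assumes the encoder is initialized from a language model trained on source-language text and the decoder from one trained on target-language text, and that any seq2seq parameter without a same-named LM counterpart (e.g. attention) keeps its random initialization; exactly which layers are shared in the paper is not reproduced here. The constant gradients are placeholders standing in for backpropagation on labeled sentence pairs.

```python
import copy
import random

# Hypothetical representation (assumption): a model is a dict mapping parameter
# names to lists of floats. Names like "encoder.embedding" are illustrative only.

def make_params(names, size=4, seed=0):
    """Randomly initialize one small weight vector per parameter name."""
    rng = random.Random(seed)
    return {name: [rng.uniform(-0.1, 0.1) for _ in range(size)] for name in names}

def init_from_lms(seq2seq, source_lm, target_lm):
    """Return a copy of `seq2seq` with encoder/decoder weights taken from the LMs.

    "encoder.<name>" is copied from source_lm[<name>] and "decoder.<name>" from
    target_lm[<name>] when that <name> exists in the LM; all other parameters
    keep their random initialization. The input dicts are not modified.
    """
    model = copy.deepcopy(seq2seq)
    lms = {"encoder": source_lm, "decoder": target_lm}
    for full_name in model:
        side, _, name = full_name.partition(".")
        lm = lms.get(side)
        if lm is not None and name in lm:
            # Copy the list so later fine-tuning never modifies the LM itself.
            model[full_name] = list(lm[name])
    return model

def sgd_step(params, grads, lr=0.1):
    """One plain SGD update applied to every parameter, pretrained or not."""
    return {k: [w - lr * g for w, g in zip(params[k], grads[k])] for k in params}

# Usage: two LMs (assumed trained on unlabeled source/target text) and a seq2seq model.
lm_names = ["embedding", "lstm1", "softmax"]
source_lm = make_params(lm_names, seed=1)
target_lm = make_params(lm_names, seed=2)
seq2seq = make_params(["encoder.embedding", "encoder.lstm1", "encoder.lstm2",
                       "decoder.embedding", "decoder.lstm1", "decoder.softmax",
                       "attention"], seed=3)

model = init_from_lms(seq2seq, source_lm, target_lm)

# Fine-tuning step: constant placeholder gradients stand in for backprop on labeled data.
grads = {k: [1.0] * len(v) for k, v in model.items()}
tuned = sgd_step(model, grads, lr=0.1)
```

Copying rather than aliasing the LM weights keeps the pretrained language models intact, and in this sketch fine-tuning updates all seq2seq parameters, including the pretrained ones, rather than freezing them.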


(...)

(...)

2.2 Monolingual Language Modeling Losses

2.3 Other Improvements to the Model

3 Experiments

3.1 Machine Translation

3.2 Abstractive Summarization

4 Related Work

5 Conclusion

References

BibTeX =

| Page | Author | volume | Date Value | title | type | journal | titleUrl | doi | note | year |
|---|---|---|---|---|---|---|---|---|---|---|
| 2017 UnsupervisedPretrainingforSeque | Quoc V. Le, Peter J. Liu, Prajit Ramachandran | | | Unsupervised Pretraining for Sequence to Sequence Learning | | | | | | 2017 |