Code-to-Sequence (code2seq) Neural Network

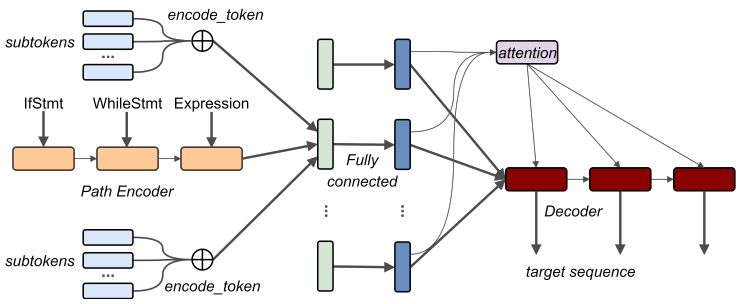

A Code-to-Sequence (code2seq) Neural Network is a seq2seq architecture with attention that generates a sequence from a source code snippet.

- Context:

  - It can be instantiated in a code2seq Source Code Repository, such as https://github.com/tech-srl/code2seq/

  - It can be trained by a code2seq Training System (that implements a code2seq training algorithm to solve a code2seq training task).

  - It can be used by a code2seq Inference System (that solves a code2seq Inference Task), such as the one that powers https://code2seq.org (which can be used by code summarization, code generation, and code documentation systems).

  - …

- Example(s):

  - A Trained code2seq Model, such as: https://s3.amazonaws.com/code2seq/model/java-large/java-large-model.tar.gz (Alon et al., 2018).

  - A code2seq Metamodel, such as: …

  - …

- Counter-Example(s):

- See: Abstract Syntax Tree, Automatic Code Documentation Task, Automatic Code Retrieval Task, Automatic Code Generation Task, Automatic Code Captioning Task, Encoder-Decoder Neural Network, Neural Machine Translation (NMT) Algorithm.

References

2020

- (Yahav, 2020) ⇒ Eran Yahav. (2020). “From Programs to Deep Models - Part 2.” In: ACM SIGPLAN Blog.

- QUOTE: The code2seq architecture is based on the standard seq2seq architecture with attention, with the main difference that instead of attending to the source words, code2seq attends to the source paths. In the following example, the mostly-attended path in each prediction step is marked in the same color and number as the corresponding predicted word in the output sentence.

- The code2seq model was demonstrated on the task of method name prediction in Java (in which it performed significantly better than code2vec); on the task of predicting StackOverflow natural language questions given their source code answers (which was first presented by Iyer et al. 2016); and on the task of predicting documentation sentences (JavaDocs) given their Java methods. In all tasks, code2seq was shown to perform much better than strong Neural Machine Translation (NMT) models that address these tasks as “translating” code as a text to natural language.
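The idea of attending to source paths rather than source words can be illustrated with a minimal sketch. It assumes that each path has already been encoded as a fixed-length vector and that attention scores are plain dot products. The function names `softmax` and `attend`, the toy vectors, and the dot-product scoring are all illustrative assumptions; the scoring function used by the actual code2seq implementation may differ.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(decoder_state, path_vectors):
    """Dot-product attention over encoded paths (assumed scoring function).

    Returns the attention weights (one per path) and the context vector,
    which is the weighted average of the path vectors.
    """
    scores = [sum(d * p for d, p in zip(decoder_state, vec)) for vec in path_vectors]
    weights = softmax(scores)
    dim = len(decoder_state)
    context = [sum(w * vec[i] for w, vec in zip(weights, path_vectors)) for i in range(dim)]
    return weights, context

# Hypothetical encoded paths (one vector per AST path) and a decoder state
# for a single prediction step.
path_vectors = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
decoder_state = [0.0, 3.0]

weights, context = attend(decoder_state, path_vectors)
most_attended = weights.index(max(weights))  # index of the mostly-attended path
```

Under these assumptions, `most_attended` plays the role of the highlighted path in the quoted example: at each prediction step, it is the path that receives the largest attention weight.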

2018

- (Alon et al., 2018) ⇒ Uri Alon, Shaked Brody, Omer Levy, and Eran Yahav. (2018). “code2seq: Generating Sequences from Structured Representations of Code.” In: Proceedings of the 7th International Conference on Learning Representations (ICLR 2019).

- QUOTE: Our model follows the standard encoder-decoder architecture for NMT (Section 3.1), with the significant difference that the encoder does not read the input as a flat sequence of tokens. Instead, the encoder creates a vector representation for each AST path separately (Section 3.2). The decoder then attends over the encoded AST paths (rather than the encoded tokens) while generating the target sequence. Our model is illustrated in Figure 3.
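To make the notion of an AST path concrete, the following sketch enumerates leaf-to-leaf paths in a toy syntax tree. It assumes that a path runs from one leaf up to the lowest common ancestor and down to another leaf. The tree encoding, the node type names, and the helper functions `leaf_trails` and `leaf_to_leaf_paths` are hypothetical and are not taken from the code2seq repository. In the model, each such path would then be turned into a vector by the encoder; that step is omitted here.

```python
from itertools import combinations

# Toy AST for something like `x = y + 1`. Inner nodes are (type, [children]);
# leaves are (type, token). Node type names are illustrative only.
TOY_AST = ("Assign", [
    ("Name", "x"),
    ("BinOp", [
        ("Name", "y"),
        ("Num", "1"),
    ]),
])

def leaf_trails(node, position=()):
    """Yield (token, trail) per leaf; trail lists (position, type) from root to leaf."""
    node_type, payload = node
    here = (position, node_type)
    if isinstance(payload, str):  # leaf: payload is the token text
        yield payload, [here]
        return
    for i, child in enumerate(payload):
        for token, trail in leaf_trails(child, position + (i,)):
            yield token, [here] + trail

def leaf_to_leaf_paths(ast):
    """Return (start_token, [node types along the path], end_token) for each leaf pair."""
    paths = []
    for (tok_a, trail_a), (tok_b, trail_b) in combinations(leaf_trails(ast), 2):
        # Count the shared prefix; its last element is the lowest common ancestor.
        shared = 0
        while (shared < min(len(trail_a), len(trail_b))
               and trail_a[shared] == trail_b[shared]):
            shared += 1
        up = [t for _, t in reversed(trail_a[shared:])]  # climb from the first leaf
        top = trail_a[shared - 1][1]                     # lowest common ancestor
        down = [t for _, t in trail_b[shared:]]          # descend to the second leaf
        paths.append((tok_a, up + [top] + down, tok_b))
    return paths

paths = leaf_to_leaf_paths(TOY_AST)
```

For this toy tree, the path between `y` and `1` passes only through `BinOp`, while paths starting at `x` pass through the root `Assign` node. A decoder that attends over such paths, instead of over a flat token sequence, is what the quoted passage describes.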
