sklearn.neural_network.MLPRegressor


Revision as of 20:45, 23 December 2019

A sklearn.neural_network.MLPRegressor is a multi-layer perceptron regression system within sklearn.neural_network.

- Context

  - Usage:

    - 1) Import MLP Regression System from scikit-learn: `from sklearn.neural_network import MLPRegressor`
    - 2) Create design matrix `X` and response vector `y`.
    - 3) Create Regressor object: `regressor_model=MLPRegressor([hidden_layer_sizes=(100, ), activation='relu', solver='adam', alpha=0.0001, batch_size='auto', learning_rate='constant', learning_rate_init=0.001, ...])` (the square brackets mark optional keyword arguments and are not part of the Python call).
    - 4) Choose method(s):
      - `fit(X, y)`: Fits the regression model to data matrix `X` and target(s) `y`.
      - `get_params([deep])`: Gets parameters for this estimator.
      - `predict(X)`: Predicts using the multi-layer perceptron model.
      - `score(X, y[, sample_weight])`: Returns the coefficient of determination R^2 of the prediction.
      - `set_params(**params)`: Sets the parameters of this estimator.
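A minimal end-to-end sketch of the four usage steps above. The synthetic dataset, `max_iter=1000`, and `random_state=0` are illustrative assumptions, not recommended settings; the constructor parameters and methods are the ones listed in the steps.

```python
import numpy as np
from sklearn.neural_network import MLPRegressor  # step 1

# Step 2: design matrix X (n_samples x n_features) and response vector y.
# Synthetic data, for illustration only.
rng = np.random.RandomState(0)
X = rng.uniform(-1.0, 1.0, size=(500, 3))
y = X[:, 0] + 2.0 * X[:, 1] - X[:, 2]

# Step 3: create the regressor; omitted keyword arguments keep their defaults.
regressor_model = MLPRegressor(hidden_layer_sizes=(100,), activation="relu",
                               solver="adam", alpha=0.0001,
                               learning_rate_init=0.001, max_iter=1000,
                               random_state=0)

# Step 4: the methods listed above.
regressor_model.fit(X, y)                # fit the model to X and y
y_pred = regressor_model.predict(X)      # one prediction per row of X
r2 = regressor_model.score(X, y)         # coefficient of determination R^2
params = regressor_model.get_params()    # dict of constructor parameters
regressor_model.set_params(alpha=0.001)  # change a stored hyperparameter

print(y_pred.shape, round(r2, 3), params["activation"])
```

`set_params` only changes the stored hyperparameter; the new `alpha` is used the next time `fit` is called.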


|

|

|

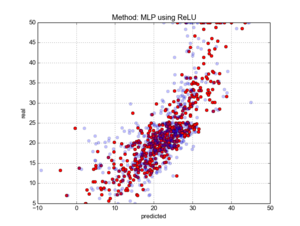

| Method: MLP using ReLU RMSE on the data: 5.3323 RMSE on 10-fold CV: 6.7892 |

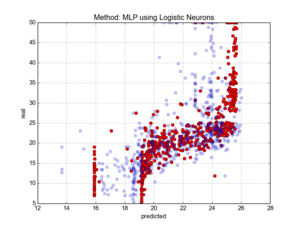

Method: MLP using Logistic Neurons RMSE on the data: 7.3161 RMSE on 10-fold CV: 8.0986 |

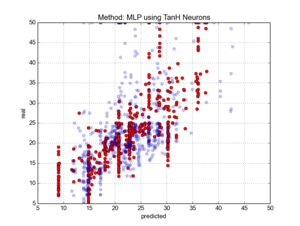

Method: MLP using TanH Neurons RMSE on the data: 6.3860 RMSE on 10-fold CV: 8.0147 |
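The dataset behind the RMSE figures above is not identified, so they cannot be reproduced here. The sketch below only shows one plausible way to compute the two kinds of numbers for the three activation functions, assuming a synthetic one-dimensional dataset, the `lbfgs` solver, and a shuffled 10-fold `KFold` split. It treats "RMSE on 10-fold CV" as the RMSE of the pooled out-of-fold predictions; that is an assumption, since averaging per-fold RMSEs is another common convention.

```python
import numpy as np
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import KFold, cross_val_predict
from sklearn.neural_network import MLPRegressor


def rmse(y_true, y_pred):
    """Root mean squared error as a plain Python float."""
    return float(np.sqrt(mean_squared_error(y_true, y_pred)))


# Synthetic 1-D regression data (illustration only; not the data behind the figures).
rng = np.random.RandomState(0)
X = rng.uniform(-3.0, 3.0, size=(200, 1))
y = np.sin(X[:, 0]) + 0.1 * rng.randn(200)

cv = KFold(n_splits=10, shuffle=True, random_state=0)
results = {}
for activation in ("relu", "logistic", "tanh"):
    model = MLPRegressor(hidden_layer_sizes=(20,), activation=activation,
                         solver="lbfgs", max_iter=1000, random_state=0)
    # "RMSE on the data": fit on all samples, evaluate on the same samples.
    train_rmse = rmse(y, model.fit(X, y).predict(X))
    # "RMSE on 10-fold CV": RMSE of the pooled out-of-fold predictions.
    cv_rmse = rmse(y, cross_val_predict(model, X, y, cv=cv))
    results[activation] = (train_rmse, cv_rmse)

for activation, (train_rmse, cv_rmse) in results.items():
    print(f"MLP using {activation}: RMSE on the data {train_rmse:.4f}, "
          f"RMSE on 10-fold CV {cv_rmse:.4f}")
```

The in-sample RMSE is usually lower than the cross-validated one, as in the figures above, because the latter is measured on samples each fitted model did not see.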

- Counter-Example(s):

- See: Artificial Neural Network, Supervised Learning System, Regression Task, Feedforward Neural Network, Restricted Boltzmann Machines, Support Vector Machines.

References

2017a

- (Scikit-Learn, 2017a) ⇒ http://scikit-learn.org/stable/modules/generated/sklearn.neural_network.MLPRegressor.html Retrieved: 2017-12-17

- QUOTE:

`class sklearn.neural_network.MLPRegressor(hidden_layer_sizes=(100, ), activation='relu', solver='adam', alpha=0.0001, batch_size='auto', learning_rate='constant', learning_rate_init=0.001, power_t=0.5, max_iter=200, shuffle=True, random_state=None, tol=0.0001, verbose=False, warm_start=False, momentum=0.9, nesterovs_momentum=True, early_stopping=False, validation_fraction=0.1, beta_1=0.9, beta_2=0.999, epsilon=1e-08)`

Multi-layer Perceptron regressor. This model optimizes the squared-loss using LBFGS or stochastic gradient descent.

(...)

Notes

MLPRegressor trains iteratively since at each time step the partial derivatives of the loss function with respect to the model parameters are computed to update the parameters. It can also have a regularization term added to the loss function that shrinks model parameters to prevent overfitting. This implementation works with data represented as dense and sparse numpy arrays of floating point values.
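The points in the Notes above can be illustrated with a short sketch, assuming synthetic data: training directly on a SciPy CSR sparse matrix, an L2 penalty whose strength is set through `alpha`, and the iterative training visible in the fitted attributes `n_iter_` and `loss_curve_` (the latter is recorded by the stochastic solvers `sgd` and `adam`). The data and hyperparameter values are illustrative only.

```python
import numpy as np
from scipy import sparse
from sklearn.neural_network import MLPRegressor

# Synthetic data with roughly 80% zeros, stored as a CSR sparse matrix (illustration only).
rng = np.random.RandomState(0)
X_dense = rng.rand(300, 20)
X_dense[X_dense < 0.8] = 0.0
X = sparse.csr_matrix(X_dense)
y = X_dense @ rng.randn(20)

# alpha sets the strength of the L2 penalty added to the squared loss.
model = MLPRegressor(hidden_layer_sizes=(32,), solver="adam", alpha=1e-3,
                     max_iter=300, random_state=0)
model.fit(X, y)

# The stochastic solvers record one training-loss value per epoch.
print(model.n_iter_, model.loss_curve_[0], model.loss_curve_[-1])

y_pred = model.predict(X)  # predict also accepts the sparse matrix
```

A larger `alpha` shrinks the weights more strongly, trading some training fit for less overfitting.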


2017b

- (Scikit-Learn, 2017b) ⇒ http://scikit-learn.org/stable/modules/neural_networks_supervised.html#regression Retrieved: 2017-12-03.

- QUOTE: Class `MLPRegressor` implements a multi-layer perceptron (MLP) that trains using backpropagation with no activation function in the output layer, which can also be seen as using the identity function as activation function. Therefore, it uses the square error as the loss function, and the output is a set of continuous values. `MLPRegressor` also supports multi-output regression, in which a sample can have more than one target.
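A sketch that checks the claims of the quote above on synthetic two-target data (an assumption for illustration). A manual forward pass through the fitted `coefs_` and `intercepts_`, with ReLU in the hidden layer and no activation at the output, should reproduce `predict`; `out_activation_` reports the output activation; and with `alpha=0.0` (no L2 term) and the `lbfgs` solver, the fitted `loss_` should equal half the mean squared error, which is how scikit-learn scales its squared loss.

```python
import numpy as np
from sklearn.neural_network import MLPRegressor

# Synthetic multi-output data (illustration only): two targets per sample.
rng = np.random.RandomState(0)
X = rng.uniform(-1.0, 1.0, size=(200, 4))
Y = np.column_stack([X[:, 0] - X[:, 1], X[:, 2] * X[:, 3]])

# alpha=0.0 removes the L2 penalty, so loss_ is the bare squared-error loss.
model = MLPRegressor(hidden_layer_sizes=(16,), activation="relu", solver="lbfgs",
                     alpha=0.0, max_iter=500, random_state=0)
model.fit(X, Y)
Y_pred = model.predict(X)

# Manual forward pass: ReLU hidden layer, identity (no activation) at the output.
hidden = np.maximum(0.0, X @ model.coefs_[0] + model.intercepts_[0])
manual_out = hidden @ model.coefs_[1] + model.intercepts_[1]

# Squared-error loss scaled as half the mean squared error.
manual_loss = 0.5 * np.mean((Y - Y_pred) ** 2)

print(model.out_activation_)            # identity
print(Y_pred.shape)                     # (200, 2)
print(np.allclose(manual_out, Y_pred))  # True
print(model.loss_, manual_loss)
```

Because the output layer is linear, the predictions are unbounded continuous values, one column per target.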
