Mixture Probability Function

A Mixture Probability Function is a probability function that is composed of two or more component probability functions, typically as a weighted combination of them.

- AKA: Probability Function Ensemble.

- Context:

- It can (often) be used to represent a Mixture Process.

- It can be an instance of a Mixture Probability Function Family.

- It can be a member of a Mixture Model.

- It can range from being a Homogeneous Mixture Probability Function to being a Heterogeneous Mixture Probability Function.

- It can range from being a Mixture Mass Probability Function to being a Mixture Density Probability Function.

- It can range from being a Univariate Mixture Function to being a Multivariate Mixture Function.

- It can range from being a Finite Mixture Function (2-component, 3-component, ...) to being an Infinite Mixture Function.

- It can range from being a Linear Mixture Function to being a Non-Linear Mixture Function.

- Example(s):

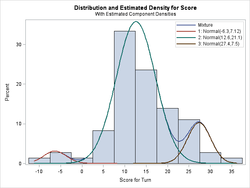

- a Univariate Gaussian Mixture Function.

- a Bivariate Two-Component Gaussian Mixture Function.

- a Poisson Mixture Function.

- …

- Counter-Example(s):

- See: Probability Distribution Function.
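
For the finite, linear case, the definition above can be sketched as a convex combination of component probability functions. The symbols $K$ (number of components), $w_k$ (mixture weights), and $f_k$ (component probability functions) are notation assumed here for illustration, not taken from the source:

$$
f(x) = \sum_{k=1}^{K} w_k \, f_k(x), \qquad w_k \ge 0, \qquad \sum_{k=1}^{K} w_k = 1 .
$$

Under this reading, a two-component mixture has $K = 2$, and a Homogeneous Mixture Probability Function would be one in which every $f_k$ comes from the same family (for example, all Gaussian).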

References

2013

- http://en.wikipedia.org/wiki/Mixture_distribution

- QUOTE: In probability and statistics, a mixture distribution is the probability distribution of a random variable whose values can be interpreted as being derived in a simple way from an underlying set of other random variables. In particular, the final outcome value is selected at random from among the underlying values, with a certain probability of selection being associated with each. Here the underlying random variables may be random vectors, each having the same dimension, in which case the mixture distribution is a multivariate distribution.

In cases where each of the underlying random variables is continuous, the outcome variable will also be continuous and its probability density function is sometimes referred to as a mixture density. The cumulative distribution function (and the probability density function if it exists) can be expressed as a convex combination (i.e. a weighted sum, with non-negative weights that sum to 1) of other distribution functions and density functions. The individual distributions that are combined to form the mixture distribution are called the mixture components, and the probabilities (or weights) associated with each component are called the mixture weights. The number of components in mixture distribution is often restricted to being finite, although in some cases the components may be countable. More general cases (i.e. an uncountable set of component distributions), as well as the countable case, are treated under the title of compound distributions.

A distinction needs to be made between a random variable whose distribution function or density is the sum of a set of components (i.e. a mixture distribution) and a random variable whose value is the sum of the values of two or more underlying random variables, in which case the distribution is given by the convolution operator. As an example, the sum of two normally-distributed random variables, each with different means, will still be a normal distribution. On the other hand, a mixture density created as a mixture of two normal distributions with different means will have two peaks provided that the two means are far enough apart, showing that this distribution is radically different from a normal distribution.

Mixture distributions arise in many contexts in the literature and arise naturally where a statistical population contains two or more subpopulations. They are also sometimes used as a means of representing non-normal distributions. Data analysis concerning statistical models involving mixture distributions is discussed under the title of mixture models, while the present article concentrates on simple probabilistic and statistical properties of mixture distributions and how these relate to properties of the underlying distributions.
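
The distinction drawn in the quote, between a mixture of two normal distributions and the sum of two normal random variables, can be illustrated with a minimal Python sketch. The function names (`normal_pdf`, `mixture_pdf`, `sum_pdf`, `count_local_maxima`) and the parameter values are hypothetical choices for this example, and `sum_pdf` additionally assumes the two variables are independent.

```python
import math


def normal_pdf(x, mean, std):
    """Density of a normal distribution with the given mean and standard deviation."""
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2.0 * math.pi))


def mixture_pdf(x, weights, means, stds):
    """Density of a finite Gaussian mixture: a convex combination of component densities."""
    if any(w < 0 for w in weights) or abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("mixture weights must be non-negative and sum to 1")
    return sum(w * normal_pdf(x, m, s) for w, m, s in zip(weights, means, stds))


def sum_pdf(x, means, stds):
    """Density of the SUM of independent normal variables (assumption: independence).

    The sum is again normal, with the means added and the variances added.
    """
    total_mean = sum(means)
    total_std = math.sqrt(sum(s * s for s in stds))
    return normal_pdf(x, total_mean, total_std)


def count_local_maxima(f, lo, hi, steps=2000):
    """Count strict local maxima of f on an evenly spaced grid over [lo, hi]."""
    xs = [lo + (hi - lo) * i / steps for i in range(steps + 1)]
    ys = [f(x) for x in xs]
    return sum(1 for i in range(1, steps) if ys[i] > ys[i - 1] and ys[i] > ys[i + 1])


# Illustrative parameters (assumed): two well-separated components with equal weights.
weights, means, stds = [0.5, 0.5], [-3.0, 3.0], [1.0, 1.0]

mixture_peaks = count_local_maxima(lambda x: mixture_pdf(x, weights, means, stds), -10.0, 10.0)
sum_peaks = count_local_maxima(lambda x: sum_pdf(x, means, stds), -10.0, 10.0)
print("mixture peaks:", mixture_peaks, "| sum peaks:", sum_peaks)
```

With the means this far apart, the mixture density has one peak near each component mean, while the density of the sum stays unimodal. Moving the two means closer together in this sketch would eventually merge the mixture's peaks into one, which matches the quote's "far enough apart" condition.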
