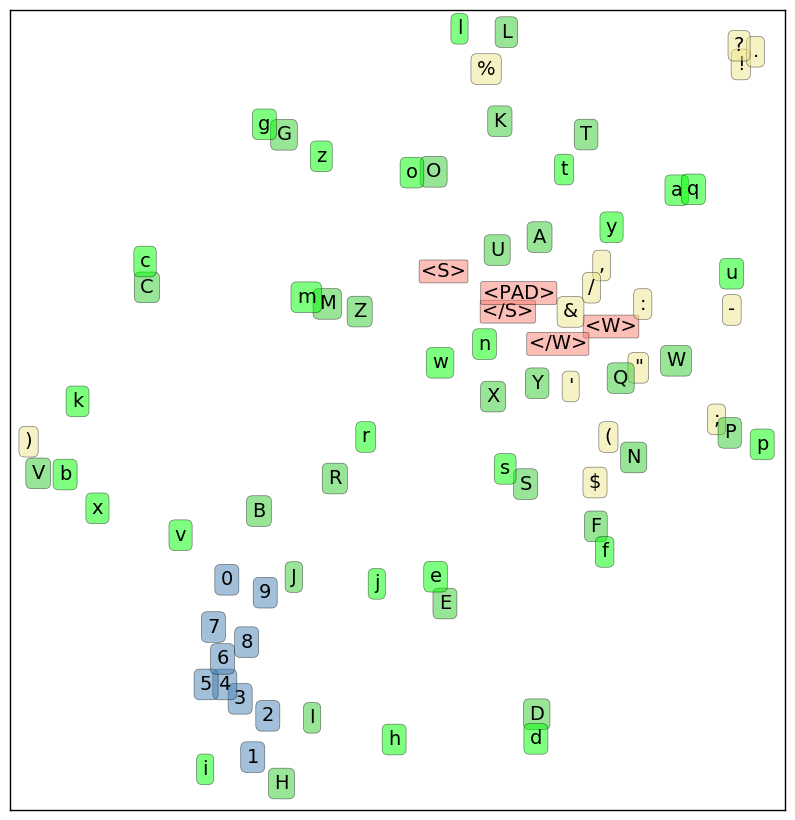

Distributional-based Character Embedding Space

A Distributional-based Character Embedding Space is a text-item embedding space for text characters associated with a distributional character embedding function (which maps to distributional word vectors).

- Context:

- It can be created by a Distributional Character Embedding Modeling System (that implements a distributional character embedding modeling algorithm).

- It can range from being a Closed Distributional Character Embedding (that applies only to the words in the training data) to being an Open Distributional Character Embedding that transfers learning.

- It can be referenced by a Character-level NLP Algorithm (such as character-level seq2seq).

- …

- Example(s):

- one created by ....

- …

- Counter-Example(s):

- See: Distributional Co-Occurrence Word Vector, Word Representation, Distributional Co-Occurrence Text-Item Vector.

References

2018

- (Belinkov & Bisk, 2018) ⇒ Yonatan Belinkov, and Yonatan Bisk. (2018). “Synthetic and Natural Noise Both Break Neural Machine Translation.” In: Proceedings of 6th International Conference on Learning Representations, (ICLR-2018).

- QUOTE: The rise of end-to-end models in neural machine translation has led to recent interest in understandinghow these models operate. Several studies investigated the ability of such models to learnlinguistic properties at morphological (Vylomova et al., 2016; Belinkov et al., 2017a; Dalvi et al.,2017), syntactic (Shi et al., 2016; Sennrich, 2017), and semantic levels (Belinkov et al., 2017b). The use of characters or other sub-word units emerges as an important component in these models. Our work complements previous studies by presenting such NMT systems with noisy examples andexploring methods for increasing their robustness.

We experiment with three different NMT systems with access to character information at different levels. First, we use the fully character-level model of Lee et al. (2017). This is a sequence-to-sequence model with attention (Sutskever et al., 2014; Bahdanau et al., 2014) that is trained oncharacters to characters (charachar). It has a complex encoder with convolutional, highway, and recurrent layers, and a standard recurrent decoder. See Lee et al. (2017) for architecture details. This model was shown to have excellent performance on the German/English and Czech!English language pairs. We use the pre-trained German/Czech English models.

Second, we use Nematus (Sennrich et al., 2017), a popular NMT toolkit that was used in top-performing contributions in shared MT tasks in WMT (Sennrich et al., 2016b) and IWSLT (Junczys-Dowmunt & Birch, 2016). It is another sequence-to-sequence model with several architecture modifications,especially operating on sub-word units using byte-pair encoding (BPE) (Sennrich et al.,2016a). We experimented with both their single best and ensemble BPE models, but saw no significant difference in their performance under noise, so we report results with their single best WMTmodels for German/Czech!English.

Finally, we train an attentional sequence-to-sequence model with a word representation based on a character convolutional neural network (charCNN). This model retains the notion of a word but learns a character-dependent representation of words. It was shown to perform well on morphologically-rich languages (Kim et al., 2015; Belinkov & Glass, 2016; Costa-juss`a & Fonollosa, 2016; Sajjad et al., 2017), thanks to its ability to learn morphologically-informative representations (Belinkov et al., 2017a). The charCNN model has two long short-term memory (Hochreiter & Schmidhuber, 1997) layers in the encoder and decoder. A CNN over characters in each word replaces the word embeddings on the encoder side (for simplicity, the decoder is word-based). We use 1000 filters with a width of 6 characters. The character embedding size is set to 25. The convolutions are followed by Tanh and max-pooling over the length of the word (Kim et al., 2015). We train charCNN with the implementation in Kim (2016); all other settings are kept to default values.

- QUOTE: The rise of end-to-end models in neural machine translation has led to recent interest in understandinghow these models operate. Several studies investigated the ability of such models to learnlinguistic properties at morphological (Vylomova et al., 2016; Belinkov et al., 2017a; Dalvi et al.,2017), syntactic (Shi et al., 2016; Sennrich, 2017), and semantic levels (Belinkov et al., 2017b). The use of characters or other sub-word units emerges as an important component in these models. Our work complements previous studies by presenting such NMT systems with noisy examples andexploring methods for increasing their robustness.

2017

- https://minimaxir.com/2017/04/char-embeddings/

- QUOTE: Why not work backwards and calculate character embeddings? Then you could calculate a relatively few amount of vectors which would easily fit into memory, and use those to derive word vectors, which can then be used to derive the sentence/paragraph/document/etc vectors. But training character embeddings traditionally is significantly more computationally expensive since there are 5-6x the amount of tokens, and I don’t have access to the supercomputing power of Stanford researchers.

Why not use the existing pretrained word embeddings to extrapolate the corresponding character embeddings within the word? Think “bag-of-words,” except “bag-of-characters.” …

- QUOTE: Why not work backwards and calculate character embeddings? Then you could calculate a relatively few amount of vectors which would easily fit into memory, and use those to derive word vectors, which can then be used to derive the sentence/paragraph/document/etc vectors. But training character embeddings traditionally is significantly more computationally expensive since there are 5-6x the amount of tokens, and I don’t have access to the supercomputing power of Stanford researchers.

2016

- Colin Morris. (2016). “Dissecting Google's Billion Word Language Model Part 1: Character Embeddings." Blog post. Sep 21, 2016

- QUOTE: Character embeddings?

The most obvious way to represent a character as input to our neural network is to use a one-hot encoding. For example, if we were just encoding the lowercase Roman alphabet, we could say…

- onehot('a') = [1,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0]

- onehot('c') = [0,0,1,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0]

And so on. Instead, we’re going to learn a “dense” representation of each character. If you’ve used word embedding systems like word2vec then this will sound familiar.

The first layer of the Char CNN component of the model is just responsible for translating the raw characters of the input word into these character embeddings, which are passed up to the convolutional filters.

In lm_1b, the character alphabet is of size 256 (non-ascii characters are expanded into multiple bytes, each encoded separately), and the space these characters are embedded into is of dimension 16. For example, ‘a’ is represented by the following vector: …

2016b

- (Kim et al., 2016) ⇒ Yoon Kim, Yacine Jernite, David Sontag, and Alexander M. Rush. (2016). “Character-Aware Neural Language Models.” In: Proceeding of AAAI.

2015a

- (Zhang, Zhao, & LeCun, 2015)) ⇒ Xiang Zhang, Junbo Zhao, and Yann LeCun. (2015). “Character-level Convolutional Networks for Text Classification.” In: Advances in Neural Information Processing Systems.

2015b

- (dos_Santos & Guimarães, 2015) ⇒ Cícero Nogueira dos Santos, and Victor Guimarães. (2015). “Boosting Named Entity Recognition with Neural Character Embeddings.” In: Proceedings of the Fifth Named Entity Workshop.

2014

- (dos Santos & Zadrozny, 2014) ⇒ Cícero Nogueira dos Santos, and Bianca Zadrozny. (2014). “Learning Character-level Representations for Part-of-speech Tagging.” In: Proceedings of the 31st International Conference on Machine Learning (ICML-14).