Exploding Gradient Problem


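An Exploding Gradient Problem is a Machine Learning Training Algorithm problem that arises when using gradient descent and backpropagation, in which the gradients grow exponentially as they are propagated backward through many layers or time steps.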
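A minimal sketch of why backpropagation can produce exploding gradients, assuming a hypothetical scalar chain h_t = w · h_{t-1} (for example, a linear RNN with one hidden unit and no inputs). The chain rule multiplies the gradient by the same local derivative w at every step, so dh_T/dh_0 = w^T, which grows exponentially when |w| > 1 and shrinks toward zero when |w| < 1. The helper name `chain_gradient` is illustrative, not from any library.

```python
def chain_gradient(w: float, num_steps: int) -> float:
    """Backpropagate through the hypothetical chain h_t = w * h_{t-1}.

    Returns dh_T/dh_0. Each backward step applies the chain rule,
    multiplying the upstream gradient by the local derivative
    dh_t/dh_{t-1} = w, so the result equals w ** num_steps.
    """
    grad = 1.0  # dh_T/dh_T
    for _ in range(num_steps):
        grad *= w  # one chain-rule step backward through the chain
    return grad


if __name__ == "__main__":
    # Compare shrinking (w < 1), stable (w = 1), and exploding (w > 1) gradients.
    for w in (0.9, 1.0, 1.1, 1.5):
        print(f"w={w}: dh_50/dh_0 = {chain_gradient(w, 50):.3e}")
```

With 50 steps, w = 1.5 already yields a gradient above 10^8 (1.5^50 ≈ 6.4 × 10^8), even though each individual factor is modest.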

- Example(s):

- Counter-Example(s):

- See: Recurrent Neural Network, Deep Neural Network Training Algorithm.

References

2017

- (Grosse, 2017) ⇒ Roger Grosse (2017). "Lecture 15: Exploding and Vanishing Gradients". In: CSC321 Course, University of Toronto.

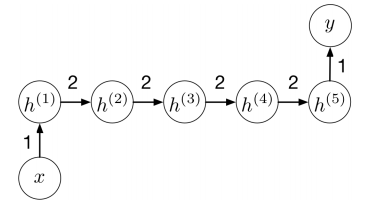

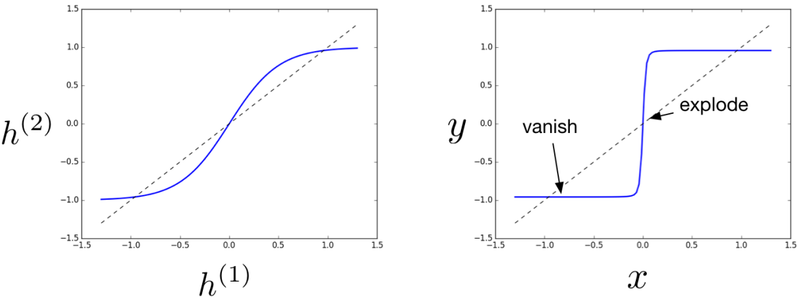

- QUOTE: To make this story more concrete, consider the following RNN, which uses the tanh activation function:

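The RNN referred to in the quote is not reproduced in this excerpt. As a hypothetical stand-in (not necessarily the lecture's model), consider a one-unit RNN h_t = tanh(w · h_{t-1} + x_t). Backpropagation through time multiplies the local derivatives dh_t/dh_{t-1} = w · (1 − h_t²), using tanh'(z) = 1 − tanh(z)². Because tanh'(z) ≤ 1, each factor has magnitude at most |w|, so the gradient can explode only when |w| > 1 and the hidden unit stays out of saturation; saturated units push the factors toward 0 instead. The function name `tanh_rnn_gradient` and the chosen inputs are illustrative assumptions.

```python
import math


def tanh_rnn_gradient(w: float, h0: float, inputs: list[float]) -> float:
    """Return dh_T/dh_0 for a hypothetical one-unit RNN h_t = tanh(w*h_{t-1} + x_t).

    The forward pass stores each hidden state; the backward pass multiplies
    the local derivatives dh_t/dh_{t-1} = w * (1 - h_t**2).
    """
    hs = []
    h = h0
    for x in inputs:  # forward pass through time
        h = math.tanh(w * h + x)
        hs.append(h)

    grad = 1.0  # dh_T/dh_T
    for h_t in reversed(hs):  # backward pass, one time step at a time
        grad *= w * (1.0 - h_t ** 2)
    return grad


if __name__ == "__main__":
    # Unsaturated: h stays at 0, every factor equals w, so the gradient is 2**10 = 1024.
    print(tanh_rnn_gradient(w=2.0, h0=0.0, inputs=[0.0] * 10))
    # Saturated: large inputs push h toward 1, so every factor is close to 0.
    print(tanh_rnn_gradient(w=2.0, h0=0.0, inputs=[5.0] * 10))
```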