2016 StillNotThereComparingTradition

- (Schnober et al., 2016) ⇒ Carsten Schnober, Steffen Eger, Erik-Lan Do Dinh, and Iryna Gurevych. (2016). “Still Not There? Comparing Traditional Sequence-to-Sequence Models to Encoder-Decoder Neural Networks on Monotone String Translation Tasks.” In: Proceedings of COLING 2016, the 26th International Conference on Computational Linguistics: Technical Papers.

Subject Headings: Encoder-Decoder Neural Network; Sequence-To-Sequence Neural Network; Spelling Error Correction; Monotone String Translation Task; Attention-Encoder-Decoder Neural Network; Morphological Inflection Encoder-Decoder Neural Network.

Notes

- Article Versions and URLs:

Cited By

Quotes

Abstract

We analyze the performance of encoder-decoder neural models and compare them with well-known established methods. The latter represent different classes of traditional approaches that are applied to the monotone sequence-to-sequence tasks OCR post-correction, spelling correction, grapheme-to-phoneme conversion, and lemmatization. Such tasks are of practical relevance for various higher-level research fields including digital humanities, automatic text correction, and speech recognition. We investigate how well generic deep-learning approaches adapt to these tasks, and how they perform in comparison with established and more specialized methods, including our own adaptation of pruned CRFs.

1 Introduction

2 Task Description

3 Data

4 Model Description

In this section, we briefly describe encoder-decoder neural models, pruned CRFs, and our three baselines.

4.1 Encoder-Decoder Neural Models

We compare three variants of encoder-decoder models: the ‘classic’ variant and two modifications:

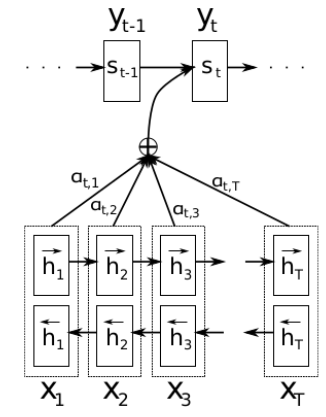

enc-dec: Encoder-decoder models using recurrent neural networks (RNNs) for Seq2Seq tasks were introduced by Cho et al. (2014) and Sutskever et al. (2014). The encoder reads an input $\vec{x}$ and generates a vector representation $e$ from it. The decoder predicts the output $\vec{y}$ one time step $t$ at a time, based on $e$. The probability of each output symbol $y_t$ hence depends on $e$ and all previously generated output symbols: $p(\vec{y}|e) = \displaystyle \prod_{t=1}^{T'} p(y_t|e,y_1,\ldots, y_{t-1})$, where $T'$ is the length of the output sequence. In NLP, most implementations of encoder-decoder models employ LSTM (long short-term memory) layers as hidden units, which extend generic RNN hidden layers with a memory cell that is able to “memorize” and “forget” features. This addresses the vanishing gradient problem and allows the network to capture long-range dependencies.

attn-enc-dec: We explore the attention-based encoder-decoder model proposed by Bahdanau et al. (2014) (Figure 1). It extends the encoder-decoder model by learning to align and translate jointly. The essential idea is that the current output unit $y_t$ need not depend on all input units in the same way, as it does when the whole input is captured by a single global vector $e$. Instead, $y_t$ may be conditioned on local context in the input (to which it pays attention).

morph-trans: Faruqui et al. (2016) present a new encoder-decoder model designed for morphological inflection, proposing to feed the input sequence directly into the decoder. This approach is motivated by the observation that input and output are usually very similar in problems such as morphological inflection. Similar ideas have been proposed by Gu et al. (2016) in their so-called “CopyNet” encoder-decoder model (which they apply to text summarization), which allows portions of the input sequence to be copied to the output sequence without modification.
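The chain-rule factorization of the enc-dec model and the attention idea of attn-enc-dec can be illustrated together. The following is a minimal NumPy sketch under stated assumptions, not the authors' implementation: the decoder scores an output sequence as a sum of per-step log-probabilities, and at every step it conditions on a context vector computed with Bahdanau-style additive attention over the encoder states. The function names `additive_attention` and `sequence_log_prob`, the random weights and dimensions, the zero initial state, and the plain tanh recurrence (used in place of an LSTM for brevity) are all hypothetical choices made for illustration.

```python
import numpy as np

def softmax(z):
    """Numerically stable softmax over the last axis."""
    z = z - np.max(z, axis=-1, keepdims=True)
    e = np.exp(z)
    return e / np.sum(e, axis=-1, keepdims=True)

def additive_attention(s_prev, H, W_a, U_a, v_a):
    """Additive (Bahdanau-style) attention, illustrative only.

    s_prev: previous decoder state, shape (d_s,)
    H:      encoder states, one row per input symbol, shape (T, d_h)
    Returns attention weights alpha (T,) and context vector c (d_h,).
    """
    scores = np.tanh(s_prev @ W_a + H @ U_a) @ v_a  # one score per input position
    alpha = softmax(scores)                          # weights are positive and sum to 1
    c = alpha @ H                                    # attention-weighted sum of encoder states
    return alpha, c

def sequence_log_prob(y, H, params):
    """log p(y | x) = sum_t log p(y_t | y_1..y_{t-1}, x) under a toy attentional decoder."""
    W_a, U_a, v_a, W_s, W_y, W_c, W_o, E = params
    s = np.zeros(W_s.shape[0])    # initial decoder state (assumption: zeros)
    prev = np.zeros(E.shape[1])   # start-symbol embedding (assumption: zeros)
    log_p = 0.0
    for y_t in y:
        _, c = additive_attention(s, H, W_a, U_a, v_a)
        # simplified recurrent update: plain tanh RNN instead of an LSTM
        s = np.tanh(s @ W_s + prev @ W_y + c @ W_c)
        p = softmax(s @ W_o)      # distribution over the output vocabulary
        log_p += np.log(p[y_t])   # chain rule: accumulate log p(y_t | ...)
        prev = E[y_t]             # feed the emitted symbol back into the decoder
    return log_p

# Toy usage with random (untrained) weights
rng = np.random.default_rng(0)
T, d_h, d_s, d_a, d_e, V = 6, 8, 8, 8, 4, 10
H = rng.normal(size=(T, d_h))
params = (rng.normal(size=(d_s, d_a)), rng.normal(size=(d_h, d_a)), rng.normal(size=d_a),
          rng.normal(size=(d_s, d_s)), rng.normal(size=(d_e, d_s)), rng.normal(size=(d_h, d_s)),
          rng.normal(size=(d_s, V)), rng.normal(size=(V, d_e)))
alpha, c = additive_attention(np.zeros(d_s), H, *params[:3])
lp = sequence_log_prob([1, 3, 5], H, params)
print(alpha.sum(), lp)
```

Because the weights `alpha` are recomputed from the current decoder state at every step, each output symbol can focus on a different region of the input, which is exactly what a single fixed vector $e$ cannot provide.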
A priori, the observation that input and output are very similar seems to apply to our tasks too: at least in spelling correction, the output usually differs only marginally from the input.

For the tested neural models, we follow the same overall approach as Faruqui et al. (2016): we decode and evaluate the test data using an ensemble of $k = 5$ independently trained models, in order to deal with the non-convex nature of the neural-network optimization problem and the risk of running into a local optimum (Collobert et al., 2011). The total probability $p_{ens}$ for generating an output token $y_t$ is estimated from the individual model output probabilities: $p_{ens}(y_t|\cdot) = \dfrac{1}{Z} \displaystyle \prod_{i=1}^{k} p_i(y_t|\cdot)^{\frac{1}{k}}$ with a normalization factor $Z$.
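The ensemble rule is a normalized geometric mean of the $k$ models' next-symbol distributions. A minimal NumPy sketch, assuming each model's distribution over the output vocabulary is already available as a strictly positive probability vector (as softmax outputs are); the function name `ensemble_distribution` and the example probabilities are hypothetical. The product is computed in log space to avoid underflow, and the final division plays the role of $Z$.

```python
import numpy as np

def ensemble_distribution(probs):
    """Normalized geometric mean of k next-symbol distributions.

    probs: array of shape (k, V); row i holds p_i(y_t | .) over a vocabulary of size V.
    Returns p_ens(y_t | .) = (1/Z) * prod_i p_i(y_t | .) ** (1/k).
    """
    probs = np.asarray(probs, dtype=float)
    k = probs.shape[0]
    log_unnorm = np.log(probs).sum(axis=0) / k  # (1/k) * sum_i log p_i
    log_unnorm -= log_unnorm.max()              # shift for numerical stability
    unnorm = np.exp(log_unnorm)
    return unnorm / unnorm.sum()                # divide by the normalizer Z

# Five hypothetical models scoring a 3-symbol vocabulary
p = np.array([[0.7, 0.2, 0.1],
              [0.6, 0.3, 0.1],
              [0.8, 0.1, 0.1],
              [0.5, 0.4, 0.1],
              [0.7, 0.2, 0.1]])
p_ens = ensemble_distribution(p)
print(p_ens, p_ens.argmax())
```

Unlike an arithmetic average, the geometric mean strongly penalizes a symbol to which any single model assigns a very low probability, so the ensemble favors outputs on which the independently trained models agree.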

4.2 Pruned Conditional Random Fields

4.3 Further Baseline Systems

5 Results and Analysis

5.1 Model Performances

5.2 Training Time

6 Conclusions

Acknowledgements

References

- 1. (Bahdanau et al., 2015) ⇒ Dzmitry Bahdanau, Kyunghyun Cho, and Yoshua Bengio. (2015). “Neural Machine Translation by Jointly Learning to Align and Translate.” In: Proceedings of the Third International Conference on Learning Representations, (ICLR-2015).

- 2. Bisani, M., & Ney, H. (2008). Joint-sequence models for grapheme-to-phoneme conversion. Speech Communication, 50(5), 434–451.

- 3. Brill, E., & Moore, R. C. (2000). An improved error model for noisy channel spelling correction. In ACL ’00 Proceedings of the 38th Annual Meeting on Association for Computational Linguistics (pp. 286–293).

- 4. Charniak, E., & Johnson, M. (2005). Coarse-to-Fine n-Best Parsing and MaxEnt Discriminative Reranking. In: Proceedings of the 43rd Annual Meeting of the Association for Computational Linguistics (ACL’05) (pp. 173–180).

- 5. Cho, K., Merrienboer, B. van, Gulcehre, C., Bahdanau, D., Bougares, F., Schwenk, H., & Bengio, Y. (2014). Learning Phrase Representations using RNN Encoder--Decoder for Statistical Machine Translation. In: Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP) (pp. 1724–1734).

- 6. Chrupala, G. (2014). Normalizing tweets with edit scripts and recurrent neural embeddings. In: Proceedings of the 52nd Annual Meeting of the Association for Computational Linguistics (Volume 2: Short Papers) (pp. 680–686).

- 7. (Collobert et al., 2011b) ⇒ Ronan Collobert, Jason Weston, Léon Bottou, Michael Karlen, Koray Kavukcuoglu, and Pavel Kuksa. (2011). “Natural Language Processing (Almost) from Scratch.” In: The Journal of Machine Learning Research, 12.

- 8. Cucerzan, S., & Brill, E. (2004). Spelling Correction as an Iterative Process that Exploits the Collective Knowledge of Web Users. In EMNLP (pp. 293–300).

- 9. Eger, S. (2015). Designing and Comparing G2P-Type Lemmatizers for a Morphology-Rich Language. In International Workshop on Systems and Frameworks for Computational Morphology (pp. 27–40).

- 10. Eger, S., Brück, T. vor der, & Mehler, A. (2016). A Comparison of Four Character-Level String-to-String Translation Models for (OCR) Spelling Error Correction. The Prague Bulletin of Mathematical Linguistics, 105(1), 77–99.

- (Farra et al., 2014) ⇒ Noura Farra, Nadi Tomeh, Alla Rozovskaya, and Nizar Habash. (2014). “Generalized Character-Level Spelling Error Correction.” In: Proceedings of ACL ’14, pages 161–167, Baltimore, MD, USA. Association for Computational Linguistics.

- (Faruqui et al., 2016) ⇒ Manaal Faruqui, Yulia Tsvetkov, Graham Neubig, and Chris Dyer. (2016). “Morphological Inflection Generation Using Character Sequence to Sequence Learning.” In: Proceedings of the 2016 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies. DOI:10.18653/v1/N16-1077

- (Gu et al., 2016) ⇒ Jiatao Gu, Zhengdong Lu, Hang Li, and Victor O.K. Li. (2016). “Incorporating Copying Mechanism in Sequence-to-Sequence Learning.” In: Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers). doi:10.18653/v1/P16-1154

- 11. Gubanov, S., Galinskaya, I., & Baytin, A. (2014). Improved Iterative Correction for Distant Spelling Errors. In: Proceedings of the 52nd Annual Meeting of the Association for Computational Linguistics (Volume 2: Short Papers) (pp. 168–173).

- 12. Jiampojamarn, S., Cherry, C., & Kondrak, G. (2010). Integrating Joint n-gram Features into a Discriminative Training Framework. In Human Language Technologies: The 2010 Annual Conference of the North American Chapter of the Association for Computational Linguistics (pp. 697–700).

- 13. Kominek, J., & Black, A. W. (2004). The CMU Arctic speech databases. SSW, 223–224.

- 14. Lafferty, J. D., McCallum, A., & Pereira, F. C. N. (2001). Conditional Random Fields: Probabilistic Models for Segmenting and Labeling Sequence Data. In ICML ’01 Proceedings of the Eighteenth International Conference on Machine Learning (pp. 282–289).

- 15. Lewellen, M. (1998). Neural Network Recognition of Spelling Errors. In: Proceedings of the 36th Annual Meeting of the Association for Computational Linguistics and 17th International Conference on Computational Linguistics, Volume 2 (pp. 1490–1492).

- 16. Luong, M.-T., Pham, H., & Manning, C. D. (2015). Effective Approaches to Attention-based Neural Machine Translation. ArXiv Preprint ArXiv:1508.04025.

- 17. Mueller, T., Schmid, H., & Schütze, H. (2013). Efficient Higher-Order CRFs for Morphological Tagging. In: Proceedings of the 2013 Conference on Empirical Methods in Natural Language Processing (pp. 322–332).

- 18. Okazaki, N., Tsuruoka, Y., Ananiadou, S., & Tsujii, J. (2008). A Discriminative Candidate Generator for String Transformations. In: Proceedings of the 2008 Conference on Empirical Methods in Natural Language Processing (pp. 447–456).

- 19. Raaijmakers, S. (2013). A Deep Graphical Model for Spelling Correction. BNAIC 2013: Proceedings of the 25th Benelux Conference on Artificial Intelligence, Delft, The Netherlands, November 7-8, 2013.

- 20. Rao, K., Peng, F., Sak, H., & Beaufays, F. (2015). Grapheme-to-phoneme conversion using Long Short-Term Memory recurrent neural networks. In 2015 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) (pp. 4225–4229).

- 21. Reynaert, M. (2014). On OCR ground truths and OCR post-correction gold standards, tools and formats. In: Proceedings of the First International Conference on Digital Access to Textual Cultural Heritage (pp. 159–166).

- 22. Richmond, K., Clark, R. A. J., & Fitt, S. (2009). Robust LTS rules with the Combilex speech technology lexicon. In INTERSPEECH (pp. 1295–1298).

- 23. Schmaltz, A. R., Kim, Y., Rush, A. M., & Shieber, S. M. (2016). Sentence-level grammatical error identification as sequence-to-sequence correction. In: Proceedings of the Eleventh Workshop on Innovative Use of NLP for Building Educational Applications (pp. 242–251).

- 24. Sherif, T., & Kondrak, G. (2007). Substring-Based Transliteration. In: Proceedings of the 45th Annual Meeting of the Association of Computational Linguistics (pp. 944–951).

- 25. (Sutskever et al., 2014) ⇒ Ilya Sutskever, Oriol Vinyals, and Quoc V. Le. (2014). “Sequence to Sequence Learning with Neural Networks.” In: Advances in Neural Information Processing Systems. arXiv:1409.321 .

- 27. Vinyals, O., & Le, Q. V. (2015). A Neural Conversational Model. ArXiv Preprint ArXiv:1506.05869.

- 28. Vukotić, V., Raymond, C., & Gravier, G. (2015). Is it time to switch to Word Embedding and Recurrent Neural Networks for Spoken Language Understanding. In InterSpeech (pp. 130–134).

- 29. Wang, Z., Xu, G., Li, H., & Zhang, M. (2014). A Probabilistic Approach to String Transformation. IEEE Transactions on Knowledge and Data Engineering, 26(5), 1063–1075.

- 30. Xie, Z., Avati, A., Arivazhagan, N., Jurafsky, D., & Ng, A. Y. (2016). Neural Language Correction with Character-Based Attention. ArXiv Preprint ArXiv:1603.09727.

- 31. Yao, K., & Zweig, G. (2015). Sequence-to-sequence neural net models for grapheme-to-phoneme conversion. In INTERSPEECH (pp. 3330–3334).

- 32. Yin, W., Ebert, S., & Schütze, H. (2016). Attention-Based Convolutional Neural Network for Machine Comprehension. In: Proceedings of the Workshop on Human-Computer Question Answering (pp. 15–21).
