2017 FeUdalNetworksforHierarchicalRe

- (Vezhnevets et al., 2017) ⇒ Alexander Sasha Vezhnevets, Simon Osindero, Tom Schaul, Nicolas Heess, Max Jaderberg, David Silver, and Koray Kavukcuoglu. (2017). “FeUdal Networks for Hierarchical Reinforcement Learning.” In: Proceedings of the 34th International Conference on Machine Learning (ICML2017).

Subject Headings: FeUdal Network (FUN), Feudal Reinforcement Learning (FRL) System, Modular Neural Network, Hierarchical Reinforcement Learning System, Transition Policy Gradient, Policy Gradient Training System, Dilated LSTM (dLSTM).

Notes

Cited By

- Google Scholar: ~ 402 Citations, Retrieved: 2020-12-5.

Quotes

Abstract

We introduce FeUdal Networks (FuNs): a novel architecture for hierarchical reinforcement learning. Our approach is inspired by the feudal reinforcement learning proposal of Dayan and Hinton, and gains power and efficacy by decoupling end-to-end learning across multiple levels - allowing it to utilise different resolutions of time. Our framework employs a Manager module and a Worker module. The Manager operates at a slower time scale and sets abstract goals which are conveyed to and enacted by the Worker. The Worker generates primitive actions at every tick of the environment. The decoupled structure of FuN conveys several benefits - in addition to facilitating very long timescale credit assignment it also encourages the emergence of sub-policies associated with different goals set by the Manager. These properties allow FuN to dramatically outperform a strong baseline agent on tasks that involve long-term credit assignment or memorisation.

1. Introduction

Deep reinforcement learning has recently enjoyed successes in many domains (Mnih et al., 2015; Schulman et al., 2015; Levine et al., 2015; Mnih et al., 2016; Lillicrap et al., 2015). Nevertheless, long-term credit assignment remains a major challenge for these methods, especially in environments with sparse reward signals, such as the infamous Montezuma's Revenge ATARI game. It is symptomatic that the standard approach on the ATARI benchmark suite (Bellemare et al., 2012) is to use an action-repeat heuristic, where each action translates into several (usually 4) consecutive actions in the environment. Yet another dimension of complexity is seen in non-Markovian environments that require memory – these are particularly challenging, since the agent has to learn which parts of experience to store for later, using only a sparse reward signal.

The framework we propose takes inspiration from feudal reinforcement learning (FRL) introduced by Dayan & Hinton (1993), where levels of hierarchy within an [[agent communicate via explicit goals. Some key insights from FRL are that goals can be generated in a top-down fashion, and that goal setting can be decoupled from goal achievement; a level in the hierarchy communicates to the level below it what must be achieved, but does not specify how to do so. Making higher levels reason at a lower temporal resolution naturally structures the agents behaviour into temporally extended sub-policies.

The architecture explored in this work is a fully differentiable neural network with two levels of hierarchy (though there are obvious generalisations to deeper hierarchies). The top level, the Manager, sets goals at a lower temporal resolution in a latent state-space that is itself learnt by the Manager. The lower level, the Worker, operates at a higher temporal resolution and produces primitive actions, conditioned on the goals it receives from the Manager. The Worker is motivated to follow the goals by an intrinsic reward. However, significantly, no gradients are propagated between Worker and Manager; the Manager receives its learning signal from the environment alone. In other words, the Manager learns to select latent goals that maximise extrinsic reward.

The key contributions of our proposal are:

- (1) A consistent, end-to-end differentiable model that embodies and generalizes the principles of FRL.

- (2) A novel, approximate transition policy gradient update for training the Manager, which exploits the semantic meaning of the goals it produces.

- (3) The use of goals that are directional rather than absolute in nature.

- (4) A novel RNN design for the Manager – a dilated LSTM – which extends the longevity of the recurrent state memories and allows gradients to flow through large hops in time, enabling effective back-propagation through hundreds of steps.

Our ablative analysis (Section 5.4) confirms that transitional policy gradient and directional goals are crucial for best performance. Our experiments on a selection of ATARI games (including the infamous Montezuma's revenge) and on several memory tasks in the 3D DeepMind Lab environment (Beattie et al., 2016) show that FuN significantly improves long-term credit assignment and memorisation.

2. Related Work

3. The Model

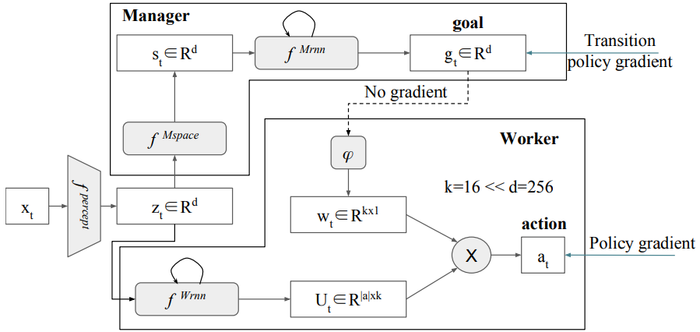

What is FuN? FuN is a modular neural-network consisting of two modules – the Worker and the Manager. The Manager internally computes a latent state representation $s_t$ and outputs a goal vector $g_t$. The Worker produces actions conditioned on external observation, its own state, and the Managers goal. The Manager and the Worker share a perceptual module which takes an observation from the environment $x_t$ and computes a shared intermediate representation $z_t$. The Manager's goals $g_t$ are trained using an approximate transition policy gradient. This is a particularly efficient form of policy gradient training that exploits the knowledge that the Worker's behaviour will ultimately align with the goal directions it has been set. The Worker is then trained via intrinsic reward to produce actions that cause these goal directions to be achieved. Figure 1a illustrates the overall design and the following equations describe the forward dynamics of our network:

| $z_{t}=f^{\text {percept }}\left(x_{t}\right) ; s_{t}=f^{\text {Mspace}}\left(z_{t}\right)$ | (1) |

| $h_{t}^{M}, \hat{g}_{t}=f^{M r n n}\left(s_{t}, h_{t-1}^{M}\right) ; g_{t}=\dfrac{\hat{g}_{t}}{\parallel\hat{g}_{t}\parallel}$ | (2) |

| $w_{t}=\phi\left(\sum_{i=t-c}^{t} g_{i}\right) $ | (3) |

| $h^{W}, U_{t}=f^{W r n n}\left(z_{t}, h_{t-1}^{W}\right) ; \pi_{t}=\operatorname{SoftMax}\left(U_{t} w_{t}\right)$ | (4) |

where both the Manager and the Worker are recurrent. Here $h^M$ and $h^W$ correspond to the internal states of the Manager and the Worker respectively. A linear transform $\phi$ maps a goal $g_t$ into an embedding vector $w_t \in \R^k$, which is then combined via product with matrix $U_t$ (FUN Worker|Workers output) to produce policy $\pi$ – vector of probabilities over primitive actions. The next section provides the details on goal embedding and the following sections 3.2, 3.3 describes how FuN is trained.

|

4. Architecture Details

This section provides the particular details of the model as described in section 3. The perceptual module $f^{percept}$ is a convolutional network (CNN) followed by a fully connected layer. The CNN has a first layer with 16 8 × 8 filters of stride 4, followed by a layer with with 32 4 × 4 filters of stride 2. The fully connected layer has 256 hidden units. Each convolutional and fully-connected layer is followed by a rectifier non-linearity[1]. The state space which the Manager implicitly models in formulating its goals is computed via $f^{Mspace}$, which is another fully connected layer followed by a rectifier non-linearity. The dimensionality of the embedding vectors, $w$, is set as $k = 16$. To encourage exploration in transition policy, at every step with a small probability $\epsilon$ we emit a random goal sampled from a uni-variate Gaussian.

The Worker's recurrent network $f^{Wrnn}$ is a standard LSTM (Hochreiter & Schmidhuber, 1997). For the Manager's recurrent network, $f^{Mrnn}$, we propose a novel design – the dilated LSTM, which is introduced in the next section. Both $f^{Mrnn}$ and $f^{Wrnn}$ have 256 hidden units.

4.1. Dilated LSTM

We propose a novel RNN architecture for the Manager, which operates at lower temporal resolution than the data stream. The main contribution here is the inductive bias towards slowly varying outputs, which have very long-term temporal dependencies. We define a dilated LSTM analogously to dilated convolutional networks (Yu|Yu & Koltun, 2016). For a dilation radius $r$ let the full state of the network be $h = \{\hat{h}^i\}^r_{i=1}$, i.e. it is composed of $r$ separate groups of sub-states or “cores". At time $t$ the network is governed by the following equations: $\hat{h}_t^{t\%r} , g_t = LSTM(s_t, \hat{h}_{t\%r}^{t−1} ; \theta^{ LSTM})$, where $\%$ denotes the modulo operation and allows us to indicate which group of cores is currently being updated. We make the parameters of the LSTM network $\theta^{LSTM}$ explicit to stress that the same set of parameters governs the update for each of the $r$ groups within the dLSTM.

At each time step only the corresponding part of the state is updated and the output is pooled across the previous $c$ outputs. This allows the $r$ groups of cores inside the dLSTM to preserve the memories for long periods, yet the dLSTM as a whole is still able to process and learn from every input experience, and is also able to update its output at every step. This idea is similar to clockwork RNNs (Koutnik et al., 2014), however there the top level "ticks" at a fixed, slow pace, whereas the dLSTM observes all the available training data instead. In the experiments we set or = 10$, and this was also used as the predictions horizon, $c$.

5. Experiments

6. Discussion and Future Work

Acknowledgements

Footnotes

- ↑ This is substantially the same CNN as in (Mnih et al., 2016; 2015), the only difference is that in the pre-processing stage we retain all colour channels.

References

2016

- (Yu & Koltun, 2016) ⇒ Fisher Yu, and Vladlen Koltun. (2016). “Multi-scale Context Aggregation by Dilated Convolutions.” In: Proceedings of 4th International Conference on Learning Representations (ICLR-2016).

2014

- (Koutnik et al., 2014) ⇒ Jan Koutnik, Klaus Greff, Faustino J. Gomez, and Jurgen Schmidhuber. (2014). “A Clockwork RNN.” In: Proceedings of the 31th International Conference on Machine Learning (ICML 2014).

1997

- (Hochreiter & Schmidhuber, 1997) ⇒ Sepp Hochreiter, and Jurgen Schmidhuber. (1997). “Long Short-term Memory". In: Neural computation, 9(8). DOI:10.1162/neco.1997.9.8.1735.

1993

- (Dayan & Hinton, 1993) ⇒ Peter Dayan, and Geoffrey E. Hinton. (1992). “Feudal Reinforcement Learning.” In: Proceedings of Advances in Neural Information Processing Systems 5 (NIPS 1992).

BibTeX

@inproceedings{2017_FeUdalNetworksforHierarchicalRe,

author = {Alexander Sasha Vezhnevets and

Simon Osindero and

Tom Schaul and

Nicolas Heess and

Max Jaderberg and

David Silver and

Koray Kavukcuoglu},

editor = {Doina Precup and

Yee Whye Teh},

title = {FeUdal Networks for Hierarchical Reinforcement Learning},

booktitle = {Proceedings of the 34th International Conference on Machine Learning

(ICML 2017)},

series = {Proceedings of Machine Learning Research},

volume = {70},

pages = {3540--3549},

publisher = {PMLR},

year = {2017},

url = {http://proceedings.mlr.press/v70/vezhnevets17a.html},

}

| Author | volume | Date Value | title | type | journal | titleUrl | doi | note | year | |

|---|---|---|---|---|---|---|---|---|---|---|

| 2017 FeUdalNetworksforHierarchicalRe | Koray Kavukcuoglu Simon Osindero David Silver Tom Schaul Alexander Sasha Vezhnevets Nicolas Heess Max Jaderberg | FeUdal Networks for Hierarchical Reinforcement Learning | 2017 |