2018 NeuralSpeedReadingviaSkimRNN

- (Seo et al., 2018) ⇒ Minjoon Seo, Sewon Min, Ali Farhadi, and Hannaneh Hajishirzi. (2018). “Neural Speed Reading via Skim-RNN.” In: International Conference on Learning Representations.

Subject Headings: Skim-Reading Task, Skim-RNN.

Notes

Cited By

Quotes

Abstract

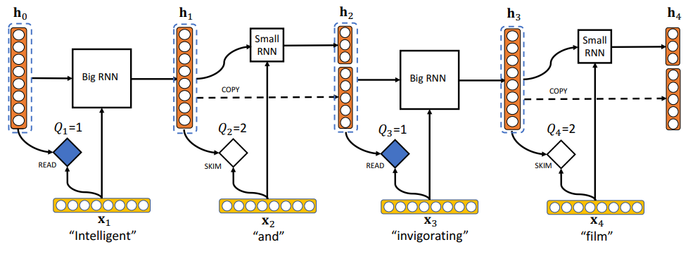

Inspired by the principles of speed reading, we introduce Skim-RNN, a recurrent neural network (RNN) that dynamically decides to update only a small fraction of the hidden state for relatively unimportant input tokens. Skim-RNN gives a computational advantage over an RNN that always updates the entire hidden state. Skim-RNN uses the same input and output interfaces as a standard RNN and can easily replace RNNs in existing models. In our experiments, we show that Skim-RNN can achieve significantly reduced computational cost without losing accuracy compared to standard RNNs across five different natural language tasks. In addition, we demonstrate that the trade-off between accuracy and speed of Skim-RNN can be dynamically controlled at inference time in a stable manner. Our analysis also shows that Skim-RNN running on a single CPU offers lower latency compared to standard RNNs on GPUs.
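A back-of-the-envelope sketch of where the computational advantage comes from, assuming plain RNN cells whose per-step cost is dominated by the two matrix-vector products `W x + U h`. The function names, the skim rate, and the sizes `d` (full hidden size) and `d_small` (number of units the small cell updates) are hypothetical, illustrative choices rather than the paper's reported configuration; the small 2-way read/skim classifier on `[x; h]` is also an assumption.

```python
def cell_macs(input_dim, hidden_in, hidden_out):
    """Multiply-accumulates for one plain RNN step: W x + U h (assumed cell type)."""
    return hidden_out * (input_dim + hidden_in)


def expected_macs_per_token(input_dim, d, d_small, skim_rate, decision=True):
    """Expected per-token cost when a fraction `skim_rate` of tokens is skimmed.

    Full read: the big cell recomputes all d hidden units.
    Skim: a small cell recomputes only d_small units; the rest are copied.
    """
    full = cell_macs(input_dim, d, d)
    skim = cell_macs(input_dim, d, d_small)
    cost = (1 - skim_rate) * full + skim_rate * skim
    if decision:
        # Hypothetical 2-way (read vs. skim) linear classifier on [x; h].
        cost += 2 * (input_dim + d)
    return cost


# Illustrative sizes only (not taken from the paper).
standard = cell_macs(100, 100, 100)
skimmed = expected_macs_per_token(100, 100, 10, skim_rate=0.9)
print(f"standard RNN: {standard} MACs/token, Skim-RNN: {skimmed:.0f} MACs/token")
```

With these illustrative sizes, skimming 90% of tokens lowers the estimate from 20,000 to roughly 4,200 multiply-accumulates per token. Wall-clock gains in practice also depend on hardware, batching, and the actual cell type.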

Introduction

Inspired by the principles of human speed reading, we introduce Skim-RNN (Figure 1), which makes a fast decision on the significance of each input (to the downstream task) and ‘skims’ through unimportant input tokens by using a smaller RNN to update only a fraction of the hidden state. When the decision is to ‘fully read’, Skim-RNN updates the entire hidden state with the default RNN cell.
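The read-or-skim step described above can be sketched in pure Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: it uses plain tanh RNN cells instead of whatever cells the paper uses, a hypothetical linear softmax classifier on `[x; h]` for the read/skim decision, random untrained weights, and a hypothetical `threshold` parameter on the skim probability. On a skim, the small cell rewrites only the first `d_small` hidden units and the remaining units are copied unchanged from the previous state.

```python
import math
import random


def matvec(W, v):
    """Multiply matrix W (a list of rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def rnn_cell(W, U, b, x, h):
    """Plain tanh RNN cell: tanh(W x + U h + b). A stand-in for the real cell."""
    return [math.tanh(a + c + bi) for a, c, bi in zip(matvec(W, x), matvec(U, h), b)]


def rand_matrix(rows, cols, rng):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


class SkimRNN:
    """Hypothetical toy Skim-RNN with untrained random weights."""

    def __init__(self, input_dim, d, d_small, seed=0):
        rng = random.Random(seed)
        self.d, self.d_small = d, d_small
        # Big cell: recomputes all d hidden units ("full read").
        self.big = (rand_matrix(d, input_dim, rng), rand_matrix(d, d, rng), [0.0] * d)
        # Small cell: reads the full state but outputs only d_small units ("skim").
        self.small = (rand_matrix(d_small, input_dim, rng),
                      rand_matrix(d_small, d, rng), [0.0] * d_small)
        # Decision layer: two logits (read, skim) computed from [x; h].
        self.decide = rand_matrix(2, input_dim + d, rng)

    def skim_probability(self, x, h):
        logits = matvec(self.decide, x + h)  # x + h concatenates the lists
        m = max(logits)
        exps = [math.exp(z - m) for z in logits]  # numerically stable softmax
        return exps[1] / sum(exps)

    def step(self, x, h, threshold=0.5):
        """Return (new hidden state, whether the token was skimmed)."""
        if self.skim_probability(x, h) > threshold:
            h_small = rnn_cell(*self.small, x, h)
            return h_small + h[self.d_small:], True  # copy untouched units
        return rnn_cell(*self.big, x, h), False

    def run(self, xs, threshold=0.5):
        h = [0.0] * self.d
        skims = []
        for x in xs:
            h, skimmed = self.step(x, h, threshold)
            skims.append(skimmed)
        return h, skims


model = SkimRNN(input_dim=3, d=8, d_small=2)
tokens = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
final_state, skim_decisions = model.run(tokens)
print(skim_decisions)
```

Raising `threshold` makes the toy model fully read more tokens and lowering it makes it skim more, which loosely mirrors the inference-time accuracy/speed control mentioned in the abstract. How the paper actually trains the discrete decision and exposes that control is not reproduced here.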

References


|  | Author | volume | Date Value | title | type | journal | titleUrl | doi | note | year |
|---|---|---|---|---|---|---|---|---|---|---|
| 2018 NeuralSpeedReadingviaSkimRNN | Ali Farhadi, Minjoon Seo, Hannaneh Hajishirzi, Sewon Min | | | Neural Speed Reading via Skim-RNN | | | | | | 2018 |
