2019 CUTIELearningtoUnderstandDocume

- (Zhao, Niu et al., 2019) ⇒ Xiaohui Zhao, Endi Niu, Zhuo Wu, and Xiaoguang Wang. (2019). “CUTIE: Learning to Understand Documents with Convolutional Universal Text Information Extractor.” In: arXiv preprint arXiv:1903.12363.

Subject Headings: Structured Visual Document Information Extraction, Visual Document, ICDAR 2019 SROIE Task3, ICDAR.

Notes

- Code at https://github.com/vsymbol/CUTIE

- It suggests that Spatial Information plays an intrinsic role in Document Key Information Extraction.

Cited By

Quotes

Abstract

Extracting key information from documents, such as receipts or invoices, and saving the texts of interest as structured data is crucial in the document-intensive streamlined processes of office automation in areas including, but not limited to, accounting, finance, and taxation. To avoid designing expert rules for each specific type of document, some published works attempt to tackle the problem by learning a model that explores the semantic context in text sequences, based on the Named Entity Recognition (NER) method from the NLP field. In this paper, we propose to harness the effective information from both the semantic meaning and the spatial distribution of texts in documents. Specifically, our proposed model, Convolutional Universal Text Information Extractor (CUTIE), applies convolutional neural networks on gridded texts, where texts are embedded as features with semantic connotations. We further explore the effect of employing different convolutional neural network structures and propose a fast and portable structure. We demonstrate the effectiveness of the proposed method on a dataset of up to 4,484 labelled receipts, without any pre-training or post-processing, achieving state-of-the-art performance that is much better than the NER-based methods in terms of both speed and accuracy. Experimental results also demonstrate that the proposed CUTIE model is able to achieve good performance with a much smaller amount of training data.
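
The core idea of applying a CNN to gridded, embedded texts can be illustrated with a minimal, untrained sketch in plain Python. It assumes each grid cell already holds an integer token id (with a hypothetical `PAD` id 0 for empty cells), looks each id up in an embedding table to get an H × W × D feature map, and applies a single 3 × 3 convolution with zero padding so that every cell receives one score per class. The vocabulary, embedding size, label set, and random weights are illustrative assumptions; CUTIE itself uses a deeper, trained network whose details are not given here.

```python
import random

PAD = 0  # hypothetical token id for empty grid cells


def embed_grid(grid, table):
    """Replace each token id in an H x W grid with its embedding vector (H x W x D)."""
    return [[table[tok] for tok in row] for row in grid]


def conv3x3(features, kernel, bias):
    """One 3x3 convolution with zero padding.

    features: H x W x D nested lists; kernel: [3][3][D][C]; bias: length C.
    Returns H x W x C scores, i.e. one score per class for every grid cell.
    """
    h, w = len(features), len(features[0])
    n_classes = len(bias)
    out = []
    for i in range(h):
        row = []
        for j in range(w):
            scores = list(bias)
            for di in range(-1, 2):
                for dj in range(-1, 2):
                    y, x = i + di, j + dj
                    if 0 <= y < h and 0 <= x < w:  # cells outside the grid count as zeros
                        for k, value in enumerate(features[y][x]):
                            for c in range(n_classes):
                                scores[c] += value * kernel[di + 1][dj + 1][k][c]
            row.append(scores)
        out.append(row)
    return out


def predict_classes(scores):
    """Pick the highest-scoring class index for each cell."""
    return [[max(range(len(s)), key=s.__getitem__) for s in row] for row in scores]


def random_weights(vocab_size, dim, n_classes, seed=0):
    """Random (untrained) embedding table, kernel, and zero bias for the demo."""
    rng = random.Random(seed)
    table = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(vocab_size)]
    table[PAD] = [0.0] * dim  # empty cells contribute nothing
    kernel = [[[[rng.uniform(-1, 1) for _ in range(n_classes)] for _ in range(dim)]
               for _ in range(3)] for _ in range(3)]
    bias = [0.0] * n_classes
    return table, kernel, bias


CLASSES = ["background", "distance", "total"]  # hypothetical label set
grid = [
    [3, PAD, PAD, 5],
    [PAD, 4, PAD, PAD],
    [1, PAD, 2, PAD],
]
table, kernel, bias = random_weights(vocab_size=6, dim=4, n_classes=len(CLASSES))
labels = predict_classes(conv3x3(embed_grid(grid, table), kernel, bias))
print(labels)  # 3 x 4 grid of class indices; arbitrary because the weights are random
```

Because the kernel spans neighbouring cells, each cell's score depends on the words around it as well as on its own embedding; stacking more convolutional layers widens that spatial context.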

1. Introduction

Scanned Receipts OCR and Information Extraction (SROIE) is of great benefit to services and applications such as efficient archiving, compliance checking, and fast indexing in the document-intensive streamlined processes of office automation in areas including, but not limited to, accounting, finance, and taxation. SROIE involves two specific tasks: receipt OCR and key information extraction. In this work, we focus on the second task, which is rarely addressed in published research. Key information extraction faces major challenges: the many different types of document structures and the vast number of potential keywords of interest introduce great difficulty. Although the commonly used rule-based method can be implemented with carefully designed expert rules, it works only on certain specific types of documents and requires no less effort to adapt to each new type of document. Therefore, it is desirable to have a learning-based key information extraction method that requires limited human effort and relies solely on deep learning, without designing expert rules for any specific type of document.

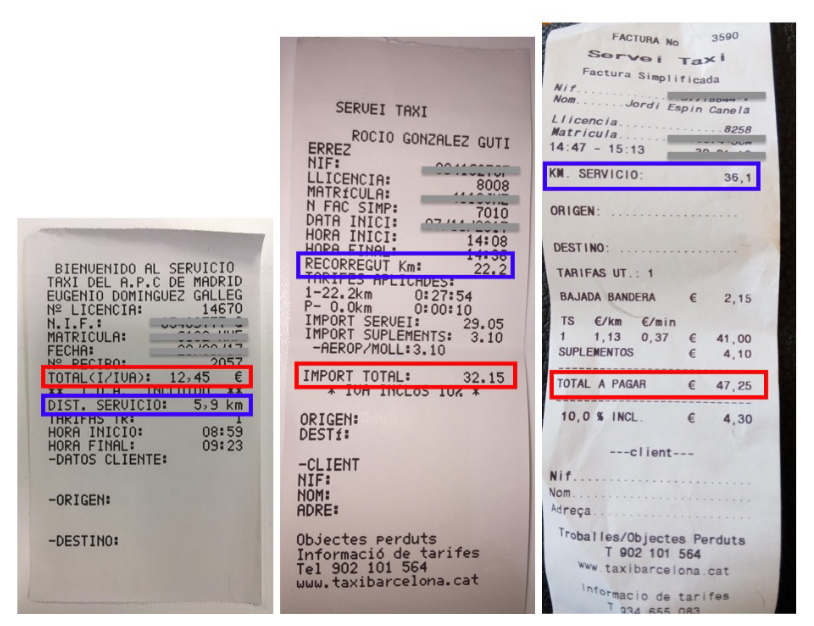

CloudScan is a learning-based invoice analysis system [9]. Aiming not to rely on invoice layout templates, CloudScan trains a model that can generalize to unseen invoice layouts, using either Long Short-Term Memory (LSTM) or Logistic Regression (LR) with expert-designed rules as training features. This turns out to be very similar to solving a Named Entity Recognition (NER) or slot filling task. For that reason, several models can be employed; e.g., the Bidirectional Encoder Representations from Transformers (BERT) model is a recently proposed state-of-the-art method that has achieved great success in a wide range of NLP tasks, including NER [7]. However, NER models were not originally designed to solve the key information extraction problem in SROIE. To employ NER models, the text words in the original document are aligned into a long paragraph based on a line-based rule. In fact, documents such as receipts and invoices come in various layout styles that were designed for different scenarios or by different enterprise entities. The order and word-to-word distance of the texts in the line-aligned long paragraph tend to vary greatly due to these layout variations, which is difficult for natural-language-oriented methods to handle. Typical examples of documents with different layouts are illustrated in Fig. 2.
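
The line-based alignment that NER approaches depend on can be sketched as follows. This is an illustrative assumption, not the exact rule used in the cited works: each OCR word is a `(text, x, y)` tuple, words whose y coordinate lies within a hypothetical tolerance `line_tol` of a line's first word share that line, and lines are read top to bottom, left to right. The two toy layouts are invented to show how the same TOTAL/amount pair lands at different token distances once the page is flattened into text.

```python
def serialize_by_lines(words, line_tol=5):
    """Flatten OCR words into one paragraph, line by line, left to right."""
    ordered = sorted(words, key=lambda w: (w[2], w[1]))  # top-to-bottom, then left-to-right
    lines, current, current_y = [], [], None
    for text, x, y in ordered:
        # Start a new line when y drifts too far from the current line's first word.
        if current and abs(y - current_y) > line_tol:
            lines.append(current)
            current = []
        if not current:
            current_y = y
        current.append((x, text))
    if current:
        lines.append(current)
    return " ".join(text for line in lines for _, text in sorted(line))


def token_distance(paragraph, a, b):
    """Number of token positions between the first occurrences of a and b."""
    tokens = paragraph.split()
    return abs(tokens.index(a) - tokens.index(b))


# Layout A: the amount sits on the same line as its label.
layout_a = [("TOTAL", 10, 100), ("12.50", 200, 100), ("THANK", 10, 140), ("YOU", 60, 140)]
# Layout B: labels form one row and their values sit in the row below.
layout_b = [("TOTAL", 10, 100), ("DISTANCE", 120, 100), ("12.50", 10, 120), ("8.3km", 120, 120)]

text_a = serialize_by_lines(layout_a)  # "TOTAL 12.50 THANK YOU"
text_b = serialize_by_lines(layout_b)  # "TOTAL DISTANCE 12.50 8.3km"
print(token_distance(text_a, "TOTAL", "12.50"), token_distance(text_b, "TOTAL", "12.50"))  # 1 2
```

In layout A the amount directly follows its label, while in layout B another label intervenes; a sequence model has to learn both patterns from data, which is the layout sensitivity described above.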

Figure 1. Framework of the proposed method: (a) positional mapping of the scanned document image to a grid that preserves the texts' relative spatial relations, (b) feeding the generated grid into the CNN to extract key information, (c) reverse mapping of the extracted key information for visual reference.

In this work, to incorporate spatial information into the key information extraction process, we propose to tackle this problem with a CNN-based network structure that incorporates semantic features in a carefully designed fashion. In particular, our proposed model, called Convolutional Universal Text Information Extractor (CUTIE), tackles the key information extraction problem by applying a convolutional deep learning model to the gridded texts, as illustrated in Fig. 1. The gridded texts are formed with the proposed grid positional mapping method, which generates the grid so as to preserve the texts' relative spatial relationships in the original scanned document image. The rich semantic information is encoded from the gridded texts at the very first stage of the convolutional neural network by a word embedding layer. CUTIE can simultaneously exploit both the semantic information and the spatial information of the texts in the scanned document image, and it achieves a new state-of-the-art result for key information extraction, outperforming the BERT model without requiring pretraining on a huge text dataset [7, 12].
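
Grid positional mapping and the reverse mapping of Fig. 1 (c) can be sketched as below. The details are assumptions, since this section does not specify them: word box centers are taken as already normalized to [0, 1), each center is scaled to a cell of a fixed `rows` × `cols` grid, and when two words fall into the same cell the later one (in left-to-right order) is pushed to the next free column. The function and parameter names are hypothetical.

```python
def grid_positional_map(words, rows, cols):
    """Place OCR words on a rows x cols grid while keeping their relative layout.

    words: list of (text, cx, cy) with box centers normalized to [0, 1).
    Returns (grid, cell_to_word): grid cells hold a word's text or None, and
    cell_to_word maps each occupied (row, col) back to the word's index.
    """
    grid = [[None] * cols for _ in range(rows)]
    cell_to_word = {}
    # Visit words left to right so that collisions push later words rightward,
    # which keeps the left-to-right order of words that share a grid row.
    for idx in sorted(range(len(words)), key=lambda i: words[i][1]):
        text, cx, cy = words[idx]
        r = min(int(cy * rows), rows - 1)
        c = min(int(cx * cols), cols - 1)
        while c < cols and grid[r][c] is not None:  # cell taken: try the next column
            c += 1
        if c == cols:
            raise ValueError(f"grid row {r} is full; use more columns")
        grid[r][c] = text
        cell_to_word[(r, c)] = idx
    return grid, cell_to_word


def reverse_map(cell_labels, cell_to_word):
    """Turn per-cell predictions {(row, col): label} into per-word labels {index: label}."""
    return {cell_to_word[cell]: label
            for cell, label in cell_labels.items() if cell in cell_to_word}


words = [("TOTAL", 0.10, 0.80), ("12.50", 0.70, 0.80), ("TAXI", 0.40, 0.10), ("AMOUNT", 0.12, 0.80)]
grid, cell_to_word = grid_positional_map(words, rows=4, cols=8)
# "AMOUNT" collides with "TOTAL" in cell (3, 0) and is shifted to (3, 1).
print(cell_to_word)  # {(3, 0): 0, (3, 1): 3, (0, 3): 2, (3, 5): 1}
print(reverse_map({(3, 5): "total_amount", (0, 3): "other", (2, 2): "other"}, cell_to_word))
# {1: 'total_amount', 2: 'other'}
```

Empty cells (`None`) would be mapped to a padding token before the embedding layer. Pushing collisions sideways is only one possible policy; a finer grid reduces how often it is needed.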

2. Related Works

Several rule-based invoice analysis systems were proposed in [10, 6, 8]. Intellix by DocuWare requires a template annotated with the relevant fields [10]; for that reason, a collection of templates has to be constructed. SmartFix employs specifically designed configuration rules for each template [6]. Esser et al. use a dataset of fixed key information positions for each template [8]. Clearly, rule-based methods rely heavily on predefined template rules to extract information from specific invoice layouts.
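
To illustrate why fixed-position templates are brittle, here is a minimal sketch of template-based extraction in the spirit of the approaches above. It is not any cited system's actual implementation: the template format (field name mapped to a fixed region in normalized page coordinates), the field names, and the sample words are all hypothetical.

```python
# Hypothetical template: field name -> (x0, y0, x1, y1) region in normalized page coordinates.
TAXI_TEMPLATE = {
    "distance": (0.5, 0.3, 1.0, 0.4),
    "total": (0.5, 0.8, 1.0, 0.9),
}


def extract_with_template(words, template):
    """For each field, collect the words whose box centers fall inside its fixed region."""
    return {
        field: [text for text, cx, cy in words if x0 <= cx < x1 and y0 <= cy < y1]
        for field, (x0, y0, x1, y1) in template.items()
    }


matching_receipt = [("8.3km", 0.7, 0.35), ("12.50", 0.7, 0.85)]
shifted_receipt = [("8.3km", 0.2, 0.50), ("12.50", 0.2, 0.60)]  # same fields, different layout

print(extract_with_template(matching_receipt, TAXI_TEMPLATE))  # {'distance': ['8.3km'], 'total': ['12.50']}
print(extract_with_template(shifted_receipt, TAXI_TEMPLATE))   # {'distance': [], 'total': []}
```

Every new layout needs its own template, which is the manual effort that learning-based methods aim to avoid.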

CloudScan is a work that attempts to extract key information with learning-based models [9]. First, N-gram features are formed by concatenating the results of expert-designed rules computed on the texts of each document line. Then, the features are used to train an RNN-based or a logistic-regression-based classifier for key information extraction. Certain post-processing steps are added to further enhance the extraction results. However, the line-based feature extraction method cannot achieve its best performance when document texts are not perfectly aligned. Moreover, the RNN-based classifier in CloudScan, a bidirectional LSTM model, has limited ability to learn the relationships among distant words. Bidirectional Encoder Representations from Transformers (BERT) is a recently proposed model that is pre-trained on a huge dataset and can be fine-tuned for a specific task, including Named Entity Recognition (NER); it outperforms most state-of-the-art results on several NLP tasks [7]. Since the previous learning-based methods treat the key information extraction problem as an NER problem, applying BERT can achieve a better result than the bi-LSTM in CloudScan.
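
The line-based N-gram features described above can be sketched as follows. The exact CloudScan features are not given in this section, so the rule set here is a hypothetical stand-in: each "expert rule" is a small predicate on an N-gram string, and every N-gram of a line gets a 0/1 vector with one entry per rule.

```python
import re

# Hypothetical expert rules: each maps an N-gram string to True/False.
RULES = {
    "is_amount": lambda s: re.fullmatch(r"\d+[.,]\d{2}", s) is not None,
    "is_date": lambda s: re.fullmatch(r"\d{1,2}/\d{1,2}/\d{2,4}", s) is not None,
    "has_total_keyword": lambda s: "total" in s.lower(),
}


def line_ngrams(line, n_max=2):
    """All 1- to n_max-grams of the whitespace-separated tokens on one line."""
    tokens = line.split()
    return [" ".join(tokens[i:i + n])
            for n in range(1, n_max + 1)
            for i in range(len(tokens) - n + 1)]


def ngram_features(line, n_max=2):
    """Pair each N-gram with its rule-feature vector, in RULES order."""
    return [(gram, [int(rule(gram)) for rule in RULES.values()])
            for gram in line_ngrams(line, n_max)]


for gram, feats in ngram_features("TOTAL 12.50"):
    print(gram, feats)
# TOTAL [0, 0, 1]
# 12.50 [1, 0, 0]
# TOTAL 12.50 [0, 0, 1]
```

The bigram "TOTAL 12.50" only exists when the label and the amount share a line; if the amount is printed on the next line, no N-gram links the two, which is the alignment weakness noted above.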

Figure 2. Examples of scanned taxi receipt images. Blue and red rectangles mark the key information for travel distance and total amount, respectively, to help readers find it. Note the different types of spatial layouts and key information texts in these receipt images.

…

References

| | Author | volume | Date Value | title | type | journal | titleUrl | doi | note | year |
|---|---|---|---|---|---|---|---|---|---|---|
| 2019 CUTIELearningtoUnderstandDocume | Xiaohui Zhao, Endi Niu, Zhuo Wu, Xiaoguang Wang | | | CUTIE: Learning to Understand Documents with Convolutional Universal Text Information Extractor | | | | | | 2019 |