2019 DeepResidualNeuralNetworksforAu

- (Alzantot et al., 2019) ⇒ Moustafa Alzantot, Ziqi Wang, and Mani B. Srivastava. (2019). “Deep Residual Neural Networks for Audio Spoofing Detection.” In: Proceedings of 20th Annual Conference of the International Speech Communication Association (Interspeech 2019).

Subject Headings: Residual Neural Network; Deep Residual Neural Network; Spec-ResNet.

Notes

Cited By

- Google Scholar: ~ 19 Citations, Retrieved: 2021-01-24.

Quotes

Author Keywords

Abstract

The state-of-the-art models for speech synthesis and voice conversion are capable of generating synthetic speech that is perceptually indistinguishable from bonafide human speech. These methods represent a threat to the automatic speaker verification (ASV) systems. Additionally, replay attacks where the attacker uses a speaker to replay a previously recorded genuine human speech are also possible. In this paper, we present our solution for the ASVSpoof2019 competition, which aims to develop countermeasure systems that distinguish between spoofing attacks and genuine speech. Our model is inspired by the success of residual convolutional networks in many classification tasks. We build three variants of a residual convolutional neural network that accept different feature representations (MFCC, log-magnitude STFT, and CQCC) of input. We compare the performance achieved by our model variants and the competition baseline models. In the logical access scenario, the fusion of our models has zero t-DCF cost and zero equal error rate (EER), as evaluated on the development set. On the evaluation set, our model fusion improves the t-DCF and EER by 25% compared to the baseline algorithms. Against physical access replay attacks, our model fusion improves the baseline algorithms' t-DCF and EER scores by 71% and 75% on the evaluation set, respectively.
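To make the "zero equal error rate" result concrete: the EER is the error rate at the decision threshold where the false rejection rate (bona fide trials rejected) equals the false acceptance rate (spoofed trials accepted). The sketch below approximates it by sweeping every observed score as a threshold. It assumes that a higher score means "more likely bona fide"; the function name `compute_eer` is hypothetical and is not the official ASVspoof scoring tool.

```python
def compute_eer(bonafide_scores, spoof_scores):
    """Approximate the equal error rate by sweeping candidate thresholds.

    Assumption (hypothetical convention): higher score = more likely bona fide,
    and a trial is accepted as bona fide when score >= threshold.
    """
    thresholds = sorted(set(bonafide_scores) | set(spoof_scores))
    thresholds.append(float("inf"))  # a threshold that rejects every trial
    best_gap, eer = float("inf"), None
    for t in thresholds:
        # false rejection rate: bona fide trials scored below the threshold
        frr = sum(s < t for s in bonafide_scores) / len(bonafide_scores)
        # false acceptance rate: spoofed trials scored at or above the threshold
        far = sum(s >= t for s in spoof_scores) / len(spoof_scores)
        gap = abs(frr - far)
        if gap < best_gap:  # keep the threshold where FRR and FAR are closest
            best_gap, eer = gap, (frr + far) / 2
    return eer


# Perfectly separated scores give an EER of zero.
print(compute_eer([0.9, 0.8, 0.95], [0.1, 0.2, 0.3]))
```

When every bona fide score lies above every spoof score, some threshold yields neither false rejections nor false acceptances, which is what a zero EER on the development set means.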

1. Introduction

2. Related Work

3. Model Design

3.1. Feature Extraction

3.2. Model Architecture

(...)

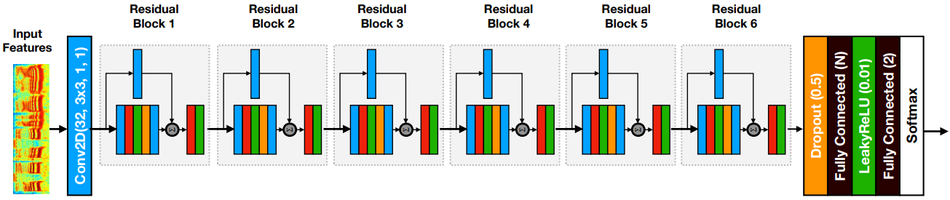

Figure 1 shows the architecture of the Spec-ResNet model, which takes the log-magnitude STFT as input features. First, the input is treated as a single-channel image and passed through a 2D convolution layer with 32 filters (filter size = 3 × 3, stride = 1, padding = 1). The output volume of this first convolution layer has 32 channels and is passed through a sequence of 6 residual blocks. The output of the last residual block is fed into a dropout layer (dropout rate = 50%; Srivastava et al., 2014), followed by a hidden fully connected (FC) layer with a leaky-ReLU (He et al., 2015) activation function ($\alpha = 0.01$). The outputs of the hidden FC layer are fed into another FC layer with two units that produce the classification logits. Finally, a softmax layer converts the logits into a probability distribution.
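The classification head described above (hidden FC layer with leaky-ReLU, a two-unit FC layer, then softmax) can be sketched in plain Python. This is a minimal inference-time sketch, not the authors' code: dropout is left out because it is inactive at inference, the weights are hypothetical, and which output index means "bona fide" is an assumption not stated in the text.

```python
import math


def leaky_relu(x, alpha=0.01):
    # leaky-ReLU with the negative slope alpha = 0.01 described above
    return x if x > 0 else alpha * x


def dense(inputs, weights, biases):
    # fully connected layer: one output per (weight row, bias) pair
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


def softmax(logits):
    # subtract the max logit for numerical stability before exponentiating
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(features, w_hidden, b_hidden, w_out, b_out):
    """Inference-time head: hidden FC + leaky-ReLU -> 2-unit FC -> softmax.

    Dropout is omitted because it is disabled at inference time.
    """
    hidden = [leaky_relu(h) for h in dense(features, w_hidden, b_hidden)]
    logits = dense(hidden, w_out, b_out)  # two classification logits
    return softmax(logits)


# Hypothetical identity weights, just to exercise the pipeline.
eye = [[1.0, 0.0], [0.0, 1.0]]
probs = classify([1.0, -2.0], eye, [0.0, 0.0], eye, [0.0, 0.0])
print(probs)  # two probabilities that sum to 1
```

In a real model the `features` vector would be the flattened output of the last residual block; here it is a hand-picked two-element list.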


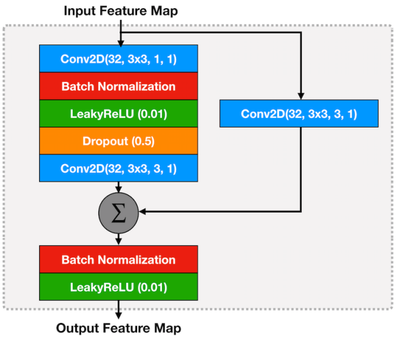

The structure of a residual block is shown in Figure 2. Each residual block has a Conv2D layer (32 filters, filter size = 3 × 3, stride = 1, padding = 1) followed by a batch normalization layer (Ioffe & Szegedy, 2015), a leaky-ReLU activation layer (He et al., 2015), a dropout layer (dropout probability = 0.5; Srivastava et al., 2014), and a final Conv2D layer (also 32 filters with filter size = 3 × 3, but with stride = 3 and padding = 1). Dropout acts as a regularizer to reduce overfitting, and batch normalization (Ioffe & Szegedy, 2015) accelerates network training. A skip connection adds the block's input directly to its output. To guarantee that the dimensions agree, we apply a Conv2D layer (32 filters, filter size = 3 × 3, stride = 3, padding = 1) on the bypass route. Finally, batch normalization (Ioffe & Szegedy, 2015) and a leaky-ReLU nonlinearity produce the residual block output.
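Why the bypass convolution makes the addition possible can be checked with the standard convolution output-size formula, $\lfloor (n + 2p - k)/s \rfloor + 1$. A 3 × 3 convolution with stride 1 and padding 1 preserves the size, so the main path's spatial size is set by its stride-3 convolution alone, which is exactly what the stride-3 bypass convolution produces. The sketch below tracks only spatial sizes (channels stay at 32). The input size of 257 × 400 is a hypothetical value, since the actual STFT dimensions come from the elided feature-extraction section.

```python
def conv_out(size, kernel=3, stride=1, padding=1):
    # standard 2-D convolution output size along one axis
    return (size + 2 * padding - kernel) // stride + 1


def residual_block_shape(height, width):
    """Spatial size after one residual block as described in the text."""
    # main path: 3x3 conv (stride 1) keeps the size, then 3x3 conv (stride 3)
    main = (conv_out(conv_out(height, stride=1), stride=3),
            conv_out(conv_out(width, stride=1), stride=3))
    # bypass path: a single 3x3 conv with stride 3
    skip = (conv_out(height, stride=3), conv_out(width, stride=3))
    # the element-wise addition requires both paths to have the same shape
    assert main == skip, "main and bypass shapes must match"
    return main


def network_shape(height, width, n_blocks=6):
    # the stem conv (3x3, stride 1, padding 1) keeps the input size
    h, w = conv_out(height), conv_out(width)
    for _ in range(n_blocks):
        h, w = residual_block_shape(h, w)
    return h, w


print(network_shape(257, 400))  # hypothetical input size
```

With stride 3 in every block, each block shrinks both axes to roughly a third (rounded up), so six blocks collapse even a fairly large input to a very small feature map before the FC layers.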


(...)

4. Evaluation

5. Conclusions

References

2014

- (Srivastava et al., 2014) ⇒ N. Srivastava, G. Hinton, A. Krizhevsky, I. Sutskever, and R. Salakhutdinov. (2014). “Dropout: A Simple Way to Prevent Neural Networks from Overfitting.” In: The Journal of Machine Learning Research, vol. 15, no. 1, pp. 1929–1958.

2015a

- (He et al., 2015) ⇒ K. He, X. Zhang, S. Ren, and J. Sun. (2015). “Delving Deep into Rectifiers: Surpassing Human-Level Performance on ImageNet Classification.” In: Proceedings of the IEEE International Conference on Computer Vision, pp. 1026–1034.

2015b

- (Ioffe & Szegedy, 2015) ⇒ S. Ioffe and C. Szegedy. (2015). “Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift.” In: arXiv preprint arXiv:1502.03167.

BibTeX

@inproceedings{2019_DeepResidualNeuralNetworksforAu,
  author    = {Moustafa Alzantot and
               Ziqi Wang and
               Mani B. Srivastava},
  editor    = {Gernot Kubin and
               Zdravko Kacic},
  title     = {Deep Residual Neural Networks for Audio Spoofing Detection},
  booktitle = {Proceedings of 20th Annual Conference of the International Speech
               Communication Association (Interspeech 2019)},
  pages     = {1078--1082},
  publisher = {ISCA},
  year      = {2019},
  url       = {https://doi.org/10.21437/Interspeech.2019-3174},
  doi       = {10.21437/Interspeech.2019-3174},
}

| | Author | volume | Date Value | title | type | journal | titleUrl | doi | note | year |
|---|---|---|---|---|---|---|---|---|---|---|
| 2019 DeepResidualNeuralNetworksforAu | Moustafa Alzantot, Ziqi Wang, Mani B. Srivastava | | | Deep Residual Neural Networks for Audio Spoofing Detection | | | | | | 2019 |