2020 MachineLearningTestingSurveyLan

- (Zhang, Harman et al., 2020) ⇒ Jie M. Zhang, Mark Harman, Lei Ma, and Yang Liu. (2020). “Machine Learning Testing: Survey, Landscapes and Horizons.” In: IEEE Transactions on Software Engineering (Early Access).

Subject Headings: Machine Learning (ML) Testing, Machine Learning (ML) Bugs.

Notes

- Preprint at https://arxiv.org/abs/1906.10742

Cited By

Quotes

Abstract

This paper provides a comprehensive survey of Machine Learning Testing (ML testing) research. It covers 138 papers on testing properties (e.g., correctness, robustness, and fairness), testing components (e.g., the data, learning program, and framework), testing workflow (e.g., test generation and test evaluation), and application scenarios (e.g., autonomous driving, machine translation). The paper also analyses trends concerning datasets, research trends, and research focus, concluding with research challenges and promising research directions in machine learning testing.

1 INTRODUCTION

…

2 PRELIMINARIES OF MACHINE LEARNING

…

3 MACHINE LEARNING TESTING

This section gives a definition and analyses of ML testing. It describes the testing workflow (how to test), testing properties (what to test), and testing components (where to test).

3.1 Definition

A software bug refers to an imperfection in a computer program that causes a discordance between the existing and the required conditions [41]. In this paper, we use the term ‘bug’ to refer to the differences between the existing and required behaviours of an ML system [1].

Definition 1 (ML Bug). An ML bug refers to any imperfection in a machine learning item that causes a discordance between the existing and the required conditions. We define ML testing as any activity aimed at detecting ML bugs.

Definition 2 (ML Testing). Machine Learning Testing (ML testing) refers to any activity designed to reveal machine learning bugs.

The definitions of machine learning bugs and ML testing indicate three aspects of machine learning: the required conditions, the machine learning items, and the testing activities. A machine learning system may have different types of ‘required conditions’, such as correctness, robustness, and privacy. An ML bug may exist in the data, the learning program, or the framework. The testing activities may include test input generation, test oracle identification, test adequacy evaluation, and bug triage. In this survey, we refer to the above three aspects as testing properties, testing components, and testing workflow, respectively, according to which we collect and organise the related work.

Note that a test input in ML testing can take much more diverse forms than in traditional software testing, because not only the code but also the data may contain bugs. When trying to detect bugs in data, we may even use a training program as a test input to check some properties required of the data.

3.2 ML Testing Workflow

ML testing workflow is about how to conduct ML testing with different testing activities. In this section, we first briefly introduce the role of ML testing when building ML models, then present the key procedures and activities in ML testing. We introduce more details of the current research related to each procedure in Section 5.

3.2.1 Role of Testing in ML Development

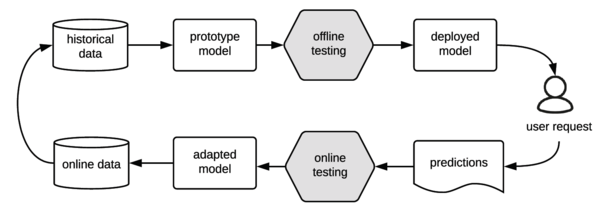

Figure 4 shows the life cycle of deploying a machine learning system with ML testing activities involved. At the very beginning, a prototype model is generated based on historical data; before deploying the model online, one needs to conduct offline testing, such as cross-validation, to make sure that the model meets the required conditions. After deployment, the model makes predictions, yielding new data that can be analysed via online testing to evaluate how the model interacts with user behaviours.

There are several reasons that make online testing essential. First, offline testing usually relies on test data, which usually fails to fully represent future data [42]. Second, offline testing cannot exercise some circumstances that may be problematic in real application scenarios, such as data loss and call delays. Third, offline testing has no access to some business metrics, such as open rate, reading time, and click-through rate.

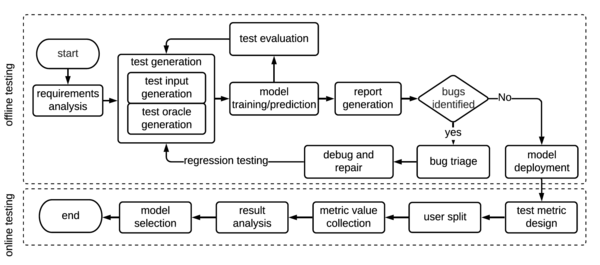

In the following, we present an ML testing workflow adapted from classic software testing workflows. Figure 5 shows the workflow, including both offline testing and online testing.

3.2.2 Offline Testing

The workflow of offline testing is shown by the top dotted rectangle of Figure 5. At the very beginning, developers need to conduct requirement analysis to define the expectations of the users for the machine learning system under test. In requirement analysis, specifications of a machine learning system are analysed and the whole testing procedure is planned. After that, test inputs are either sampled from the collected data or generated based on a specific purpose. Test oracles are then identified or generated (see Section 5.2 for more details of test oracles in machine learning). When the tests are ready, they need to be executed for developers to collect results. The test execution process involves building a model with the tests (when the tests are training data) or running a built model against the tests (when the tests are test data), as well as checking whether the test oracles are violated. After the process of test execution, developers may use evaluation metrics to check the quality of tests, i.e., the ability of the tests to expose ML problems.

The test execution results yield a bug report to help developers reproduce, locate, and fix the bug. The identified bugs are labelled with different severities and assigned to different developers. Once a bug is repaired, regression testing is conducted to make sure the repair solves the reported problem without introducing new ones. If no bugs are identified, the offline testing process ends, and the model is deployed.
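The offline workflow can be sketched as a k-fold cross-validation loop: each held-out fold serves as test data, the empirical correctness of Equation (2) in Section 3.4.1 is computed on it, and a minimum-accuracy threshold acts as a simple test oracle whose violations would feed a bug report. This is a minimal, dependency-free sketch under stated assumptions: the nearest-centroid learner, the interleaved fold assignment, the `min_accuracy` threshold, and all function names are hypothetical illustrations, not something prescribed by the survey.

```python
from statistics import mean


def train_nearest_centroid(xs, ys):
    """Hypothetical learner for 1-D features: one centroid (mean) per class."""
    centroids = {label: mean(x for x, y in zip(xs, ys) if y == label)
                 for label in set(ys)}
    # Predict the label whose centroid is closest to x.
    return lambda x: min(centroids, key=lambda c: abs(x - centroids[c]))


def empirical_correctness(model, xs, ys):
    """Equation (2): fraction of test items whose prediction equals the label."""
    return sum(model(x) == y for x, y in zip(xs, ys)) / len(xs)


def k_fold_offline_test(xs, ys, k=3, min_accuracy=0.9):
    """Run k-fold cross-validation and return the folds violating the oracle."""
    n = len(xs)
    folds = [list(range(i, n, k)) for i in range(k)]  # interleaved folds
    violations = []
    for fold_id, test_idx in enumerate(folds):
        held_out = set(test_idx)
        train_idx = [i for i in range(n) if i not in held_out]
        model = train_nearest_centroid([xs[i] for i in train_idx],
                                       [ys[i] for i in train_idx])
        acc = empirical_correctness(model, [xs[i] for i in test_idx],
                                    [ys[i] for i in test_idx])
        if acc < min_accuracy:  # oracle violated: candidate for a bug report
            violations.append((fold_id, acc))
    return violations


xs = [0.0, 0.1, 0.2, 0.3, 0.4, 0.5, 5.0, 5.1, 5.2, 5.3, 5.4, 5.5]
clean = [0] * 6 + [1] * 6
noisy = [1] + clean[1:]  # simulated data bug: first label flipped
```

On the clean labels every fold passes, while the flipped label drags fold 0 down to 0.75 accuracy and is reported as a violation. In practice the learner would be the real learning program and the threshold would come from requirement analysis.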

3.2.3 Online Testing

Offline testing tests the model with historical data rather than in the real application environment, and it lacks the collection of user-behaviour data. Online testing compensates for these shortcomings and aims to detect bugs after the model is deployed online.

The workflow of online testing is shown at the bottom of Figure 5. There are different methods of conducting online testing for different purposes. For example, runtime monitoring continuously checks whether the running ML system meets its requirements or violates some desired runtime properties. Another commonly used scenario is to monitor user responses in order to find out whether a new model is superior to the old one in certain application contexts. A/B testing is one typical type of such online testing [43]. It splits customers into groups to compare two versions of the system (e.g., web pages). When performing A/B testing on ML systems, the sampled users are split into two groups, one served by the new ML model and the other by the old one.
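Deciding whether the new model is superior usually requires a statistical comparison of the two groups. The sketch below assumes the monitored business metric is a click-through rate and applies a standard two-proportion z-test, which is one common choice rather than a method prescribed by the survey; the function name and the counts in the example are hypothetical.

```python
from math import erf, sqrt


def two_proportion_z_test(clicks_a, users_a, clicks_b, users_b):
    """Two-sided z-test for a difference between two click-through rates.

    Group A is served by the old model, group B by the new one.
    Returns (z, p_value); a positive z favours the new model.
    """
    p_a = clicks_a / users_a
    p_b = clicks_b / users_b
    # Pooled rate under the null hypothesis that both models perform equally.
    pooled = (clicks_a + clicks_b) / (users_a + users_b)
    se = sqrt(pooled * (1 - pooled) * (1 / users_a + 1 / users_b))
    z = (p_b - p_a) / se
    # Standard normal CDF via erf: Phi(z) = (1 + erf(z / sqrt(2))) / 2.
    phi = 0.5 * (1 + erf(abs(z) / sqrt(2)))
    return z, 2 * (1 - phi)


z, p = two_proportion_z_test(200, 5000, 260, 5000)
```

Here 200 clicks from 5,000 users on the old model versus 260 from 5,000 on the new one gives z ≈ 2.86 and p ≈ 0.004. A fixed-horizon test like this assumes the sample size is fixed in advance; repeatedly peeking at running results inflates the false-positive rate.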

MAB (Multi-Armed Bandit) is another online testing approach [44]. It first conducts A/B testing for a short time to find the best model, then puts more resources on the chosen model.
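The strategy just described, a short exploration phase followed by committing traffic to the apparent winner, is known in the bandit literature as explore-then-commit; adaptive policies such as ε-greedy or Thompson sampling are common alternatives. A minimal simulation, assuming each model's per-user reward is a Bernoulli click with a hypothetical rate; the function and parameter names are illustrative.

```python
import random


def explore_then_commit(click_rates, explore_per_model, total_users, seed=0):
    """Simulate explore-then-commit over models with Bernoulli click rates.

    Returns (index of the committed model, total clicks collected).
    """
    rng = random.Random(seed)
    clicks = [0] * len(click_rates)
    served = 0
    # Exploration: serve every model to the same number of users (an A/B/n test).
    for arm, rate in enumerate(click_rates):
        for _ in range(explore_per_model):
            clicks[arm] += rng.random() < rate
            served += 1
    # Commit: route all remaining users to the empirically best model.
    best = max(range(len(click_rates)), key=lambda arm: clicks[arm])
    total = sum(clicks)
    for _ in range(total_users - served):
        total += rng.random() < click_rates[best]
    return best, total


best, total = explore_then_commit([0.02, 0.30], explore_per_model=500,
                                  total_users=5000)
```

The exploration budget is the key design choice: too short and the committed model may be the wrong one, too long and many users are served by the inferior model.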

3.3 ML Testing Components

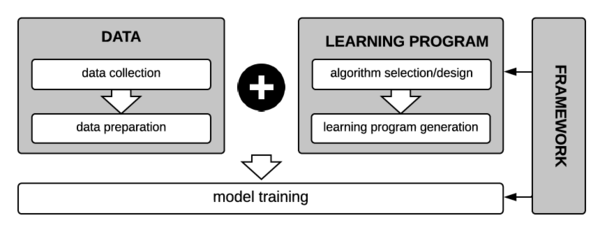

To build a machine learning model, an ML software developer usually needs to collect data, label the data, design learning program architecture, and implement the proposed architecture based on specific frameworks. The procedure of machine learning model development requires interaction with several components such as data, learning program, and learning framework, while each component may contain bugs.

Figure 6 shows the basic procedure of building an ML model and the major components involved in the process. Data are collected and pre-processed for use; the learning program is the code that is run to train the model; the framework (e.g., Weka, scikit-learn, and TensorFlow) offers algorithms and other libraries for developers to choose from when writing the learning program.

Thus, when conducting ML testing, developers may need to look for bugs in every component, including the data, the learning program, and the framework. In particular, error propagation is a more serious problem in ML development than in traditional software, because the components are more closely coupled with each other [8], which underlines the importance of testing each ML component. We introduce bug detection in each ML component below.

The behaviours of a machine learning system largely depend on data [8]. Bugs in data affect the quality of the generated model and can be amplified to yield more serious problems over time [45]. Bug detection in data checks problems such as whether the data is sufficient for training or testing a model (also called the completeness of the data [46]), whether the data is representative of future data, whether the data contains a lot of noise such as biased labels, whether there is skew between training data and test data [45], and whether there is data poisoning [47] or adversarial information that may affect the model’s performance.
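Two of the data checks listed above, completeness and skew between training and test data, can be approximated with simple statistics. A minimal sketch, assuming records are Python dicts with a `label` field; measuring label skew with total variation distance, and the function names, are illustrative choices rather than the survey's.

```python
from collections import Counter


def missing_fields(records, required):
    """Completeness check: (row index, field) pairs that are absent or None."""
    return [(i, f) for i, r in enumerate(records)
            for f in required if r.get(f) is None]


def label_distribution(records, label="label"):
    """Relative frequency of each label value."""
    counts = Counter(r[label] for r in records)
    n = sum(counts.values())
    return {k: v / n for k, v in counts.items()}


def label_skew(train, test, label="label"):
    """Total variation distance between train and test label distributions.

    0.0 means identical distributions; 1.0 means disjoint label sets.
    """
    p = label_distribution(train, label)
    q = label_distribution(test, label)
    return 0.5 * sum(abs(p.get(k, 0.0) - q.get(k, 0.0))
                     for k in p.keys() | q.keys())


train = [{"x": 1, "label": "a"}, {"x": 2, "label": "a"},
         {"x": None, "label": "b"}, {"x": 4, "label": "b"}]
test = [{"x": 5, "label": "a"}] * 4
```

Here the third training record is incomplete, and the test set contains only one class, giving a skew of 0.5; the acceptable skew would have to come from the system's requirements.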

Machine Learning requires a lot of computations. As shown by Figure 6, ML frameworks offer algorithms to help write the learning program, and platforms to help train the machine learning model, making it easier for developers to build solutions for designing, training and validating algorithms and models for complex problems. They play a more important role in ML development than in traditional software development. ML Framework testing thus checks whether the frameworks of machine learning have bugs that may lead to problems in the final system [48].

A learning program can be classified into two parts: the algorithm designed by the developer or chosen from the framework, and the actual code that developers write to implement, deploy, or configure the algorithm. A bug in the learning program may arise either because the algorithm is designed, chosen, or configured improperly, or because the developers make typos or errors when implementing the designed algorithm.

3.4 ML Testing Properties

Testing properties refer to what to test in ML testing: the conditions that ML testing needs to guarantee for a trained model. This section lists some typical properties that the literature has considered. We classify them into basic functional requirements (i.e., correctness and model relevance) and non-functional requirements (i.e., efficiency, robustness³, fairness, interpretability).

These properties are not strictly independent of each other when considering their root causes, yet they are different external manifestations of the behaviours of an ML system and deserve to be treated independently in ML testing.

3.4.1 Correctness

Correctness measures the probability that the ML system under test ‘gets things right’.

Definition 3 (Correctness). Let $D$ be the distribution of future unknown data. Let $x$ be a data item belonging to $D$. Let $h$ be the machine learning model that we are testing. $h(x)$ is the predicted label of $x$, and $c(x)$ is the true label. The model correctness $E(h)$ is the probability that $h(x)$ and $c(x)$ are identical:

$$E(h) = \Pr_{x \sim D}\left[h(x) = c(x)\right] \qquad (1)$$

³ We adopt the more general understanding from the software engineering community [49], [50], and regard robustness as a non-functional requirement.

Achieving acceptable correctness is the fundamental requirement of an ML system. The real performance of an ML system should be evaluated on future data. Since future data are often not available, the current best practice usually splits the data into training data and test data (or training data, validation data, and test data), and uses test data to simulate future data. This data split approach is called cross-validation.

Definition 4 (Empirical Correctness). Let $X = (x_1, \ldots, x_m)$ be the set of unlabelled test data sampled from $D$. Let $h$ be the machine learning model under test. Let $Y' = (h(x_1), \ldots, h(x_m))$ be the set of predicted labels corresponding to each test item $x_i$. Let $Y = (y_1, \ldots, y_m)$ be the true labels, where each $y_i \in Y$ corresponds to the label of $x_i \in X$. The empirical correctness of the model (denoted as $\hat{E}(h)$) is:

$$\hat{E}(h) = \frac{1}{m} \sum_{i=1}^{m} \mathbb{I}\left(h(x_i) = y_i\right) \qquad (2)$$

where $\mathbb{I}$ is the indicator function: for a predicate $p$, $\mathbb{I}(p)$ returns 1 if $p$ is true and 0 otherwise.

3.4.2 Model Relevance

A machine learning model comes from the combination of a machine learning algorithm and the training data. It is important to ensure that the adopted machine learning algorithm is not more complex than needed [51]. Otherwise, the model may fail to perform well on future data, or have very large uncertainty.

The algorithm capacity represents the number of functions that a machine learning model can select (based on the training data at hand) as a possible solution. It is usually approximated by VC-dimension [52] or Rademacher Complexity [53] for classification tasks. VC-dimension is the cardinality of the largest set of points that the algorithm can shatter, i.e., label in every possible way. Rademacher Complexity instead measures, for training data of a fixed size, how well the functions the algorithm can select are able to fit random ±1 labels.
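Shattering can be checked by brute force on small point sets: a set of n points is shattered if all 2^n labelings are realisable by some function in the class. The sketch below uses two textbook hypothesis classes on the real line, thresholds (VC-dimension 1) and closed intervals (VC-dimension 2); these classes and the function names are illustrative choices, not taken from the survey. Shattering one set of size n shows the VC-dimension is at least n, whereas the upper bound requires that no set of size n + 1 is shattered, which a single failed example only illustrates.

```python
from itertools import product


def threshold_labelings(points):
    """Labelings realisable by h_t(x) = 1 if x >= t else 0."""
    # For a finite point set, thresholds at each point plus +inf suffice.
    candidates = sorted(points) + [float("inf")]
    return {tuple(int(x >= t) for x in points) for t in candidates}


def interval_labelings(points):
    """Labelings realisable by h_ab(x) = 1 if a <= x <= b else 0."""
    labelings = {tuple(0 for _ in points)}  # the empty interval
    for a in points:
        for b in points:
            if a <= b:
                labelings.add(tuple(int(a <= x <= b) for x in points))
    return labelings


def shatters(labelings_fn, points):
    """True if every one of the 2^n labelings of `points` is realisable."""
    realisable = labelings_fn(points)
    return all(lab in realisable
               for lab in product((0, 1), repeat=len(points)))
```

Thresholds shatter any single point but cannot produce the labeling (1, 0) on two ordered points; intervals shatter two points but cannot produce (1, 0, 1) on three.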

We define the relevance between a machine learning algorithm capacity and the data distribution as the problem of model relevance.

Definition 5 (Model Relevance). Let $D$ be the training data distribution. Let $R(D, A)$ be the simplest required capacity of any machine learning algorithm $A$ for $D$. $R'(D, A')$ is the capacity of the machine learning algorithm $A'$ under test. Model relevance is the difference between $R(D, A)$ and $R'(D, A')$:

$$f = \left|R(D, A) - R'(D, A')\right| \qquad (3)$$

Model relevance aims to measure how well a machine learning algorithm fits the data. Poor model relevance (a large $f$) is usually caused by overfitting, where the model is too complex for the data and thereby fails to generalise to future data or to predict observations robustly. Of course, $R(D, A)$, the minimum complexity that is ‘just sufficient’, is hard to determine and is typically approximated [42], [54]. We discuss more strategies that could help alleviate the problem of overfitting in Section 6.2.
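Because the required capacity R(D, A) is rarely computable, practitioners often fall back on a cruder overfitting signal: the gap between empirical correctness on the training data and on held-out data. The sketch below implements that proxy, which is not the survey's relevance measure f; the `max_gap` tolerance, the memorising model, and all names are hypothetical.

```python
def generalisation_gap(model, train, held_out):
    """Training accuracy minus held-out accuracy; each dataset is (xs, ys)."""
    def accuracy(xs, ys):
        return sum(model(x) == y for x, y in zip(xs, ys)) / len(xs)
    return accuracy(*train) - accuracy(*held_out)


def flags_overfitting(model, train, held_out, max_gap=0.1):
    """Flag a possible model-relevance problem when the gap exceeds max_gap."""
    return generalisation_gap(model, train, held_out) > max_gap


train = ([1, 2, 3, 4], ["a", "b", "a", "b"])
held_out = ([5, 6, 7, 8], ["b", "b", "a", "b"])
lookup = dict(zip(*train))


def memoriser(x):
    """Over-complex 'model' that memorises training labels, else guesses 'a'."""
    return lookup.get(x, "a")
```

The memoriser is perfect on its training data but only 25% correct on held-out data, so the gap of 0.75 is flagged. A small gap does not prove good relevance: an under-complex model can have a small gap and still perform poorly.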

3.4.3 Robustness

Robustness is defined by the IEEE standard glossary of software engineering terminology [55], [56] as: ‘The degree to which a system or component can function correctly in the presence of invalid inputs or stressful environmental conditions’. Adopting a similar spirit to this definition, we define the robustness of ML as follows:

Definition 6 (Robustness). Let $S$ be a machine learning system. Let $E(S)$ be the correctness of $S$. Let $\delta(S)$ be the machine learning system with perturbations on any machine learning components, such as the data, the learning program, or the framework. The robustness of a machine learning system is a measurement of the difference between $E(S)$ and $E(\delta(S))$:

$$r = E(S) - E(\delta(S)) \qquad (4)$$

Robustness thus measures the resilience of an ML system’s correctness in the presence of perturbations.
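Equation (4) can be estimated empirically by measuring correctness on clean inputs and again on perturbed inputs. A minimal sketch, assuming the perturbation δ is applied to the test data only (the definition also allows perturbing the learning program or the framework), with a hypothetical Gaussian-noise perturbation and a hypothetical 1-D threshold model.

```python
import random


def accuracy(model, xs, ys):
    """Empirical correctness of `model` on labelled data."""
    return sum(model(x) == y for x, y in zip(xs, ys)) / len(xs)


def robustness_drop(model, xs, ys, perturb):
    """Estimate r = E(S) - E(delta(S)) with delta applied to the inputs only."""
    perturbed = [perturb(x) for x in xs]
    return accuracy(model, xs, ys) - accuracy(model, perturbed, ys)


def gaussian_noise(sigma, seed=0):
    """Perturbation that adds zero-mean Gaussian noise with std `sigma`."""
    rng = random.Random(seed)
    return lambda x: x + rng.gauss(0.0, sigma)


def model(x):
    return int(x >= 0.5)


xs, ys = [0.0, 0.1, 0.9, 1.0], [0, 0, 1, 1]
```

A value of r close to 0 indicates that correctness is resilient to this particular perturbation; the estimate says nothing about perturbations that were not sampled.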

A popular sub-category of robustness is called adversarial robustness. For adversarial robustness, the perturbations are designed to be hard to detect. Following the work of Katz et al. [57], we classify adversarial robustness into local adversarial robustness and global adversarial robustness. Local adversarial robustness is defined as follows.

Definition 7 (Local Adversarial Robustness). Let $x$ be a test input for an ML model $h$. Let $x'$ be another test input generated via conducting adversarial perturbation on $x$. Model $h$ is $\delta$-local robust at input $x$ if for any $x'$:

$$\forall x' : \lVert x - x' \rVert_p \le \delta \rightarrow h(x) = h(x') \qquad (5)$$

Here $\lVert \cdot \rVert_p$ denotes the $p$-norm used as a distance measure. The values of $p$ commonly used in machine learning testing are 0, 2, and $\infty$. For example, when $p = 2$, $\lVert x - x' \rVert_2$ is the Euclidean distance between $x$ and $x'$. When $p = 0$, it counts the number of elements in which $x$ and $x'$ differ. When $p = \infty$, it measures the largest element-wise distance among all elements of $x$ and $x'$.
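The three distances can be computed directly, and Definition 7 suggests a simple falsification test: sample points x′ within distance δ of x and look for one whose prediction differs. A minimal sketch, assuming sampling uniformly in the L∞ ball; the linear decision rule and the function names are hypothetical. Random sampling can only find counterexamples; it cannot prove δ-local robustness, which requires exhaustive search or formal verification.

```python
import random


def l0(x, y):
    """Number of coordinates in which x and y differ."""
    return sum(a != b for a, b in zip(x, y))


def l2(x, y):
    """Euclidean distance."""
    return sum((a - b) ** 2 for a, b in zip(x, y)) ** 0.5


def linf(x, y):
    """Largest absolute coordinate-wise difference."""
    return max(abs(a - b) for a, b in zip(x, y))


def find_linf_counterexample(model, x, delta, trials=1000, seed=0):
    """Randomly search the L-infinity ball of radius delta around x for an x'
    with model(x') != model(x). Returns x', or None if none was found."""
    rng = random.Random(seed)
    label = model(x)
    for _ in range(trials):
        x_prime = [a + rng.uniform(-delta, delta) for a in x]
        if model(x_prime) != label:
            return x_prime
    return None


def model(v):
    return int(sum(v) >= 1.0)


x = [0.45, 0.45]
```

With δ = 0.01 no input in the ball can cross the decision boundary, so the search returns None; with δ = 0.2 a counterexample is found quickly.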

Local adversarial robustness concerns the robustness at one specific test input, while global adversarial robustness measures robustness against all inputs. We define global adversarial robustness as follows.

Definition 8 (Global Adversarial Robustness). Let $x$ be a test input for an ML model $h$. Let $x'$ be another test input generated via conducting adversarial perturbation on $x$. Model $h$ is $(\delta, \epsilon)$-global robust if for any $x$ and $x'$:

$$\forall x, x' : \lVert x - x' \rVert_p \le \delta \rightarrow h(x) - h(x') \le \epsilon \qquad (6)$$
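As a worked example (not from the survey), global robustness can be established analytically for a linear scoring function $h(x) = w^{\top}x + b$ under the L2 norm, writing δ for the bound on input distance and ε for the bound on output change in Equation (6):

```latex
\begin{aligned}
h(x) - h(x') &= w^{\top}(x - x') \\
             &\le \lVert w \rVert_2 \, \lVert x - x' \rVert_2
               && \text{(Cauchy--Schwarz)} \\
             &\le \lVert w \rVert_2 \, \delta
               && \text{whenever } \lVert x - x' \rVert_2 \le \delta .
\end{aligned}
```

Hence such an $h$ satisfies Equation (6) with $p = 2$ and $\epsilon = \lVert w \rVert_2 \, \delta$; the same argument with Hölder's inequality gives $\epsilon = \lVert w \rVert_1 \, \delta$ for $p = \infty$. For deep neural networks, the analogous bound depends on the network's Lipschitz constant, which is generally hard to compute exactly.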

3.4.4 Security

The security of an ML system is the system’s resilience against potential harm, danger, or loss made via manipulating or illegally accessing ML components. Security and robustness are closely related. An ML system with low robustness may be insecure: if it is less robust in resisting perturbations in the data to predict, it may more easily fall victim to adversarial attacks; if it is less robust in resisting training data perturbations, it may also be vulnerable to data poisoning (i.e., changes to the predictive behaviour caused by adversarially modifying the training data).

Nevertheless, low robustness is just one cause of security vulnerabilities. Besides perturbation attacks, security issues also include other aspects, such as model stealing or extraction. This survey focuses on testing techniques for detecting ML security problems, which narrows the security scope to robustness-related security. We combine the introduction of robustness and security in Section 6.3.

3.4.5 Data Privacy

Privacy in machine learning is the ML system’s ability to preserve private data information. For the formal definition, we use the most popular one, differential privacy, taken from the work of Dwork [58].

Definition 9 ($\epsilon$-Differential Privacy). Let $A$ be a randomised algorithm. Let $D_1$ and $D_2$ be two training data sets that differ in only one instance. Let $S$ be a subset of the output set of $A$. $A$ gives $\epsilon$-differential privacy if, for every such pair $D_1, D_2$ and every such $S$,

$$\Pr[A(D_1) \in S] \le \exp(\epsilon) \cdot \Pr[A(D_2) \in S] \qquad (7)$$

In other words, $\epsilon$-differential privacy is a form of $\epsilon$-contained bound on output change in response to a single input change. It provides a way to know whether any one individual’s data has a significant effect (bounded by $\epsilon$) on the outcome.
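A standard way to satisfy Definition 9, named here as a swapped-in illustration rather than anything discussed in the survey, is the Laplace mechanism: a counting query changes by at most 1 when one instance changes, so adding Laplace noise with scale 1/ε yields ε-differential privacy. The sketch also checks the defining inequality pointwise on output densities for two neighbouring counts; this density check is specific to this simple one-dimensional mechanism. Function names are hypothetical.

```python
import math
import random


def laplace_count(data, predicate, epsilon, rng=random):
    """Release a noisy count of records satisfying `predicate`.

    The count has sensitivity 1, so Laplace noise with scale 1/epsilon
    gives epsilon-differential privacy.
    """
    true_count = sum(1 for r in data if predicate(r))
    # The difference of two Exp(rate=epsilon) draws is Laplace(0, 1/epsilon).
    noise = rng.expovariate(epsilon) - rng.expovariate(epsilon)
    return true_count + noise


def laplace_density(y, center, epsilon):
    """Density at y of center + Laplace(0, 1/epsilon)."""
    return 0.5 * epsilon * math.exp(-epsilon * abs(y - center))


def max_density_ratio(count1, count2, epsilon, outputs):
    """Largest output-density ratio between two neighbouring datasets."""
    return max(laplace_density(y, count1, epsilon)
               / laplace_density(y, count2, epsilon) for y in outputs)


outputs = [y / 2 for y in range(-20, 60)]
ratio = max_density_ratio(10, 11, 0.5, outputs)
```

For neighbouring counts 10 and 11 the density ratio never exceeds exp(ε), and it reaches that bound for outputs at or below 10, showing that the guarantee is tight for this mechanism.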

Data privacy has been regulated by lawmakers, for example, through the EU General Data Protection Regulation (GDPR) [59] and the California Consumer Privacy Act (CCPA) [60]. Current research mainly focuses on how to provide privacy-preserving machine learning, rather than on detecting privacy violations. We discuss privacy-related research opportunities and directions in Section 10.

3.4.6 Efficiency

The efficiency of a machine learning system refers to its construction or prediction speed. An efficiency problem happens when the system executes slowly or even fails to terminate during the construction or the prediction phase. With the exponential growth of data and system complexity, efficiency is an important feature to consider for model selection and framework selection, sometimes even more important than accuracy [61]. For example, to deploy a large model to a mobile device, optimisation, compression, and device-oriented customisation may be performed so that it executes on the device in a reasonable time, possibly at the cost of accuracy.
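An efficiency test can be phrased like any other test: measure prediction latency and compare it with a budget taken from the requirements. A minimal sketch using Python's `time.perf_counter`; the 10 ms budget, the use of the median, and the function names are hypothetical choices, and meaningful measurements would need to be taken on the target device.

```python
import statistics
import time


def median_latency(predict, inputs, repeats=5):
    """Median wall-clock seconds per call of `predict` over `inputs`."""
    timings = []
    for _ in range(repeats):
        for x in inputs:
            start = time.perf_counter()
            predict(x)
            timings.append(time.perf_counter() - start)
    return statistics.median(timings)


def meets_latency_budget(predict, inputs, budget_seconds=0.010):
    """Efficiency oracle: pass if the median latency is within the budget."""
    return median_latency(predict, inputs) <= budget_seconds
```

The median is robust to occasional scheduler hiccups; a service-level requirement might instead specify a tail percentile such as the 99th.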

3.4.7 Fairness

Machine learning is a statistical method and is widely adopted to make decisions, such as income prediction and medical treatment prediction. Machine learning tends to learn what humans teach it (i.e., in the form of training data). However, humans may have cognitive biases, which can affect the data collected or labelled and the algorithm designed, leading to bias problems.

The characteristics that are sensitive and need to be protected against unfairness are called protected characteristics [62], protected attributes, or sensitive attributes. Examples of legally recognised protected classes include race, colour, sex, religion, national origin, citizenship, age, pregnancy, familial status, disability status, veteran status, and genetic information.

Fairness is often domain specific. Regulated domains include credit, education, employment, housing, and public accommodation⁴.

Formulating fairness is the first step towards solving fairness problems and building fair machine learning models. The literature has proposed many definitions of fairness, but no firm consensus has been reached so far. Considering that the definitions themselves are the research focus of fairness in machine learning, we discuss how the literature formulates and measures different types of fairness in Section 6.5.

3.4.8 Interpretability

Machine learning models are often applied to assist/make decisions in medical treatment, income prediction, or personal credit assessment. It may be important for humans to understand the ‘logic’ behind the final decisions, so that they can build trust in the decisions made by ML [64], [65], [66].

The motives and definitions of interpretability are diverse and still somewhat discordant [64]. Moreover, unlike fairness, ML interpretability still lacks a mathematical definition [65]. Referring to the work of Biran and Cotton [67] as well as the work of Miller [68], we describe the interpretability of ML as the degree to which an observer can understand the cause of a decision made by an ML system.

Interpretability contains two aspects: transparency (how the model works) and post hoc explanations (other information that could be derived from the model) [64]. Interpretability is also regarded as a requirement of regulations like GDPR [69], under which users have the legal ‘right to explanation’ of an algorithmic decision that was made about them. For a thorough introduction to ML interpretability, see the book by Christoph Molnar [70].

3.5 Software Testing vs. ML Testing

Traditional software testing and ML testing differ in many aspects. To understand the unique features of ML testing, we summarise the primary differences between traditional software testing and ML testing in Table 1.

1) Component to test (where the bug may exist): traditional software testing detects bugs in the code, while ML testing detects bugs in the data, the learning program, and the framework, each of which plays an essential role in building an ML model.

2) Behaviours under test: the behaviours of traditional software code are usually fixed once the requirement is fixed, while the behaviours of an ML model may frequently change as the training data is updated.

3) Test input: the test inputs in traditional software testing are usually the input data when testing code; in ML testing, however, the test inputs may take more diverse forms. Note that we separate the definitions of ‘test input’ and ‘test data’. In particular, we use ‘test input’ to refer to inputs in any form that can be adopted to conduct machine learning testing, while ‘test data’ specifically refers to the data used to validate ML model behaviour (see more in Section 2). Thus, test inputs in ML testing could be, but are not limited to, test data. When testing the learning program, a test case may be a single test instance from the test data or a toy training set; when testing the data, the test input could be a learning program.

⁴ To prohibit discrimination ‘in a place of public accommodation on the basis of sexual orientation, gender identity, or gender expression’ [63].

4) Test oracle: traditional software testing usually assumes the presence of a test oracle. The output can be verified against the expected values by the developer, and thus the oracle is usually determined beforehand. Machine learning, however, is used to generate answers based on a set of input values after being deployed online. The correctness of the large number of generated answers is typically manually confirmed. Currently, the identification of test oracles remains challenging, because many desired properties are difficult to formally specify. Even for a concrete domain specific problem, the oracle identification is still time-consuming and labour-intensive, because domain-specific knowledge is often required. In current practices, companies usually rely on third-party data labelling companies to get manual labels, which can be expensive. Metamorphic relations [71] are a type of pseudo oracle adopted to automatically mitigate the oracle problem in machine learning testing.

5) Test adequacy criteria: test adequacy criteria provide a quantitative measurement of the degree to which the target software has been tested. To date, many adequacy criteria have been proposed and widely adopted in industry, e.g., line coverage, branch coverage, and dataflow coverage. However, due to fundamental differences in programming paradigm and logic representation between machine learning software and traditional software, new test adequacy criteria are required that take the characteristics of machine learning software into consideration.

6) False positives in detected bugs: due to the difficulty in obtaining reliable oracles, ML testing tends to yield more false positives in the reported bugs.

7) Roles of testers: the bugs in ML testing may exist not only in the learning program, but also in the data or the algorithm, and thus data scientists or

…
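Metamorphic relations, mentioned in item 4) above as a pseudo oracle, replace an exact expected output with a relation that must hold between the outputs of related executions. A minimal sketch, assuming a hypothetical 1-nearest-neighbour classifier and two illustrative relations: shuffling the training set must not change any prediction (valid when nearest neighbours are unique, i.e. no distance ties), and adding the same constant to every feature of both training and test data must not change predictions either. Integer features are used so that distances are computed exactly.

```python
import random


def one_nn_predict(train, x):
    """Hypothetical 1-NN classifier; train is a list of (feature_tuple, label)."""
    def sq_dist(a, b):
        return sum((p - q) ** 2 for p, q in zip(a, b))
    return min(train, key=lambda item: sq_dist(item[0], x))[1]


def mr_permutation(predict, train, tests, seed=0):
    """MR1: shuffling the training set leaves every prediction unchanged."""
    shuffled = train[:]
    random.Random(seed).shuffle(shuffled)
    return all(predict(train, x) == predict(shuffled, x) for x in tests)


def mr_translation(predict, train, tests, shift=10):
    """MR2: shifting all features by a constant leaves predictions unchanged."""
    def move(v):
        return tuple(c + shift for c in v)
    moved = [(move(f), label) for f, label in train]
    return all(predict(train, x) == predict(moved, move(x)) for x in tests)


train = [((0, 0), "a"), ((0, 1), "a"), ((5, 5), "b"), ((6, 5), "b")]
tests = [(1, 1), (4, 4), (7, 7)]
```

Neither relation needs the true label of any test input, which is what makes metamorphic testing attractive when oracles are expensive. A predictor that ignores the training data and thresholds raw feature values, for example, violates the translation relation.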

10.2 Research Opportunities in ML testing

There remain many research opportunities in ML testing. These are not necessarily research challenges, but may greatly benefit machine learning developers and users as well as the whole research community.

Testing More Application Scenarios. Much current research focuses on supervised learning, in particular classification problems. More research is needed on problems associated with testing unsupervised and reinforcement learning.

The testing tasks currently tackled in the literature primarily centre on image classification. There remain exciting open testing research opportunities in many other areas, such as speech recognition, natural language processing, and agent/game play.

Testing More ML Categories and Tasks. We observed pronounced imbalance regarding the coverage of testing techniques for different machine learning categories and tasks, as demonstrated by Table 4. There are both challenges and research opportunities for testing unsupervised and reinforcement learning systems.

For instance, transfer learning, a topic gaining much recent interest, focuses on storing knowledge gained while solving one problem and applying it to a different but related problem [291]. Transfer learning testing is also important, yet poorly covered in the existing literature.

Testing Other Properties. From Figure 10, we can see that most work tests robustness and correctness, while relatively few papers (fewer than 3%) study efficiency, model relevance, or interpretability.

Model relevance testing is challenging because the distribution of the future data is often unknown, while the capacity of many models is also unknown and hard to measure. It might be interesting to conduct empirical studies on the prevalence of poor model relevance among ML models as well as on the balance between poor model relevance and high security risks.

For testing efficiency, there is a need to test the efficiency at different levels such as the efficiency when switching among different platforms, machine learning frameworks, and hardware devices.

For testing interpretability, existing approaches rely primarily on manual assessment, which checks whether humans can understand the logic or predictive results of an ML model. It would also be interesting to investigate the automatic assessment of interpretability and the detection of interpretability violations.

There is a lack of consensus regarding the definitions and understanding of fairness and interpretability. There is thus a need for clearer definitions, formalisation, and empirical studies under different contexts.

It has been argued that machine learning testing and traditional software testing may require different levels of assurance for different properties [292]. Therefore, more work is needed to explore and identify those properties that are most important for machine learning systems, and thus deserve more research and test effort.

- Presenting More Testing Benchmarks

A large number of datasets have been adopted in the existing ML testing papers. As Tables 5 to 8 show, these datasets are usually those adopted for building machine learning systems. As far as we know, there are very few benchmarks like CleverHans [2] that are specially designed for ML testing research purposes, such as adversarial example construction.

More benchmarks specially designed for ML testing are needed. For example, a repository of machine learning programs with real bugs would present a good benchmark for bug-fixing techniques. Such an ML testing repository would play a similar (and equally important) role to that played by data sets such as Defects4J [3] in traditional software testing.

- Covering More Testing Activities.

As far as we know, requirements analysis for ML systems remains absent from the ML testing literature. As demonstrated by Finkelstein et al. [205], [289], a good requirements analysis may tackle many non-functional properties such as fairness.

Existing work focuses on offline testing. Online testing deserves more research effort.

According to the work of Amershi et al. [8], data testing is especially important. This topic certainly deserves more research effort. Additionally, there are also many opportunities for regression testing, bug report analysis, and bug triage in ML testing.

Due to the black-box nature of machine learning algorithms, ML testing results are often more difficult for developers to understand, compared to traditional software testing. Visualisation of testing results might be particularly helpful in ML testing to help developers understand the bugs and help with the bug localisation and repair.

- Mutation Investigation in Machine Learning Systems.

There have been some studies discussing mutating machine learning code [128], [240], but no work has explored how to better design mutation operators for machine learning code so that the mutants could better simulate real-world machine learning bugs. This is another research opportunity.

11 CONCLUSION

We provided a comprehensive overview and analysis of research work on ML testing. The survey presented the definitions and current research status of different ML testing properties, testing components, and testing workflows. It also summarised the datasets used for experiments and the available open-source testing tools/frameworks, and analysed the research trends, directions, opportunities, and challenges in ML testing. We hope this survey will help software engineering and machine learning researchers to become familiar with the current status and open opportunities of and for ML testing.

References

| Page | Author | Title | Year |
|---|---|---|---|
| 2020 MachineLearningTestingSurveyLan | Mark Harman, Lei Ma, Yang Liu, Jie M. Zhang | Machine Learning Testing: Survey, Landscapes and Horizons | 2020 |

- [1] The existing related papers may use other terms like ‘defect’ or ‘issue’. This paper uses ‘bug’ as a representative of all such related terms, considering that ‘bug’ has a more general meaning [41].

- [2] https://github.com/tensorflow/cleverhans

- [3] https://github.com/rjust/defects4j