BERT Embedding System

A BERT Embedding System is a Deep Contextualized Word Representation System that is based on a BERT System.

- Example(s):

- Counter-Example(s):

- See: NLP System, Subword Embedding System, OOV Word, Deep Bidirectional Language Model, Bidirectional LSTM, CRF Training Task, Bidirectional RNN, Word Embedding, 1 Billion Word Benchmark.
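The "contextualized" part of the definition can be illustrated with a toy sketch. This is not BERT: it assumes a made-up three-word vocabulary with hand-picked vectors (`STATIC`) and a single self-attention pass with no learned weights, whereas a real BERT System uses subword tokenization and many trained Transformer layers. The helper names (`contextualize`, `softmax`, `dot`) are hypothetical. The sketch only shows that a representation conditioned on both left and right context gives the same word different vectors in different sentences, while a static lookup does not.

```python
import math

# Hypothetical static (context-free) embeddings; the values are made up.
STATIC = {
    "river": [1.0, 0.0, 0.0],
    "bank":  [0.0, 1.0, 0.0],
    "loan":  [0.0, 0.0, 1.0],
}

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def contextualize(tokens, bidirectional=True):
    """One unparameterized self-attention pass over the static embeddings."""
    vecs = [STATIC[t] for t in tokens]
    out = []
    for i, q in enumerate(vecs):
        # Bidirectional: attend to every position.
        # Left-to-right: attend only to positions up to and including i.
        visible = vecs if bidirectional else vecs[: i + 1]
        weights = softmax([dot(q, k) for k in visible])
        out.append([sum(w * v[d] for w, v in zip(weights, visible))
                    for d in range(len(q))])
    return out

# The same word "bank" in two different contexts.
bank_in_river = contextualize(["river", "bank"])[1]
bank_in_loan = contextualize(["bank", "loan"])[0]

# With a left-to-right restriction, a sentence-initial "bank" sees no context.
bank_left_only = contextualize(["bank", "loan"], bidirectional=False)[0]

print(bank_in_river)
print(bank_in_loan)
print(bank_left_only)
```

In this sketch the two bidirectional vectors for "bank" differ because each mixes in its neighbour, while the left-to-right vector for a sentence-initial "bank" falls back to the static embedding, since nothing to its right is visible.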

References

2019

- (Devlin et al., 2019) ⇒ Jacob Devlin, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova. (2019). “BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding.” In: Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (NAACL-HLT 2019), Volume 1 (Long and Short Papers). DOI:10.18653/v1/N19-1423. arXiv:1810.04805

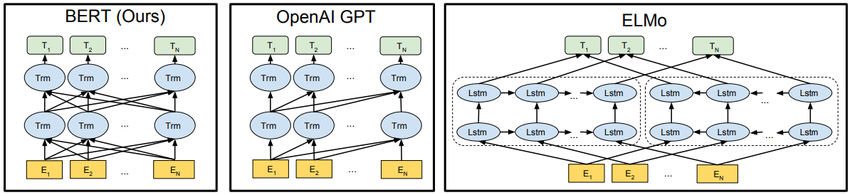

- QUOTE: We introduce a new language representation model called BERT, which stands for Bidirectional Encoder Representations from Transformers. Unlike recent language representation models, BERT is designed to pre-train deep bidirectional representations by jointly conditioning on both left and right context in all layers (...)
