Exploding Gradient Problem

An Exploding Gradient Problem is a Neural Network Training Algorithm problem that arises when using gradient descent and backpropagation.

- Context:

- It can be mitigated by using a Gradient Clipping Algorithm or a Weight Regularization Algorithm.

- …

- Example(s):

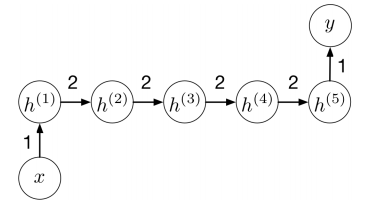

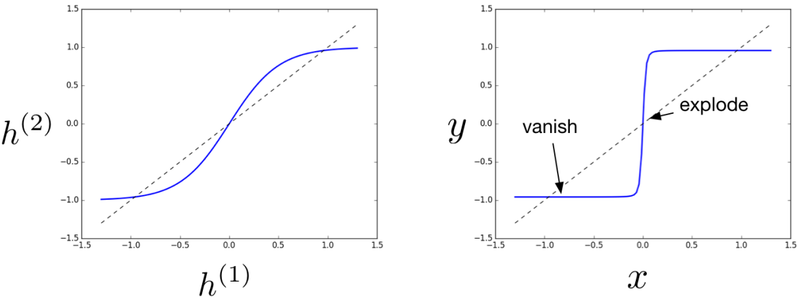

- Figure 3 in Grosse (2017).

- …

- Counter-Example(s):

- See: Recurrent Neural Network, Deep Neural Network Training Algorithm.
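The two mitigations named in the context above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not code from the cited sources: the function names `clip_by_global_norm` and `l2_penalty_grad`, and the parameters `max_norm` and `weight_decay`, are hypothetical, and gradients are represented as a flat list of floats.

```python
import math


def clip_by_global_norm(grads, max_norm):
    """Rescale gradients so that their L2 norm is at most max_norm.

    Returns the (possibly rescaled) gradients and the norm before clipping.
    The direction of the update is preserved; only its length is capped.
    """
    total_norm = math.sqrt(sum(g * g for g in grads))
    if total_norm <= max_norm or total_norm == 0.0:
        return list(grads), total_norm
    scale = max_norm / total_norm
    return [g * scale for g in grads], total_norm


def l2_penalty_grad(weights, weight_decay):
    """Gradient of the L2 penalty 0.5 * weight_decay * sum(w ** 2).

    Adding this term to the loss gradient pulls weights toward zero,
    which is one common form of weight regularization.
    """
    return [weight_decay * w for w in weights]


# Hypothetical usage: the gradient [3.0, 4.0] has norm 5.0,
# so it is scaled down to norm 1.0 (approximately [0.6, 0.8]).
clipped, norm_before = clip_by_global_norm([3.0, 4.0], max_norm=1.0)
```

Clipping bounds the size of each update without changing its direction, whereas the L2 penalty discourages the large weights that tend to produce large gradients in the first place.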

References

2021

- (DeepAI, 2021) ⇒ https://deepai.org/machine-learning-glossary-and-terms/exploding-gradient-problem Retrieved:2021-01-23.

- QUOTE: In machine learning, the exploding gradient problem is an issue found in training artificial neural networks with gradient-based learning methods and backpropagation. An artificial neural network is a learning algorithm, also called neural network or neural net, that uses a network of functions to understand and translate data input into a specific output. This type of learning algorithm is designed to mimic the way neurons function in the human brain. Exploding gradients are a problem when large error gradients accumulate and result in very large updates to neural network model weights during training. Gradients are used during training to update the network weights, and typically this process works best when these updates are small and controlled. When the magnitudes of the gradients accumulate, an unstable network is likely to occur, which can cause poor prediction results or even a model that reports nothing useful whatsoever. There are methods to fix exploding gradients, which include gradient clipping and weight regularization, among others. (...)

Exploding gradients can cause problems in the training of artificial neural networks. When there are exploding gradients, an unstable network can result and the learning cannot be completed. The values of the weights can also become so large as to overflow and result in something called NaN values. NaN values, which stands for "not a number", represent undefined or unrepresentable values. It is useful to know how to identify exploding gradients in order to correct the training.
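The overflow and NaN symptoms described in the quote can be checked for programmatically. The sketch below is an illustration only, not part of the DeepAI text: it assumes a scalar linear recurrence h_t = w * h_{t-1} as a stand-in for a recurrent network, and the names `gradient_through_time`, `looks_exploded`, and the default `norm_threshold` are hypothetical choices.

```python
import math


def gradient_through_time(w, steps):
    """Gradient of h_T with respect to h_0 for h_t = w * h_{t-1}.

    By the chain rule the gradient picks up one factor of w per time
    step, so it equals w ** steps and grows without bound when |w| > 1.
    """
    grad = 1.0
    for _ in range(steps):
        grad *= w  # float overflow yields inf rather than raising
    return grad


def looks_exploded(grads, norm_threshold=1e3):
    """Flag gradients that are non-finite (inf or NaN) or unusually large."""
    if not all(math.isfinite(g) for g in grads):
        return True
    norm = math.sqrt(sum(g * g for g in grads))
    return norm > norm_threshold


# Hypothetical usage: with w = 1.5 the gradient grows geometrically
# and eventually overflows to infinity.
moderate = gradient_through_time(1.5, 50)
overflowed = gradient_through_time(1.5, 5000)
print(looks_exploded([moderate]), math.isinf(overflowed))
```

In a real training loop a check of this kind would typically run on the gradient norm at each step, so that a sudden jump or a non-finite value can be caught before it corrupts the weights.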

2017

- (Grosse, 2017) ⇒ Roger Grosse (2017). "Lecture 15: Exploding and Vanishing Gradients". In: CSC321 Course, University of Toronto.

- QUOTE: To make this story more concrete, consider the following RNN, which uses the tanh activation function: