Vanishing Gradient Problem

A Vanishing Gradient Problem is a Machine Learning Training Algorithm problem that arises when using gradient descent and backpropagation.

- Context:

- It can be mitigated by using Residual Networks or Rectified Linear Units; a Gradient Clipping Algorithm instead addresses the related Exploding Gradient Problem.

- Example(s):

- Counter-Example(s):

- See: Recurrent Neural Network, Deep Neural Network Training Algorithm.

References

2021

- (DeepAI, 2021) ⇒ https://deepai.org/machine-learning-glossary-and-terms/vanishing-gradient-problem Retrieved:2021-01-23.

- QUOTE: The vanishing gradient problem is an issue that sometimes arises when training machine learning algorithms through gradient descent. This most often occurs in neural networks that have several neuronal layers such as in a deep learning system, but also occurs in recurrent neural networks. The key point is that the calculated partial derivatives used to compute the gradient [become smaller] as one goes deeper into the network. Since the gradients control how much the network learns during training, if the gradients are very small or zero, then little to no training can take place, leading to poor predictive performance.

2020

- (Roodschild et al., 2020) ⇒ Matias Roodschild, Jorge Gotay Sardinas, and Adrian Will (2020). "A New Approach for the Vanishing Gradient Problem on Sigmoid Activation". In: Progress in Artificial Intelligence, 9(4), 351-360.

- QUOTE: The vanishing gradient problem (VGP) is an important issue at training time on multilayer neural networks using the backpropagation algorithm. This problem is worse when sigmoid transfer functions are used, in a network with many hidden layers. However, the sigmoid function is very important in several architectures such as recurrent neural networks and autoencoders, where the VGP might also appear. In this article, we propose a modification of the backpropagation algorithm for the sigmoid neurons training. It consists of adding a small constant to the calculation of the sigmoid's derivative so that the proposed training direction differs slightly from the gradient while keeping the original sigmoid function in the network.
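The idea described in this abstract can be sketched in Python as follows. This is a minimal illustration and not the authors' implementation: the function names and the value `EPSILON = 0.05` are assumptions chosen for the example, and the paper's exact formulation may differ.

```python
import math

# Hypothetical value; the abstract only says "a small constant".
EPSILON = 0.05


def sigmoid(z: float) -> float:
    """Standard logistic sigmoid, left unchanged in the forward pass."""
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_derivative(z: float) -> float:
    """Exact derivative of the sigmoid: s(z) * (1 - s(z))."""
    s = sigmoid(z)
    return s * (1.0 - s)


def modified_sigmoid_derivative(z: float, epsilon: float = EPSILON) -> float:
    """Derivative used during backpropagation, with a small constant added.

    The result never falls below epsilon, even where the sigmoid saturates.
    """
    return sigmoid_derivative(z) + epsilon


# Compare the exact and modified derivatives at a few pre-activations.
for z in (0.0, 5.0, 10.0):
    print(z, sigmoid_derivative(z), modified_sigmoid_derivative(z))
```

Because only the derivative used in the backward pass changes, the resulting update direction is no longer the exact gradient, which matches the abstract's remark that it "differs slightly from the gradient".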

2018

- (Wikipedia, 2018) ⇒ https://en.wikipedia.org/wiki/vanishing_gradient_problem Retrieved:2018-3-27.

- QUOTE: In machine learning, the vanishing gradient problem is a difficulty found in training artificial neural networks with gradient-based learning methods and backpropagation. In such methods, each of the neural network's weights receives an update proportional to the gradient of the error function with respect to the current weight in each iteration of training. The problem is that in some cases, the gradient will be vanishingly small, effectively preventing the weight from changing its value. In the worst case, this may completely stop the neural network from further training. As one example of the problem cause, traditional activation functions such as the hyperbolic tangent function have gradients in the range (0, 1), and backpropagation computes gradients by the chain rule. This has the effect of multiplying n of these small numbers to compute gradients of the "front" layers in an n-layer network, meaning that the gradient (error signal) decreases exponentially with n while the front layers train very slowly.

Back-propagation allowed researchers to train supervised deep artificial neural networks from scratch, initially with little success. Hochreiter's diploma thesis of 1991 [1] [2] formally identified the reason for this failure in the "vanishing gradient problem", which not only affects many-layered feedforward networks, but also recurrent networks. The latter are trained by unfolding them into very deep feedforward networks, where a new layer is created for each time step of an input sequence processed by the network.

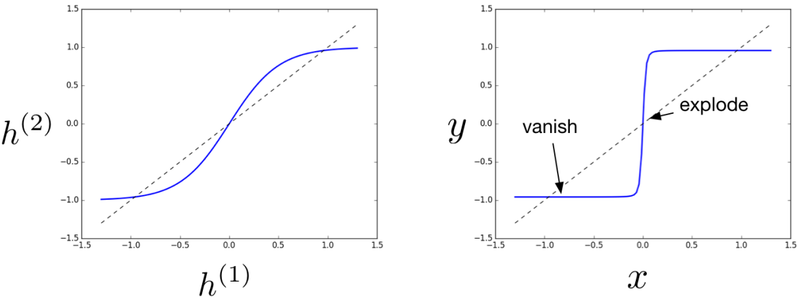

When activation functions are used whose derivatives can take on larger values, one risks encountering the related exploding gradient problem.
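The exponential decay described in this entry can be written out as a short bound. This is a sketch under simplifying assumptions that are not in the source: a chain of n scalar layers with [math]a_k = f(z_k)[/math] and [math]z_k = w_k a_{k-1} + b_k[/math], an error function E, and a uniform bound [math]|w_k f'(z_k)| \le \gamma \lt 1[/math] on every layer. By the chain rule:

[math]\displaystyle{ \frac{\partial E}{\partial a_1} = \left( \prod_{k=2}^{n} w_k f'(z_k) \right) \frac{\partial E}{\partial a_n} \quad \Longrightarrow \quad \left| \frac{\partial E}{\partial a_1} \right| \le \gamma^{\,n-1} \left| \frac{\partial E}{\partial a_n} \right| }[/math]

Under the opposite assumption, [math]|w_k f'(z_k)| \ge \gamma \gt 1[/math] for every layer, the same product grows at least as fast as [math]\gamma^{\,n-1}[/math], which corresponds to the exploding gradient case.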

- [1] S. Hochreiter. Untersuchungen zu dynamischen neuronalen Netzen. Diploma thesis, Institut f. Informatik, Technische Univ. Munich, 1991. Advisor: J. Schmidhuber.

- [2] S. Hochreiter, Y. Bengio, P. Frasconi, and J. Schmidhuber. Gradient flow in recurrent nets: the difficulty of learning long-term dependencies. In S. C. Kremer and J. F. Kolen, editors, A Field Guide to Dynamical Recurrent Neural Networks. IEEE Press, 2001.

2017

- (Grosse, 2017) ⇒ Roger Grosse (2017). "Lecture 15: Exploding and Vanishing Gradients". In: CSC321 Course, University of Toronto.

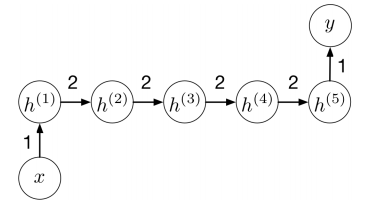

- QUOTE: To make this story more concrete, consider the following RNN, which uses the tanh activation function:

2015a

- (DL, 2015) ⇒ http://neuralnetworksanddeeplearning.com/chap5.html#discussion_why Why the vanishing gradient problem occurs

- QUOTE: To understand why the vanishing gradient problem occurs, let's explicitly write out the entire expression for the gradient: [math]\displaystyle{ \frac{\partial C}{\partial b_1} = \sigma'(z_1) \, w_2 \sigma'(z_2) \, w_3 \sigma'(z_3) \, w_4 \sigma'(z_4) \, \frac{\partial C}{\partial a_4}. \qquad (122) }[/math] Excepting the very last term, this expression is a product of terms of the form [math]w_j \sigma'(z_j)[/math]. To understand how each of those terms behave, let's look at a plot of the function [math]\sigma'[/math]:

[Figure: Derivative of sigmoid function, plotted against z from -4 to 4.]

The derivative reaches a maximum at [math]\sigma'(0) = 1/4[/math]. Now, if we use our standard approach to initializing the weights in the network, then we'll choose the weights using a Gaussian with mean 0 and standard deviation 1. So the weights will usually satisfy [math]|w_j| \lt 1[/math]. Putting these observations together, we see that the terms [math]w_j \sigma'(z_j)[/math] will usually satisfy [math]|w_j \sigma'(z_j)| \lt 1/4[/math]. And when we take a product of many such terms, the product will tend to exponentially decrease: the more terms, the smaller the product will be. This is starting to smell like a possible explanation for the vanishing gradient problem. ...

... Indeed, if the terms get large enough - greater than 1 - then we will no longer have a vanishing gradient problem. Instead, the gradient will actually grow exponentially as we move backward through the layers. Instead of a vanishing gradient problem, we'll have an exploding gradient problem.
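The argument in this excerpt can be checked numerically with a short Python sketch. It multiplies terms of the form w_j σ′(z_j) along a chain of scalar layers and reports the median magnitude of the product at several depths. The function names, the number of trials, the seed, and the choice to draw the pre-activations z_j from a standard Gaussian are all assumptions made for illustration; only the Gaussian weight initialization with mean 0 and standard deviation 1 comes from the quoted text.

```python
import math
import random


def sigmoid_prime(z: float) -> float:
    """Derivative of the logistic sigmoid; its maximum is 1/4 at z = 0."""
    s = 1.0 / (1.0 + math.exp(-z))
    return s * (1.0 - s)


def chain_factor(weights, zs) -> float:
    """Product of the terms w_j * sigmoid_prime(z_j) along the chain."""
    factor = 1.0
    for w, z in zip(weights, zs):
        factor *= w * sigmoid_prime(z)
    return factor


def median_abs_factor(depth: int, trials: int = 2001, seed: int = 0) -> float:
    """Median of |chain_factor| over random chains of the given depth."""
    rng = random.Random(seed)
    samples = []
    for _ in range(trials):
        # Weights: Gaussian, mean 0, standard deviation 1 (as in the quote).
        weights = [rng.gauss(0.0, 1.0) for _ in range(depth)]
        # Pre-activations: standard Gaussian (an assumption of this sketch).
        zs = [rng.gauss(0.0, 1.0) for _ in range(depth)]
        samples.append(abs(chain_factor(weights, zs)))
    samples.sort()
    return samples[len(samples) // 2]


# The median magnitude should shrink rapidly as the chain gets deeper.
for depth in (1, 2, 4, 8, 16):
    print(depth, median_abs_factor(depth))
```

Passing larger weights to `chain_factor`, for example `chain_factor([8.0] * 10, [0.0] * 10)`, gives terms equal to 2 and a product that grows with depth, which is the exploding case mentioned at the end of the excerpt.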

2015b

- (Quora, 2015) ⇒ http://www.quora.com/What-is-the-difference-between-deep-learning-and-usual-machine-learning/answer/Sebastian-Raschka-1?srid=uuoZN

- QUOTE: Now, this is where "deep learning" comes into play. Roughly speaking, you can think of deep learning as "clever" tricks or algorithms that can help you with training such "deep" neural network structures. There are many, many different neural network architectures, but to continue with the example of the MLP, let me introduce the idea of convolutional neural networks (ConvNets). You can think of those as an "addon" to your MLP that helps you to detect features as "good" inputs for your MLP.