Softsign Activation Function

A Softsign Activation Function is a neuron activation function that is based on the mathematical function: [math]\displaystyle{ f(x)= x/(1+|x|) }[/math].

- AKA: Softsign Sigmoid Function.

- Context:

- It can (typically) be used in the activation of Softsign Neurons.

- Example(s):

- torch.nn.Softsign,

- …

- Counter-Example(s):

- a Softmax-based Activation Function,

- a Rectified-based Activation Function,

- a Heaviside Step Activation Function,

- a Ramp Function-based Activation Function,

- a Logistic Sigmoid-based Activation Function,

- a Hyperbolic Tangent-based Activation Function,

- a Gaussian-based Activation Function,

- a Softshrink Activation Function,

- an Adaptive Piecewise Linear Activation Function,

- a Bent Identity Activation Function,

- a Maxout Activation Function.

- See: Artificial Neural Network, Artificial Neuron, Neural Network Topology, Neural Network Layer, Neural Network Learning Rate.
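The definition [math]\displaystyle{ f(x)= x/(1+|x|) }[/math] can be illustrated with a minimal pure-Python sketch. The function names `softsign` and `softsign_derivative` are chosen here for illustration and do not come from any cited library; the derivative [math]\displaystyle{ f'(x) = 1/(1+|x|)^2 }[/math] follows from differentiating the definition separately for positive and negative inputs.

```python
def softsign(x: float) -> float:
    """Softsign activation: x / (1 + |x|). Output lies strictly in (-1, 1)."""
    return x / (1.0 + abs(x))


def softsign_derivative(x: float) -> float:
    """Derivative of softsign: 1 / (1 + |x|)^2 (illustrative helper)."""
    return 1.0 / (1.0 + abs(x)) ** 2


if __name__ == "__main__":
    # Apply the activation element-wise to a few sample pre-activations.
    for value in (-3.0, -1.0, 0.0, 1.0, 3.0):
        print(value, softsign(value), softsign_derivative(value))
```

The function is odd-symmetric and bounded, so large-magnitude inputs map to values close to -1 or 1 without ever reaching them.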

References

2018a

- (PyTorch, 2018) ⇒ http://pytorch.org/docs/master/nn.html#softsign

- QUOTE:

class torch.nn.Softsign

Applies element-wise, the function [math]\displaystyle{ f(x)=\dfrac{x}{1+|x|} }[/math]

Shape:

- Input: [math]\displaystyle{ (N, *) }[/math] where * means any number of additional dimensions

- Output: [math]\displaystyle{ (N, *) }[/math], same shape as the input

- Examples:

>>> m = nn.Softsign()
>>> input = autograd.Variable(torch.randn(2))
>>> print(input)
>>> print(m(input))

forward(input)

Defines the computation performed at every call.

Should be overridden by all subclasses.

Note:

Although the recipe for forward pass needs to be defined within this function, one should call the Module instance afterwards instead of this since the former takes care of running the registered hooks while the latter silently ignores them.

2010

- (Glorot & Bengio, 2010) ⇒ Glorot, X., & Bengio, Y. (2010, March). "Understanding the difficulty of training deep feedforward neural networks". In: Proceedings of the Thirteenth International Conference on Artificial Intelligence and Statistics (pp. 249-256).

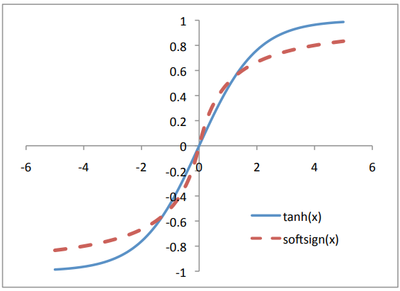

- QUOTE: We varied the type of non-linear activation function in the hidden layers: the sigmoid [math]\displaystyle{ 1/(1 + e^{-x}) }[/math], the hyperbolic tangent [math]\displaystyle{ \tanh(x) }[/math], and a newly proposed activation function (Bergstra et al., 2009) called the softsign, [math]\displaystyle{ x/(1 + |x|) }[/math]. The softsign is similar to the hyperbolic tangent (its range is -1 to 1) but its tails are quadratic polynomials rather than exponentials, i.e., it approaches its asymptotes much slower.
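The slower approach to the asymptote described in this quote can be checked numerically. The following is a small sketch using only the Python standard library; the helper name `gap_to_upper_asymptote` is hypothetical and simply measures how far an activation value is from 1 at a given positive input.

```python
import math


def softsign(x: float) -> float:
    """Softsign activation: x / (1 + |x|)."""
    return x / (1.0 + abs(x))


def gap_to_upper_asymptote(activation, x: float) -> float:
    """Distance between activation(x) and the upper asymptote 1 (illustrative helper)."""
    return 1.0 - activation(x)


if __name__ == "__main__":
    # Compare how quickly each function saturates as the input grows.
    for x in (1.0, 5.0, 10.0):
        print(
            x,
            gap_to_upper_asymptote(softsign, x),
            gap_to_upper_asymptote(math.tanh, x),
        )
```

For positive inputs the softsign gap equals [math]\displaystyle{ 1/(1+x) }[/math], which shrinks polynomially, whereas the tanh gap equals [math]\displaystyle{ 2/(1+e^{2x}) }[/math], which shrinks exponentially.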

2009

- (Bergstra et al., 2009) ⇒ Bergstra, J., Desjardins, G., Lamblin, P., & Bengio, Y. (2009). "Quadratic polynomials learn better image features (Technical Report 1337)". Département d’Informatique et de Recherche Opérationnelle, Université de Montréal.

- QUOTE: In order to have this sort of asymptotic behavior from a feature activation function, we experimented with the following alternative to the tanh function, which we will refer to as the softsign function,

[math]\displaystyle{ softsign(x) = \dfrac{x}{1 + |x|} }[/math] (5)

illustrated in Figure 1.

Figure 1: Standard sigmoidal activation function (tanh) versus the softsign, which converges polynomially instead of exponentially towards its asymptotes.

Finally, while not explicit in Equation 1, it is well known that the receptive field of simple and complex cells in the V1 area of visual cortex are predominantly local (Hubel & Wiesel, 1968). They respond mainly to regions spanning from about 1/4 of a degree up to a few degrees. This structure inspired the successful multilayer convolutional architecture of LeCun et al. (1998). Inspired by their findings, we experimented with local receptive fields and convolutional hidden units.

This paper is patterned after early neural network research: we start from a descriptive (not mechanical) model of neural activation, simplify it, advance a few variations on it, and use it as a feature extractor feeding a linear classifier. With experiments on both artificial and real data, we show that quadratic activation functions, especially with softsign sigmoid functions, and in sparse and convolutional configurations, have interesting capacity that is not present in standard classification models such as neural networks or support vector machines with standard kernels.
