2010 ContextualInformationImprovesOO

- (Parada et al., 2010) ⇒ Carolina Parada, Mark Dredze, Denis Filimonov, and Frederick Jelinek. (2010). “Contextual Information Improves OOV Detection in Speech.” In: Proceedings of the Human Language Technologies: Conference of the North American Chapter of the Association for Computational Linguistics (HLT-NAACL 2010).

Subject Headings: OOV Word; OOV Word Detection System; Large Vocabulary Continuous Speech Recognition (LVCSR) System; Maximum Entropy OOV Detection System; Word Error Rate (WER); OOV Corpus; Parada-HLTCOE MaxEnt OOV Detection System.

Notes

Cited By

- Google Scholar: ~ 97 Citations.

Quotes

Abstract

Out-of-vocabulary (OOV) words represent an important source of error in large vocabulary continuous speech recognition (LVCSR) systems. These words cause recognition failures, which propagate through pipeline systems impacting the performance of downstream applications. The detection of OOV regions in the output of a LVCSR system is typically addressed as a binary classification task, where each region is independently classified using local information. In this paper, we show that jointly predicting OOV regions, and including contextual information from each region, leads to substantial improvement in OOV detection. Compared to the state-of-the-art, we reduce the missed OOV rate from 42.6% to 28.4% at 10% false alarm rate.
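The operating point quoted in the abstract (missed OOV rate at a fixed false alarm rate) can be illustrated with a minimal sketch. It assumes each region carries a detector score and a binary OOV label, that the false alarm rate is the fraction of in-vocabulary regions flagged as OOV, and that the missed rate is the fraction of OOV regions left unflagged. The function name `miss_rate_at_fa` and the toy data are hypothetical and are not taken from the paper.

```python
def miss_rate_at_fa(scores, labels, max_fa=0.10):
    """Lowest missed-OOV rate over thresholds whose false alarm rate <= max_fa.

    scores: detector scores, higher means "more likely OOV" (assumption).
    labels: 1 for a true OOV region, 0 for an in-vocabulary region.
    Assumes both classes occur at least once.
    """
    n_oov = sum(labels)
    n_iv = len(labels) - n_oov
    best = 1.0  # threshold above every score: nothing flagged, all OOVs missed
    for t in sorted(set(scores)):
        flagged = [s >= t for s in scores]
        false_alarms = sum(1 for f, y in zip(flagged, labels) if f and not y)
        misses = sum(1 for f, y in zip(flagged, labels) if y and not f)
        if false_alarms / n_iv <= max_fa:
            best = min(best, misses / n_oov)
    return best


# Toy data: three OOV regions and three in-vocabulary regions.
toy_scores = [0.9, 0.8, 0.6, 0.4, 0.3, 0.1]
toy_labels = [1, 0, 1, 0, 1, 0]
toy_miss = miss_rate_at_fa(toy_scores, toy_labels, max_fa=0.34)
```

With so few regions the false alarm budget is set to 0.34 so that exactly one false alarm is allowed; on a realistic test set the paper's 10% operating point would correspond to `max_fa=0.10`.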

1. Introduction

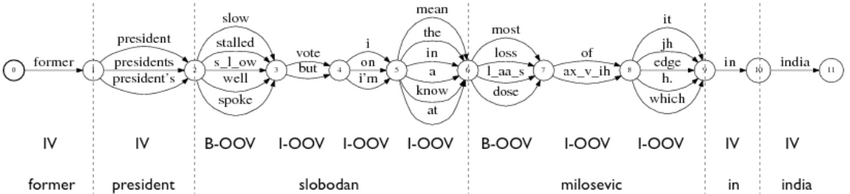

2. Maximum Entropy OOV Detection

Our baseline system is the Maximum Entropy model with features from filler and confidence estimation models proposed by Rastrow et al. (2009a). Based on filler models, this approach models OOVs by constructing a hybrid system which combines words and sub-word units. Sub-word units, or fragments, are variable length phone sequences selected using statistical methods (Siohan and Bacchiani, 2005). The vocabulary contains a word and a fragment lexicon; fragments are used to represent OOVs in the language model text. Language model training text is obtained by replacing low frequency words (assumed OOVs) by their fragment representation. Pronunciations for OOVs are obtained using grapheme to phoneme models (Chen, 2003).
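The preparation of hybrid language model text described above (low-frequency words replaced by fragment sequences) can be sketched roughly as follows. This is only an illustration: the frequency cutoff `min_count` and the helper `to_fragments` are hypothetical, and the helper simply chunks the spelling, whereas the quoted approach uses variable-length phone sequences derived from grapheme-to-phoneme pronunciations.

```python
from collections import Counter


def to_fragments(word, size=2):
    # Hypothetical stand-in for a fragment lexicon lookup: chunk the spelling
    # into fixed-size pieces. The real system uses statistically selected,
    # variable-length phone sequences, which this does not reproduce.
    return [f"<{word[i:i + size]}>" for i in range(0, len(word), size)]


def build_hybrid_text(sentences, min_count=2):
    """Replace words seen fewer than min_count times by fragment tokens."""
    counts = Counter(w for s in sentences for w in s.split())
    hybrid = []
    for s in sentences:
        tokens = []
        for w in s.split():
            if counts[w] < min_count:
                tokens.extend(to_fragments(w))  # treated as an assumed OOV
            else:
                tokens.append(w)  # kept as an in-vocabulary word
        hybrid.append(" ".join(tokens))
    return hybrid


corpus = ["the cat sat", "the cat ran", "the zebra sat"]
hybrid_corpus = build_hybrid_text(corpus)
```

A language model trained on text like `hybrid_corpus` sees both word and fragment tokens, which is what lets a hybrid recognizer emit fragments in regions where no in-vocabulary word fits.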

...

3. Experimental Setup

4. From MaxEnt to CRFs

5. Context for OOV Detection

6. Local Lexical Context

7. Global Utterance Context

8. Final System

9. Related Work

10. Conclusion and Future Work

Acknowledgments

The authors thank Ariya Rastrow for providing the baseline system code, Abhinav Sethy and Bhuvana Ramabhadran for providing the data used in the experiments and for many insightful discussions.

References

...

2009

- (Rastrow et al., 2009a) ⇒ Ariya Rastrow, Abhinav Sethy, and Bhuvana Ramabhadran. (2009). “A New Method for OOV Detection Using Hybrid Word/Fragment System.” In: Proceedings of the IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP 2009).

- ...

2005

- (Siohan & Bacchiani, 2005) ⇒ Olivier Siohan and Michiel Bacchiani. (2005). “Fast Vocabulary-Independent Audio Search Using Path-Based Graph Indexing.” In: Proceedings of the 9th European Conference on Speech Communication and Technology (Interspeech 2005 - Eurospeech).

- ...

2003

- (Chen, 2003) ⇒ Stanley F. Chen. (2003). “Conditional and Joint Models for Grapheme-to-Phoneme Conversion.” In: Proceedings of the 8th European Conference on Speech Communication and Technology (Eurospeech 2003 - Interspeech 2003).

- ...

BibTeX

@inproceedings{2010_ContextualInformationImprovesOO,
  author    = {Carolina Parada and
               Mark Dredze and
               Denis Filimonov and
               Frederick Jelinek},
  title     = {Contextual Information Improves {OOV} Detection in Speech},
  booktitle = {Proceedings of the Human Language Technologies: Conference of the North American Chapter of the Association for Computational Linguistics (HLT-NAACL 2010)},
  pages     = {216--224},
  publisher = {The Association for Computational Linguistics},
  year      = {2010},
  url       = {https://www.aclweb.org/anthology/N10-1025/},
}

| | Author | volume | Date Value | title | type | journal | titleUrl | doi | note | year |
|---|---|---|---|---|---|---|---|---|---|---|
| 2010 ContextualInformationImprovesOO | Mark Dredze, Carolina Parada, Denis Filimonov, Frederick Jelinek | | | Contextual Information Improves OOV Detection in Speech | | | | | | 2010 |