2015 ANeuralConversationalModel

- (Vinyals & Le, 2015) ⇒ Oriol Vinyals, and Quoc V. Le. (2015). “A Neural Conversational Model.” In: Proceedings of Deep Learning Workshop.

Subject Headings: Sequence-to-Sequence Learning Task, Neural Conversational Modelling Task.

Notes

Cited By

Quotes

Abstract

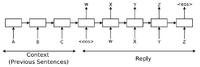

Conversational modeling is an important task in natural language understanding and machine intelligence. Although previous approaches exist, they are often restricted to specific domains (e.g., booking an airline ticket) and require hand-crafted rules. In this paper, we present a simple approach for this task which uses the recently proposed sequence to sequence framework. Our model converses by predicting the next sentence given the previous sentence or sentences in a conversation. The strength of our model is that it can be trained end-to-end and thus requires much fewer hand-crafted rules. We find that this straightforward model can generate simple conversations given a large conversational training dataset. Our preliminary results suggest that, despite optimizing the wrong objective function, the model is able to converse well. It is able to extract knowledge from both a domain specific dataset, and from a large, noisy, and general domain dataset of movie subtitles. On a domain-specific IT helpdesk dataset, the model can find a solution to a technical problem via conversations. On a noisy open-domain movie transcript dataset, the model can perform simple forms of common sense reasoning. As expected, we also find that the lack of consistency is a common failure mode of our model.
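
As a hedged illustration of the abstract's "predicting the next sentence given the previous sentence or sentences" formulation, the sequence-to-sequence framework is commonly written as the factorization below. The symbols $x$ (context tokens), $y$ (reply tokens), $\theta$ (model parameters), and $\mathcal{D}$ (training pairs) are notation assumed here for illustration; they do not appear in the abstract.

```latex
% Assumed notation: x = (x_1, ..., x_T) is the conversation context,
% y = (y_1, ..., y_{T'}) is the next sentence (the reply).
\begin{align}
  p_\theta(y \mid x) &= \prod_{t=1}^{T'} p_\theta\left(y_t \mid x,\, y_{<t}\right) \\
  \theta^{*} &= \arg\max_{\theta} \sum_{(x,\, y) \in \mathcal{D}} \log p_\theta(y \mid x)
\end{align}
```

Under this reading, training maximizes the likelihood of the observed next sentence token by token, which is not a direct measure of conversational quality. That mismatch is one plausible interpretation of the abstract's remark about "optimizing the wrong objective function."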

Figures

References

| | Author | title | year |
|---|---|---|---|
| 2015 ANeuralConversationalModel | Oriol Vinyals, Quoc V. Le | A Neural Conversational Model | 2015 |