2023 GraphofThoughtsSolvingElaborate

- (Besta et al., 2023) ⇒ Maciej Besta, Nils Blach, Ales Kubicek, Robert Gerstenberger, Lukas Gianinazzi, Joanna Gajda, Tomasz Lehmann, Michal Podstawski, Hubert Niewiadomski, Piotr Nyczyk, and Torsten Hoefler. (2023). “Graph of Thoughts: Solving Elaborate Problems with Large Language Models.” In: arXiv preprint arXiv:2308.09687. doi:10.48550/arXiv.2308.09687

Subject Headings: LLM-based Reasoning, Graph-of-Thoughts (GoT).

Notes

Cited By

2023

- code_your_own_AI. (2023). “Graph-of-Thoughts (GoT) for AI reasoning Agents". YouTube vlog post

Quotes

Abstract

We introduce Graph of Thoughts (GoT): a framework that advances prompting capabilities in large language models (LLMs) beyond those offered by paradigms such as Chain-of-Thought or Tree of Thoughts (ToT). The key idea and primary advantage of GoT is the ability to model the information generated by an LLM as an arbitrary graph, where units of information ("LLM thoughts") are vertices, and edges correspond to dependencies between these vertices. This approach enables combining arbitrary LLM thoughts into synergistic outcomes, distilling the essence of whole networks of thoughts, or enhancing thoughts using feedback loops. We illustrate that GoT offers advantages over state of the art on different tasks, for example increasing the quality of sorting by 62% over ToT, while simultaneously reducing costs by >31%. We ensure that GoT is extensible with new thought transformations and thus can be used to spearhead new prompting schemes. This work brings the LLM reasoning closer to human thinking or brain mechanisms such as recurrence, both of which form complex networks.

Website & code: https://github.com/spcl/graph-of-thoughts

1 Introduction

Large language models (LLMs) are taking over the world of AI. Recent years saw a rapid development of models primarily based on the decoder-only Transformer variant [64], such as GPT [52, 51, 14, 13], PaLM [19], or LLaMA [62]. Prompt engineering is a resource-efficient approach for solving different LLM tasks. In brief, one includes the task description within the input sent to an LLM. If this description is appropriately formulated, the LLM solves the task using its autoregressive token-based mechanism for generating text. Such prompts may contain example tasks with solutions (few-shot prompting, also referred to as in-context learning (ICL)), or even no example tasks at all (zero-shot prompting). Recent years shown that this mechanism can be used to solve a broad set of tasks that involve mathematical, commonsense, or symbolic reasoning.

Chain-of-Thought (CoT) [70] is an approach for prompting, in which one includes the intermediate steps of reasoning within the prompt (intermediate “thoughts”), besides the task input/output. CoT was shown to significantly improve the capability of LLMs to solve problems without resorting to any model updates. One major improvement over CoT, Self-Consistency with CoT (CoT-SC) [66], is a scheme where multiple CoTs are generated, and then the best one is selected as the outcome. More recently, CoT and CoT-SC were extended with Tree of Thoughts (ToT) [43, 76, 74], which models the LLM reasoning process with a tree. This facilitates using different paths of thoughts, and offers novel capabilities such as backtracking from non-promising outcomes. Unfortunately, the ToT approaches still fundamentally limit the reasoning abilities within a prompt by imposing the rigid tree structure on the thought process. In this work, we argue that fundamentally more powerful prompting can be achieved by enabling LLM thoughts to form an arbitrary graph structure. This is motivated by numerous phenomena such as human reasoning, brain structure, or algorithmic execution. When working on a novel idea, a human would not only follow a chain of thoughts (as in CoT) or try different separate ones (as in ToT), but would actually form a more complex network of thoughts. For example, one could explore a certain chain of reasoning, backtrack and start a new one, then realize that a certain idea from the previous chain could be combined with the currently explored one, and merge them both into a new solution, taking advantage of their strengths and eliminating their weaknesses. Similarly, brains form complex networks, with graph-like patterns such as recurrence [28]. Executing algorithms also expose networked patterns, often represented by Directed Acyclic Graphs. The corresponding graph-enabled transformations bring a promise of more powerful prompting when applied to LLM thoughts, but they are not naturally expressible with CoT or ToT.

We observe that these (and many other) thought transformations can be naturally enabled when modeling a reasoning process of an LLM as a graph. For this, we propose Graph of Thoughts (GoT), an approach that enhances LLMs’ capabilities through networked reasoning (contribution #1). In GoT, an LLM thought is modeled as a vertex, while an edge is a dependency between such thoughts. Using GoT, one can aggregate arbitrary thoughts by constructing vertices that have more than one incoming edge. Overall, the graph abstraction harnessed by GoT seamlessly generalizes CoT and ToT to more complex thought patterns, without resorting to any model updates. Yet, putting GoT to practice requires solving several design challenges. For example, what is the best graph structure for different tasks? How to best aggregate thoughts to maximize accuracy and minimize cost? To answer these and many other questions, we carefully design a modular architecture for implementing GoT (contribution #2), coming with two design highlights. First, we enable a fine-grained control over individual thoughts. This enables us to fully control the ongoing conversation with the LLM, and apply advanced thought transformations, such as combining most promising thoughts from the ongoing reasoning into a new one. Second, we ensure that our architecture can be seamlessly extended with novel thought transformations, patterns of reasoning (i.e., graphs of thoughts), and LLM models. This enables rapid prototyping of novel prompting ideas using GoT, while experimenting with different models such as GPT-3.5, GPT-4, or Llama-2 [63].

We illustrate several use cases for GoT (sorting, keyword counting for summaries, set operations, document merging) and we detail how to implement them using the graph-based paradigm (contribution #3). We evaluate GoT and show its advantages over the state of the art (contribution #4). Overall, we observe that GoT is particularly well-suited for tasks that can be naturally decomposed into smaller subtasks that are solved individually and then merged for a final solution. Here, GoT outperforms other schemes, for example improving upon CoT and ToT by, respectively, ≈70% and ≈62%, in terms of the quality of sorting, while simultaneously reducing costs by >31% over ToT.

We qualitatively compare GoT to other prompting schemes in Table 1. GoT is the only one to enable arbitrary graph-based thought transformations within a prompt, such as aggregation, embracing all previously proposed schemes.

Scheme Sc? Mc? Tr? Ag? Chain-of-Thought (CoT) [70] é é é Self-Consistency with CoT [66] é é Thought decomposition [74] é Tree-of-Thought (ToT) [43] é Tree of Thoughts (ToT) [76] é Graph of Thoughts (GoT)

- Table 1: Comparison of prompting schemes, with respect to the supported transformations of thoughts. “Sc?”:

single chain of thoughts? “Mc?”: multiple chains of thoughts? “Tr?”: tree of thoughts? “Ag?”: arbitrary graph of thoughts? “”: full support, “”: partial support, “é”: no support. Note that we do not include a recent scheme called Graph-of-Thought [78] because it is not a prompting scheme. While its name suggests close connections to ToT and CoT, as a fine-tuning scheme, it resorts to model updates, and is thus outside the focus of this work.

Finally, we propose a new metric for evaluating a prompting strategy, the volume of a thought (contribution #5). With this metric, we aim to understand better the differences between prompting schemes. For a given thought v, the volume of v is the number of LLM thoughts, from which one can reach v using directed edges. Intuitively, these are all the LLM thoughts that have had the potential to contribute to v. We show that GoT, by incorporating thought transformations such as aggregation, enables thoughts to have fundamentally larger volumes than other schemes.

2 Background & Notation

We first outline background concepts and notation.

2.1 Language Models & In-Context Learning =

The conversation with the LLM consists of user messages (prompts) and LLM replies (thoughts). We follow the established notation [76] and we denote a pre-trained language model (LM) with parameters θ as pθ. Lowercase letters such as x, y, z, ... indicate LLM thoughts. We purposefully do not prescribe what is a single “thought”, and instead make it use-case specific. Hence, a single thought can be a paragraph (e.g., in article summary), a document (e.g., in document generation), a block of code (e.g., in code debugging or optimization), and so on.

We next describe specific prompting approaches.

- Input-Output (IO)

The Input-Output (IO) prompting is a straightforward approach, in which we use an LLM to turn an input sequence x into the output y directly, without any intermediate thoughts.

- Chain-of-Thought (CoT)

Second, in Chain-of-Thought (CoT), one introduces intermediate thoughts a1, a2, ... between x and y. This strategy was shown to significantly enhance various LM tasks over the plain IO baseline, such as mathematical puzzles [70] or general mathematical reasoning [24].

- Multiple CoTs

Third, one can generalize CoT into multiple CoTs by generating several (independent) k CoTs, and returning the one with the best output (according to some prescribed scoring metric). It was introduced by Wang et al. in the scheme called Self-Consistency with CoT (CoTSC) [66]. This approach enhances CoT because it offers an opportunity to explore different reasoning paths. However, it does not offer “local exploration” within a path, such as backtracking.

- Tree of Thoughts (ToT)

Finally, the Tree of Though (ToT) scheme was introduced independently by Yao [76] and Long [43] (where it is referred to as Tree-of-Thought); it was used implicitly to a certain degree by other schemes such as thought decomposition [74]. It enhances CoT-SC by modeling the process or reasoning as a tree of thoughts. A single tree node represents a partial solution. Based on a given node, the thought generator constructs a given number k of new nodes. Then, the state evaluator generates scores for each such new node. Depending on the use case, the evaluation could be conducted using an LLM itself, or it can harness human scores. Finally, the schedule of extending the tree is dictated by the utilized search algorithm (for example BFS or DFS).

3 The GoT Framework

We now detail the GoT framework. We present it in Figure 1, and compare it to other prompting strategies.

Figure 1: Comparison of Graph of Thoughts (GoT) to other prompting strategies. Formally, GoT can be modeled as a tuple (G, T , E,R), where G is the “LLM reasoning process” (i.e., all the LLM thoughts within the context, with their relationships), T are Input Output Input Output Output Thoughts: Unscored Negative score Output Input Output [This work] Input Positive score Dependencies between thoughts Abandon thought Backtrack Basic Input- Output (IO) Legend Chain-of- Multiple CoTs (CoT-SC) -Thought (CoT) Tree of Thoughts (ToT) Graph of Thoughts (GoT) Key novelty: Intermediate LLM thoughts within a chain Branching out from a chain Selecting a chain with the best score Abandon a chain Key novelty (beyond CoT): Harnessing multiple independent chains of thoughts Key novelty (beyond CoT-SC): Generating several new thoughts based on a given arbitrary thought, exploring it further, and possibly backtracking from it Key novelty (beyond ToT): Arbitrary graph-based thought transformations (aggregating thoughts into a new one, looping over a thought to refine it) Backtracking Refining Aggregating thoughts Backtracking from a chain Intermediate thoughts are also scored Aggregating chains Input Figure 1: Comparison of Graph of Thoughts (GoT) to other prompting strategies. the potential thought transformations, E is an evaluator function used to obtain scores of thoughts, and R is a ranking function used to select most relevant thoughts.

3.1 Reasoning Process

We model the reasoning process as a directed graph G = (V,E); V is a set of vertices and E ⊆ V × V is a set of edges. G is directed and thus the edges are a subset of ordered vertex pairs E ⊆ V × V . A vertex contains a solution to a problem at-hand (be it an initial, intermediate, or a final one). The concrete form of such a thought depends on a use case; it could be a paragraph (in writing tasks) or a sequence of numbers (in sorting). A directed edge (t1, t2) indicates that thought t2 has been constructed using t1 as “direct input”, i.e., by explicitly instructing the LLM to use t1 for generating t2.

In certain use cases, graph nodes belong to different classes. For example, in writing tasks, some vertices model plans of writing a paragraph, while other vertices model the actual paragraphs of text. In such cases, GoT embraces a heterogeneous graph G = (V,E, c) to model the LLM reasoning, where c maps vertices V into their respective classes C (in the above case, it would be C = {plan, par}). Hence, any vertex v can model different aspects of reasoning. We associate G with the LLM reasoning process. To advance this process, one applies thought transformations to G. An example such transformation is to merge best-scoring (so far) thoughts into a new one. Another example is to loop over a thought, in order to enhance it. Note that these transformations strictly extend the set of transformations available in the CoT, CoT-SC, or ToT.

Graph t.he.o.ry view Example sorting task Example writing task Generation Aggregation ... 1 2 7 8 2 3 6 7 1 1 4 5 1 1 1 2 2 3 4 5 6 7 7 8 ... Article 1 Article 2 Article 3 Keyword summary A vertex models a thought. An edge models dependency ... ... Merging sorted subarrays into a sorted array of numbers Splitting an unsorted array into subarrays, for subsequent sorting Combining articles into a coherent summary ... Keyword summary 1 Keyword summary 2 1 4 6 2 4 2 4 9 8 7 5 4 1 4 6 2 4 2 4 9 8 7 5 4 Article 1 Generating summaries from an article, to maximize quality

Figure 2: Examples of aggregation and generation thought transformations.

3.2 Transformations of Thoughts

GoT enables novel transformations of thoughts thanks to the graph-based model for reasoning. We refer to them as graph-enabled transformations. For example, in writing, one could combine several input articles into one coherent summary. In sorting, one could merge several sorted subarrays of numbers into a final sorted array.We illustrate examples of aggregation and generation in Figure 2.

Formally, each such transformation can be modeled as T (G, pθ) where G = (V,E) is the graph reflecting the current state of the reasoning, and pθ is the used LLM. T modifies G usually by adding new vertices and their incoming edges. We have G′ = T (G, pθ) = (V ′,E′), where V ′ = (V ∪ V +) \ V − and E′ = (E ∪ E+) \ E−. V + and E+ are new vertices and edges inserted into G to model the new thoughts and their dependencies, respectively. To maximize the expressiveness of GoT – we also enable the user to explicitly remove thoughts, by specifying the corresponding vertices and edges to be removed (V − and E−, respectively). Here, it is the user’s responsibility to ensure that the sets V +,E+, V −, and E− come with consistent transformations (i.e., for example, that the user does not attempt to remove a vertex that does not exist). This enables seamless incorporation of schemes where, in order to save space within the context, one can remove parts of reasoning that do not promise improvements.

The specific form of T and how it impacts G depends on a specific transformation. We first detail the primary graph enabled thought transformations, and then proceed to describe how GoT embraces the transformations from the earlier schemes. Unless stated otherwise, V − = E− = ∅. Aggregation Transformations First, with GoT, one can aggregate arbitrary thoughts into new ones, to combine and reinforce the advantages of these thoughts, while eliminating their disadvantages. In the basic form, in which only one new vertex is created, V + = {v+} and E+ = {(v1, v+), ..., (vk, v+)}, where v1, ..., vk are the merged k thoughts. More generally, this enables aggregating reasoning paths, i.e., longer chains of thoughts, beyond just individual

thoughts. With the graph model, is it simply achieved

by adding outgoing edges from the vertices v1, ..., vk modeling final thoughts in several chains, into a single thought v+ combining these chains.

Refining Transformations Another thought transformation is the refining of a current thought v by modifying its content: V + = {} and E+ = {(v, v)}. This loop in the graph indicates an iterated thought with the same connections as the original thought.

Generation Transformations Finally, one can generate one or more new thoughts based on an existing single thought v. This class embraces analogous reasoning steps from earlier schemes, such as ToT or CoT-SC. Formally, we have V + = {v+ 1 , ..., v+ k } and E+ = {(v, v+ 1 ), ..., (v, v+ k )}.

3.3 Scoring & Ranking Thoughts

Thoughts are scored to understand whether the current solution is good enough. A score is modeled as a general function E(v, G, pθ), where v is a thought to be evaluated. We use the state of the whole reasoning process (G) in E for maximum generality, because – for example – in some evaluation scenarios, scores may be relative to other thoughts. GoT can also rank thoughts. We model this with a function R(G, pθ, h) where h specifies the number of highest-ranking thoughts in G to be returned by R. While the specific form of R depends on a use case, we most often use a simple yet effective strategy where h thoughts with highest scores are returned, i.e., v1, ..., vh = R(G, pθ, h). Specific forms of E and R depend on a use case. We discuss the details in Section 5. For example, the score (or rank) for sorting corresponds to the count of elements correctly sorted (or incorrectly, when obtaining the error as a score).

4 System Architecture & Extensibility

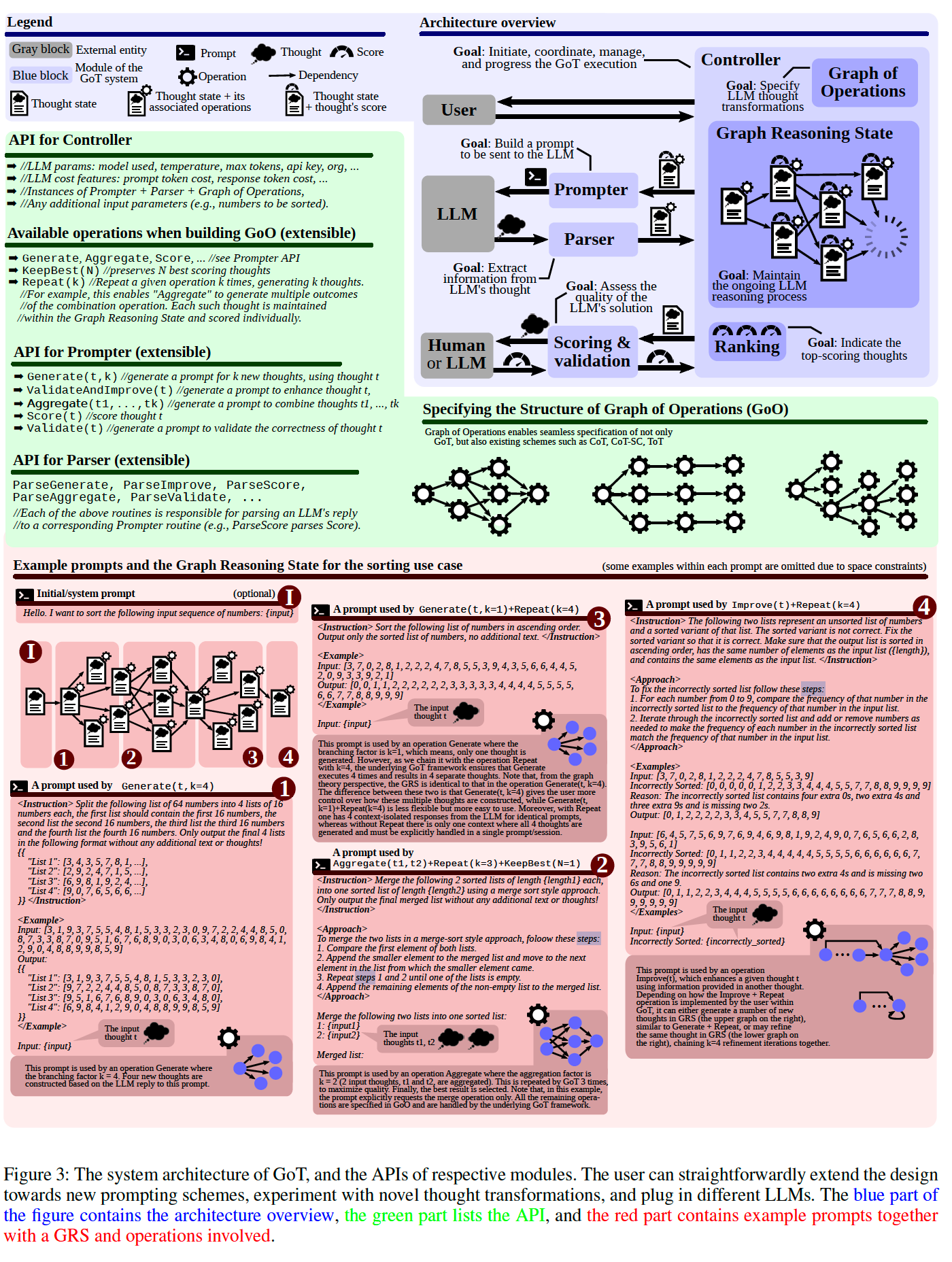

The GoT architecture consists of a set of interacting modules, see Figure 3 (the blue part). These modules are the Prompter (prepares the messages for the LLM), the Parser (extracts information from LLMs’ replies), the Scoring module (verifies and scores the LLM replies), and the Controller (coordinates the entire reasoning process, and decides on how to progress it). The Controller contains two further important elements: the Graph of Operations (GoO) and the Graph Reasoning State (GRS). GoO is a static structure that specifies the graph decomposition of a given task, i.e., it prescribes transformations to be applied to LLM thoughts, together with their order & dependencies. GRS is a dynamic structure that maintains the state of the ongoing LLM reasoning process (the history of its thoughts and their states).

4.1 Prompter

The Prompter prepares the prompt to be sent to the LLM. This module is responsible for the specifics of encoding the graph structure within the prompt. The GoT architecture enables the user to implement use-case specific graph encodings by providing full access to the graph structure.

4.2 Parser

The Parser extracts information from LLM’s thoughts. For each such thought, the Parser constructs the thought state, which contains this extracted information. The thought state is then used to update GRS accordingly.

4.3 Scoring & Validation

Here, we verify whether a given LLM’s thought satisfies potential correctness conditions, and then we assign it a score. Depending on how the score is derived, the module may consult the LLM. Moreover, depending on the use case, the score may also be assigned by a human. Finally, use cases such as sorting use simple local scoring functions.

4.4 Controller

The Controller implements a specific strategy for selecting thoughts from its GRS structure. It also selects what transformations should be applied to which thoughts, and then passes this information to the Prompter. It also decides whether the whole process should be finalized, or whether the next round of interaction with the LLM should be initiated. In our current design, this is dictated by the execution plan specified in GoO.

4.5 GoO & GRS

The user constructs a GoO instance, which prescribes the execution plan of thought operations. GoO is a static structure that is constructed once, before the execution starts. Each operation object knows its predecessor operations and successor operations. Then, during the execution, an instance of GoO maintains the continually updated information about the LLM reasoning process. This includes which operation has been executed so far, the states of all the generated LLM thoughts, their validity and scores, and any other relevant information.

The above elements offer extensible APIs, enabling straightforward implementations of different prompting schemes. The APIs are outlines in the green part of Figure 3, and detailed in the documentation. We also provide examples of prompts used by these operations and a corresponding GRS in the red part of Figure 3.

5 Example Use Cases

We now describe several use cases of GoT.

Goal: Build a prompt to be sent to the LLM Legend Architecture overview Example prompts and the Graph Reasoning State for the sorting use case (some examples within each prompt are omitted due to space constraints) A prompt used by Parser Goal: Extract information from LLM's thought Goal: Assess the quality of the LLM's solution Goal: Initiate, coordinate, manage, Controller and progress the GoT execution External entity Prompt Thought Thought state Score Operation Thought state + its associated operations Thought state + thought's score Module of the Dependency GoT system Graph of Goal: Specify Operations LLM thought transformations Graph Reasoning State Goal: Maintain the ongoing LLM reasoning process User Goal: Indicate the top-scoring thoughts Graph of Operations enables seamless specification of not only GoT, but also existing schemes such as CoT, CoT-SC, ToT API for Prompter (extensible) ➡ Generate(t,k) //generate a prompt for k new thoughts, using thought t ➡ //LLM params: model used, temperature, max tokens, api key, org, ... ➡ //LLM cost features: prompt token cost, response token cost, ... ➡ //Instances of Prompter + Parser + Graph of Operations, ➡ //Any additional input parameters (e.g., numbers to be sorted). //Each of the above routines is responsible for parsing an LLM's reply //to a corresponding Prompter routine (e.g., ParseScore parses Score). ➡ Score(t) //score thought t ➡ Validate(t) //generate a prompt to validate the correctness of thought t ➡ ValidateAndImprove(t) //generate a prompt to enhance thought t, ➡ Aggregate(t1,...,tk) //generate a prompt to combine thoughts t1, ..., tk API for Controller API for Parser (extensible) ParseGenerate, ParseImprove, ParseScore, ParseAggregate, ParseValidate, ... ➡ Generate, Aggregate, Score, ... //see Prompter API ➡ KeepBest(N) //preserves N best scoring thoughts ➡ Repeat(k) //Repeat a given operation k times, generating k thoughts. //For example, this enables "Aggregate" to generate multiple outcomes //of the combination operation. Each such thought is maintained //within the Graph Reasoning State and scored individually. Generate(t,k=1)+Repeat(k=4) Aggregate(t1,t2)+Repeat(k=3)+KeepBest(N=1) Available operations when building GoO (extensible) Specifying the Structure of Graph of Operations (GoO) Scoring & Ranking validation Prompter LLM Human or LLM Gray block Blue block A prompt used by <Instruction> Sort the following list of numbers in ascending order. Output only the sorted list of numbers, no additional text. </Instruction> <Example> Input: [3, 7, 0, 2, 8, 1, 2, 2, 2, 4, 7, 8, 5, 5, 3, 9, 4, 3, 5, 6, 6, 4, 4, 5, 2, 0, 9, 3, 3, 9, 2, 1] Output: [0, 0, 1, 1, 2, 2, 2, 2, 2, 2, 3, 3, 3, 3, 3, 4, 4, 4, 4, 5, 5, 5, 5, 6, 6, 7, 7, 8, 8, 9, 9, 9] </Example> Input: {input} <Instruction> Merge the following 2 sorted lists of length {length1} each, into one sorted list of length {length2} using a merge sort style approach. Only output the final merged list without any additional text or thoughts! </Instruction> <Approach> To merge the two lists in a merge-sort style approach, foloow these steps: 1. Compare the first element of both lists. 2. Append the smaller element to the merged list and move to the next element in the list from which the smaller element came. 3. Repeat steps 1 and 2 until one of the lists is empty. 4. Append the remaining elements of the non-empty list to the merged list. </Approach> Merge the following two lists into one sorted list: 1: {input1} 2: {input2} Merged list: This prompt is used by an operation Aggregate where the aggregation factor is k = 2 (2 input thoughts, t1 and t2, are aggregated). This is repeated by GoT 3 times, to maximize quality. Finally, the best result is selected. Note that, in this example, the prompt explicitly requests the merge operation only. All the remaining operations are specified in GoO and are handled by the underlying GoT framework. The input thought t The input thoughts t1, t2

This prompt is used by an operation Generate where the branching factor is k=1, which means, only one thought is generated. However, as we chain it with the operation Repeat with k=4, the underlying GoT framework ensures that Generate executes 4 times and results in 4 separate thoughts. Note that, from the graph theory perspective, the GRS is identical to that in the operation Generate(t, k=4). The difference between these two is that Generate(t, k=4) gives the user more control over how these multiple thoughts are constructed, while Generate(t, k=1)+Repeat(k=4) is less flexible but more easy to use. Moreover, with Repeat one has 4 context-isolated responses from the LLM for identical prompts, whereas without Repeat there is only one context where all 4 thoughts are generated and must be explicitly handled in a single prompt/session. A prompt used by Generate(t,k=4)

<Instruction> Split the following list of 64 numbers into 4 lists of 16 numbers each, the first list should contain the first 16 numbers, the second list the second 16 numbers, the third list the third 16 numbers and the fourth list the fourth 16 numbers. Only output the final 4 lists in the following format without any additional text or thoughts! {{ "List 1": [3, 4, 3, 5, 7, 8, 1, ...], "List 2": [2, 9, 2, 4, 7, 1, 5, ...], "List 3": [6, 9, 8, 1, 9, 2, 4, ...], "List 4": [9, 0, 7, 6, 5, 6, 6, ...] }} </Instruction> <Example> Input: [3, 1, 9, 3, 7, 5, 5, 4, 8, 1, 5, 3, 3, 2, 3, 0, 9, 7, 2, 2, 4, 4, 8, 5, 0, 8, 7, 3, 3, 8, 7, 0, 9, 5, 1, 6, 7, 6, 8, 9, 0, 3, 0, 6, 3, 4, 8, 0, 6, 9, 8, 4, 1, 2, 9, 0, 4, 8, 8, 9, 9, 8, 5, 9] Output: {{ "List 1": [3, 1, 9, 3, 7, 5, 5, 4, 8, 1, 5, 3, 3, 2, 3, 0], "List 2": [9, 7, 2, 2, 4, 4, 8, 5, 0, 8, 7, 3, 3, 8, 7, 0], "List 3": [9, 5, 1, 6, 7, 6, 8, 9, 0, 3, 0, 6, 3, 4, 8, 0], "List 4": [6, 9, 8, 4, 1, 2, 9, 0, 4, 8, 8, 9, 9, 8, 5, 9] }} </Example> Input: {input} This prompt is used by an operation Generate where the branching factor k = 4. Four new thoughts are constructed based on the LLM reply to this prompt. The input thought t Improve(t)+Repeat(k=4) <Instruction> The following two lists represent an unsorted list of numbers and a sorted variant of that list. The sorted variant is not correct. Fix the sorted variant so that it is correct. Make sure that the output list is sorted in ascending order, has the same number of elements as the input list ({length}), and contains the same elements as the input list. </Instruction> <Approach> To fix the incorrectly sorted list follow these steps: 1. For each number from 0 to 9, compare the frequency of that number in the incorrectly sorted list to the frequency of that number in the input list. 2. Iterate through the incorrectly sorted list and add or remove numbers as needed to make the frequency of each number in the incorrectly sorted list match the frequency of that number in the input list. </Approach> <Examples> Input: [3, 7, 0, 2, 8, 1, 2, 2, 2, 4, 7, 8, 5, 5, 3, 9] Incorrectly Sorted: [0, 0, 0, 0, 0, 1, 2, 2, 3, 3, 4, 4, 4, 5, 5, 7, 7, 8, 8, 9, 9, 9, 9] Reason: The incorrectly sorted list contains four extra 0s, two extra 4s and three extra 9s and is missing two 2s. Output: [0, 1, 2, 2, 2, 2, 3, 3, 4, 5, 5, 7, 7, 8, 8, 9] Input: [6, 4, 5, 7, 5, 6, 9, 7, 6, 9, 4, 6, 9, 8, 1, 9, 2, 4, 9, 0, 7, 6, 5, 6, 6, 2, 8, 3, 9, 5, 6, 1] Incorrectly Sorted: [0, 1, 1, 2, 2, 3, 4, 4, 4, 4, 4, 5, 5, 5, 5, 6, 6, 6, 6, 6, 6, 7, 7, 7, 8, 8, 9, 9, 9, 9, 9] Reason: The incorrectly sorted list contains two extra 4s and is missing two 6s and one 9. Output: [0, 1, 1, 2, 2, 3, 4, 4, 4, 5, 5, 5, 5, 6, 6, 6, 6, 6, 6, 6, 6, 7, 7, 7, 8, 8, 9, 9, 9, 9, 9, 9] </Examples> Input: {input} Incorrectly Sorted: {incorrectly_sorted} A prompt used by ... ...

This prompt is used by an operation Improve(t), which enhances a given thought t using information provided in another thought. Depending on how the Improve + Repeat operation is implemented by the user within GoT, it can either generate a number of new thoughts in GRS (the upper graph on the right), similar to Generate + Repeat, or may refine the same thought in GRS (the lower graph on the right), chaining k=4 refinement iterations together.

1 2 2 3 3 Initial/system prompt (optional) Hello. I want to sort the following input sequence of numbers: {input} I I 4 4 The input thought t 1

Figure 3: The system architecture of GoT, and the APIs of respective modules. The user can straightforwardly extend the design towards new prompting schemes, experiment with novel thought transformations, and plug in different LLMs. The blue part of the figure contains the architecture overview, the green part lists the API, and the red part contains example prompts together with a GRS and operations involved.

5.1 Sorting

Due to space constraints, we detail one use case (sorting). We focus on its decomposition and Graph of Operations, which are central for implementing and executing any workload within GoT.We consider sorting numbers 0–9 with duplicates. The considered LLMs are unable to sort a sequence of such numbers correctly beyond a certain length consistently because duplicate counts do not match.

In GoT, we employ merge-based sorting: First, one decomposes the input sequence of numbers into subarrays.

Then, one sorts these subarrays individually, and then respectively merges them into a final solution. Figure 4 illustrates this use case together with its graph decomposition. Here, an LLM thought is a sequence of sorted numbers. To score an outcome, denote an input sequence with [a1, a2, ..., an] and an output one with [b1, b2, ..., bm]. We use the following score that determines “the scope” of errors:

error-scope = X + Y where p ∈ {1, ...,m}, q ∈ {1, ..., n}, and X = mX−1 i=1 sgn(max(bi − bi+1, 0)), Y = X9 i=0 | |{bp : bp = i}| − |{aq : aq = i}| |

Here, X indicates how many consecutive pairs of numbers are incorrectly sorted. If two numbers i and i + 1 are incorrectly sorted (i.e., bi > bi+1), then the expression within the summation returns 1, increasing the error score by one. For two numbers correctly sorted, this expression amounts to 0. Then, Y determines how well a given output sequence preserves the frequency of output numbers. Specifically, for each considered number x (x ∈ {0, ..., 9}), we obtain the difference between the count of input elements being equal to x, vs. the count of output elements equal to x. For an output sequence perfectly preserving the frequency of x, this would amount to 0. Any single “deviation” in this count, increases the “error scope” by 1). We then sum this over all considered values of x. When plotting this score, to improve the clarity of plots, we additionally apply clipping min(error-scope, n), as some baselines (IO, CoT) result in large numbers of outliers with high error scope. Finally, to use a “positive score” describing “the scope of correctly sorted” elements, one can use the value max(n − error-scope, 0).

5.2 Set Operations

Moreover, we also consider set operations, focusing on set intersection. They have numerous applications (particularly set intersection) in problems ranging from genome or document comparisons to pattern matching [20, 57, 38, 1, 27, 49, 10, 9]. Set intersection of two sets is implemented similarly as the sorting. The second input set is split into subsets and the intersection of those subsets with the first input set is determined with the help of the LLM. Afterwards the resulting

..... ..... ..... ..... 1 4 ... 4 3 16 numbers 8 2 ... 1 3 16 numbers 1 1 ... 4 2 1 9 ... 5 4 16 numbers 16 numbers Sort Partial solution Graph of Operations (GoO) for sorting 64 numbers Partial solution Partial solution Partial solution N = 3 Generate(N) Score Sort Generate(N) Sort Generate(N) 1 2 ... 7 8 16 numbers 1 1 ... 5 7 16 numbers Partial solution Partial solution 1 3 ... 4 8 16 numbers Partial solution 1 2 ... 7 8 16 numbers 1 1 ... 5 7 16 numbers Partial solution Partial solution 1 3 ... 4 8 16 numbers Partial solution Score: 78% Score: 86% KeepBest(N) Keep the best scored thoughts N = 1 Merge into a 32 element subarray Aggregate(N) N = 10 N = 3 N = 3 Assess how well each sequence is sorted How do we score? 64 numbers 1 4 6 2 4 ... 9 8 7 5 4 Splitting into four 16-element chunks Input Generate(N) N = 4 Sort Generate(N) N = 3 Score: 100% 1 3 ... 4 8 16 numbers Partial solution Score: 100% 1 3 ... 4 6 16 numbers Partial solution Score: 97% ..... ..... ..... 1 1 ... 8 9 32 numbers Partial solution Score: 100% 1 3 ... 6 8 32 numbers Partial solution Score: 97% Merge into a 64 element subarray Aggregate(N) N = 2 S Score S Score S Score S Score K G Score G Score Score Score K K K A G K A A Score Score K K K K K G S A K G Legend Generate Details of the highlighted part of GoO are below Details of the highlighted part of GoO from above The first Generate splits the 64-element input array into four 16-element chunks. Sorting is implemented within the Generate operation. Here, N=3 means that, for each 16 element chunk, we generate three different sortings. Here, N=1 means that we maintain a single best sorting outcome out of the three input ones. Here, N=10 means that we try 10 different aggregations of the two input 16-element subarrays. To obtain the score, for every number 0 - 9, we get the difference between the input and the sorted list, and we sum all 10 values. Zero indicates correctly sorted. To show "the higher the better", we do max(input_length - score, 0) Note that this is an example graph decomposition. The structure of connections between all operations can be arbitrarily modified. Sort KeepBest Aggregate

Figure 4: An example graph decomposition of the sorting use case in GoT. All the used operations (Generate, Aggregate, Score, KeepBest) are described in Figure 3.

intersection sets are aggregated for the final results. For the evaluation we use different set sizes of 32, 64 and 128 elements and we vary the number of elements found in both sets to be between 25% and 75%.

Our score indicates the total number of missing or incorrectly included elements in the final intersection. Specifically, denote two input sets with A = [a1, a2, ..., an] and B = [b1, b2, ..., bn], and the output set with C = [c1, c2, ..., cm]. Then, error-scope = X1 + X2 + Xd

where X1 = |C \ (A∩B)| are the number of elements in C that are not supposed to be there, X2 = |(A∩B)\C| are the number of elements missing from C, and Xd is the number of duplicates in C (because the LLM expresses the set as a list in natural language). Finally, to use a “positive score” describing “the scope of correctly computed” elements, one can use the value max(n − error-scope, 0).

5.3 Keyword Counting

Keyword counting finds the frequency of keywords in a given category (countries in our example implementation) within the input text. GoT splits the input text into multiple passages, counts the keywords in each passage and aggregates the sub-results. The number of passages is configurable and can also be left to the LLM, making it possible to treat each sentence as a separate passage. Here, to score a thought, we first – for each keyword – derive the absolute difference between the computed count and the correct one. We then sum all these differences to get the final score.

5.4 Document Merging

Finally, we also provide document merging. Here, the goal is to generate a new Non-Disclosure Agreement (NDA) document based on several input ones that partially overlap in terms of their contents. The goal is to ensure minimal amount of duplication, while maximizing information retention. Document merging is broadly applicable in, e.g., legal procedures, where multiple sources of information have to be combined into a single document or article. To score a solution, we query the LLM for two values (3 times for each value, and take the average). The first value corresponds to the solution redundancy (10 indicates no redundancy, 0 implies at least half the information is redundant), the second value stands for information retention (10 indicates all information is retained, 0 says that none is retained).We compute the harmonic mean of these values.

6 The Latency-Volume Tradeoff

We now show that GoT improves upon previous prompting schemes in terms of the tradeoff between latency (number of hops in the graph of thoughts to reach a given final thought) and volume. We define volume – for a given thought t – as the number of preceding LLM thoughts that could have impacted t. Formally, the volume of t is the number of thoughts from which there exists a path to t in the graph of thoughts. We assume that outputting a single thought costs O(1) time and fix the total cost to Θ(n) for each prompting scheme. The structure of the schemes is as follows. CoT-SC consists of k independent chains originating from a single starting thought. ToT is a complete k-ary tree. Finally, in GoT, a complete k-ary tree is joined at its leaves with a “mirrored” k-ary tree of the same size but with its edges reversed. The analysis is detailed in Table 2. CoT offers a large volume of up to N, but at the cost of a high latency of N. CoTSC reduces the latency by a factor of k (which corresponds to its branching factor), but it simultaneously decreases the volume by k as well. ToT offers a latency of logk N but also has low volume. GoT is the only scheme to come with both a low latency of logk N and a high volume N. This is enabled by the fact that GoT harnesses aggregations of thoughts, making it possible to reach the final thought from any other intermediate thought in the graph decomposition.

Scheme Latency Volume Chain-of-Thought (CoT) N N Self-Consistency with CoT (CoT-SC) N/k N/k Tree of Thoughts (ToT) logk N O(logk N) Graph of Thoughts (GoT) logk N N Table 2: Comparison of prompting schemes, with respect to their fundamental tradeoff between latency and volume. GoT offers the best tradeoff.

7 Evaluation

We show the advantages of GoT over the state of the art.We focus on comparing GoT to ToT, as it was shown to consistently outperform other schemes. Still, for a broad comparison, we also experiment with IO, CoT, and CoT-SC. As our analysis results in a large evaluation space, we present representative results and omit data that does not bring relevant insights (e.g., CoT-SC).

7.1 Evaluation Methodology

We use 100 input samples for each task and comparison baseline. We set temperature to be 1.0 and we use 4k context unless stated otherwise. For each experiment, we fix the numbers of thoughts in respective schemes to achieve similar costs in each experiment.

Parameters We experiment extensively with the branching factor k and the number of levels L to ensure that we compare GoT to cost-effective and advantageous configurations. We plot two variants of ToT: one with higher k and lower depth (ToT), the other with lower k but higher L (ToT2). We usually aim to achieve a sweetspot in the tradeoff between sparser generation rounds (lower k) vs. more rounds (larger L). Usually more responses per round is more expensive (e.g., 80 vs. 60 total responses for Figure 7 but $6 vs. $3 costs).We also try different problem sizes P (e.g., in sorting, P states how many numbers are to be sorted).

Used LLMs Due to budget restrictions, we focus on GPT- 3.5, using GPT-4. We also experimented with Llama-2, but it was usually worse than GPT-3.5 and also much slower to run, making it infeasible to obtain enough samples. IO CoT ToT ToT2GoT 0 2 4 6 8 10 12 14 16

- incorrectly sorted elements; the lower the better

32 elements 0.0 0.2 0.4 0.6 0.8 1.0 1.2 1.4 1.6 IO CoT ToT ToT2GoT 0 4 8 12 16 20 24 28 32 36 40 44 48 52 56 60 64 64 elements 0.0 0.3 0.6 0.9 1.2 1.5 1.8 2.1 2.4 2.7 3.0 3.3 3.6 3.9 4.2 4.5 4.8 IO CoT ToT ToT2GoT 0 8 16 24 32 40 48 56 64 72 80 88 96 104 112 120 128 128 elements 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 Total Cost ($); the lower the better L=2 k=20 L=3 k=10 GoT: Figure 4 clipped L=4 k=20 L=7 k=10 GoT: Figure 4 clipped L=4 k=20 L=10 k=10 GoT: Figure 4

Figure 5: Number of errors and cost in sorting tasks with ChatGPT-3.5. L and k indicate the structure of ToT (see Sections 3.2 and 6).

7.2 Analysis of GoT’s Advantages

The results of analysis are in Figure 5 (sorting), 6 (set intersection), 7 (keyword counting), and 8 (document merging); see Section 5 for the description of specific use cases. Overall, GoT improves the quality of outcomes over all the considered baselines and it reduces inference costs compared to ToT.

GoT vs. ToT GoT improves upon ToT and ToT2 by a large margin over all the considered problem instances. ToT usually comes with somewhat higher quality than ToT2, but simultaneously much higher costs. GoT’s costs are always lower than ToT, and comparable (in some cases lower, in others higher) than ToT2. For example, it reduces median error by ≈62%, thereby achieving a higher quality of sorting, for P = 128 in comparison to ToT while ensuring >31% cost reductions. These advantages are due to GoT’s ability to decompose complex tasks into simpler sub-tasks, solve these sub-tasks independently, and then incrementally merge these outcomes into the final result.

GoT vs. IO and CoT GoT consistently delivers much higher quality of outcomes than IO/CoT. For example, for sorting (P = 64), GoT’s median error is ≈65% and ≈83% lower than, respectively, CoT and IO. Yet, the costs of GoT – and ToT – are much higher than in IO and CoT. This is mostly due to our configuration of CoT, where we do not artificially inflate the lengths of the chains of reasoning if this does not improve the outcomes. The higher costs of GoT and ToT are driven by k new thoughts built for each Generate operation; these multiple thoughts are one of the reasons for GoT’s superiority in quality.

Increasing Complexity of Tackled Problems Most importantly, the advantages of GoT in the quality increase for all the baselines with the growing size of the problem P. For example, in sorting, while for P = 32 GoT only negligibly improves upon ToT2, its median error count becomes lower by ≈61% for P = 64 and ≈69% for P = 128. The quartiles also become respectively better. The results for other schemes also follow the intuition; for example, IO becomes consistently worse with the increasing P, which is expected as a single thought is unlikely to solve a large problem instance. Overall, this analysis illustrates that GoT is indeed well-suited for elaborate problem cases, as the execution schedules usually become more complex with the growing problem sizes.

7.3 Discussion on Task Decomposition

When splitting a task into subtasks and then solving these subtasks, the size of responses and the input (in tokens) are reduced proportionally to the degree of task decomposition. However, the “static” part of the prompt (i.e., few-shot examples) may become a significant overhead (see GoT4 to GoT8 in Figure 7). Here, we observe that these few-shot examples can usually also be reduced in size (e.g., the passages used to demonstrate keyword counting can also be made smaller and still be indicative of the actual input size), thus actively working towards decreasing the cost (e.g., see the difference between GoT8 and GoTx in Figure 7). The overall goal when conducting graph decomposition is to break down a task to the point, where the LLM can solve it correctly for the majority of time using a single prompt (or with a few additional improvement steps). This significantly lowers the number of improvement/refinement steps needed during the later stages of the graph exploration. Furthermore, as indicated by our results, combining or concatenating sub-results is usually an easier task than solving large task instances from scratch. Hence, the LLM is often successful when aggregating the final solution.

IO CoT ToT ToT2GoT 0 2 4 6 8 10 12 14 16 18

- incorrect elements; the lower the better

7 6 31 29 43 32 elements 0.0 0.2 0.4 0.6 0.8 1.0 1.2 1.4 1.6 1.8 IO CoT ToT ToT2GoT 0 4 8 12 16 20 24 28 32 0 0 0 0 4 64 elements 0.0 0.6 1.2 1.8 2.4 3.0 3.6 4.2 4.8 IO CoT ToT ToT2GoT 0 8 16 24 32 40 48 56 64 72 80 88 0 0 0 0 0 128 elements 0 1 2 3 4 5 6 7 8 9 10 11 Total Cost ($); the lower the better L=2 k=20 Solved correctly: L=7 k=10 L=4 k=25 L=9 k=10 L=3 k=10 L=4 k=20

Figure 6: Number of errors and cost in set intersection with ChatGPT-3.5. L and k indicate the structure of ToT (see Sections 3.2 and 6).

IO CoT ToT ToT2 GoT4 GoT8 GoTx 0 5 10 15 20 25 30 35 Number of errors; the lower the better 0 0 1 0 8 7 25 0 1 2 3 4 5 6 7 8 Total Cost ($); the lower the better Samples solved correctly Splits the input text into 4 passages, counts keywords in each one, aggregates the subresults always 2 at a time L=4 k=20 L=6 k=10 Splits the input into sentences (each input has 12-19 sentences) As GoT4, but splits the input text into 8 passages

Figure 7: Number of errors and cost in keyword counting with ChatGPT-3.5. L and k indicate the structure of ToT (see Sections 3.2 and 6).

IO CoT ToT GoT GoT2 0 2 4 6 8 Score (out of 10); the higher the better 0 3 6 9 12 15 Total Cost ($); the lower the better L=3 k=10 Aggregation of fully merged NDAs Aggregation of partially merged NDAs

Figure 8: Score and cost in document merging with ChatGPT-3.5. L and k indicate the structure of ToT (see Sections 3.2 and 6). Number of samples: 50; context size: 16k tokens.

8 Related Work

We summarize relations between GoT and related work.

8.1 Prompting Paradigms & Approaches

We detail different prompting paradigms in Section 1 and Table 1. There are numerous other work related to prompting. We now briefly summarize selected most related ones; more extensive descriptions can be found in dedicated surveys [68, 40, 69, 34]. Wang et al. proposed Plan-and-Solve, an approach to enhance CoT with an explicit planning stage [65]. Using complexity-based criteria to enhance prompting within a CoT was designed by Fu et al. [66, 29]. The self-taught reasoner (STaR) [79] generates several chain of thoughts, and selects the ones that are valid. Similarly, a scheme by Shum et al. [60] generates a pool of CoT candidates, and selects the best candidate based on whether the candidates match the ground truth and on a policy gradient-based method. Automatic prompt generation overcomes the issues of scaling in CoT [58, 42, 41]. Zhou et al. proposes to harness selecting the best prompt out of a candidate set [83].

Finally, in prompt chaining, one cascades different LLMs. This enables prompting different LLMs via different contexts, enabling more powerful reasoning [21, 47, 72, 23, 50, 71, 72]. GoT is orthogonal to this class of schemes, as it focuses on a single context capabilities.

8.2 Self-Reflection & Self-Evaluation

Self-reflection and self-evaluation were introduced recently [59, 48, 45, 74]. They are used to enhance different tasks, for example for code generation [17] or computer operation tasks [39]. In GoT, we partially rely on self-evaluation when taking decisions on how to expand the graph of thoughts within a prompt.

8.3 LLMs & Planning

There are many works recently on how to plan complex tasks with LLMs [36, 80, 77, 75, 67, 37]. GoT could be seen as a generic framework that could potentially be used to enhance such schemes, by offering a paradigm for generating complex graph-based plans.

8.4 Graphs and Graph Computing

Graphs have become an immensely popular and important part of the general computing landscape [44, 46, 32, 31, 55]. Recently, there has been a growing interest in domains such as graph databases [53, 54, 11, 4, 5, 8], graph pattern matching [25, 18, 61, 10, 2, 1], graph streaming [26, 22, 3], and graph machine learning as well as graph neural networks [33, 73, 82, 81, 16, 33, 12, 6, 30, 56, 7]. The graph abstraction has been fruitful for many modern research domains, such as social sciences (e.g., studying human interactions), bioinformatics (e.g., analyzing protein structures), chemistry (e.g., designing chemical compounds), medicine (e.g., drug discovery), cybersecurity (e.g., identifying intruder machines), healthcare (e.g., exposing groups of people who submit fraudulent claims), web graph analysis (e.g., providing accurate search services), entertainment services (e.g., predicting movie popularity), linguistics (e.g., modeling relationships between words), transportation (e.g., finding efficient routes), physics (e.g., understanding phase transitions and critical phenomena), and many others [44, 20, 38, 35, 15]. In this work, we harness the graph abstraction as a key mechanism that enhances prompting capabilities in LLMs.

9 Conclusion

Prompt engineering is one of the central new domains of the large language model (LLM) research. It enables using LLMs efficiently, without any model updates. However, designing effective prompts is a challenging task.

In this work, we propose Graph of Thoughts (GoT), a new paradigm that enables the LLM to solve different tasks effectively without any model updates. The key idea is to model the LLM reasoning as an arbitrary graph, where thoughts are vertices and dependencies between thoughts are edges. This enables novel transformations of thoughts, such as aggregation. Human’s task solving is often non-linear, and it involves combining intermediate solutions into final ones, or changing the flow of reasoning upon discovering new insights. GoT reflects this with its graph structure.

GoT outperforms other prompting schemes, for example ensuring 62% increase in the quality of sorting over ToT, while simultaneously reducing costs by >31%.We also propose a novel metric for a prompting scheme, the volume of a thought, to indicate the scope of information that a given LLM output could carry with it, where GoT also excels. This provides a step towards more principled prompt engineering. The graph abstraction has been the

References

;

| Author | volume | Date Value | title | type | journal | titleUrl | doi | note | year | |

|---|---|---|---|---|---|---|---|---|---|---|

| 2023 GraphofThoughtsSolvingElaborate | Maciej Besta Nils Blach Ales Kubicek Robert Gerstenberger Lukas Gianinazzi Joanna Gajda Tomasz Lehmann Michal Podstawski Hubert Niewiadomski Piotr Nyczyk Torsten Hoefler | Graph of Thoughts: Solving Elaborate Problems with Large Language Models | 10.48550/arXiv.2308.09687 | 2023 |