ContractNLI Dataset

A ContractNLI Dataset is a document-level natural language inference dataset that provides 607 annotated non-disclosure agreements with 17 fixed hypotheses for evaluating contract review systems through three-class inference classification and evidence span identification.

- AKA: Contract Natural Language Inference Dataset, Contract-NLI Corpus, Stanford Contract NLI Dataset, Document-Level Contract Inference Dataset, Contract Hypothesis Verification Dataset.

- Context:

- It can typically contain 607 non-disclosure agreements annotated with 17 hypothesis statements applied uniformly across all contract documents.

- It can typically support three-class classification distinguishing entailment, contradiction, and not mentioned relationships between contract text and hypotheses.

- It can typically require evidence identification as multi-label binary classification over text spans when the NLI label is entailment or contradiction.

- It can typically include 37,000+ premise-hypothesis pairs covering diverse contracts like master service agreements, NDAs, and settlement agreements.

- It can typically feature manually written hypotheses created by law students based on contract review scenarios.

- It can typically present imbalanced label distributions with not mentioned cases being most frequent in real-world contracts.

- It can typically challenge models with contract-specific linguistic patterns including negation by exceptions and conditional clauses.

- It can typically serve as the first NLI dataset for contracts and the largest annotated contract corpus as of 2021.

- It can typically provide comprehensive evidence annotations where redundant spans must be identified even if non-contiguous.

- It can typically enable multi-task learning combining inference classification with evidence extraction.

- It can often facilitate automated contract review by verifying whether contractual claims are supported by actual contract text.

- It can often support contract consistency checking by identifying contradictory provisions within legal agreements.

- It can often provide interpretable predictions through evidence highlighting for legal professionals.

- It can often serve as challenging benchmark due to scarce training data, complex legal language, and document-length context.

- It can often enable cross-dataset evaluation when combined with CUAD, LEDGAR, and other legal NLP datasets.

- It can often support few-shot learning experiments given its limited training size and fixed hypothesis set.

- It can range from being a Binary NLI Dataset to being a Three-Class NLI Dataset, depending on its classification scheme.

- It can range from being a Sentence-Level Evidence Dataset to being a Document-Level Evidence Dataset, depending on its context scope.

- It can range from being a Single-Domain Contract Dataset to being a Multi-Domain Contract Dataset, depending on its contract type coverage.

- It can range from being a English-Only Dataset to being a Multilingual Extension, depending on its language scope.

- It can range from being a Research Benchmark to being a Production Training Set, depending on its deployment context.

- ...

- Examples:

- ContractNLI Inference Examples, such as:

- Entailment Example:

- Premise: "This Agreement shall be binding upon and inure to the benefit of the parties hereto and their respective successors and assigns."

- Hypothesis: "The Agreement is binding on the parties."

- Label: Entailment with evidence span highlighting binding clause.

- Contradiction Example:

- Premise: "This Agreement may be terminated by either party upon 30 days written notice."

- Hypothesis: "The Agreement cannot be terminated by the parties."

- Label: Contradiction with evidence span showing termination provision.

- Neutral Example:

- Premise: "This Agreement shall commence on January 1, 2022."

- Hypothesis: "The Agreement commences in the summer."

- Label: Not Mentioned as seasonal reference is absent.

- Entailment Example:

- ContractNLI Hypothesis Types, such as:

- Termination-Related Hypotheses about contract ending conditions and survival clauses.

- Obligation-Related Hypotheses concerning party duties and performance requirements.

- Confidentiality-Related Hypotheses regarding information protection and disclosure restrictions.

- Assignment-Related Hypotheses about transfer rights and succession provisions.

- Liability-Related Hypotheses covering indemnification and damage limitations.

- ContractNLI Dataset Components, such as:

- ContractNLI Training Split with annotated NDAs for model development.

- ContractNLI Development Split for hyperparameter tuning and model selection.

- ContractNLI Test Split with held-out contracts for final evaluation.

- ContractNLI Evidence Annotations marking all supporting spans comprehensively.

- ContractNLI Hypothesis Set with 17 fixed statements across all documents.

- ContractNLI Evaluation Metrics, such as:

- ContractNLI Applications, such as:

- Contract Review Automation Systems using NLI for clause verification.

- Legal Compliance Platforms checking regulatory requirements against contract terms.

- Contract Intelligence Tools providing interpretable analysis for legal professionals.

- Due Diligence Systems identifying contractual risks through hypothesis testing.

- ...

- ContractNLI Inference Examples, such as:

- Counter-Examples:

- CUAD Dataset, which focuses on clause extraction and span selection rather than inference classification.

- LEDGAR Dataset, which performs provision categorization rather than hypothesis verification.

- LEXTREME Benchmark, which provides multilingual tasks rather than English-only NLI.

- LegalBench, which offers diverse legal tasks rather than focusing on contract inference.

- General NLI Datasets like SNLI or MultiNLI, which lack legal domain specificity and contract focus.

- SQuAD Dataset, which performs question answering rather than inference determination.

- See: ContractNLI Task, Document-Level Natural Language Inference, Legal Contract Review, Natural Language Inference Dataset, Legal NLP Benchmark, Contract-Focused AI System, Evidence Identification Task, Stanford NLP Group, Contract Understanding Task, Hypothesis Verification, Legal Language Processing, Contract Analysis Automation, Yuta Koreeda, Christopher D. Manning, EMNLP 2021, GitHub Repository.

References

2023

- (Ghosh et al., 2023) ⇒ Sreyan Ghosh, Chandra Kiran Evuru, Sonal Kumar, S. Ramaneswaran, S. Sakshi, Utkarsh Tyagi, and Dinesh Manocha. (2023). “DALE: Generative Data Augmentation for Low-Resource Legal NLP.” doi:10.48550/arXiv.2310.15799

- NOTES:

- It contains annotated premise-hypothesis pairs extracted from contracts to classify their logical relationship as entailment, contradiction or neutral.

- The premises are extracts from contract sentences while hypotheses are manually written by law students.

- It has 37k pairs covering diverse contracts like MSAs, NDAs, settlements etc. from various sources.

- NOTES:

2021a

- (Koreeda & Manning, 2021a) ⇒ Yuta Koreeda, and Christopher D. Manning. (2021). “ContractNLI: A Dataset for Document-level Natural Language Inference for Contracts.” doi:10.48550/arXiv.2110.01799

- QUOTE: ... Our contributions are as follows:

1) We annotated and release [1] a dataset consisting of 607 contracts. This is the first dataset to utilize NLI for contracts and is also the largest corpus of annotated contracts. ...

- QUOTE: ... Our contributions are as follows:

2021b

- (GitHub, 2021) ⇒ https://stanfordnlp.github.io/contract-nli/

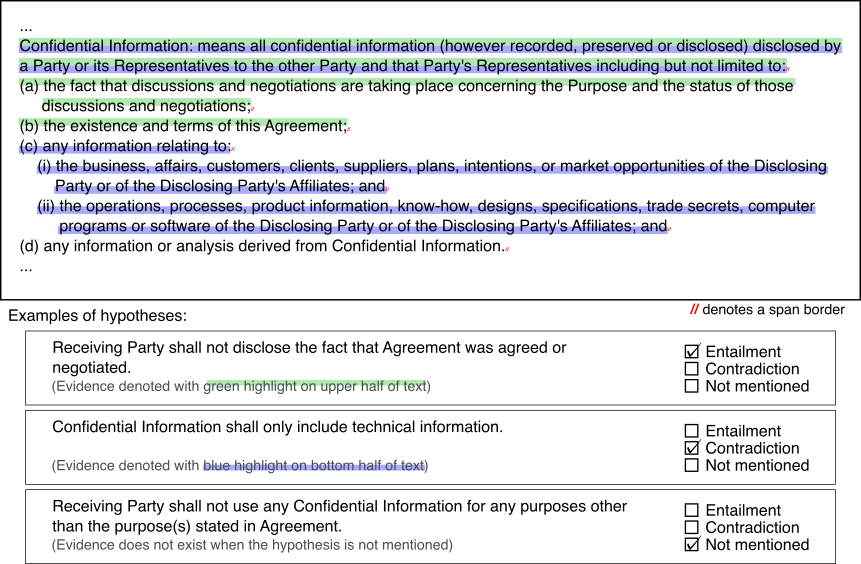

- QUOTE: ContractNLI is a dataset for document-level natural language inference (NLI) on contracts whose goal is to automate/support a time-consuming procedure of contract review. In this task, a system is given a set of hypotheses (such as “Some obligations of Agreement may survive termination.”) and a contract, and it is asked to classify whether each hypothesis is entailed by, contradicting to or not mentioned by (neutral to) the contract as well as identifying evidence for the decision as spans in the contract.

An overview of document-level NLI for contracts

ContractNLI is the first dataset to utilize NLI for contracts and is also the largest corpus of annotated contracts (as of September 2021). ContractNLI is an interesting challenge to work on from a machine learning perspective (the label distribution is imbalanced and it is naturally multi-task, all the while training data being scarce) and from a linguistic perspective (linguistic characteristics of contracts, particularly negations by exceptions, make the problem difficult).

Details of ContractNLI can be found in our paper that was published in “Findings of EMNLP 2021”. If you have a question regarding our dataset, you can contact us by emailing koreeda@stanford.edu or by creating an issue in this repository.

- Dataset specification

More formally, the task consists of:

- Natural language inference (NLI): Document-level three-class classification (one of Entailment, Contradiction or NotMentioned).

- Evidence identification: Multi-label binary classification over span_s, where a _span is a sentence or a list item within a sentence. This is only defined when NLI label is either Entailment or Contradiction. Evidence spans need not be contiguous but need to be comprehensively identified where they are redundant.

- We have 17 hypotheses annotated on 607 non-disclosure agreements (NDAs). The hypotheses are fixed throughout all the contracts including the test dataset.

- QUOTE: ContractNLI is a dataset for document-level natural language inference (NLI) on contracts whose goal is to automate/support a time-consuming procedure of contract review. In this task, a system is given a set of hypotheses (such as “Some obligations of Agreement may survive termination.”) and a contract, and it is asked to classify whether each hypothesis is entailed by, contradicting to or not mentioned by (neutral to) the contract as well as identifying evidence for the decision as spans in the contract.