Exponential Linear Activation Function

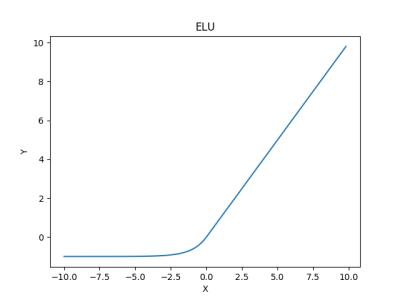

An Exponential Linear Activation Function is a Rectified-based Activation Function that is based on the mathematical function: [math]\displaystyle{ f(x)=max(0,x)+min(0,\alpha∗(\exp(x)−1)) }[/math] where [math]\displaystyle{ \alpha }[/math] a positive hyperparameter.

- AKA: ELU.

- Context:

- It can (typically) be used in the activation of Exponential Linear Neurons.

- Example(s):

- Counter-Example(s):

- a Clipped Rectifier Unit Activation Function,

- a Concatenated Rectified Linear Activation Function,

- a Leaky Rectified Linear Activation Function,

- a Noisy Rectified Linear Activation Function,

- a Parametric Rectified Linear Activation Function,

- a Randomized Leaky Rectified Linear Activation Function,

- a Scaled Exponential Linear Activation Function,

- a Softplus Activation Function,

- a S-shaped Rectified Linear Activation Function.

- See: Artificial Neural Network, Artificial Neuron, Neural Network Topology, Neural Network Layer, Neural Network Learning Rate.

References

2018a

- (Pytorch, 2018) ⇒ http://pytorch.org/docs/master/nn.html#elu Retrieved:2018-2-10

- QUOTE:

class torch.nn.ELU(alpha=1.0, inplace=False)sourceApplies element-wise, [math]\displaystyle{ f(x)=max(0,x)+min(0,alpha∗(exp(x)−1)) }[/math]

Parameters:

*** alpha – the alpha value for the ELU formulation. Default: 1.0

- inplace – can optionally do the operation in-place. Default:

False

- inplace – can optionally do the operation in-place. Default:

- QUOTE:

- Shape:

- Input: (N,∗) where * means, any number of additional dimensions

- Output: (N,∗), same shape as the input

- Examples:

- Shape:

>>> m = nn.ELU() >>> input = autograd.Variable(torch.randn(2)) >>> print(input) >>> print(m(input))

2018b

- (TensorFlow.org, 2018) ⇒ https://www.tensorflow.org/versions/r0.12/api_docs/python/nn/activation_functions_#elu Retrieved: 2018-2-18

- QUOTE:

tf.nn.elu(features, name=None)Computes exponential linear: exp(features) - 1 if < 0, features otherwise.

See Fast and Accurate Deep Network Learning by Exponential Linear Units (ELUs)

Args:

features: ATensor. Must be one of the following types:float32,float64,int32,int64,uint8,int16,int8,uint16,half.name: A name for the operation (optional).

- QUOTE:

- Returns:

A

Tensor. Has the same type asfeatures.

- Returns:

2018c

- (Chainer, 2018) ⇒ http://docs.chainer.org/en/stable/reference/generated/chainer.functions.elu.html Retrieved:2018-2-18

- QUOTE:

chainer.functions.elu(x, alpha=1.0)source Exponential Linear Unit function.

For a parameter [math]\displaystyle{ \alpha }[/math], it is expressed as

[math]\displaystyle{ f(x) = \begin{cases} x & \mbox{if } x \geq 0 \\ \alpha(\exp(x)-1) & \mbox{otherwise} \end{cases} }[/math]

See: https://arxiv.org/abs/1511.07289

Parameters:

- x (Variable or

numpy.ndarrayorcupy.ndarray) – Input variable. A [math]\displaystyle{ (s_1,s_2,\cdots,s_N) }[/math]-shaped float array. - alpha (float) – Parameter [math]\displaystyle{ \alpha }[/math]. Default is 1.0.

- x (Variable or

- QUOTE:

- Returns: Output variable. A [math]\displaystyle{ (s_1,s_2,\cdots,s_N) }[/math]-shaped float array.

- Return type: Variable

- Example:

>>> x = np.array([ [-1, 0], [2, -3] ], 'f')

>>> x

array([ [-1., 0.],

[ 2., -3.] ], dtype=float32)

>>> y = F.elu(x, alpha=1.)

>>> y.data

array([ [-0.63212055, 0. ],

[ 2. , -0.95021296] ], dtype=float32)

2018d

- (Wikipedia, 2018) ⇒ https://en.wikipedia.org/wiki/Rectifier_(neural_networks)#ELUs Retrieved:2018-2-5.

- Exponential linear units try to make the mean activations closer to zero which speeds up learning. It has been shown that ELUs can obtain higher classification accuracy than ReLUs. [math]\displaystyle{ f(x) = \begin{cases} x & \mbox{if } x \geq 0 \\ a(e^x-1) & \mbox{otherwise} \end{cases} }[/math]

[math]\displaystyle{ a }[/math] is a hyper-parameter to be tuned and [math]\displaystyle{ a \geq 0 }[/math] is a constraint.

- Exponential linear units try to make the mean activations closer to zero which speeds up learning. It has been shown that ELUs can obtain higher classification accuracy than ReLUs. [math]\displaystyle{ f(x) = \begin{cases} x & \mbox{if } x \geq 0 \\ a(e^x-1) & \mbox{otherwise} \end{cases} }[/math]

2017

- (Mate Labs, 2017) ⇒ Mate Labs Aug 23, 2017. Secret Sauce behind the beauty of Deep Learning: Beginners guide to Activation Functions

- QUOTE: Exponential Linear Unit (ELU) — Exponential linear units try to make the mean activations closer to zero which speeds up learning. It has been shown that ELUs can obtain higher classification accuracy than ReLUs. α is a hyper-parameter here and to be tuned and the constraint is [math]\displaystyle{ \alpha \ge 0 }[/math](zero).

Range: [math]\displaystyle{ (-\alpha,+\infty) }[/math]

[math]\displaystyle{ f(x) = \begin{cases} \alpha(e^x-1)x & \mbox{if } x \lt 0 \\ x & \mbox{if } x\ge 0 \end{cases} }[/math]

- QUOTE: Exponential Linear Unit (ELU) — Exponential linear units try to make the mean activations closer to zero which speeds up learning. It has been shown that ELUs can obtain higher classification accuracy than ReLUs. α is a hyper-parameter here and to be tuned and the constraint is [math]\displaystyle{ \alpha \ge 0 }[/math](zero).