Neural Network Max-Pooling Layer

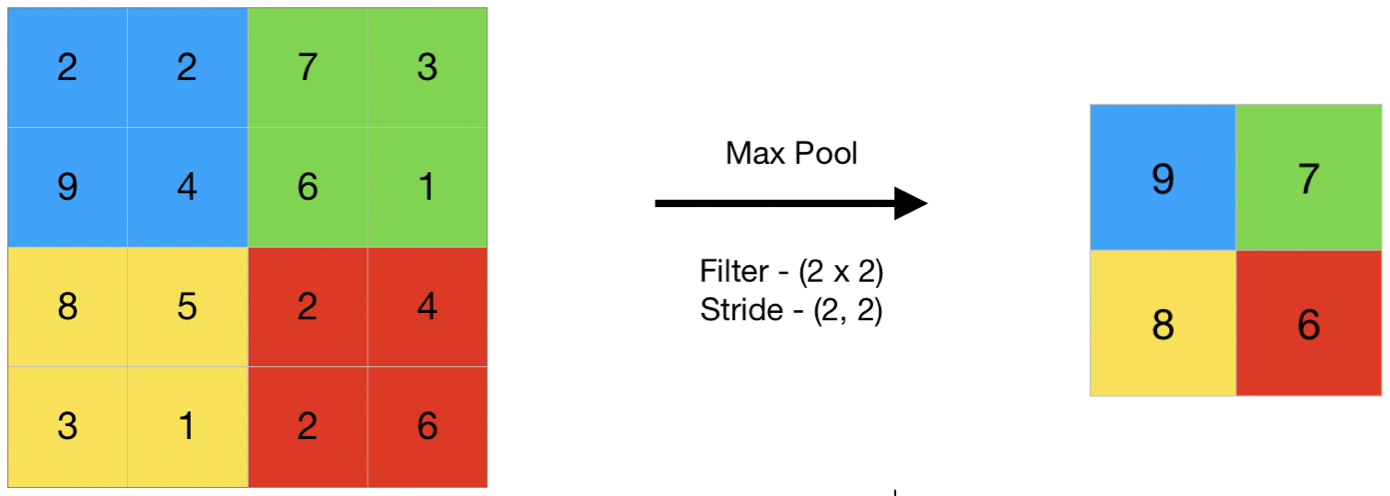

A Neural Network Max-Pooling Layer is a Neural Network Pooling Layer that selects the maximum element from the region of the feature map covered by the filter.

- AKA: Max-Pooling Layer.

- Example(s):

- Counter-Example(s):

- See: Neural Network, Artificial Neuron, Recurrent Neural Network.
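As a minimal sketch of the definition above, the following pure-Python function slides a square window over a 2D feature map and keeps the maximum of each region. The function name `max_pool_2d`, the `size` and `stride` parameters, and the sample values are illustrative assumptions, not taken from any cited source or library.

```python
def max_pool_2d(feature_map, size=2, stride=2):
    """Max-pool a 2D list of numbers with a size x size window.

    Hypothetical helper for illustration; real frameworks operate on
    batched, multi-channel tensors.
    """
    rows, cols = len(feature_map), len(feature_map[0])
    pooled = []
    for r in range(0, rows - size + 1, stride):
        out_row = []
        for c in range(0, cols - size + 1, stride):
            # Collect every element covered by the window at (r, c).
            window = [feature_map[r + i][c + j]
                      for i in range(size) for j in range(size)]
            out_row.append(max(window))
        pooled.append(out_row)
    return pooled


feature_map = [
    [1, 3, 2, 1],
    [4, 6, 5, 0],
    [7, 2, 9, 8],
    [1, 0, 3, 4],
]
pooled = max_pool_2d(feature_map)
print(pooled)  # [[6, 5], [7, 9]]
```

With a 2 × 2 window and stride 2, the 4 × 4 input shrinks to 2 × 2, and each output cell holds the largest value of its region.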

References

2020a

- (Geeks4Geeks, 2020) ⇒ https://www.geeksforgeeks.org/cnn-introduction-to-pooling-layer/ Retrieved:2020-11-27.

- QUOTE: Max pooling is a pooling operation that selects the maximum element from the region of the feature map covered by the filter. Thus, the output after max-pooling layer would be a feature map containing the most prominent features of the previous feature map.


2020b

- (Quora, 2020b) ⇒ https://www.quora.com/What-is-max-pooling-in-convolutional-neural-networks Retrieved:2020-11-27.

- QUOTE: Max pooling is a sample-based discretization process. The objective is to down-sample an input representation (image, hidden-layer output matrix, etc.), reducing its dimensionality and allowing for assumptions to be made about features contained in the sub-regions binned.

This is done to in part to help over-fitting by providing an abstracted form of the representation. As well, it reduces the computational cost by reducing the number of parameters to learn and provides basic translation invariance to the internal representation.

Max pooling is done by applying a max filter to (usually) non-overlapping subregions of the initial representation.
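The "basic translation invariance" mentioned in the quote can be illustrated with a small 1D sketch. The helper `max_pool_1d` and the sample signals are assumptions made for illustration only. A shift that stays inside one pooling window leaves the output unchanged, while a shift that crosses a window boundary does not, which is why the invariance is only partial.

```python
def max_pool_1d(signal, size=2):
    """Max-pool a 1D list over non-overlapping windows of length `size`."""
    return [max(signal[i:i + size])
            for i in range(0, len(signal) - size + 1, size)]


original = [9, 0, 0, 0, 0, 0]       # strong activation at index 0
shifted_within = [0, 9, 0, 0, 0, 0]  # moved by one, still in the first window
shifted_across = [0, 0, 9, 0, 0, 0]  # moved by two, now in the second window

print(max_pool_1d(original))        # [9, 0, 0]
print(max_pool_1d(shifted_within))  # [9, 0, 0]
print(max_pool_1d(shifted_across))  # [0, 9, 0]
```

The output is also half the length of the input, which is the down-sampling effect the quote describes.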


2020c

- (CS 230 DL, 2020) ⇒ https://stanford.edu/~shervine/teaching/cs-230/cheatsheet-convolutional-neural-networks Retrieved:2020-11-27.

- QUOTE: Pooling (POOL)- The pooling layer (POOL) is a downsampling operation, typically applied after a convolution layer, which does some spatial invariance. In particular, max and average pooling are special kinds of pooling where the maximum and average value is taken, respectively.
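To compare the two pooling variants named in the quote, the sketch below applies the same windowing with two different reducers. The names `pool_2d` and `mean` and the input values are hypothetical and chosen only for illustration.

```python
def pool_2d(feature_map, reducer, size=2):
    """Apply `reducer` to each non-overlapping size x size window."""
    rows, cols = len(feature_map), len(feature_map[0])
    return [
        [reducer([feature_map[r + i][c + j]
                  for i in range(size) for j in range(size)])
         for c in range(0, cols - size + 1, size)]
        for r in range(0, rows - size + 1, size)
    ]


def mean(values):
    return sum(values) / len(values)


feature_map = [
    [0, 8, 1, 1],
    [0, 0, 1, 1],
    [2, 4, 0, 0],
    [6, 8, 0, 4],
]
max_pooled = pool_2d(feature_map, max)
avg_pooled = pool_2d(feature_map, mean)
print(max_pooled)  # [[8, 1], [8, 4]]
print(avg_pooled)  # [[2.0, 1.0], [5.0, 1.0]]
```

In the top-left window a single strong activation (8) survives max pooling but is diluted to 2.0 by average pooling.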

2019

- (Christlein et al., 2019) ⇒ Vincent Christlein, Lukas Spranger, Mathias Seuret, Anguelos Nicolaou, Pavel Kral, and Andreas Maier (2019, September). "Deep Generalized Max Pooling". In: The Proceedings of the 2019 International Conference on Document Analysis and Recognition (ICDAR) (pp. 1090-1096). IEEE.

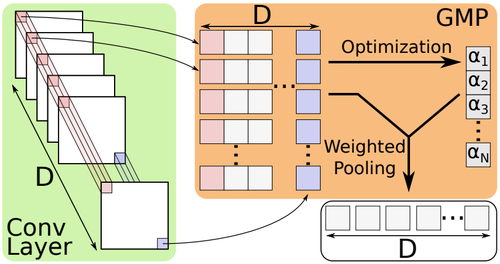

- QUOTE: We propose a new global pooling that aggregates activations in a spatially coherent way. Therefore, a weighted aggregation of local activation vectors is applied, where a local activation vector is defined to be a position in the activation volume along the depth dimension, see Fig.1(left). In contrast to global average/max pooling, activations of specific locations are balanced jointly with each other.

The weights for each local vector are computed through an optimization process, where we chose the use of Generalized Max Pooling (GMP) [7]. It was originally developed to balance local descriptors in a traditional Bag-of-Words (BoW) setting, where a global representation is computed from embeddings of local descriptors. Average pooling is commonly used to aggregate local embeddings. Yet, average pooling may suffer from over-frequent local descriptors computed from very similar or identical image regions. GMP balances the influence of frequent and rare descriptors according to the global descriptor similarity. We propose the use of GMP in a deep learning setting, and phrase GMP as a network layer, denoted as Deep Generalized Max Pooling (DGMP).


2014

- (Graham, 2014) ⇒ Benjamin Graham (2014). "Fractional Max-Pooling". Preprint arXiv:1412.6071.

- QUOTE: Convolutional networks almost always incorporate some form of spatial pooling, and very often it is α × α max-pooling with α=2. Max-pooling act on the hidden layers of the network, reducing their size by an integer multiplicative factor α. The amazing by-product of discarding 75% of your data is that you build into the network a degree of invariance with respect to translations and elastic distortions. However, if you simply alternate convolutional layers with max-pooling layers, performance is limited due to the rapid reduction in spatial size, and the disjoint nature of the pooling regions. We have formulated a fractional version of max-pooling where α is allowed to take non-integer values. Our version of max-pooling is stochastic as there are lots of different ways of constructing suitable pooling regions. We find that our form of fractional max-pooling reduces overfitting on a variety of datasets: for instance, we improve on the state-of-the art for CIFAR-100 without even using dropout.
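The "75%" figure in the quote follows from simple arithmetic: α × α pooling keeps one value out of every α² inputs. The sketch below computes the discarded fraction; the function name is hypothetical, and the non-integer value √2 is only an illustrative choice of α, not a claim about the settings used in the paper.

```python
import math


def discarded_fraction(alpha):
    """Fraction of activations dropped by alpha x alpha pooling (1 - 1/alpha^2)."""
    return 1 - 1 / alpha ** 2


print(discarded_fraction(2))             # 0.75
print(discarded_fraction(math.sqrt(2)))  # roughly one half
```

A smaller, non-integer α discards less per layer, so spatial size shrinks more slowly across stacked pooling layers.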

2018

- (Wikipedia, 2018) ⇒ https://en.wikipedia.org/wiki/Convolutional_neural_network#Pooling Retrieved:2018-3-4.

- QUOTE: Convolutional networks may include local or global pooling layers, which combine the outputs of neuron clusters at one layer into a single neuron in the next layer[1][2]. For example, max pooling uses the maximum value from each of a cluster of neurons at the prior layer[3]. Another example is average pooling, which uses the average value from each of a cluster of neurons at the prior layer.

- ↑ Ciresan, Dan; Ueli Meier; Jonathan Masci; Luca M. Gambardella; Jurgen Schmidhuber (2011). "Flexible, High Performance Convolutional Neural Networks for Image Classification" (PDF). Proceedings of the Twenty-Second international joint conference on Artificial Intelligence-Volume Two. 2: 1237–1242. Retrieved 17 November 2013.

- ↑ Krizhevsky, Alex. "ImageNet Classification with Deep Convolutional Neural Networks" (PDF). Retrieved 17 November 2013.

- ↑ Ciresan, Dan; Meier, Ueli; Schmidhuber, Jürgen (June 2012). "Multi-column deep neural networks for image classification". 2012 IEEE Conference on Computer Vision and Pattern Recognition. New York, NY: Institute of Electrical and Electronics Engineers (IEEE): 3642–3649. arXiv:1202.2745v1. doi:10.1109/CVPR.2012.6248110. ISBN 978-1-4673-1226-4. OCLC 812295155. Retrieved 2013-12-09.
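The distinction between local and global pooling in the Wikipedia passage can be sketched as follows: global max pooling reduces each whole feature map (channel) to a single value, rather than one value per window. The function name `global_max_pool` and the sample data are assumptions made for illustration.

```python
def global_max_pool(channels):
    """Reduce each 2D channel to its single largest value."""
    return [max(max(row) for row in fmap) for fmap in channels]


channels = [
    [[1, 5], [2, 0]],      # channel 0
    [[-3, -1], [-7, -2]],  # channel 1 (all negative)
]
print(global_max_pool(channels))  # [5, -1]
```

The result has one entry per channel regardless of the spatial size of the input.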