Out-of-Distribution (OOD) Detection Task

An Out-of-Distribution (OOD) Detection Task is a detection task that ...

- See: Outlier Detection, Anomaly Detection, Novelty Detection, Open Set Recognition, Out-of-Distribution Detection.

References

2021

- (Shen et al., 2021) ⇒ Zheyan Shen, Jiashuo Liu, Yue He, Xingxuan Zhang, Renzhe Xu, Han Yu, and Peng Cui. (2021). “Towards Out-of-distribution Generalization: A Survey.” arXiv preprint arXiv:2108.13624.

- ABSTRACT: Classic machine learning methods are built on the i.i.d. assumption that training and testing data are independent and identically distributed. However, in real scenarios, the i.i.d. assumption can hardly be satisfied, rendering the sharp drop of classic machine learning algorithms' performances under distributional shifts, which indicates the significance of investigating the Out-of-Distribution generalization problem. Out-of-Distribution (OOD) generalization problem addresses the challenging setting where the testing distribution is unknown and different from the training. This paper serves as the first effort to systematically and comprehensively discuss the OOD generalization problem, from the definition, methodology, evaluation to the implications and future directions. Firstly, we provide the formal definition of the OOD generalization problem. Secondly, existing methods are categorized into three parts based on their positions in the whole learning pipeline, namely unsupervised representation learning, supervised model learning and optimization, and typical methods for each category are discussed in detail. We then demonstrate the theoretical connections of different categories, and introduce the commonly used datasets and evaluation metrics. Finally, we summarize the whole literature and raise some future directions for OOD generalization problem. The summary of OOD generalization methods reviewed in this survey can be found at this http URL.

- QUOTE: ... the general out-of-distribution (OOD) generalization problem can be defined as the instantiation of supervised learning problem where the test distribution $P_{te}(X,Y)$ shifts from the training distribution $P_{tr}(X,Y)$ and remains unknown during the training phase. In general, the OOD generalization problem is infeasible unless we make some assumptions on how test distribution may change. Among the different distribution shifts, the covariate shift is the most common one and has been studied in depth. Specifically, the covariate shift is defined as $P_{tr}(Y|X) = P_{te}(Y|X)$ and $P_{tr}(X) \ne P_{te}(X)$, which means the marginal distribution of X shifts from training phase to test phase while the label generation mechanism keeps unchanged. In this work, we mainly focus on the covariate shift and describe various efforts which have been made to handle it. A relative field with OOD generalization is domain adaptation, which assumes the availability of test distribution either labeled ($P_{te}(X,Y)$) or unlabeled ($P_{te}(X)$). In a sense, domain adaptation can be seen as a special instantiation of OOD generalization where we have some prior knowledge on test distribution. ...
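
The covariate-shift definition quoted above can be illustrated with a small simulation. This is a minimal sketch, not taken from the survey: the labeling rule `sin(x)` plus noise, the Gaussian input distributions, the linear model, and all function names are assumptions chosen for illustration. The same labeling rule is used in both phases, so the conditional distribution of Y given X is shared, while the input mean moves from 0 to 2.5, so the marginal distribution of X differs.

```python
import math
import random


def sample_inputs(n, mean, rng):
    """Draw n inputs from a unit-variance Gaussian centred at `mean` (this is P(X))."""
    return [rng.gauss(mean, 1.0) for _ in range(n)]


def label(x, rng):
    """Shared labeling mechanism P(Y|X): sin(x) plus small Gaussian noise."""
    return math.sin(x) + rng.gauss(0.0, 0.1)


def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def mse(model, xs, ys):
    """Mean squared error of the fitted line on (xs, ys)."""
    slope, intercept = model
    return sum((slope * x + intercept - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


rng = random.Random(0)

# Training phase: inputs centred at 0. Test phase: inputs centred at 2.5.
x_tr = sample_inputs(2000, 0.0, rng)
x_te = sample_inputs(2000, 2.5, rng)

# The same label() function is applied in both phases, so P(Y|X) is unchanged.
y_tr = [label(x, rng) for x in x_tr]
y_te = [label(x, rng) for x in x_te]

model = fit_line(x_tr, y_tr)
train_mse = mse(model, x_tr, y_tr)
test_mse = mse(model, x_te, y_te)

print("mean of X (train, test):", sum(x_tr) / len(x_tr), sum(x_te) / len(x_te))
print("MSE (train, test):", train_mse, test_mse)
```

In this toy setting the line fitted near x = 0 extrapolates poorly to the shifted inputs, which is the kind of performance drop under distributional shift that the abstract describes.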

- (Yang, Zhou et al., 2021) ⇒ Jingkang Yang, Kaiyang Zhou, Yixuan Li, and Ziwei Liu. (2021). “Generalized Out-of-Distribution Detection: A Survey.” arXiv preprint arXiv:2110.11334.

- ABSTRACT: Out-of-distribution (OOD) detection is critical to ensuring the reliability and safety of machine learning systems. For instance, in autonomous driving, we would like the driving system to issue an alert and hand over the control to humans when it detects unusual scenes or objects that it has never seen before and cannot make a safe decision. This problem first emerged in 2017 and since then has received increasing attention from the research community, leading to a plethora of methods developed, ranging from classification-based to density-based to distance-based ones. Meanwhile, several other problems are closely related to OOD detection in terms of motivation and methodology. These include anomaly detection (AD), novelty detection (ND), open set recognition (OSR), and outlier detection (OD). Despite having different definitions and problem settings, these problems often confuse readers and practitioners, and as a result, some existing studies misuse terms. In this survey, we first present a generic framework called generalized OOD detection, which encompasses the five aforementioned problems, i.e., AD, ND, OSR, OOD detection, and OD. Under our framework, these five problems can be seen as special cases or sub-tasks, and are easier to distinguish. Then, we conduct a thorough review of each of the five areas by summarizing their recent technical developments. We conclude this survey with open challenges and potential research directions.

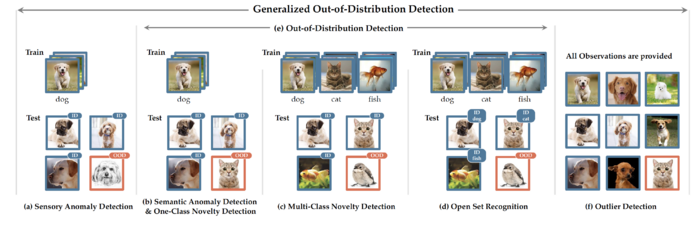

Figure 2: Exemplar problem settings for tasks under generalized OOD detection framework. Tags on test images refer to model’s expected predictions. (a) In sensory anomaly detection, test images with covariate shift will be considered as OOD. No semantic shift occurs in this setting. (b) In semantic anomaly detection and one-class novelty detection, normality/ID images belong to one class. Test images with semantic shift will be considered as OOD. No covariate shift occurs in this setting. (c) In multi-class novelty detection, ID images belong to multiple classes. Test images with semantic shift will be considered as OOD. No covariate shift occurs in this setting. (d) Open set recognition is identical to multi-class novelty detection in the task of detection, with the only difference that open set recognition further requires in-distribution classification. (e) Out-of-distribution detection is a super-category that covers semantic AD, one-class ND, multi-class ND, and open-set recognition, which canonically aims to detect test samples with semantic shift without losing the ID classification accuracy. (f) Outlier detection does not follow a train-test scheme. All observations are provided. It fits in the generalized OOD detection framework by defining the majority distribution as ID. Outliers can have any distribution shift from the majority samples.
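
The canonical OOD detection setting in (e), detecting semantically shifted samples while still classifying in-distribution ones, can be sketched as a classifier with a reject option. The sketch below is an illustration under stated assumptions rather than a method prescribed by the quoted text: it uses the maximum softmax probability as one example of a classification-based score, and the function name `predict_or_reject`, the threshold of 0.7, and the example logits are all hypothetical.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of class logits."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict_or_reject(logits, threshold=0.7):
    """Return (class index, confidence), or ("OOD", confidence) when confidence is low.

    The confidence is the maximum softmax probability; the threshold is a
    hypothetical value that would normally be tuned on held-out data.
    """
    probs = softmax(logits)
    confidence = max(probs)
    if confidence < threshold:
        return "OOD", confidence
    return probs.index(confidence), confidence


# Hypothetical logits from a 3-class classifier.
confident_logits = [4.0, 0.5, 0.2]   # one class clearly dominates
ambiguous_logits = [1.0, 1.1, 0.9]   # no class stands out

print(predict_or_reject(confident_logits))
print(predict_or_reject(ambiguous_logits))
```

Accepted inputs keep their ordinary class prediction, so in-distribution accuracy on them is unchanged; only inputs below the threshold are flagged instead of classified.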

2019

- (Ren et al., 2019) ⇒ Jie Ren, Peter J. Liu, Emily Fertig, Jasper Snoek, Ryan Poplin, Mark A. DePristo, Joshua V. Dillon, and Balaji Lakshminarayanan. (2019). “Likelihood Ratios for Out-of-Distribution Detection.” Advances in Neural Information Processing Systems, 32 (NeurIPS 2019).

- ABSTRACT: Discriminative neural networks offer little or no performance guarantees when deployed on data not generated by the same process as the training distribution. On such out-of-distribution (OOD) inputs, the prediction may not only be erroneous, but confidently so, limiting the safe deployment of classifiers in real-world applications. One such challenging application is bacteria identification based on genomic sequences, which holds the promise of early detection of diseases, but requires a model that can output low confidence predictions on OOD genomic sequences from new bacteria that were not present in the training data. We introduce a genomics dataset for OOD detection that allows other researchers to benchmark progress on this important problem. We investigate deep generative model based approaches for OOD detection and observe that the likelihood score is heavily affected by population level background statistics. We propose a likelihood ratio method for deep generative models which effectively corrects for these confounding background statistics. We benchmark the OOD detection performance of the proposed method against existing approaches on the genomics dataset and show that our method achieves state-of-the-art performance. Finally, we demonstrate the generality of the proposed method by showing that it significantly improves OOD detection when applied to deep generative models of images.
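
The likelihood-ratio idea in the abstract can be sketched on toy data. This is a minimal illustration, not the authors' implementation: a smoothed per-position categorical model stands in for a deep generative model, the background model is assumed to be the same kind of model trained on randomly perturbed copies of the training sequences, and the motifs, sequence length, base weights, and perturbation rate of 0.5 are invented for the example. The score is the log-likelihood under the full model minus the log-likelihood under the background model, so statistics shared by both models cancel.

```python
import math
import random

ALPHABET = "ACGT"


def fit_position_model(seqs, pseudo=1.0):
    """Per-position categorical model with additive smoothing.

    A deliberately simple stand-in for a deep generative model.
    """
    model = []
    for i in range(len(seqs[0])):
        counts = {base: pseudo for base in ALPHABET}
        for seq in seqs:
            counts[seq[i]] += 1.0
        total = sum(counts.values())
        model.append({base: c / total for base, c in counts.items()})
    return model


def log_likelihood(model, seq):
    """Sum of per-position log-probabilities of `seq` under `model`."""
    return sum(math.log(model[i][base]) for i, base in enumerate(seq))


def perturb(seq, rate, rng):
    """Replace each base with a uniformly random base with probability `rate`."""
    return "".join(rng.choice(ALPHABET) if rng.random() < rate else b for b in seq)


def likelihood_ratio(full, background, seq):
    """Log-likelihood ratio: log p_full(seq) - log p_background(seq)."""
    return log_likelihood(full, seq) - log_likelihood(background, seq)


rng = random.Random(0)


def random_tail(n):
    """GC-rich filler bases that carry no class-specific signal (toy background)."""
    return "".join(rng.choices(ALPHABET, weights=[1, 3, 3, 1], k=n))


# In-distribution training data: a fixed 4-base motif followed by background bases.
train = ["ACGT" + random_tail(8) for _ in range(500)]
full_model = fit_position_model(train)
background_model = fit_position_model([perturb(s, 0.5, rng) for s in train])

# Two test sequences that share the same background tail but differ in the motif.
tail = random_tail(8)
id_seq = "ACGT" + tail    # motif seen in training
ood_seq = "TGCA" + tail   # unseen motif

id_score = likelihood_ratio(full_model, background_model, id_seq)
ood_score = likelihood_ratio(full_model, background_model, ood_seq)
print("likelihood ratio (ID, OOD):", id_score, ood_score)
```

Because both test sequences share the same tail, the gap between their scores comes entirely from the motif positions, which is where the full and background models disagree most; a sequence would be flagged as OOD when its ratio falls below a chosen threshold.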