Sequence-to-Sequence (seq2seq) Neural Network with Attention

A Sequence-to-Sequence (seq2seq) Neural Network with Attention is a neural seq2seq network that includes an attention mechanism, which lets the decoder weight the encoder's hidden states differently at each output step.
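As a rough illustration of what the attention mechanism computes, the sketch below scores each encoder state against the current decoder state, normalizes the scores with a softmax into an attention distribution, and takes the weighted sum of encoder states as the context vector. It loosely follows the additive score of Bahdanau et al. (2015); the function names, the parameter names `W_h`, `W_s`, `v`, and all toy values are assumptions for illustration, not trained weights or any library's API.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def matvec(matrix, vector):
    """Multiply a matrix (given as a list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]

def additive_attention(decoder_state, encoder_states, W_h, W_s, v):
    """Additive (Bahdanau-style) attention, a minimal sketch.

    score_i = v^T tanh(W_h h_i + W_s s)
    weights = softmax(scores); context = sum_i weights_i * h_i
    W_h, W_s, v are hypothetical parameters (learned in a real model).
    """
    projected_s = matvec(W_s, decoder_state)
    scores = []
    for h in encoder_states:
        projected_h = matvec(W_h, h)
        hidden = [math.tanh(a + b) for a, b in zip(projected_h, projected_s)]
        scores.append(sum(vi * hi for vi, hi in zip(v, hidden)))
    weights = softmax(scores)
    dim = len(encoder_states[0])
    # Context vector: attention-weighted sum of the encoder states.
    context = [sum(w * h[k] for w, h in zip(weights, encoder_states))
               for k in range(dim)]
    return weights, context

# Toy usage: three 2-dimensional encoder states and one decoder state.
encoder_states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
decoder_state = [0.5, -0.5]
identity = [[1.0, 0.0], [0.0, 1.0]]
weights, context = additive_attention(
    decoder_state, encoder_states, identity, identity, [1.0, 1.0])
print(weights, context)
```

Because the weights come from a softmax, they are non-negative and sum to one, so the context vector is a convex combination of the encoder states.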

- Context:

- It can be classified as a Memory-Augmented Neural Network.

- Example(s):

- Counter-Example(s):

- See: Sequence-to-Sequence Model, Neural Machine Translation, Recurrent Encoder-Decoder Neural Network, Seq2Seq with Attention Training Algorithm, Artificial Neural Network, Natural Language Processing Task, Language Model.

References

2017a

- (See et al., 2017) ⇒ Abigail See, Peter J. Liu, and Christopher D. Manning. (2017). “Get To The Point: Summarization with Pointer-Generator Networks.” In: Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers). DOI:10.18653/v1/P17-1099.

- QUOTE: Our pointer-generator network is a hybrid between our baseline and a pointer network (Vinyals et al., 2015), as it allows both copying words via pointing, and generating words from a fixed vocabulary. In the pointer-generator model (depicted in Figure 3) the attention distribution $a^t$ and context vector $h^*_t$ are calculated as in section 2.1. In addition, the generation probability $p_{gen} \in [0,1]$ for timestep $t$ is calculated from the context vector $h^*_t$, the decoder state $s_t$ and the decoder input $x_t$:

$$p_{gen} = \sigma\left(w_{h^*}^T h^*_t + w_s^T s_t + w_x^T x_t + b_{ptr}\right) \qquad (8)$$

where vectors $w_{h^*}$, $w_s$, $w_x$ and scalar $b_{ptr}$ are learnable parameters and $\sigma$ is the sigmoid function (...)
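Equation (8) and the resulting copy/generate mix can be sketched in plain Python. This is a minimal sketch, not the authors' implementation: all vectors, the vocabulary, the source tokens, and the attention weights are made-up toy values, and the mixing function follows Equation (9) of See et al. (2017), $P(w) = p_{gen} P_{vocab}(w) + (1 - p_{gen}) \sum_{i: w_i = w} a^t_i$, which the quote above elides.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def generation_probability(h_star, s_t, x_t, w_h, w_s, w_x, b_ptr):
    """Equation (8): p_gen = sigma(w_h*^T h*_t + w_s^T s_t + w_x^T x_t + b_ptr)."""
    return sigmoid(dot(w_h, h_star) + dot(w_s, s_t) + dot(w_x, x_t) + b_ptr)

def final_distribution(p_gen, p_vocab, attention, source_tokens):
    """Mix generating and copying (See et al., 2017, Eq. 9).

    p_vocab maps each fixed-vocabulary word to its generation probability.
    A source word outside the vocabulary has P_vocab(w) = 0, so it can
    only receive probability mass by being copied via attention.
    """
    dist = {w: p_gen * p for w, p in p_vocab.items()}
    for a_i, w_i in zip(attention, source_tokens):
        dist[w_i] = dist.get(w_i, 0.0) + (1.0 - p_gen) * a_i
    return dist

# Toy usage (hypothetical values, not trained parameters).
p_gen = generation_probability(
    h_star=[0.2, 0.1], s_t=[0.5, -0.3], x_t=[1.0, 0.0],
    w_h=[1.0, 1.0], w_s=[0.5, 0.5], w_x=[0.1, 0.2], b_ptr=0.0)
p_vocab = {"the": 0.5, "cat": 0.3, "sat": 0.2}
attention = [0.1, 0.6, 0.3]
source_tokens = ["the", "Tobermory", "cat"]
dist = final_distribution(p_gen, p_vocab, attention, source_tokens)
print(p_gen, dist)
```

Here the out-of-vocabulary source word "Tobermory" still gets non-zero probability through the copy term, which is the point of the hybrid.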

2017b

- (Gupta et al., 2017) ⇒ Rahul Gupta, Soham Pal, Aditya Kanade, and Shirish Shevade. (2017). “DeepFix: Fixing Common C Language Errors by Deep Learning.” In: Proceedings of AAAI.

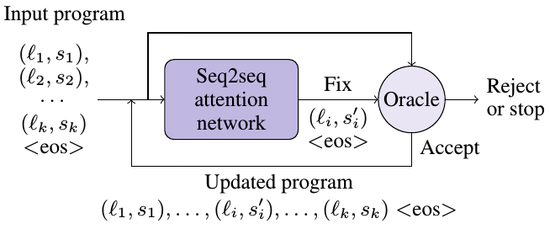

- QUOTE: We present an end-to-end solution, called DeepFix, that does not use any external tool to localize or fix errors. We use a compiler only to validate the fixes suggested by DeepFix. At the heart of DeepFix is a multi-layered sequence-to-sequence neural network with attention (Bahdanau, Cho, and Bengio 2014), comprising of an encoder recurrent neural network (RNN) to process the input and a decoder RNN with attention that generates the output. The network is trained to predict an erroneous program location along with the correct statement. DeepFix invokes it iteratively to fix multiple errors in the program one-by-one. (...)

DeepFix uses a simple yet effective iterative strategy to fix multiple errors in a program as shown in Figure 2 (...)

Figure 2: The iterative repair strategy of DeepFix.
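The iterative strategy described above can be sketched as a loop. This is a hypothetical sketch, not DeepFix's code: `predict_fix` stands in for the trained seq2seq network (returning an erroneous line index plus a corrected statement, or `None` to give up), `count_errors` stands in for the compiler, and the acceptance rule (keep a fix only if it lowers the error count) and the stopping conditions are assumptions made for illustration.

```python
from typing import Callable, List, Optional, Tuple

Fix = Tuple[int, str]  # (index of the erroneous line, corrected statement)

def iterative_repair(
    program: List[str],
    predict_fix: Callable[[List[str]], Optional[Fix]],
    count_errors: Callable[[List[str]], int],
    max_iterations: int = 5,
) -> Tuple[List[str], int]:
    """Fix errors one at a time, using the 'compiler' only to validate fixes."""
    current = list(program)
    errors = count_errors(current)
    for _ in range(max_iterations):
        if errors == 0:
            break  # nothing left to fix
        fix = predict_fix(current)
        if fix is None:
            break  # the (hypothetical) network declines to propose a fix
        line_index, statement = fix
        candidate = list(current)
        candidate[line_index] = statement
        candidate_errors = count_errors(candidate)
        if candidate_errors >= errors:
            break  # assumed rule: reject fixes that do not reduce errors
        current, errors = candidate, candidate_errors
    return current, errors

# Toy stand-ins (hypothetical): an "error" is a line missing its ';'.
def toy_count_errors(lines: List[str]) -> int:
    return sum(1 for line in lines if not line.endswith(";"))

def toy_predict_fix(lines: List[str]) -> Optional[Fix]:
    for i, line in enumerate(lines):
        if not line.endswith(";"):
            return (i, line + ";")
    return None

program = ["int x = 1", "int y = 2;", "return x + y"]
fixed, errors = iterative_repair(program, toy_predict_fix, toy_count_errors)
print(fixed, errors)

# A fix that does not lower the error count is rejected.
rejected, rejected_errors = iterative_repair(
    program, lambda lines: (0, "garbage"), toy_count_errors)
```

Validating each candidate before accepting it keeps a wrong prediction from compounding across iterations, which matches the quote's point that the compiler is used only to check the suggested fixes.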

2015a

- (Bahdanau et al., 2015) ⇒ Dzmitry Bahdanau, Kyunghyun Cho, and Yoshua Bengio. (2015). “Neural Machine Translation by Jointly Learning to Align and Translate.” In: Proceedings of the Third International Conference on Learning Representations (ICLR-2015).

2015b

- (Vinyals et al., 2015) ⇒ Oriol Vinyals, Meire Fortunato, and Navdeep Jaitly. (2015). “Pointer Networks.” In: Advances in Neural Information Processing Systems 28: Annual Conference on Neural Information Processing Systems (NIPS 2015).