1993 AgentOrientedProgramming

- (Shoham, 1993) ⇒ Yoav Shoham. (1993). “Agent-oriented Programming.” In: Elsevier - Artificial Intelligence Journal, 60(1). doi:10.1016/0004-3702(93)90034-9

Subject Headings: Agent-Oriented Programming Paradigm; Agent-Oriented System; AOP Mental State; Software Agent, AGENT-O.

Notes

- It has also been cited as:

- (Shoham, 1991) ⇒ Yoav Shoham. (1991). “Elsevier ARTINT 931 Agent-oriented programming (1991)". CiteSeerx Archive

- (Shoham, 1992) ⇒ Yoav Shoham. (1992)."Agent-oriented Programming". Semantic Scholar

- Related Publication:

Cited By

- Google Scholar: ~3,540 citations [1]

- Semantic Scholar: ~1,255 citations [2]

- CiteSeer: ~3,367 citations [3]

- Elsevier ScienceDirect: ~1,108 citations [4]

Quotes

Abstract

A new computational framework is presented, called agent-oriented programming (AOP), which can be viewed as a specialization of object-oriented programming. The state of an agent consists of components such as beliefs, decisions, capabilities, and obligations; for this reason the state of an agent is called its mental state. The mental state of agents is described formally in an extension of standard epistemic logics: beside temporalizing the knowledge and belief operators, AOP introduces operators for obligation, decision, and capability. Agents are controlled by agent programs, which include primitives for communicating with other agents. In the spirit of speech act theory, each communication primitive is of a certain type: informing, requesting, offering, and so on. This article presents the concept of AOP, discusses the concept of mental state and its formal underpinning, defines a class of agent interpreters, and then describes in detail a specific interpreter that has been implemented.

1. Introduction

This paper proposes a new programming paradigm. The paradigm promotes a societal view of computation. in which multiple “agents” interact with one another, although in this document we will concentrate on the design of the individual agent. Many of the ideas here intersect and interact with the ideas of others. For the sake of continuity, however, 1 will not p1ace this work in the context of other work until the end.

1.1 What Is An Agent?

The term “agent” is used frequently these days. This is true in A1, but also outside it, for example in connection with databases and manufacturing automation. Although increasingly popular. the term has been used in such diverse ways that it has become meaningless without reference to a particular notion of agenthood. Some notions are primarily intuitive others quite formal. Some are very austere. defining an agent in automata-theoretic terms. and others use a more lavish vocabulary. The original sense of the word. of someone acting on behalf of someone else, has been all but lost in A1 (an exception that comes to mind is the use of the word in the intelligent-interfaces community. where there is talk of “software agents“ carrying out the user‘s wishes: this is also the sense of agency theory in economics [64]). Most often. when people in Al use the term “agent". they refer to an entity that functions continuously and autonomously in an environment in which other processes take place and other agents exist. This is perhaps the only property that is assumed uniformly by those in A1 who use the term. The sense of “autonomy” is not precise. but the term is taken to mean that the agents" activities do not require constant human guidance or intervention. Often certain further assumptions are made about the environment, for example that it is physical and partially unpredictable. In fact, agents are sometimes taken to be robotic agents, in which case other issues such as sensory input. motor control and time pressure are mentioned. Finally. agents are often taken to be “high-level". Although this sense is quite vague, many take some version of it to distinguish agents from other software or hardware components The “high level” is manifested in symbolic representation and/or some cognitive-like function: agents may be “informable” [24], may contain symbolic plans in addition to stimulus-response rules [13.18.2953]. and may even possess natural language capabilities. This sense is not assumed uniformly in Al. and in fact a certain counter-ideology deliberately denies the centrality or even existence of high-level representation in agents [1.7].

Clearly, the notion of agenthood in Al is anything but crisp. 1 should therefore make it clear what I mean by the term “agent", which is precisely this: An agent is an entity whose state is viewed as consisting of mental components such as beliefs, capabilities, choices. and commitments. These components are defined in a precise fashion, and stand in rough correspondence to their common sense counterparts. In this view. therefore, agenthood is in the mind of the programmer: What makes any hardware or software component an agent is precisely the fact that one has chosen to analyze and control it in these mental terms. The question of what an agent is is now replaced by the question of what entities can be viewed as having mental states The answer is that anything can be so described, although it is not always advantageous to do so. This view is not original to me. For example in [15] and other publications, Daniel Dennett proposes the “intentional stance". from which systems are ascribed mental qualities such as intentions and free will. The issue, according to Dennett, is not whether a system really is intentional, but whether we can coherently view it as such. Similar sentiments are expressed by John McCarthy in [47]. who also distinguishes between the “legitimacy" of ascribing mental qualities to machines and its “usefulness“: To ascribe certain beliefs, free will, intentions. consciousness, abilities or wants to a machine or computer program is legitimate when such an ascription expresses the same information about the machine that it expresses about a person. It is useful when the ascription helps us understand the structure of the machine. its past or future behavior, or how to repair or improve it. It is perhaps never logically required even for humans, but expressing reasonably briefly what is actually known about the state of the machine in a particular situation may require mental qualities or qualities isomorphic to them. Theories of belief. knowledge and wanting can be constructed for machines in a simpler setting than for humans, and later applied to humans. Description of mental qualities is most straight/brimrd for machines of known structure such as thermostats and computer operating systems. but is most useful when applied to entities whose structure is very incompletely known.

In [67] 1 illustrate the point through the light switch example. It is perfectly coherent to treat a light switch as a (very cooperative) agent with the capability of transmitting current at will, who invariably transmits current when it believes that we want it transmitted and not otherwise: flicking the switch is simply our way of communicating our desires. However, while this is a coherent view, it does not buy us anything. since we essentially understand the mechanism sufficiently to have a simpler. mechanistic description of its behavior. In contrast. we do not have equally good knowledge 01" the operation of complex systems such robots, people, and, arguably, operating systems. In these cases it is often most convenient to employ mental terminology; the application of the concept of “knowledge" to distributed computation, discussed below, is an example of this convenience. [1]

1.2. On The Responsible Use Pseudo-Mental Terminology

When I (like Dennett and McCarthy before me) state that in principle anything can be ascribed intentionality. 1 do not mean that there are no rules for this ascription. We do not arbitrarily label one component of the machine a “be1ief‘” and another a “commitment"; that would be gratuitous fancy naming, against which MCDermott has already warned [51]. Indeed, ifthe labeling were arbitrary, there would be no reason not to exchange the labels “belief” and "commitment"; or For that matter not to call the first component “1:112“ and the second “1:358”?

When then are we justified in ascribing a particular mental quality such as belief to a particular component of a machine? It seems to be reasonable to require the following elements as justification:

- a precise theory regarding the particular mental category: the theory must have clear semantics (or, to quote McDermott in [51]. “No Notation without Denotation“). and should correspond to the common- sense use of the term:

- a demonstration that the component of the machine obeys the theory;

- a demonstration that the formal theory plays a nontrivial role in analyzing or designing the machine (or. to coin a new phrase “N0 Notation without Exploitation").

As an example. it is instructive to consider the use of logics of knowledge and belief in distributed computation (cf. [26,27]). The initial motivation behind that line of research was to prove properties 01" distributed protocols. Researchers noted that intuitive reasoning about protocols included statements such as: “Processor A does not yet know that the network is back up. but since processor B knows that processor A doesn‘t know it. it (processor B) will not send the next message.“ Wishing to formalize this son of reasoning, researchers adopted a logic of knowledge which had been introduced in analytic philosophy by Hintikka [32] and imported to Al by Moore [54] (subsequent work on knowledge and belief within Al includes [37.41,55,69]).

The logic widely (though not universally) adopted, the SS modal system, is at best an idealization of the common-sense notion of knowledge. Some of its more counter-intuitive properties include tautological closure {you know all that follows from your knowledge). positive introspection (if you know something then you know that you know it). and negative introspection (if you do not know something then you know that you do not). The important point is that, For the applications considered in distributed computation, the deviation from common sense was harmless. Intuitively, in those applications the knowledge possessed by agents was so simple that complexity of internal reasoning could be neglected.

The first lesson, then, is that while the formal theory of the mental category should correspond to common sense, this correspondence will not be exact. It is up to the consumer of the theory to consider the application at hand, and judge whether the correspondence between theory and common sense is sufficiently close that s/he will not be misled by allowing common sense intuition to guide reasoning with the formal construct. For example. if in the application all the beliefs are propositional Horn clauses, and if in the application all linear-time computation is negligible, then the axiom of tautological closure is quite reasonable, since propositional Horn theories admit a linear-time decision procedure. On the other hand, this same axiom renders the logic useless for reasoning about number-theoretic cryptographic protocols[2].

The distributed computation application serves also to illustrate the second element required to justify the use of a mental term. a demonstration that the machine obeys the properties of the formal construct. The semantics adopted for the knowledge operator were standard possible-worlds semantics [38]. but here possible worlds were given a very concrete interpretation: A possible world was a possible global state of the system (that is, a possible combination of local states of the various processors) given a fixed protocol, and the worlds accessible to each agent from a given world consisted of all global states in which its local state was the same as in the given world. It was easy to show that this concrete definition obeyed the SS properties.

Finally, using this definition of knowledge. it became possible to prove certain properties of distributed protocols. The logic of knowledge was in principle dispensable—one could theoretically replace the knowledge operator by the corresponding statement about the states of the various processors — but the statements would get complex. and intuition would be lost. In practice, therefore, logics of knowledge proved invaluable, satisfying the third requirement of ascribing a mental attitude to a machine.

In this article, we will consider mental constructs that are somewhat more involved than knowledge. We will consider belief, obligation, and capability. and add a temporal component to each of those. As in the ease of knowledge, however, we will not reach an exact match between the formal properties of these formal constructs and common sense, but rather will aim to strike a balance between computational utility and common sense.

1.3. AOP versus OOP

It was mentioned in the previous section that the ascription of mental constructs must be coherent and useful. The application of the logic of knowledge in distributed computation. given there as an example. used the mental construct “knowledge“ in a particular way: It mapped it onto an existing computational framework (a distributed network of processors). and used it to reason about the system. The use we will make of mental constructs is different: Rather than use them for mete analysis. we will employ them to design the computational system. The various mental categories will appear in the programming language itself, and the semantics of the programming language will be related to the semantics of the mental constructs. This is similar in spirit to a development within the distributed-computation community, where a proposal has been made to include tests for epistemic properties in the protocols themselves [28]; at this time the proposal has not yet been followed up on.

Specifically~ I will propose a computational framework called agent-oriented programming (AOP). The name is not accidental. since from the engineering point of view, AOP can be viewed as a specialization of the Object-oriented programming (OOP) paradigm. I mean the latter in the spirit of Hewitt‘s original Actors formalism [31]. rather than in the more specific sense in which it is used today. Intuitively. whereas OOP proposes viewing a computational system as made up of modules that are able to communicate with one another and that have individual ways of handling incoming messages, AOP specializes the framework by fixing the state (now called mental state) of the modules (now called agents) to consist of components such as beliefs (including beliefs about the world. about themselves and about one another). capabilities. and decisions. each of which enjoys a precisely defined syntax. Various constraints are placed on the mental state of an agent, which roughly correspond to constraints on their common sense counterparts. A computation consists of these agents informing, requesting, offering. accepting. rejecting. competing, and assisting one another. This idea is borrowed directly from the Spt’t’t’ll art literature [4125.66]. Speech act theory categorizes speech. distinguishing between informing. requesting, offering. and so on; each such type of communicative act involves different presuppositions and has different effects Speech act theory has been applied in A1. in natural language research as well as in plan recognition [36.59]. To my knowledge. AOP and McCarthy’s ElephantZOOO language (see Section 8) are the first attempts to base a programming language in part on speech acts. Table 1 summarizes the relation between A)P and 00P [3].

| OOP | AOP | |

|---|---|---|

| Basic unit | object | agent |

| Parameters defining state of basic unit | unconstrained | beliefs, commitments, capabilities, choices, ... |

| Process of computation | message passing and response methods | message passing and response methods |

| Types of message | unconstrained | inform, request. offer, promise, decline, ... |

| Constraints on methods | none | honesty, consistency, ... |

1.4. Organization Of The Document

The rest of the document is organized as follows. In Section 2, I provide further motivation for the AOP paradigm by looking at two scenarios in which AOP is expected to prove useful. In Section 3. I outline the main ingredients of the AOP framework. The bulk of the paper then describes progress made to date towards realizing the concept. In Section 4, I discuss the mental categories that are essential to AOP~ and as many of the details involved in formalizing those that are needed for the remainder. In Section 5, I discuss a general family of agent interpreters. In Section 6. I define a simple programming language called AGENT-O, and its specific interpreter. In Section 7, I briefly discuss the process of“agentification", or transforming an arbitrary device into a programmable agent. In Section 8, I discuss related work by others in Al. Finally, in the last section I discuss some of the directions in which the work described here can be extended.

2. Two Scenarios

Below are two scenarios. The first is fairly complex, and illustrates the type of future applications envisioned. The second is a toy example, and serves three purposes: It illustrates a number of AOP ideas more crisply, it is implementable in the simple language defined later in this article, and it illustrates the fact that agents need not be robotic agents.

2.1. Manufacturing Automation

Alfred and Brenda work at a car-manufacturing plant. Alfred handles regular-order cars, and Brenda handles special-order ones. The plant has a welding robot, Calvin. The plant is controlled by a coordinating program, Dashiel. The following scenario develops, involving communication between Alfred. Brenda. Calvin. and Dashiel. It contains communication acts such as informing. requesting. committing. permitting. and commanding. and requires agents to reason about the beliefs, capabilities. and commitments of other agents.

- 8:00: Alfred requests that Calvin promise to weld ten bodies for him that day; Calvin agrees to do so.

- 8:30: Alfred requests that Calvin accept the first body. Calvin agrees. and the first body arrives. Calvin starts welding it and promises Alfred to notify him when it is ready for the next body.

- 8:45: Brenda requests that Calvin work on a special-order car which is needed urgently. Calvin responds that it cannot right then. but that it will when it finishes the current job. at approximately 9:00.

- 9:05: Calvin completes welding Alfred's first car. ships it out. and offers to weld Brenda‘s car. Brenda ships it the car. and Calvin starts welding.

- 9:15: Alfred enquires why Calvin is not yet ready for his (Alfred’s) next car. Calvin explains why, and also that it (Calvin) expects to be ready by about 10:00.

- 9:55: Calvin completes welding Brenda‘s car. and ships it out. Brenda requests that it re-accept it and do some painting. but Calvin refuses explaining that it does not know how to paint. Calvin then offers to weld another car for Alfred. and proceeds to weld Alfred‘s cars for a while.

- 12:15: Brenda requests that Calvin commit to welding four more special- order cars that day. Calvin replies that it cannot. since that conflicts with its commitment to Alfred. who still has six unwelded ears. Brenda requests Alfred to release Calvin from its commitment to Alfred. Alfred refuses. Brenda requests that Dashiel (remember Dashiel?) order Calvin to accept her important request and revoke its commitment to Alfred. Dashiel orders Calvin to weld two of Brenda's ears and then as many of Alfred‘s as time allows.

- 3:30: Calvin senses it is low on lubricant. and. without interrupting its work. asks Alfred for when the next lubrication is scheduled, Alfred responds that he doesn't know. but that Dashiel should know. Calvin asks Dashiel. who informs it that the next lubrication is scheduled for

- 4:00. Since that is sufficiently soon. Calvin continues to weld.

2. 2. Airline Reservation

This example is a minor modification of one due to John McCarthy [48], who uses it to illustrate his Elephant2000 programming language (see Section 8). The example involves three agents: a passenger I). an airline clerk C, and Cs supervisor S. P and S are human. and C is a program (or is it the other way around? Or perhaps all three are programs?). Note that by confirming a reservation the airline enters into a commitment to issue a boarding pass to the passenger at the appropriate time. Again. the following exchange involves requesting (a query is a request to inform), informing, permitting. and denying. as well as mental components such as knowledge and commitment.

March

- P to C: Please inform me what flights you have from San Francisco to New York on April 18.

- C to P: Flight #354 departs at 08:30. flight #293 departs at 10:00. flight #441 departs at noon.

- P to C: Please book me on #354.

- C to P: That is sold out.

- P to C: Please book me on #293.

- C to P: That is confirmed; your reservation number is 112358.

- P to C: Please book me also on #441.

- C to P: That conflicts with #293: I am not allowed to double book a passenger.

- P to C: Please get permission to do so.

- C to S: I request permission for the following double booking:

- S to C: Permission denied.

- C to P: Sorry, I cannot get approval.

April 18 at the airport

- P to C: My name is P; I have a reservation for flight #293.

- C to P: Here is your boarding pass.

3. Overview of the AOP framework

A complete AOP system will include three primary components:

- a restricted formal language with clear syntax and semantics for describing a mental state; the mental state will be defined uniquely by several modalities, such as belief and commitment;

- an interpreted programming language in which to define and program agents. with primitive commands such as

REQUESTandINFORM; the semantics of the programming language will be required to be faithful to the semantics of mental state; - an “agentifier” converting neutral devices into programmable agents.

In this article, I will fall short of supplying a complete system. My main purpose of #this article is to discuss the second component. However, since it relies in part on the first one. I will start with a short discussion of mental state. I will limit discussion of logic to the minimum needed for the discussion of the interpreter. There is, however, a substantial literature on the logic of various mental categories, and I will provide references to it.

In contrast to the first two components of which I have a relatively good understanding, the third component is still somewhat mysterious to me, and I will discuss it only briefly at the end.

4. Mental Categories And Their Properties

The first step in the enterprise is to define the various components of mental state and their properties. There is not a unique “correct” selection of mental categories. nor a correct theory regarding them. as different appli- cations can be expected to call for specific mental properties[4]. 4 In this section I will discuss what could be viewed as a bare-bones theory of mental state. a kernel that will in the future be modified and augmented (for in-depth treatment within our research group of various logical aspects of mental state. see [14,43,6973] ).

4.1. Components Of Mental State

In related past research by others in AI (see Section 8). three modalities were explored: belief. desire. and intention (giving rise to the pun on BDI agent architectures). Other similar notions. such as goals and plans. were also pressed into service. These are clearly important notions: however, I propose starting with a slightly different set of modalities. which are more modest and. I find. more basic.

By way of motivation. here is an informal view of the world which underlies the selection. At any point in time. the future is determined by two factors: The past history. and the current actions of agents. For example. past history alone does not (in this view) determine whether I raise my arm; that is determined by whether in fact I take the appropriate action. The actions of an agent are determined by its decisions or choices[5]. In other words, some facts are true for natural reasons, and other facts are true because agents decided to make them so. Decisions are logically constrained. though not determined. by the agent’s be/i'e/s: these beliefs refer to the state of the world (in the past. present. or future), to the mental state of other agents. and to the capabilitws of this and other agents. For example. given that the robot believes that it is incapable of passing through the narrow doorway, it will not decide to go through it. Decisions are also constrained by prior decisions; the robot cannot decide to be in Room 5 in five minutes if it has already decided to be in Room 3 at that time.

This perspective motivates the introduction of two basic mental categories. belief and decision (or choice), and a third category which is not a mental construct per se. capability. These are precisely the categories I wi11 adopt, with one modification: rather than take choice as basic, I will start with the notion of Obligation, or commitment, and will treat decision simply as obligation to oneself.

By restricting the components of mental state to these modalities I have in some informal sense excluded representation of motivation. Indeed, I wi11 not assume that agents are “rational” beyond assuming that their beliefs, obligations and capabilities are internally and mutually consistent. This stands in contrast to the other work mentioned above, which makes further assumptions about agents acting in their own best interests. and so on. Such stronger notions of rationality are obviously important, and I am convinced that in the future we will wish to consider them. However, neither the concept of agenthood nor the utility of agent-oriented programming depend on them.

In the remainder of this section I will describe the various modalities in more detail. My goal here is not to provide a comprehensive analysis of them; that is the subject of the papers mentioned earlier. Here I will discuss them only to the extent that they bear on the development of the interpreter defined later.

4.2. A Language For Belief Obligation, And Capability

Time

Time is basic to the mental categories; we believe things both about dif- ferent times and at different times, and the same is true of other modalities. We will adopt a simple point-based temporal language to talk about time; a typical sentence will be

holding(robot ,cup)‘

meaning that the robot is holding the cup at time t.

Action

Actions take place at different points in time, and, depending on the circumstances at the time they are taken, have certain effects. However, for the purposes of this article, we will not distinguish between actions and facts, and the occurrence of an action will be represented by the corresponding fact holding. For example, strictly speaking, rather than say that the robot took the action raise—arm at time t, we will say that the sentence raise—arm(robot)‘ is true. (However, to retain the agency behind the action. we will introduce the notion of decision; see below.) Given that actions are facts. they too are instantaneous. This too is a limitation in the current language.

There is substantial literature on representing action; the best-known representative in Al is the situation calculus [50]. There are a number of proposals to allow time. durational facts. and durational actions all in the same language. In fact. in [42] we represent time and parallel action in the situation calculus itself. However. the details are somewhat involved. and these extensions are not essential to the current discussion. Nonetheless. since actions are such a natural concept. in the programming language discussed later we will introduce them as syntactic sugar.

Belief

We now augment the language with a modal operator B, denoting belief. As mentioned above. an agent believes things both at certain times and about certain times. The general form of belief statement is

Btw

where a is an agent. t a time term, and (p a (recursively defined) sentence. For example. B&Bfil’likemmfl will mean that at time 3 agent a believes that at time 10 agent b will believe that at time 7 a liked b.

Obligation

So far I have used largely well-known notions: Temporal languages are standard fare. operators denoting belief common. and their combination. although somewhat novel. is straightforward. We now depart more radically from past constructions and introduce a new modal operator. DBL. DBL has one more argument than B:

DBLlhw

will mean that at time t agent a is obligated, or committed. to agent b about (p. Notice that. since actions are represented simply as facts. the agent is obligated to a fact holding rather than to taking action.

Decision (choice)

The freedom to choose among several possible actions is central to the notion of agenthood[6]. and earlier on in the research we indeed took decision. or choice. to be a primitive notion. The current definition of obligation provides an alternative, however: decision is defined to be simply obligation. or commitment, to oneself:

Daeggp =def OBngtp.

Again, the term “choice" carries many connotations. and I emphasize that I mean it in the sense of “decision"; an agent has chosen something if it has decided that something be true. Thus. most of us can decide to own a new pair of shoes. but few of us can decide to own yacht.

Capability

Intimately bound to the notion of agenthood is also that of capability. I may decide to move my arm, but if] am not capable of it then it will not move. I will not decide to do anything I believe myself incapable of. Similarly. I will not request a two-year-old, nor a mobile robot, to climb a ladder, since I do not believe they are capable of it.

It is debatable whether capability is best defined in mental terms or not. For example. one definition would say that agent a is capable of go just in case the following is true: If a were to decide (p, then (p would be true. There are also reasonable philosophical arguments against this definition (Cf. [5.8.19] ). Here I will simply introduce the notation

CAnto

to represent the fact that at time t a is capable of (/3. We will place certain constraints on such sentences. but not take a stance on whether CAN is reducible to mental notions or not [7]. Note that. like the other modalities. capabilities refer to specific times; a typical sentence is

CANfolMopen(door)8.

Thus at time 5 the robot might be able to ensure that the door is open at time 8, but at time 6 it might no longer have that capability. We may define ABLE to be the “immediate” version of CAN. First, for any sentences ¢. we define time(tp) to be the outermost time occurring in it; for example. time(open(door)t) = time(BFLqJ) = t. We now define ABLE as follows:

ABLEW =dct~ CANLW‘WJ

and thus

robot open (door) '

4.3. Properties Of The Various Components

I have so far not placed any constraints on the various modalities defined. and therefore have not guaranteed that they in any way resemble their common sense counterparts. We will now place such constraints. Just as there is no objectively “right” collection of mental categories. there is no “right" list of properties for any particular mental category. It was already stated in the introduction that the correspondence between the formal definition and common sense will always be only approximate, and that we would like to strike a balance between common sense and utility. Indeed. I expect different applications of ADP to call for different properties of belief, commitment, and capability. In this section I will define a number of properties I assume about the modalities. These properties are quite weak, but they are sufficient to justify the terminology. and necessary for the design of the interpreter. The weakness of the assumptions ensures that the interpreters apply to a wide variety of applications. Still. even these assumptions will be inappropriate for some purposes. in which case a new type of interpreter will be required.

Internal consistency

We assume that both the beliefs and the obligations are internally consis- tent. Specifically. we assume:

- for any t.a: {oz 33¢} is consistent;

- for any ea: {q}: OBLg‘htp for some b} is consistent.

Good Faith

We further assume that agents commit only to what they believe them- selves capable of, and only if they really mean it:

- for any t,a,b,(p: UBLIt m.to 3 BLUABLEutp) Arp).

Introspection

Although in general we do not assume that agents have total introspective capabilities. we do assume that they are aware of their obligations:

- for any t,a,b,(p: OBLt (p E BQ;OBL}L_[,;0; All) i

- for any t,a,b,¢: fiOBLl‘Lhta E BgfiOBLA‘htp,

On the other hand, we do not assume that agents are necessarily aware of commitments made to them.

Persistence of mental State

We have only placed restrictions on mental attitudes at a single instant of time. We conclude this section by discussing how mental states change or persist over time. However, unlike in the previous discussion, we will not place precise constraints, but rather informal guidelines.

Consider, for example, belief. Our restrictions so far allow agents which at one time believe nothing at all, shortly afterwards have a belief about every sentence, and then again become quite agnostic. Common sense suggests that beliefs tend to be more stable than that. and it would indeed be difficult to rely on the behavior of agents with such volatile beliefs. We will now place a strong condition on belief: we will assume that agents have perfect memory of, and faith in. their beliefs, and only let go of a belief if they learn a contradictory fact. Beliefs therefore persist by default. Furthermore, we will assume that the absence of belief also persists by default, although in a slightly different sense: if an agent does not believe a fact at a certain time (as opposed to believing the negation of the fact), then the only reason he wi11 come to believe it is if he learns it.

How to formally capture these two kinds of default persistence is another story, and touches on issues that are painfully familiar to researchers in nonmonotonic temporal reasoning and belief revision. In fact, a Close look at the logical details of belief (or knowledge) persistence reveals several very subtle phenomena, which have so far not been addressed in the literature [43].

Obligations too should persist; they wouldn’t be obligations otherwise. As in the case of belief, however, the persistence is not absolute. Although by default obligations persist, there are conditions under which obligations are revoked. These conditions presumably include explicit release of the agent by the party to which it is obligated. or alternatively a realization on the part of the agent that it is no longer able to fuIfill the obligation. (In their discussion of the persistence of commitment, Cohen and Levesque [9] actually propose a more elaborate second condition, one that requires common knowledge by the committer and committee of the impossibility; however further discussion of their position and arguments against it would be too long a detour; see a brief discussion of their work in Section 4.4.)

Since decision is defined in terms of obligation. it inherits the default persistence. Notice, however, an interesting point about the persistence of decision: While an agent cannot unilaterally revoke obligations it has to- wards others, it can cancel obligations held towards it—including obligations it holds towards itself, namely decisions. An agent is therefore free to modify an existing decision, but unless he explicitly does so the decision will stand.

Finally, capabilities too tend to not fluctuate wildly. In fact, in this document I assume that capabilities are fixed: What an agent can do at one time it can do at any other time. However. I will allow to condition a capability of an action on certain conditions that hold at the time of action.

The contextual nature of modal statements

I have throughout the discussion talked of "unequivocal" statements re- garding beliefs, obligations, and capabilities. Common sense. however. sug- gests that each of these modalities is context-sensitive: I can print the document right now. but only in the context of the network being up: I am obligated to you to finish the work by tomorrow, but if my child has just been rushed to hospital then all bets are off (even though I am still capable of finishing the work). Indeed. McCarthy has argued that all statements. not on1y modaI ones. should be Viewed in context [49]. Although I agree in principle. and discuss it further in [68]. in this article I will ignore the issue of context sensitivity.

4.4. A short detour: comparison with Cohen and Levesque

In several publications (e.g.. [9,10] ) Cohen. Levesque, and several asso- ciates have investigated the logical relationships between several modalities such as the above-mentioned ones. As mentioned earlier, I have deferred discussion of related work to a later section. However. since there is room for confusion between Cohen and Levesquc‘s definitions of mental catc- gories and our own, I will make an exception in this case. The reader may skip this subsection. although I believe the discussion may provide further intuition about our definition as well as Cohen and Levesque‘s.

Cohen and Levesque employ two basic modalities. BEL (belief) and G (goal. or choice). Although these bear a resemblance to our belief and choice modalities. important differences exist. Their belief modality is really the same as ours. except that they are not as explicit about its temporal aspect. In their language. one may use the O tense operator to specify that “Sometime in the future an agent will believe a fact“ or “Sometime in the future the agent will believe a fact either about that time or about a time yet further into the future“. but one is not able to talk about (e.g.) the agent believing in the future something about the past. This capability could be achieved by adding other tense operators, if the authors insisted on a tense logic[8].

The primary intuition offered about the G modality is that it denotes choice (“Consider the desire that the agent has chosen to pursue as put into a new category. Call this chosen desire. loosely. a goal“). However. I have already noted that the term “choice" is multi-faceted. and indeed G is quite different from our DEC. For Cohen and Levesque. the choices of an agent constitute a consistent subset of the agent’s desires. those that the agent has adopted as goals. In contrast, our DBL modality (and hence the derived DEC modality) reflects absolutely no motivation of the agent, and merely describes the actions to which the agent is obligated.

The difference in senses is the difference between a decision to act and a decision to pursue a goal. This difference is reflected in the different interactions between choice (or decision) and belief. In our construction, obligation (and therefore also decision) implies belief: DEcgp 3 ng (if an agent decides on an action he believes it will take place]. The con- verse implication does not hold in our construction (the agent may believe that the sun will rise tomorrow without making a choice in this regard). For Cohen and Levesque, on the other hand. belief does imply choice: (BEL a p) D (G a p) (see. e.g.. their Proposition 17). The intuition there is that the G modality specifies possible worlds chosen by the agent. and these worlds are selected among the ones the agent believes are possible; therefore ifa fact is true in all worlds believed possible by the agent. it must be true in the subset he selects. Note that both senses of choice guarantee that an agent does not choose something he believes impossible: both DECf‘p 3 amp}; and (G a p) D a(BEL a op) hold in the respective systems.

Both senses of choice are worthwhile, although one can imagine other ways of capturing a decision to pursue a goal. In particular, I am not sure that it is best to use a single operator to capture both “having a goal” and “deciding to adopt a goal". For example, it may prove advantageous to start with a G modality denoting “having a goal", and define “goal adoption" by DECaG(a,p) (with the appropriate temporal arguments added). However. I have deliberately avoided such more complex notions in this document as they are not needed for the fundamentals of AOP. and will not pursue the issue further.

In summary, the pioneering work of Cohen and Levesque introduces mental categories that are different from ours. The two frameworks share the essential view of belief and time. They each introduce modalities absent from the other: obligation and capability in our framework. goals and intentions in theirs. Even two notions that at first appear to be similar—our decision and their ehoice—turn out to be quite different.

5. A Generic Agent Interpreter

In the previous section, I discussed the first component of the AOP framework, namely the definition of agents. I now turn to the central topic of this paper, the programming of agents. In this section, I will outline a generic agent interpreter. In the next section I describe a specific programming language and its implemented interpreter.

The role of agent programs is to control the evolution of an agent's mental state; actions occur as a side-effect of the agent’s being committed (that is obligated) to an action whose time has come. Since the mental state of agents is captured in a formal language it might be tempting to view AOP as a form of logic programming. In this view, the program would consist of logical statements about the mental state of the agent. and through a process of theorem proving the mental state at each point in time wi11 be determined. However, this is not what I intend; although it might be possible in principle to develop such a programming language[9], multi-modal temporal theorem proving is sufficiently daunting to discourage me from attempting it at this point. Instead, I will allow standard operationally-defined languages: indeed, I will define such a language myself in the next section. These languages, however, will include data structures which represent various logical sentences (for example, those denoting beliefs and commitments), and the (extra-logical) operations on these data structures will be required to obey the properties of the various logical operators. For example, no two data structures denoting contradictory beliefs may be instantiated by the interpreter at the same time.

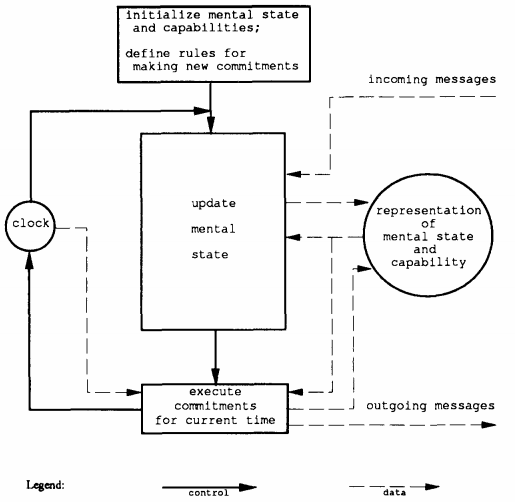

The basic loop

The behavior of agents is, in principle, quite simple. Each agent iterates the following two steps at regular intervals:

(1) read the current message and update your mental state. including your beliefs and commitments (the agent program is crucial for this update);

(2) execute the commitments for the current time, possibly resulting in further belief change (this phase is independent of the agent's program).

Actions to which agents can be committed include communicative ones such as informing and requesting, as well as arbitrary “private” actions. The process is i11ustrated in Fig. 1; dashed arrows represent flow of data, solid arrows temporal sequencing.

Assumption about message passing

Agent programs will include. among other things, communication com- mands. In order that those be executable I will assume that the platform is capable of passing messages to other agents addressable by name. whether those reside in the same machine or in others. The programming language will define the form of these messages. and the interpreter will determine when messages are sent.

Assumption about the clock

Central to the operation of the interpreter is the existence of a clock; agents are inherently “real-time” (to use another overloaded term). The main role of the clock is to initiate iterations of the two-step loop at regular intervals (every 10 milliseconds, every hour, etc.); the length of these intervals. called the “time grain”, is determined by the settable variable timegrain. I do not discuss the implementation of such a clock, which will vary among platforms, and simply assume that it exists. We also assume a variable now, whose value is set by the clock to the current time in the format defined in the programming language (an integer, date:h0ur:minute, etc.).

In the remainder of the description we make the very strong assumption that a single iteration through the loop lasts less than the time grain; in future versions of the language we will relax this assumption, and correspondingly will complicate the details of the loop itself.

Of course, the fact that agents use the same temporal language does not ensure that their clocks are synchronized. lf all agents are running on the same machine there will be no problem. but otherwise the possibility of clock drift exists. Although synchronization does not impact the design and programming of single agents, it is crucial for ensuring that a society of agents is able to function usefully. Fortunately. there exist synchronization protocols which ensure limited drift among clocks (for an overview. see [65])t and we expect to use these in our applications. However. since the focus in this article is on the design of single agents. 1 will not discuss this issue further.

6. AGENT-O, a simple language and its interpreter

Agent interpreters may vary along many dimensions, and in general pose many challenging problems. In this section I describe a particular programming language called AGENT-O whose interpreter is an extremely simple instance of the generic agent interpreter. In fact, the simplifications embodied in AGENT-O are so extreme that it may be tempting to dismiss it as uninteresting. However, it was recognized early on that one would not gain good insight into the strengths and weaknesses of AOP without writing actual programs. It was decided therefore to implement a simple interpreter first, and design more complex languages and interpreters based on this experience. It turned out the design of AGENT-O itself posed some challenges, and we have been surprised by the diversity of applications that even this simple language admits. Furthermore. AGENT-O is designed in a way that suggests obvious extensions; a few are being currently pursued. and will be described in the last section.

6.1 The syntax of AGENT-0

In the programming language itself, one specifies only conditions for making commitments; commitments are actually made, and later carried out automatically at the appropriate times (see discussion of the interpreter in Section 6.2 below). Commitments are only to primitive actions, those that the agent can directly execute. In other words, in AGENT-O an agent cannot commit to achieving any condition which requires some sort of planning. Before we define the syntax of commitments we need a few preliminary definitions. I will first develop the syntax of AGENT-O in a bottom-up fashion and then summarize it in BNF notation. The reader may wish to refer forward to the formal definition while reading the following description.

Fact Statements

Fact statements are fundamental to AGENT-O; they are used to specify the content of actions as well as conditions for their execution. Fact statements constitute a tiny fragment of the temporal language described earlier; they are essentially the atomic objective sentences (that is, no conjunction or disjunction, nor modal operators). Typical fact statements will be (t (employee smith acme)) and (NOT (t (employee jones acme))).

Private and communicative action statements

Agents commit to action, and so we now specify what actions are. We make two orthogonal distinctions between types of action: Actions may be private or communicative. and, independently. they may be conditional 01‘ unconditional.

The syntax for private actions is

(D0 t p-action)

where t is a time point and p-action is a private action name. Private action names are idiosyncratic and unconstrained; a database agent may have retrieval primitives, a statistical computation agent may run certain mathematical procedures, and a robot may serve itself. The effects of private actions may be invisible to other agents. as in the database example, but need not be so, as in the robot example.

Private actions may or may not involve IO. Communicative actions, on the other hand, always involve 10. Unlike private actions, communicative actions are uniform, and common to all agents. While in a general AOP system we can expect many types of communicative action, the restricted version AGENT-O has only three types of communicative action: informing, requesting, and cancelling a request.

The syntax of informing is

(INFORM t a fact)

where t is a time point, a is an agent name, and fact is a fact statement. Note that 1: is the time at which the informing is to take place, and fact itself contains other temporal information, as in (INFORM 5 b (1 (employee smith acme))).

The syntax of requesting is

(REQUEST t a action)

where t is a time point, a is an agent name, and action is an ac- tion statement, defined recursively. So, for example, (REQUEST 1 a (DD 10 update—database)) constitutes a legitimate request. Again, one should dis- tinguish between the time at which the requesting is to be done (1, in this example) and the time of the requested action (10‘ in the example). Requests can be embedded further, as in

(REQUEST 1 a (REQUEST 5 b (INFORM 10 c fact))).

The syntax of cancelling a request is:

(UNREQUEST t a action)

where t is a time point. a is an agent name, and action is an action statement.

The last unconditional action in AGENT-O is really a nonaction. Its syntax is:

(REFRAIN action)

where action is an action statement. The role of refraining will be to prevent commitment to particular actions.

Conditional action statements

1n AGENT-O we distinguish between commitments for conditional actions, which include conditions to be tested right before acting, and conditions for entering into commitments in the first place. I now discuss only conditional actions, and will discuss conditions for entering into commitments later.

Conditional actions rely on one form of condition. called a mental cone dilian. Mental conditions refer to the mental state of the agent. and the intuition behind them is that when the time comes to execute the action, the mental state at that time will be examined to see whether the mental condition is satisfied. For this reason the agent- and time-eomponcnts of the mental state are implicit and can be omitted in the specification of mental conditions. A mental condition is thus any combination of modal statements in the temporal-modal language, with the primary “agent“ and “time" arguments omitted.

Specifically, a mental condition is a logical combination ofmema/pancms. A mental pattern is one of two pairs:

(B fact) or ((CMT a) action)

where fact is a fact statement. a is an agent name. and action is an action statement (we use the term CMT rather than DBL since, for historical reasons, this is the notation used in the actual implementation). An example of a mental pattern is (B (1; (employee smith acme))). Given the syntax of mental conditions, the syntax of a conditional action is

(IF mntlcond action)

where mntlcond is a mental condition and action is an action statement. An example of a conditional action is

(IF (B (t) (employee smith acme))) (INFORM t a (t’ (employee smith acme)))),

The intuitive reading of this action is “if at time t: you believe that at time t’ smith is an employee of acme, then at time t inform agent a of that fact”.

As was said, mental conditions may contain logical connectives. These connectives are AND, OR, and NOT. The following three actions illustrate the use of NOT; together they constitute a QUERY about whether fact is true (b is the one being queried, a is the one he is asked to inform):

(REQUEST t b (IF (B,fact) (INFORM t+1 a fact))). (REQUEST t b (IF (B (NUT fact)) (INFORM t+l a (NOT fact)))). (REQUEST t b (IF (NOT (Bw fact)) (INFORM t+1 a (NUT (t+l (Bw a fact)))))).[10]

Variables

In the style of logic programming and production systems, in AGENT-O procedures are invoked in a pattern—directed fashion. Specifically, we will see that commitment rules are “activated" based on certain patterns in the incoming messages and current mental state. Variables play a crucial role in these patterns.

A variable is denoted by the prefix “?”. Variables may substitute agent names, fact statements, or action statements. Thus the following is a legiti- mate conditional action:

(IF (NOT ((CMT ?x) (REFRAIN sing))) sing)

In the tradition of logic programming, variables in action statements (in- cluding the mental condition part) are interpreted as existentially quantified. The scope ofthe quantifier is upwards until the scope of the first NUT, or it is the entire statement, if the variable does not lie in the scope of a NOT. Thus the last statement reads informally as “if you are not currently committed to anyone to refrain from singing, then sing".

It is advantageous to allow other quantifiers as well. The one quantifier included in AGENT-O is a limited form of the universal quantifier, but in the future others. such as the “latest (earliest) time point such that” quantifier, may be introduced. The universally-quantified variables will be denoted by the prefix “7 ! ”. The scope of these variables is always the entire formula, and thus the conditional action

(IF (B (t (emp ?!x acme))) (INFORM a (t: (emp ?lx acme))))

results in informing a of all the individuals who the agent believes to be acme employees [11]. Having discussed action statements, we can now finally discuss the type of statements that actually appear in the program, namely commitment rules.

Commitment rules

Since action statements contain information about what needs to be done. about when it needs to be done, and even the preconditions for doing it. one might have expected a collection of action statements to constitute a program. However, there is another crucial layer of abstraction in AGENT- 0. Most of the action statements are unknown at programming time: they are later communicated by other agents (one of which may be the “user"i in situations where that concept is applicable). The program itself merely contains conditions under which the agent will enter into new commitments. Some of these conditions may be trivial. resulting in a priori commitments, but most commitments will be in response to messages.

The conditions under which a commitment is made include both mental conditions, discussed above, and message conditions, which refer to the current incoming messages. A message condition is a logical combination of Iriessagr) patterns. A message pattern is a triple

(From Type Content)

where From is the sender’s name. Type is INFORM. REQUEST, 0r UNREQUEST. and Content: is a fact statement or an action statement. depending on the type. The other information associated with each incoming message its destination and arrival time, are implicit in this context and are thus omitted from the message pattern (of course, the Content will include reference to time, but that is the time of the fact or action, not the arrival time of the message). An example of a message pattern is (a INFORM fact), meaning that one of the new messages is from a informing the agent of fact, An example of a more complex message condition is

(AND (a REQUEST (DU t, walk» (NUT (?x REQUEST (DU 1: Chew-gum)))),

meaning that there is a new message from a requesting the agent to walk. and there is no new request from anyone that the agent chew-gum. The syntax of a commitment rule is

(COMMIT msgcond mntleond (agent action)*)

where msgcond and mntlcond are respectively message and mental conditions, agent is an agent name, action is an action statement, and * denote repetition of zero or more times[12]. Note that the action statement itself may be conditional, containing its own mental condition.

An example of a simple commitment rule is

(COMMIT (?a REQUEST ?action) (B (now (myfriend ?a))) [13] (?a ?action ))

Finally, a program is simply a sequence of commitment rules, preceded by a definition of the agent‘s capabilities and initial beliefs, and the fixing of the time grain.

A BNF description of the AGENT-O

Before describing the interpreter for AGENT-O and providing an example, let me summarize the discussion of the syntax by giving its BNF definition. (In accordance with standard conventions, * denotes repetition of zero or more times.)

<pregram> ::= timegrain :=

<commitru1e> ::= (COMMIT <msgcond> <mntlcond> (<agent> <action>)*) <msgcond> ::= <msgconj> | (OR <msgconj>*) <msgconj> ::= <msgpattern> I (AND <msgpattern>*) <msgpattern> ::= (<agent> INFORM <fact>) |

(<agent> REQUEST <action>> l (NUT <msgpattern>)

<mntlc0nd> ::= <mntlconj> I (DR <mntleonj>*)

<mnt1conj> ::= <mntlpattern> I (AND <mnt1pattern>*)

<mntlpattern> ::= (B <fact>) l ((CMT <agent>) <action>) (NOT <mnt1pattern>)

<action> ::= (DU

(INFORM

(UNREQUEST

(IF <mntlcond> <action>)

<fact> ::= (

(+

- Time may be a <variab1e> when

- it appears in a commitment rule

<time—constant> ::= m | h l d | y

- m (minute) = 60, h (hour) 3600, etc.

<agent> ::= <alphanumeric_string> | (variable) <predicate> ::= <alphanumeric_string> <arg> ::= <alphanumeric_str1ng> | <variable> <variable> ::= ?<alphanumeric_string> | ?!<alphanumeric_string>

A note about time: The programming language allows use of symbolic dates and times; in the actual implementation, described below. each date is represented internally by the number of seconds that have passed since 1900.

6.2. The A GENT—O interpreter

Since it is an instance of the generic interpreter, the AGENT-O interpreter inherits its two-step loop design However, since in AGENT-O the mental state is made up of three specific components, one of which (capabilities) is fixed, the first step in the loop may be specialized as follows:

(1a) Update the beliefs. (1b) Update the commitments.

We now look at the various substeps in more detail.

Updating beliefs and commitments

In AGENT-O the beliefs, commitments, and capabilities of an agent are each represented by a database. The belief database is updated either as a result of being informed. or as a result of taking a private action. There is little to say about the latter; a database agent will come to believe a fact after performing a retrieval operation. and a robotic agent will come to believe something after performing a Visual routine. These updates are implemented by the analogue of brain surgery, that is, by providing the appropriate routines with the ability to directly modify the belief databases More interesting is the former sort of update. In its full generality, the assimilation of new information into an existing belief base poses difficult problems, both semantieal and algorithmic. It is not obvious what in general the semantics of this assimilation should be. Indeed, normative theories have been proposed for at least two different sorts of assimilation, revision [21] and update [35], and a number of new results on these operations have recently been discovered.

Beside the semantical issue, one is faced with an algorithmic one as well. Consider a given database F and a new fact (p. Most theories of belief assimilation require that, if o is consistent with F, then assimilation amounts to simply adding (p to F. But Checking consistency for unconstrained theories is a notoriously hard problem, either intractable (in the propositional case) or undeeidable (in the first-order case). Furthermore, if (p is inconsistent with T, most theories of assimilation require that F be “minimally” modified so as to restore consistency, and this is even a harder problem. What then are we to do?

There are at least to approaches to getting around the computational complexity. The first is to relax the requirements, and adopt a heuristic assimilation algorithm which compromises either soundness, or complete- nesst or both. The second approach which is the one taken in AGENT-O, is to restrict the sentences in the languages sufficiently so that the problem becomes tractable. In fact, as we have seen, AGENT-O imposes an extreme restriction, which is to disallow logical connectives other than negation (this is in addition to disallowing modal operators, necessary for representing nested beliefs such as “I believe that you believe ..."). This makes the consistency checking trivial—at most linear in the size of the database. and much less with good data structuring.

This still leaves open the question of what to do with new information. We will ultimately require a theory of authority, which will dictate whether or not new information is believed. However, in AGENT-O agents are completely gullible; they incorporate any fact of which they are informed, retracting the contradictory atomic belief if that were previously held.

We now turn to the process of updating commitments. For that we need to explain the structure of the commitment and capability databases. Items in the database of commitments are simply pairs (agent action) (the agent to which the commitment was made, and the content of the commitment). Items in the database ofcapabilities are pairs (privateaction mntlcond). The mental condition part allows one to prevent commitment to incompatible actions, each of which might on its own be possible. An example of an item in the capability database is:

((!?time (rotate wheelbase ?degrees)) (NOT ((CMT ?x) ?!time (service wheelbase)))).

Existing commitments are removed either as a result of the belief change, or as a result of UNREQUEST messages. Considering the former first, recall that agents must believe in their ability to perform the actions to which they are committed. Belief change may affect capabilities, since the capability of each private action depends on mental preconditions. And thus whenever a belief update occurs, the AGENT-O interpreter examines the current com— mitments to private actions. and removes those whose preconditions in the capability database have been violated. Exhaustive examinations of all cur- rent commitments upon a belief change can be avoided through intelligent indexing. but I will not pursue this optimization issue. It is recommended that in such a case the agent add a commitment to immediately inform the agents to whom he was committed of this development. using commitment rules, but AGENT-O does not enforce this.

The handling of UNREQUEST messages is trivial: The agent removes the corresponding item from the commitment database if it exists. and otherwise does nothing.

Note that the removal of existing commitments is independent of the program. The addition of commitments, on the other hand, depends on the program very strongly. The algorithm adding commitments is as follows:

Algorithm. Check all program commitment statements; for each program statement (COMMIT msgcond mntlcond (a, action,)*). if:

- the message condition msgcond holds of the new incoming messages.

- the mental condition mntleond holds of the current mental state.

- for all i. the agent is currently capable of the action,. and

- for all i. the agent is not committed to REFRAIN action;, and‘ if action, is itself of the form REFRAIN actionj, the agent is not committed to action}

then for all i. commit to a, to perform action,.

Although I am not explicit about it here, it is clear what it means for the message conditions and mental conditions to hold, given their definitions. An agent is capable of an action under the following conditions:

- An agent can request and unrequest anything from anyone.

- An agent can inform anyone of a fact he (the agent) believes. An agent can inform [lsdfof any fact whatsoever (this is useful to implement reasoning in the agent: of course it presents a danger as well. and it is up to the programmer of commitment rules to prevent self-delusion).

- An agent is capable of any private action in the capability database provided the mental condition associated in the database with that private action holds at that time [14].

- An agent can refrain from any action, provided he is not already committed to that action.

- An agent can perform a conditional action (IF mntlcond action) if he can perform action under the condition mntlcond.

Carrying out commitments

We have so far discussed the first of the two steps in each iteration of the interpreter, updating the mental state. We now discuss the second step, which is less complex by far. Recall that each commitment in the commitment database has a time associated with it: (INFORM t a fact), (IF mntlcond (D0 t privateaetion)), etc. In this second step the interpreter simply exe- cutes all the actions whose time falls in the interval (now-timegrain , now]. The meaning of “execute" depends on the type of action:

- INFORM, REQUEST, UNREQUEST: Send the appropriate message.

- REFRAIN: No effect on execution (REFRAIN commitments play a role only in preventing commitment to other actions).

- DO: Consulting the belief and commitment databases, check the mental condition associated in the capability database with the primitive action; if it holds then perform the primitive action.

- IF: Consulting the belief and commitment databases, test the mental condition: if it holds then (recursively) execute the action.

6.3. A sample program and its interpretation

As an example of AGENT-O programs, consider the flight-reservation scenario described in Section 2. We now present an annotated program implementing the airline representative. Although the scenario was simple to begin with, here I simplify it further by ignoring the exchange relating to the supervisor as well as other aspects of the communication. The idea behind the program is that the relevant activity on the part of the airline is issuing a boarding pass to the passenger, and that confirming a reservation is in fact a commitment to issue a boarding pass at the appropriate time.

Since some of the low-level definitions are long. it will be convenient to use abbreviations. We will therefore assume that AGENT-O supports the use of macros (the actual implementation. mentioned below, does not). We define the following macros:

(issuabp pass flightnum time) => (IF (AND (B ((- time h) (present: pass))) (B (time (flight ?from ?to flightnum)))) (DD time-h (physical_issue-bp pass flightnum time)».

Explanation: This no-frills airline issues boarding passes precisely one hour prior to the flight; there are no seat assignments. physicaLissueJup is a private action involving some external events such as printing a boarding pass and presenting it to the passenger.

(query_which t asker askee q) 2? (REQUEST t askee (IF (B q) (INFORM (+ t 1) asker q))).

Explanation: query_which requests only a positive answer; if q contains a universally-quantified variable then query_which requests to be informed of all instances of the answer to the query q.

(query-whether t asker askee q) :> (REQUEST t askee (IF (B q) (INFORM (+ t 1) asker q))) (REQUEST t askee (IF (B (NDT q)) (INFORM (+ t 1) asker (NOT q))))»

Explanation: query_whether expects either a confirmation or a disconfirma- tion of a fact.

We now define the airline agent. To do so we need to define its initial beliefs. capabilities, and commitment rules. Of the initial beliefs, the ones relevant here refer to the flight schedule. and the number of available seats for each flight. The former are represented in the form (time (flight from to number)) (ignoring the fact that in practice airlines have a more-or-less fixed weekly schedule), and the latter in the form (time (remaining-seats cimel flightJlumber seats)). We also assume that the agent can evaluate arithmetic comparisons, such as 4>0.

There are two relevant capabilities here: Issuing boarding passes, and updating the count of the available seats on flights. Thus the capability database contains two items:

((issue.bp ?a ?flight ?time) true)

((DO ?time (updatelemainingseats ?timel ?flightatumber ?additional_seats)) (B (?time (remaining-seats ?timel ?flight_number ?current-seats))))-

Explanation: updatelemainingjeat is a private action which changes the belief regarding remainingjeats.

Finally, the airline agent has two commitment rules:

(COMMIT (?pass REQUEST (IF (B,?p) (INFORM ?t ?pass ?p))) true ?pass (IF (B,?p) (INFORM ?t ?pass ?p)))

(COMMIT (?cust REQUEST (issue_bp ?pass ?flight ?time))

| agent | action |

|---|---|

| smith | (querymhich 1march/1:OO smith airline (18apri1/.7!time (flight sf ny,?!num))) |

| airline | (INFORM 1march/2:OO smith (18april/8:30 (flight: sf ny #354”) |

| airline | (INFORM 1march/2:OO smith (18april/10:00 (flight sf my #293”) |

| airline | (INFURM 1march/2:OO smith (18april/ smith (REQUEST 1march/3:00 airline (jesus_bp smith #354 18april/8:30)) |

| smith | (querymhether 1march/4:OO smith airline ((CMT smith) (issue_bp smith #354 18april/8:30))) |

| airline | (INFORM 1march/5:OO smith (NEIT ((CMT smith) (issueJap smith #354 18april/8:30)))) |

| smith | (REQUEST 1mareh/6:00 airline (issue_bp smith. 8293 18april/10:00)) |

| smith | (queryswhether 1march/7:00 smith airline ((CMT smith) (issuejp smith #293 18april/10:OO))) |

| airline | (INFORM 1march/8:OO smith ((CMT smith) (issue_bp smith #293 18april/10:00))) |

| ... | |

| smith | (INFORM ISapril/Q:OO airline (present smith» |

| airline | (DD 183pri1/9:00 (issue_bp smith #293 18apri1/10:00)) |

(AND (B (?time (remaining_seats ?flight ?n)))

(?n>0)

(NOT ((CMT ?anyone)

(issue_bp ?pass ?anyflight ?time)))) (myself (D0 (+ new 1) (update_remaining_seats ?time ?flight —1)))

(?eust (issue-bp ?pass ?flight ?time)))

In a more realistic example one would have other commitment rules, no- tifying the passenger whether his reservation was confirmed, and the reasons for rejecting it in case it was not accepted. In the current implementation the passenger must query that separately.

This concludes the definition of the simple airline agent. Table 2 is a sample exchange between a passenger, smith, and the airline agent. The messages from the passenger are determined by him; the actions of the airline are initiated by the agent interpreter in response. The times are given in a convenient date/hhzrnm format, rather than the number of seconds that have passed fince 1900.

6.4. Implementation

A prototype AGENT-O interpreter has been implemented in Common Lisp. and has been installed on Sun/Unix. DecStation/Ultrix. and Macintosh computers. Both the interpreter and the programming manual [74] are available to the scientific community. A separate implementation has been developed by Hewlett Packard as part of a joint project to incorporate AOP in the New WaveTM architecture, The interpreter for AGENT-l, which extends AGENT-O in a number of ways (see below), is under development.

7. Agentification

In the previous two sections I discussed the second component of the AOP framework, agent programs and their interpretation. In this section I discuss, briefly, the process of agentification. My purpose in this section is not to make a substantial contribution to the topic. but to clarify some of the issues involved and point to some related work.

Agentitication refers to bridging the gap between the low-level machine process and the intensional level of agent programs. Of course, the interpreter itself is one such bridge, but it requires a direct mapping between the constructs in the agent language and the machine implementing the agent. In particular, it requires explicit representation in the machine of beliefs commitments, and capabilities. When we are the ones creating the agents we indeed have the luxury of incorporating these components into their design in which case the interpreter is adequate. However, we intend AOP as a framework for controlling and coordinating general devices. and those—ears, cameras, digital watches, spreadsheets do not come equipped with beliefs and commitment rules.

Even if we were in a position to persuade (say) General Motors, Finmec- canica, and Matsushita to equip every single product with a mental state. we would be ill-advised to do so. AOP offers a perspective on computation and communication that has its advantages, but it is not proposed as a uniform replacement of other process representations. It would be ridiculous to require that every robot-arm designer augment his differential equations with beliefs, or that the digital-watch design verifier augment finite automata with commitments.

However, releasing the manufacturers from the requirement to supply a mental state creates a gap between the intensional level of agent programs on the one hand. and the mechanistic process representation of a given device on the other hand. The role of the agentifier is to bridge this gap.

We inherit this decoupling ofthe intensional level from the machine level from situated amomala. introduced by Rosensehein in [62] and further developed by him and Kaelbling [34,63]. In situated automata there is a low—level language for describing the device. and another, high-level language for the designer to reason about the device. The compiler takes a program written in the high—level language and produces a description of a device in the low-level language.

Like the “knowledge-based" camp in distributed computation we adopt Rosenschein and Kaelbling’s insight that intensional notions can be viewed as the designer’s way of conceptualizing a device (as was discussed also in the introductory section, in connection with McCarthy’s and Dennett’s ideas). Having accepted this decoupling. however, we depart from situated automata in important ways. First, we consider different high-lcvel and low- level languages. Concerning the high-level language. situated automata has had several versions; published versions have included a knowledge operator (K) and a tense operator (0. or “eventually“). Our intensional language has already been discussed—it is the AGENT—O language defined in the previous section, which is quite richer. In fact, we are currently engaged in enriching the language even further.

The low-level process languages in the two cases are also different. Many process languages exist—synchronous Boolean circuits with or without delays (the choice of situated automata as a process language), finite automata and Turing machines, and various formalisms aimed at capturing concurrency. Our requirements of the process language included the following:

- representation of process time, including real-valued durations,

- asynchronous processes,

- multiple levels of abstraction.

We found that no existing process models met all requirements. and have developed an alternative process model, called temporal automata [15]. The details of temporal automata are not relevant to the current discussion, 50 I will omit them; they appear in [39,40].

The choice of intensional languages and process description is important, but more crucial than anything is the translation process envisioned. As was mentioned, in situated automata the idea is to generate a low-level process model from a high-level, intentional description. In contrast to that, the goal of agentiflcation is to agentify a particular, given machine. The input to the translator will include a description of a machine in the process language, and the output will be an intentional program. From this perspective, the process considered in situated automata is de—agentification. Since we have not tackled the problem of agentification in a substantial way it is premature to assert with certainty how difficult it is. However, I expect that the unconstrained problem will be quite difficult. By this I mean that, given only a particular device (say, a camera) and general constraints on mental state of the sort described in the article it will be hard to generate an intensional description of the device which fully captures is functioning. After all. who is to say where in the camera lie the beliefs? Is it in the state of a particular component or perhaps in a complicated sequence of state changes? It seems to be more fruitful to include in the input information about the location of at least some mental attitudes (“when the light meter registers x, the camera believes that ...".) and attempt to synthesize a high-level program based on this information (this is closer in spirit to Rosenschein’s original writing, but farther from the subsequent work). Again, I stress that we do not have sufficient experience with the problem to report any results.

8. Related work

Except occasionally, I have so far not discussed related past work This body of related work is in fact so rich that in this section I will mention only the most closely related work, and briefly at that. I do not discuss again past work on logics of knowledge and belief, which the logic of mental state extends, since I already did that in the introduction. For the same reason, I will not discuss object-oriented programming and Hewitt‘s work. The following is ordered in what I see as decreasing relevance to, and overlap with, AOP. The order (or. for that matter, inclusion in the list) reflects no other ranking, nor is it implied that researchers high up on the list would necessarily endorse any part of AOP.

- McCarthy’s work on ElephantZOOO [48]. This language under develop- ment is also based on speech acts. and the airline-reservation scenario I have discussed is due to McCarthy. One issue explored in connection with E1ephant2000 is the distinction between illocutionary and per— Iocutionary specifications which I have not addressed. In contrast to AOP, in Elephant2000 there is currently no explicit representation of state, mental or otherwise. Conditional statements therefore refer to the history of past communication rather than to the current mental state.