2015 AHierarchicalRecurrentEncoderDe

- (Sordoni et al., 2015) ⇒ Alessandro Sordoni, Yoshua Bengio, Hossein Vahabi, Christina Lioma, Jakob Grue Simonsen, and Jian-Yun Nie. (2015). “A Hierarchical Recurrent Encoder-Decoder for Generative Context-Aware Query Suggestion.” In: Proceedings of the 24th ACM International Conference on Information and Knowledge Management (CIKM 2015). DOI:10.1145/2806416.2806493. arXiv:1507.02221.

Subject Headings: Hierarchical Recurrent Encoder-Decoder Neural Network Algorithm, Hierarchical Recurrent Encoder-Decoder (HRED) Neural Network.

Notes

Cited By

- Google Scholar: ~389 Citations, Retrieved: 2021-03-21.

Quotes

Author Keywords

Abstract

Users may strive to formulate an adequate textual query for their information need. Search engines assist the users by presenting query suggestions. To preserve the original search intent, suggestions should be context-aware and account for the previous queries issued by the user. Achieving context awareness is challenging due to data sparsity. We present a probabilistic suggestion model that is able to account for sequences of previous queries of arbitrary lengths. Our novel hierarchical recurrent encoder-decoder architecture allows the model to be sensitive to the order of queries in the context while avoiding data sparsity. Additionally, our model can suggest for rare, or long-tail, queries. The produced suggestions are synthetic and are sampled one word at a time, using computationally cheap decoding techniques. This is in contrast to current synthetic suggestion models relying upon machine learning pipelines and hand-engineered feature sets. Results show that it outperforms existing context-aware approaches in a next query prediction setting. In addition to query suggestion, our model is general enough to be used in a variety of other applications.

1. Introduction

2. Key Idea

3. Mathematical Framework

3.1 Recurrent Neural Network

3.2 Architecture

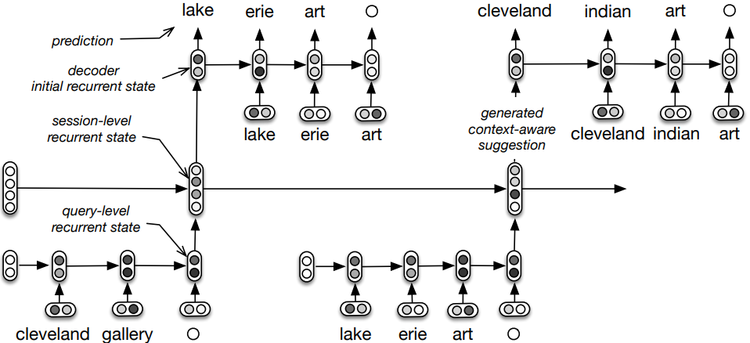

Our hierarchical recurrent encoder-decoder (HRED) is pictured in Figure 3. Given a query in the session, the model encodes the information seen up to that position and tries to predict the following query. The process is iterated throughout all the queries in the session. In the forward pass, the model computes the query-level encodings, the session-level recurrent states and the log-likelihood of each query in the session given the previous ones. In the backward pass, the gradients are computed and the parameters are updated.
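The forward pass described above can be illustrated with a minimal sketch in plain Python. It is not the authors' implementation. For brevity it swaps in simple tanh recurrent units rather than the gated units used in the paper, uses randomly initialised weights, and omits the backward pass. The class name `TinyHRED`, all dimensions, and the convention that token id 0 marks the end of a query are assumptions made for illustration. The first query is only encoded, not scored, which is a simplifying choice of this sketch.

```python
import math
import random

def mat(rows, cols, rng):
    """Small random matrix stored as a list of rows."""
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

def matvec(W, x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def rnn_step(W_in, W_rec, x, h):
    """One tanh recurrent update (a stand-in for a gated unit)."""
    a, b = matvec(W_in, x), matvec(W_rec, h)
    return [math.tanh(ai + bi) for ai, bi in zip(a, b)]

def log_softmax(z):
    m = max(z)
    lse = m + math.log(sum(math.exp(v - m) for v in z))
    return [v - lse for v in z]

class TinyHRED:
    """Hypothetical toy model: query encoder, session RNN, next-query decoder."""

    def __init__(self, vocab_size, emb=4, q_dim=5, s_dim=6, seed=0):
        rng = random.Random(seed)
        self.q_dim, self.s_dim = q_dim, s_dim
        self.E = mat(vocab_size, emb, rng)          # word embeddings
        self.Wq_in = mat(q_dim, emb, rng)           # query-level encoder
        self.Wq_rec = mat(q_dim, q_dim, rng)
        self.Ws_in = mat(s_dim, q_dim, rng)         # session-level encoder
        self.Ws_rec = mat(s_dim, s_dim, rng)
        self.Wd_init = mat(q_dim, s_dim, rng)       # session state -> decoder init
        self.Wd_in = mat(q_dim, emb, rng)           # decoder
        self.Wd_rec = mat(q_dim, q_dim, rng)
        self.W_out = mat(vocab_size, q_dim, rng)    # decoder state -> word scores

    def encode_query(self, query):
        """Query-level encoding: read the words, keep the last hidden state."""
        h = [0.0] * self.q_dim
        for w in query:
            h = rnn_step(self.Wq_in, self.Wq_rec, self.E[w], h)
        return h

    def query_log_likelihood(self, s, query):
        """Log-probability of `query`, word by word, given session state `s`."""
        d = [math.tanh(v) for v in matvec(self.Wd_init, s)]
        total = 0.0
        for w in query:
            total += log_softmax(matvec(self.W_out, d))[w]
            d = rnn_step(self.Wd_in, self.Wd_rec, self.E[w], d)
        return total

    def forward(self, session):
        """Return log p(query_m | query_1..m-1) for every m >= 2."""
        s = [0.0] * self.s_dim
        log_likelihoods = []
        for m, query in enumerate(session):
            if m > 0:  # score this query from the state summarising earlier ones
                log_likelihoods.append(self.query_log_likelihood(s, query))
            q = self.encode_query(query)
            s = rnn_step(self.Ws_in, self.Ws_rec, q, s)  # update session state
        return log_likelihoods

# A session of three queries over a 5-word vocabulary; 0 is the assumed
# end-of-query token.
model = TinyHRED(vocab_size=5)
session = [[1, 2, 0], [1, 3, 0], [4, 0]]
log_likelihoods = model.forward(session)
```

Here `log_likelihoods` holds one value per predicted query (two for a three-query session). Training would sum these values over sessions and maximise the total by gradient ascent, which corresponds to the backward pass mentioned in the quote.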


3.2.1 Query-Level Encoding

3.2.2 Session-Level Encoding

3.2.3 Next-Query Decoding

3.3 Learning

3.4 Generation and Rescoring

4. Experiments

5. Related Works

6. Conclusion

Acknowledgments

References

BibTeX

@inproceedings{2015_AHierarchicalRecurrentEncoderDe,
  author    = {Alessandro Sordoni and Yoshua Bengio and Hossein Vahabi and Christina Lioma and Jakob Grue Simonsen and Jian-Yun Nie},
  editor    = {James Bailey and Alistair Moffat and Charu C. Aggarwal and Maarten de Rijke and Ravi Kumar and Vanessa Murdock and Timos K. Sellis and Jeffrey Xu Yu},
  title     = {A Hierarchical Recurrent Encoder-Decoder for Generative Context-Aware Query Suggestion},
  booktitle = {Proceedings of the 24th ACM International Conference on Information and Knowledge Management (CIKM 2015)},
  pages     = {553--562},
  publisher = {ACM},
  year      = {2015},
  url       = {https://doi.org/10.1145/2806416.2806493},
  doi       = {10.1145/2806416.2806493},
}

@article{2015_AHierarchicalRecurrentEncoderDe,
  author        = {Alessandro Sordoni and Yoshua Bengio and Hossein Vahabi and Christina Lioma and Jakob Grue Simonsen and Jian-Yun Nie},
  title         = {A Hierarchical Recurrent Encoder-Decoder For Generative Context-Aware Query Suggestion},
  journal       = {CoRR},
  volume        = {abs/1507.02221},
  year          = {2015},
  url           = {http://arxiv.org/abs/1507.02221},
  archivePrefix = {arXiv},
  eprint        = {1507.02221},
}


| | Author | volume | Date Value | title | type | journal | titleUrl | doi | note | year |
|---|---|---|---|---|---|---|---|---|---|---|
| 2015 AHierarchicalRecurrentEncoderDe | Yoshua Bengio, Hossein Vahabi, Alessandro Sordoni, Jian-Yun Nie, Christina Lioma, Jakob Grue Simonsen | | | A Hierarchical Recurrent Encoder-Decoder for Generative Context-Aware Query Suggestion | | | | | | 2015 |