2018 IndraAWordEmbeddingandSemanticR

- (Sales et al., 2018) ⇒ Juliano Efson Sales, Leonardo Souza, Siamak Barzegar, Brian Davis, Andre Freitas, and Siegfried Handschuh. (2018). “Indra: A Word Embedding and Semantic Relatedness Server.” In: Proceedings of the Eleventh International Conference on Language Resources and Evaluation (LREC 2018).

Subject Headings: Word Embedding System; Indra.

Notes

- Online Resource(s):

Cited By

- Google Scholar: ~ 15 Citations

Quotes

Author Keywords

Abstract

In recent years, word embedding/distributional semantic models have evolved to become a fundamental component in many natural language processing (NLP) architectures due to their ability to capture and quantify semantic associations at scale. Word embedding models can be used to address recurring tasks in NLP, such as lexical and semantic generalisation in machine learning tasks, finding similar or related words and computing the semantic relatedness of terms. However, building and consuming specific word embedding models requires setting a large number of configuration options, such as corpus-dependent parameters, distance measures and compositional models. Despite their increasing relevance as a component in NLP architectures, existing frameworks offer limited support for systematically building, parametrising, comparing and evaluating different models. To answer this demand, this paper describes INDRA, a multi-lingual word embedding/distributional semantics framework which supports the creation, use and evaluation of word embedding models. In addition to the tool, INDRA also shares more than 65 pre-computed models in 14 languages.

1. Introduction

Word embedding is a popular semantic model that represents words and sentences in computational linguistics systems and machine learning models. In recent years, a large set of algorithms for both generating and consuming word embedding models (WEMs) has been proposed, including corpus pre-processing strategies, WEM algorithms or weighting schemes, vector composition methods and distance measures (Turney and Pantel, 2010; Lapesa and Evert, 2014; Mitchell and Lapata, 2010). Determining the optimal set of strategies for a given problem requires a tool that facilitates the exploration of this configuration space.
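To make "vector composition" and "distance measures" concrete, the sketch below composes multi-word terms by element-wise addition and compares them with cosine similarity. It is a minimal illustration under stated assumptions, not INDRA's implementation: additive composition is only one of the compositional models discussed in the literature cited above, the names `compose_sum`, `cosine` and `relatedness` are hypothetical, and the toy vectors are invented.

```python
import math
from typing import Callable, Dict, List, Sequence

Vector = List[float]


def compose_sum(vectors: Sequence[Vector]) -> Vector:
    """Additive composition: the element-wise sum of the word vectors."""
    if not vectors:
        raise ValueError("need at least one vector to compose")
    return [sum(components) for components in zip(*vectors)]


def cosine(u: Vector, v: Vector) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def relatedness(
    term1: str,
    term2: str,
    model: Dict[str, Vector],
    measure: Callable[[Vector, Vector], float] = cosine,
) -> float:
    """Compose each (possibly multi-word) term, then compare the two vectors."""
    v1 = compose_sum([model[w] for w in term1.split()])
    v2 = compose_sum([model[w] for w in term2.split()])
    return measure(v1, v2)


# Toy 3-dimensional vectors with invented values (not taken from any INDRA model).
toy_model: Dict[str, Vector] = {
    "car": [1.0, 0.0, 0.0],
    "automobile": [0.9, 0.1, 0.0],
    "red": [0.0, 1.0, 0.0],
    "banana": [0.0, 0.0, 1.0],
}

print(round(relatedness("car", "automobile", toy_model), 3))
print(round(relatedness("red car", "automobile", toy_model), 3))
print(relatedness("car", "banana", toy_model))
```

Passing a different function as `measure` swaps the distance measure without touching the composition step, which is the kind of independent parameter a configuration-exploration tool needs to vary.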

Furthermore, given the applicability and maturity achieved by these systems and models, they have been promoted from academic prototypes to industry-level applications (Loebbecke and Picot, 2015; Hengstler et al., 2016; Moro et al., 2015). In this production scenario, a candidate tool should be able to scale to a large number of requests and to the construction of models from large corpora, making use of parallel execution and traceability. From a functional point of view, integrated corpus pre-processing, generation of both prediction-based and count-based models, and unified access as a service are key features.

To support this demand, this paper describes INDRA, a word embedding/distributional semantics framework which supports the creation, use and evaluation of word embedding models. INDRA provides a software infrastructure to facilitate the experimentation and customisation of multilingual WEMs, allowing end-users and applications to consume and operate over multiple word embedding spaces as a service or library.

INDRA is available from two repositories, INDRA (github.com/Lambda-3/Indra) and INDRAINDEXER (github.com/Lambda-3/IndraIndexer), both licensed as open-source software. Additionally, INDRA provides a Python client (pyindra), available via pip and from github.com/Lambda-3/pyindra.
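Because INDRA can be consumed as a web service, a client mainly needs to send term pairs together with the model configuration to score them against. The sketch below builds, but does not send, such a JSON POST request using only the Python standard library. The endpoint URL, the field names (`corpus`, `model`, `language`, `scoreFunction`, `pairs`, `t1`, `t2`) and the example values are assumptions for illustration; check them against the INDRA repository documentation, or use pyindra, before relying on them.

```python
import json
import urllib.request
from typing import Iterable, Tuple

# Placeholder URL and assumed JSON field names; verify them against the INDRA
# documentation for the server you deploy. Nothing is sent over the network here.
ASSUMED_ENDPOINT = "http://localhost:8080/relatedness"


def build_relatedness_request(
    pairs: Iterable[Tuple[str, str]],
    corpus: str,
    model: str,
    language: str,
    score_function: str = "COSINE",
) -> urllib.request.Request:
    """Build (but do not send) a JSON POST request asking for pair relatedness."""
    payload = {
        "corpus": corpus,
        "model": model,
        "language": language,
        "scoreFunction": score_function,
        "pairs": [{"t1": t1, "t2": t2} for t1, t2 in pairs],
    }
    return urllib.request.Request(
        ASSUMED_ENDPOINT,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Illustrative configuration values (hypothetical, not a confirmed model id).
request = build_relatedness_request(
    [("mother", "love"), ("santa claus", "love")],
    corpus="wiki-2018",
    model="W2V",
    language="EN",
)
# Against a running server, the request could be sent with urllib.request.urlopen(request).
```

Keeping the model configuration in the request body, rather than in the server, reflects the paper's point that one service can expose many embedding spaces side by side.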

2. Related Work

3. Implementation Design

The INDRA PROJECT is divided into two major modules: INDRAINDEXER and INDRA. INDRAINDEXER is responsible for the generation of the models, whereas INDRA implements the consumption methods.

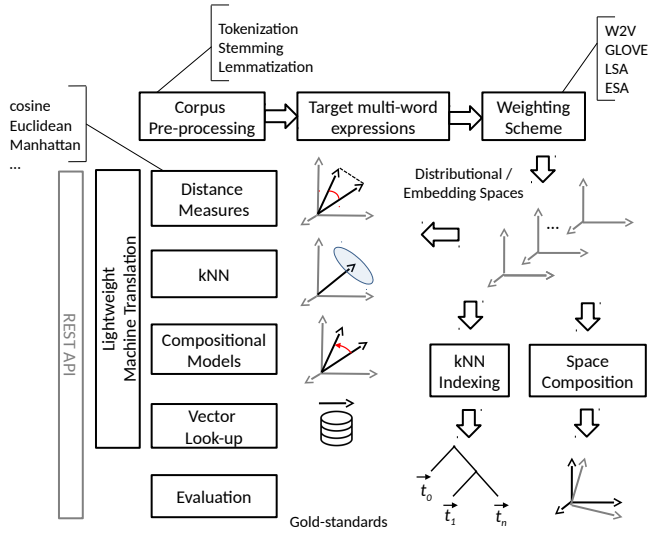

INDRA is designed to be both a stand-alone library and a web service. Figure 1 depicts the main components of its architecture. INDRAINDEXER supports the generation of WEMs directly from text files (Wikipedia-dump or plain-text formats), covering corpus pre-processing, multiword expression identification and the model generation itself. INDRA dynamically builds its query pipeline from the metadata produced during model generation. This strategy guarantees that the same set of pre-processing operations is consistently applied to the input query. Additionally, translation-based word embedding (Freitas et al., 2016; Barzegar et al., 2018b) can be conveniently activated in the pipeline, as described in Section 4.
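The idea of rebuilding the query pipeline from model metadata can be sketched as follows: the indexer records which pre-processing steps it applied, and the consumer replays exactly those steps on incoming queries. The metadata keys (`lowercase`, `remove_punctuation`, `multiword_expressions`) and the greedy longest-match joining of multiword expressions are hypothetical choices for illustration; INDRA's actual metadata format and pre-processing steps may differ.

```python
import re
from typing import Callable, Dict, List

Tokens = List[str]
PUNCTUATION = re.compile(r"[^\w\s]")


def join_multiword_expressions(tokens: Tokens, mwes: set, max_len: int) -> Tokens:
    """Greedily replace the longest known multiword expression with one token."""
    out: Tokens = []
    i = 0
    while i < len(tokens):
        for n in range(min(max_len, len(tokens) - i), 1, -1):
            candidate = tuple(tokens[i:i + n])
            if candidate in mwes:
                out.append("_".join(candidate))
                i += n
                break
        else:  # no expression starts at position i
            out.append(tokens[i])
            i += 1
    return out


def build_pipeline(metadata: Dict) -> Callable[[str], Tokens]:
    """Assemble query pre-processing from (hypothetical) model metadata keys."""
    steps: List[Callable[[Tokens], Tokens]] = []
    if metadata.get("lowercase", False):
        steps.append(lambda toks: [t.lower() for t in toks])
    if metadata.get("remove_punctuation", False):
        steps.append(lambda toks: [s for s in (PUNCTUATION.sub("", t) for t in toks) if s])
    mwes = {tuple(m.split()) for m in metadata.get("multiword_expressions", [])}
    if mwes:
        max_len = max(len(m) for m in mwes)
        steps.append(lambda toks: join_multiword_expressions(toks, mwes, max_len))

    def pipeline(text: str) -> Tokens:
        tokens = text.split()
        for step in steps:  # same order as at indexing time
            tokens = step(tokens)
        return tokens

    return pipeline


# Metadata as an indexer might have recorded it (invented example).
model_metadata = {
    "lowercase": True,
    "remove_punctuation": True,
    "multiword_expressions": ["new york", "santa claus"],
}
query_pipeline = build_pipeline(model_metadata)
print(query_pipeline("Santa Claus visited New York!"))
```

Deriving the steps from stored metadata, instead of configuring them separately at query time, is what keeps a query such as "New York!" aligned with the `new_york` token the model was trained on.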


Different languages, domains and application scenarios require different parametrisations of the underlying embedding models. Together with the availability of pre-generated models, INDRA's system architecture favours the exploration of a large grid of parameters. INDRA currently shares more than 65 pre-computed models, which vary in language, model algorithm and corpus (general-purpose and domain-specific). The list of available models is in the GitHub project's Wiki.
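Exploring "a large grid of parameters" amounts to enumerating the Cartesian product of the configuration dimensions and scoring each combination. The sketch below shows that enumeration; the grid values, the `evaluate` callback and the function names are illustrative assumptions, not INDRA's list of shared models or its evaluation API.

```python
from itertools import product
from typing import Callable, Dict, Iterator, List

Config = Dict[str, str]

# Illustrative dimensions only; see the project's Wiki for the real model list.
GRID: Dict[str, List[str]] = {
    "language": ["EN", "DE", "PT"],
    "model": ["W2V", "GLOVE", "LSA"],
    "corpus": ["wiki-2018"],
    "score_function": ["COSINE", "EUCLIDEAN"],
}


def iter_configurations(grid: Dict[str, List[str]]) -> Iterator[Config]:
    """Yield every combination of grid values as a {dimension: value} dict."""
    keys = list(grid)
    for values in product(*(grid[k] for k in keys)):
        yield dict(zip(keys, values))


def best_configuration(grid: Dict[str, List[str]], evaluate: Callable[[Config], float]) -> Config:
    """Return the first configuration with the highest evaluation score."""
    return max(iter_configurations(grid), key=evaluate)


configs = list(iter_configurations(GRID))
print(len(configs))  # 3 * 3 * 1 * 2 combinations
print(configs[0])

# A stand-in evaluation; in practice this would be, e.g., correlation with a
# human-rated relatedness benchmark computed for each configuration.
toy_score = lambda c: 1.0 if (c["model"], c["language"]) == ("GLOVE", "DE") else 0.0
print(best_configuration(GRID, toy_score))
```

The grid grows multiplicatively with every dimension added, which is one reason having pre-computed models to select from, rather than training each combination, makes this kind of exploration practical.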

4. Use Examples

5. Python Client

6. Summary

7. Acknowledgment

References

BibTeX

@inproceedings{DBLP:conf/lrec/SalesSBDFH18,

author = {Juliano Efson Sales and

Leonardo Souza and

Siamak Barzegar and

Brian Davis and

Andre Freitas and

Siegfried Handschuh},

title = {Indra: A Word Embedding and Semantic Relatedness Server},

booktitle = {Proceedings of the Eleventh International Conference on Language Resources

and Evaluation (LREC 2018)},

publisher = {European Language Resources Association (ELRA)},

year = {2018},

url = {http://www.lrec-conf.org/proceedings/lrec2018/summaries/914.html},

}

| Page | Author | Title | Year |
|---|---|---|---|
| 2018 IndraAWordEmbeddingandSemanticR | Brian Davis, Siegfried Handschuh, Juliano Efson Sales, Leonardo Souza, Siamak Barzegar, Andre Freitas | Indra: A Word Embedding and Semantic Relatedness Server | 2018 |