Deep Residual Neural Network

A Deep Residual Neural Network is a Residual Neural Network (ResNet) that is a Deep Neural Network.

- Context:

- It ranges from being an 18-layer to being a 152-layer deep convolutional neural network.

- Example(s):

- a ResNet-34 model such as:

`torchvision.models.resnet34(pretrained=False, **kwargs)` [1]

- a ResNet-50 model such as:

- a ResNet-101 model such as:

- a ResNet-152 model such as:

- a He-Zhang-Ren-Sun Deep Residual Network (He et al., 2016a; 2016b),

- a Multi-Scale Residual Network (MSRN) (Li et al., 2018),

- a Spec-ResNet (Alzantot et al., 2019),

- a Wide Residual Network (WRN) (Zagoruyko & Komodakis, 2016),

- …

- Counter-Example(s):

- an AlexNet,

- a DenseNet,

- a GoogLeNet,

- an InceptionV3,

- a LeNet-5,

- a MatConvNet,

- a SqueezeNet,

- a VGG CNN,

- a ZF Net.

- See: Convolutional Neural Network, Machine Learning, Deep Learning, Machine Vision.
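The 18-to-152-layer range in the context above follows from how the standard ImageNet ResNet variants are assembled. The sketch below counts weighted layers under the configuration reported in He et al. (2016): one stem convolution, four stages of residual blocks, and one final fully connected layer. The names `RESNET_CONFIGS` and `count_layers` are illustrative and not part of any library; convolutions on projection shortcuts are not counted, which is the usual naming convention.

```python
# Blocks per stage and convolutions per block for the ImageNet ResNets
# described in He et al. (2016). Basic blocks hold two 3x3 convolutions;
# bottleneck blocks hold three (1x1, 3x3, 1x1).
RESNET_CONFIGS = {
    "ResNet-18": ([2, 2, 2, 2], 2),
    "ResNet-34": ([3, 4, 6, 3], 2),
    "ResNet-50": ([3, 4, 6, 3], 3),
    "ResNet-101": ([3, 4, 23, 3], 3),
    "ResNet-152": ([3, 8, 36, 3], 3),
}


def count_layers(blocks_per_stage, convs_per_block):
    """Count weighted layers: stem conv + block convs + final FC layer."""
    return 1 + sum(blocks_per_stage) * convs_per_block + 1


for name, (stages, convs) in RESNET_CONFIGS.items():
    print(name, count_layers(stages, convs))
```

Each computed count matches the number in the model's name, which is why ResNet-34 and ResNet-50 can share the same stage layout while differing in depth.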

References

2019

- (Alzantot et al., 2019) ⇒ Moustafa Alzantot, Ziqi Wang, and Mani B. Srivastava. (2019). “Deep Residual Neural Networks for Audio Spoofing Detection.” In: Proceedings of 20th Annual Conference of the International Speech Communication Association (Interspeech 2019).

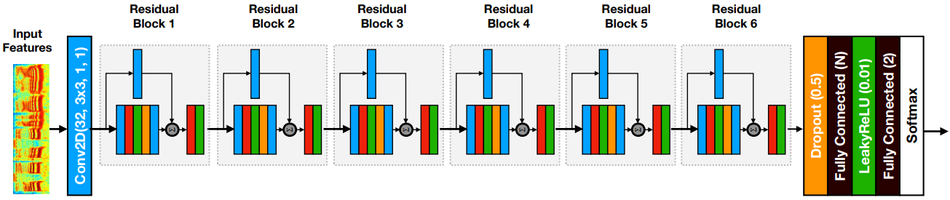

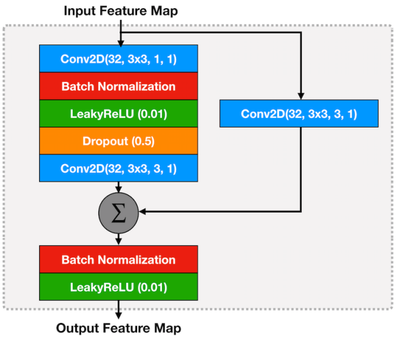

- QUOTE: Figure 1 shows the architecture of the Spec-ResNet model which takes the log-magnitude STFT as input features. First, the input is treated as a single channel image and passed through a 2D convolution layer with 32 filters, where filter size = 3 × 3, stride length = 1 and padding = 1. The output volume of the first convolution layer has 32 channels and is passed through a sequence of 6 residual blocks. The output from the last residual block is fed into a dropout layer (with dropout rate = 50%; Srivastava et al., 2014) followed by a hidden fully connected (FC) layer with leaky-ReLU (He et al., 2015) activation function ($\alpha = 0.01$). Outputs from the hidden FC layer are fed into another FC layer with two units that produce classification logits. The logits are finally converted into a probability distribution using a final softmax layer.
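Two details in the quoted description can be checked with simple arithmetic: a 3 × 3 convolution with stride 1 and padding 1 preserves the spatial size of its input, and a softmax turns the two logits into a probability distribution. The following is a minimal sketch in plain Python. The function names, the example input size of 257 × 400, the example logits, and the assumption of one bias term per filter are illustrative choices that do not come from the paper.

```python
import math


def conv_output_size(size, kernel, stride, padding):
    """Output length along one axis of a 2D convolution (standard formula)."""
    return (size + 2 * padding - kernel) // stride + 1


def conv_param_count(in_channels, out_channels, kernel):
    """Weights plus one bias per filter (bias is an assumption here)."""
    return out_channels * (in_channels * kernel * kernel) + out_channels


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical spectrogram size (frequency bins x frames), not from the paper.
height, width = 257, 400
print(conv_output_size(height, 3, 1, 1), conv_output_size(width, 3, 1, 1))
print(conv_param_count(1, 32, 3))  # first layer: 1 input channel, 32 filters
print(softmax([1.2, -0.3]))        # hypothetical logits for the two classes
```

Because the first convolution keeps the height and width unchanged, only the channel count grows from 1 to 32 before the residual blocks.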

2018a

- (Li et al., 2018) ⇒ Juncheng Li, Faming Fang, Kangfu Mei, and Guixu Zhang. (2018). “Multi-scale Residual Network for Image Super-Resolution.” In: Proceedings of 15th European Conference in Computer Vision (ECCV 2018) - Part VIII.

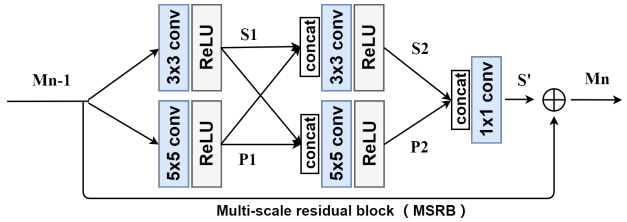

- QUOTE: In order to detect the image features at different scales, we propose multi-scale residual block (MSRB). Here we will provide a detailed description of this structure. As shown in Fig. 3, our MSRB contains two parts: multi-scale features fusion and local residual learning.

2018b

- (CS231N, 2018) ⇒ https://cs231n.github.io/convolutional-networks/#case Retrieved 2018-09-30

- QUOTE: There are several architectures in the field of Convolutional Networks that have a name. The most common are:

- (...)

- ResNet. Residual Network developed by Kaiming He et al. was the winner of ILSVRC 2015. It features special skip connections and a heavy use of batch normalization. The architecture is also missing fully connected layers at the end of the network. The reader is also referred to Kaiming’s presentation (video, slides), and some recent experiments that reproduce these networks in Torch. ResNets are currently by far state of the art Convolutional Neural Network models and are the default choice for using ConvNets in practice (as of May 10, 2016). In particular, also see more recent developments that tweak the original architecture from Kaiming He et al. Identity Mappings in Deep Residual Networks (published March 2016).

2017

- (Li, Johnson & Yeung, 2017) ⇒ Fei-Fei Li, Justin Johnson, and Serena Yeung. (2017). “Lecture 9: CNN Architectures.”

2016a

- (He et al., 2016) ⇒ Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. (2016). “Deep Residual Learning for Image Recognition.” In: Proceedings 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2016).

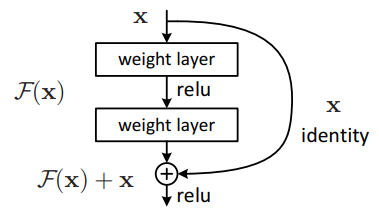

- QUOTE: The formulation of $F(x) +x$ can be realized by feedforward neural networks with “shortcut connections” (Fig. 2). Shortcut connections (Bishop, 1995; Ripley, 1996, Venables & Ripley, 1999) are those skipping one or more layers. In our case, the shortcut connections simply perform identity mapping, and their outputs are added to the outputs of the stacked layers (Fig. 2). Identity shortcut connections add neither extra parameter nor computational complexity. The entire network can still be trained end-to-end by SGD with backpropagation, and can be easily implemented using common libraries (e.g., Caffe Jia et al., 2014) without modifying the solvers.
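The identity shortcut in the quote can be illustrated without any deep learning library. The sketch below is a toy under stated assumptions: it uses small fully connected layers in place of convolutions, plain Python lists in place of tensors, and hypothetical names (`relu`, `linear`, `residual_block`). It computes $F(x) + x$ and shows that the shortcut itself involves no weights.

```python
def relu(vec):
    return [max(0.0, v) for v in vec]


def linear(weights, vec):
    """Matrix-vector product; `weights` is a list of rows."""
    return [sum(w * v for w, v in zip(row, vec)) for row in weights]


def residual_block(x, w1, w2):
    """Return F(x) + x, with F(x) = w2 * relu(w1 * x) as a toy stand-in
    for the stacked layers. The ReLU that the original paper applies
    after the addition is omitted to keep the focus on the shortcut."""
    fx = linear(w2, relu(linear(w1, x)))
    # Identity shortcut: element-wise addition, no parameters involved.
    return [f + xi for f, xi in zip(fx, x)]


x = [1.0, -2.0, 0.5]
zeros = [[0.0] * 3 for _ in range(3)]
# With all-zero weights F(x) = 0, so the block reduces to the identity mapping.
print(residual_block(x, zeros, zeros))

w1 = [[0.5, 0.0, 0.0], [0.0, 0.5, 0.0], [0.0, 0.0, 0.5]]
w2 = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
print(residual_block(x, w1, w2))
```

The only parameters are `w1` and `w2`, which belong to the stacked layers; removing the shortcut would change the output but not the parameter count.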

2016b

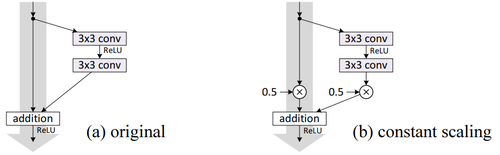

- (He et al., 2016) ⇒ Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. (2016). “Identity Mappings in Deep Residual Networks.” In: Proceedings of the 14th European Conference on Computer Vision (ECCV 2016) Part IV. DOI:10.1007/978-3-319-46493-0_38.

- QUOTE: Though our above analysis is driven by identity $f$, the experiments in this section are all based on $f = ReLU$ as in (He et al., 2016); we address identity $f$ in the next section. Our baseline ResNet-110 has 6.61% error on the test set. The comparisons of other variants (Fig. 2 and Table 1) are summarized as follows:

(...)
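For context, the role of $f$ in the quote can be made explicit. In the notation of the cited paper, a residual unit has the general form below, where $h$ is the shortcut mapping, $\mathcal{F}$ is the residual function, and $f$ is the operation applied after the addition. The quoted experiments keep $f = ReLU$.

```latex
\begin{aligned}
\mathbf{y}_{l}   &= h(\mathbf{x}_{l}) + \mathcal{F}(\mathbf{x}_{l}, \mathcal{W}_{l}), \\
\mathbf{x}_{l+1} &= f(\mathbf{y}_{l}).
\end{aligned}
```

If both $h$ and $f$ are identity mappings, this reduces to $\mathbf{x}_{l+1} = \mathbf{x}_{l} + \mathcal{F}(\mathbf{x}_{l}, \mathcal{W}_{l})$, so the input passes through the unit unchanged apart from the added residual.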

2016c

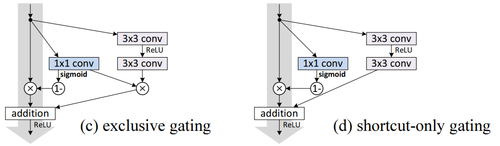

- (Zagoruyko & Komodakis, 2016) ⇒ Sergey Zagoruyko, and Nikos Komodakis. (2016). “Wide Residual Networks.” In: Proceedings of the British Machine Vision Conference 2016 (BMVC 2016).

- QUOTE: Residual block with identity mapping can be represented by the following formula:

$$\mathbf{x}_{l+1}=\mathbf{x}_{l}+\mathcal{F}\left(\mathbf{x}_{l}, \mathcal{W}_{l}\right) \tag{1}$$

- where $\mathbf{x}_{l+1}$ and $\mathbf{x}_{l}$ are input and output of the $l$-th unit in the network, $\mathcal{F}$ is a residual function and $\mathcal{W}_{l}$ are parameters of the block. Residual network consists of sequentially stacked residual block.
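Because each block adds its residual to its input, stacking blocks as in Eq. (1) means the output of the last block equals the first input plus the sum of all residuals computed along the way. The sketch below checks this numerically in plain Python. The scalar state, the toy residual function `residual_fn`, and the weights are hypothetical choices for illustration only.

```python
def residual_fn(x, w):
    """Toy residual function F(x, W): a scaled ReLU on a scalar."""
    return w * max(0.0, x)


def run_stack(x0, weights):
    """Apply x_{l+1} = x_l + F(x_l, W_l) once per block.

    Returns the final output and the list of residuals that were added."""
    x, residuals = x0, []
    for w in weights:
        r = residual_fn(x, w)
        residuals.append(r)
        x = x + r
    return x, residuals


x0 = 2.0
weights = [0.5, -0.25, 1.0]  # hypothetical per-block parameters
x_out, residuals = run_stack(x0, weights)
print(x_out, residuals)
# The stacked output is the input plus the accumulated residuals.
print(abs(x_out - (x0 + sum(residuals))) < 1e-12)
```

Each residual depends on the running state, so the blocks are not independent, but the additive structure still gives the input a direct path to the output.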
