2016 IdentityMappingsinDeepResidualN

- (He et al., 2016) ⇒ Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. (2016). “Identity Mappings in Deep Residual Networks.” In: Proceedings of the 14th European Conference on Computer Vision (ECCV 2016) Part IV. DOI:10.1007/978-3-319-46493-0_38.

Subject Headings: Deep Residual Network, GPT-2.

Notes

- Computing Resource(s):

- Link(s):

Cited By

- Google Scholar: ~ 3,756 Citations, Retrieved: 2020-06-25.

- Semantic Scholar: ~ 3,233 Citations, Retrieved: 2020-06-25.

- MS Academic: ~ 3,652 Citations, Retrieved: 2020-06-25.

Quotes

Author Keywords

Abstract

Deep residual networks have emerged as a family of extremely deep architectures showing compelling accuracy and nice convergence behaviors. In this paper, we analyze the propagation formulations behind the residual building blocks, which suggest that the forward and backward signals can be directly propagated from one block to any other block, when using identity mappings as the skip connections and after-addition activation. A series of ablation experiments support the importance of these identity mappings. This motivates us to propose a new residual unit, which makes training easier and improves generalization. We report improved results using a 1001-layer ResNet on CIFAR-10 (4.62% error) and CIFAR-100, and a 200-layer ResNet on ImageNet. Code is available at: https://github.com/KaimingHe/resnet-1k-layers
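The abstract's claim that signals propagate directly between blocks can be illustrated with a small numeric sketch. It assumes an identity skip connection and an identity after-addition function, so each unit computes x_{l+1} = x_l + F(x_l). The residual branch `residual_branch` below is a hypothetical stand-in (an elementwise tanh), not the convolutional branch used in the paper, and all names are illustrative.

```python
import math
import random


def residual_branch(x, weights):
    """Hypothetical residual function F: elementwise tanh of a scaled input."""
    return [math.tanh(w * xi) for w, xi in zip(weights, x)]


def forward_identity(x, all_weights):
    """Apply units x_{l+1} = x_l + F(x_l, W_l) (identity skip, identity f)."""
    branch_outputs = []
    for weights in all_weights:
        fx = residual_branch(x, weights)
        branch_outputs.append(fx)
        x = [xi + fi for xi, fi in zip(x, fx)]
    return x, branch_outputs


random.seed(0)
dim, depth = 4, 10
x0 = [random.uniform(-1, 1) for _ in range(dim)]
all_weights = [[random.uniform(-1, 1) for _ in range(dim)] for _ in range(depth)]

x_last, branch_outputs = forward_identity(x0, all_weights)

# Unrolled form: the last feature equals the first feature plus the sum of
# all residual-branch outputs, so x0 reaches the output unmodified.
unrolled = [x0[i] + sum(fx[i] for fx in branch_outputs) for i in range(dim)]
max_gap = max(abs(a - b) for a, b in zip(x_last, unrolled))

# Contrast (assumption for illustration): with a scaled skip h(x) = lam * x,
# the x0 term would instead be multiplied by lam ** depth.
lam = 0.5
scaled_skip_factor = lam ** depth
```

Here `max_gap` is zero up to floating-point rounding, which is the additive structure the abstract refers to. The `scaled_skip_factor` line shows why a non-identity skip is a concern at depth: even a mild scaling of 0.5 shrinks the direct path by a factor of 1024 after ten units.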

1. Introduction

2. Analysis of Deep Residual Networks

3. On the Importance of Identity Skip Connections

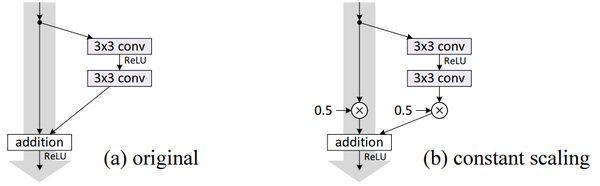

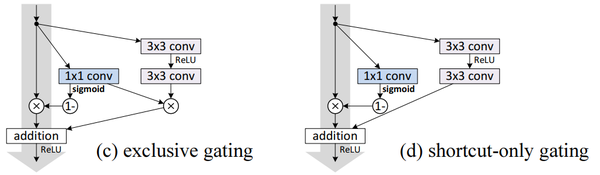

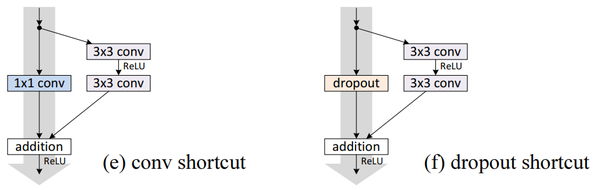

3.1 Experiments on Skip Connections

(...)

Though our above analysis is driven by identity $f$, the experiments in this section are all based on $f = ReLU$ as in (He et al., 2016); we address identity $f$ in the next section. Our baseline ResNet-110 has 6.61% error on the test set. The comparisons of other variants (Fig. 2 and Table 1) are summarized as follows:
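The symbols in this passage can be read against the general form of a Residual Unit. The equations below are reproduced from memory of the paper's analysis section, not from the text quoted on this page, so the exact typography is an assumption:

$$
\begin{aligned}
y_l &= h(x_l) + \mathcal{F}(x_l, \mathcal{W}_l), \\
x_{l+1} &= f(y_l).
\end{aligned}
$$

Here $h$ is the skip connection, $\mathcal{F}$ is the residual function with weights $\mathcal{W}_l$, and $f$ is the function applied after the addition. The original ResNet corresponds to identity $h$ with $f = ReLU$, while the analysis with "identity $f$" assumes that both $h$ and $f$ are identity mappings.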

(...)

(...)

4. On the Usage of Activation Functions

5. Results

6. Conclusions

Appendix: Implementation Details

References

2016

- (He et al., 2016) ⇒ Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. (2016). “Deep Residual Learning for Image Recognition.” In: Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2016).

BibTeX

@inproceedings{2016_IdentityMappingsinDeepResidualN,

author = {Kaiming He and

Xiangyu Zhang and

Shaoqing Ren and

Jian Sun},

editor = {Bastian Leibe and

Jiri Matas and

Nicu Sebe and

Max Welling},

title = {Identity Mappings in Deep Residual Networks},

booktitle = {Proceedings of the 14th European Conference on Computer Vision (ECCV 2016) Part IV},

series = {Lecture Notes in Computer Science},

volume = {9908},

pages = {630--645},

publisher = {Springer},

year = {2016},

month = {October},

address = {Amsterdam, The Netherlands},

url = {https://arxiv.org/pdf/1603.05027},

doi = {10.1007/978-3-319-46493-0_38},

}

| | Author | volume | Date Value | title | type | journal | titleUrl | doi | note | year |
|---|---|---|---|---|---|---|---|---|---|---|
| 2016 IdentityMappingsinDeepResidualN | Kaiming He, Xiangyu Zhang, Shaoqing Ren, Jian Sun | | | Identity Mappings in Deep Residual Networks | | | | | | 2016 |