Pointer-Generator Sequence-to-Sequence Neural Network

A Pointer-Generator Sequence-to-Sequence Neural Network is a Sequence-to-Sequence Neural Network With Attention that is based on a Pointer Network Model and a Word Generation Probability Function.

- AKA: Pointer-Generator Network.

- Context:

- It was initially developed by See et al. (2017) to copy words from the source text via pointing, following the Pointer Network of Vinyals et al. (2015).

- It can also be categorized as a Modular Neural Network.

- It can be trained using a See-Liu-Manning Text Summarization System.

- Example(s):

- Counter-Example(s):

- See: Sequence-to-Sequence Model, Neural Machine Translation, Encoder-Decoder Neural Network, Artificial Neural Network, Natural Language Processing Task, Language Model, Summarization NLP Task.
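The combination of pointing and generating described above can be illustrated with a small sketch. It follows the mixture described in See et al. (2017), where a generation probability weights the fixed-vocabulary distribution and the remaining mass is spread over source words according to the attention weights. The function name `final_distribution`, the toy vocabulary, and the attention values are illustrative assumptions, and the generation probability is passed in directly rather than computed by a trained network.

```python
from collections import defaultdict


def final_distribution(p_gen, vocab_dist, attention, source_tokens):
    """Mix a generation distribution with a copy (pointing) distribution.

    p_gen:         float in [0, 1], probability of generating from the vocabulary.
    vocab_dist:    dict mapping vocabulary word -> probability (sums to 1).
    attention:     list of attention weights over source positions (sums to 1).
    source_tokens: list of source words, aligned with `attention`.
    """
    if len(attention) != len(source_tokens):
        raise ValueError("attention and source_tokens must have the same length")

    mixed = defaultdict(float)
    # Generation part: scale the fixed-vocabulary distribution by p_gen.
    for word, prob in vocab_dist.items():
        mixed[word] += p_gen * prob
    # Copy part: add (1 - p_gen) * attention to whichever word sits at each
    # source position. Out-of-vocabulary source words gain probability here.
    for weight, token in zip(attention, source_tokens):
        mixed[token] += (1.0 - p_gen) * weight
    return dict(mixed)


# Toy example (all values are made up for illustration).
vocab_dist = {"the": 0.5, "team": 0.3, "won": 0.2}
source_tokens = ["zidane", "won", "the", "cup"]  # "zidane" and "cup" are OOV
attention = [0.6, 0.2, 0.1, 0.1]

dist = final_distribution(0.4, vocab_dist, attention, source_tokens)
for word, prob in sorted(dist.items(), key=lambda item: -item[1]):
    print(f"{word}: {prob:.2f}")
```

In this toy setting the out-of-vocabulary word "zidane" receives probability only through the copy term, which is the behavior the pointing mechanism is meant to provide.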

References

2017

- (See et al., 2017) ⇒ Abigail See, Peter J. Liu, and Christopher D. Manning. (2017). “Get To The Point: Summarization with Pointer-Generator Networks.” In: Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers). DOI:10.18653/v1/P17-1099.

- QUOTE: Our hybrid pointer-generator network facilitates copying words from the source text via pointing (Vinyals et al., 2015), which improves accuracy and handling of OOV words, while retaining the ability to generate new words. The network, which can be viewed as a balance between extractive and abstractive approaches, is similar to Gu et al.’s (2016) CopyNet and Miao and Blunsom’s (2016) Forced-Attention Sentence Compression, that were applied to short-text summarization. (...)

2015

- (Vinyals et al., 2015) ⇒ Oriol Vinyals, Meire Fortunato, and Navdeep Jaitly. (2015). “Pointer Networks.” In: Proceedings of the Advances in Neural Information Processing Systems 28: Annual Conference on Neural Information Processing Systems (NIPS 2015).

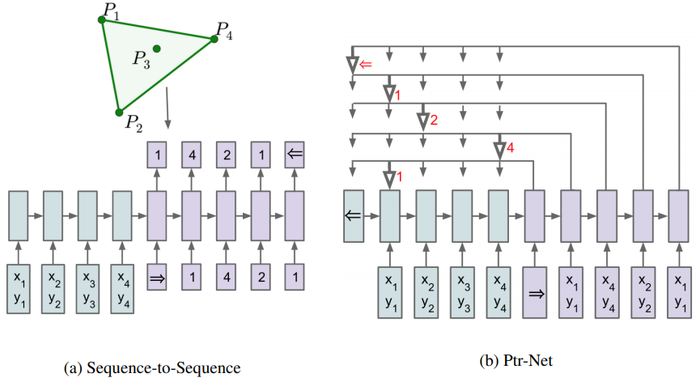

- QUOTE: Nonetheless, these methods still require the size of the output dictionary to be fixed a priori. Because of this constraint we cannot directly apply this framework to combinatorial problems where the size of the output dictionary depends on the length of the input sequence. In this paper, we address this limitation by repurposing the attention mechanism of Bahdanau et al. (2014) to create pointers to input elements. We show that the resulting architecture, which we name Pointer Networks (Ptr-Nets), can be trained to output satisfactory solutions to three combinatorial optimization problems – computing planar convex hulls, Delaunay triangulations and the symmetric planar Travelling Salesman Problem (TSP). The resulting models produce approximate solutions to these problems in a purely data driven fashion (i.e., when we only have examples of inputs and desired outputs). The proposed approach is depicted in Figure 1.
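The idea of using attention as a pointer can be sketched as follows. The example uses additive (Bahdanau-style) scoring, which is the form the quoted passage refers to, and applies a softmax over input positions so that the output distribution has one entry per input element. The helper names, the identity weight matrices `W1` and `W2`, the vector `v`, and all state values are hypothetical toy values chosen for illustration, not trained parameters.

```python
import math


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector (list of floats)."""
    return [sum(m * x for m, x in zip(row, vector)) for row in matrix]


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def pointer_distribution(encoder_states, decoder_state, W1, W2, v):
    """Return a probability distribution over input positions.

    Each score is v . tanh(W1 e_j + W2 d), where e_j is an encoder state
    and d is the current decoder state.
    """
    projected_decoder = matvec(W2, decoder_state)
    scores = []
    for state in encoder_states:
        projected_encoder = matvec(W1, state)
        hidden = [math.tanh(a + b)
                  for a, b in zip(projected_encoder, projected_decoder)]
        scores.append(sum(vi * h for vi, h in zip(v, hidden)))
    return softmax(scores)


# Toy parameters and states (assumed values, for illustration only).
W1 = [[1.0, 0.0], [0.0, 1.0]]
W2 = [[1.0, 0.0], [0.0, 1.0]]
v = [1.0, 1.0]
decoder_state = [0.5, -0.5]

short_input = [[0.1, 0.2], [0.9, 0.4], [-0.3, 0.8]]
long_input = short_input + [[0.0, 0.0], [1.0, 1.0]]

p_short = pointer_distribution(short_input, decoder_state, W1, W2, v)
p_long = pointer_distribution(long_input, decoder_state, W1, W2, v)
print(len(p_short), len(p_long))
```

Because the softmax runs over input positions rather than a fixed vocabulary, the same parameters yield a valid distribution for inputs of any length, which is the property that removes the need for an output dictionary fixed a priori.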
