Single-Layer Perceptron Training System

A Single-Layer Perceptron Training System is a Single-Layer ANN Training System that implements a Single-Layer Perceptron Algorithm to solve a Single-Layer Perceptron Training Task.

- AKA: One-Layer Perceptron Training System.

- Example(s):

- Perceptron Classifier (Raschka, 2015) ⇒

class Perceptron(object)

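The Raschka (2015) example names a `class Perceptron(object)` but does not reproduce its body here. Below is a minimal sketch of such a class. It assumes NumPy, class labels in {-1, +1}, and a threshold folded into a bias weight `w_[0]`. The constructor parameters (`eta`, `n_iter`, `random_state`) and the fitted attributes (`w_`, `errors_`) are illustrative choices, not a quotation of Raschka's listing.

```python
import numpy as np


class Perceptron(object):
    """Single-layer perceptron classifier for class labels in {-1, +1}.

    Illustrative sketch: parameter and attribute names are assumptions.
    """

    def __init__(self, eta=0.1, n_iter=10, random_state=1):
        self.eta = eta                    # learning rate, between 0.0 and 1.0
        self.n_iter = n_iter              # number of passes (epochs) over the training set
        self.random_state = random_state  # seed for the small random initial weights

    def fit(self, X, y):
        rng = np.random.default_rng(self.random_state)
        # Step 1: small random weights; w_[0] is the bias (threshold folded in).
        self.w_ = rng.normal(loc=0.0, scale=0.01, size=1 + X.shape[1])
        self.errors_ = []  # number of misclassified samples in each epoch
        for _ in range(self.n_iter):
            errors = 0
            # Step 2: for each training sample, predict, then update every w_j.
            for xi, target in zip(X, y):
                update = self.eta * (target - self.predict(xi))  # eta * (y - y_hat)
                self.w_[1:] += update * xi
                self.w_[0] += update  # the bias input x_0 is always 1
                errors += int(update != 0.0)
            self.errors_.append(errors)
        return self

    def net_input(self, X):
        """Net input z = w_0 + sum_j w_j * x_j."""
        return np.dot(X, self.w_[1:]) + self.w_[0]

    def predict(self, X):
        """Unit step function: +1 if z >= 0, else -1."""
        return np.where(self.net_input(X) >= 0.0, 1, -1)


# Usage on a linearly separable toy problem (logical AND with -1/+1 labels).
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([-1, -1, -1, 1])
ppn = Perceptron(eta=0.1, n_iter=100).fit(X, y)
print(ppn.errors_[-1])   # misclassifications in the last epoch; 0 once converged
print(ppn.predict(X))    # should match y after convergence
```

The weights are updated after every individual sample, not once per pass, which follows step 2 of Rosenblatt's rule as summarized in the Raschka quote. The per-epoch `errors_` list is a simple convergence check: on linearly separable data it eventually stays at 0.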

- Counter-Example(s):

- See: Perceptron Learning Rule, Neural Network Learning Rate, Artificial Neural Network, Neural Network Layer, Artificial Neuron, Neuron Activation Function, Neural Network Topology.

References

2015

- (Raschka, 2015) ⇒ Raschka, S. (2015). "Chapter 2: Training Machine Learning Algorithms for Classification". In: "Python Machine Learning: Unlock Deeper Insights Into Machine Learning with this Vital Guide to Cutting-edge Predictive Analytics". Community Experience Distilled Series. Packt Publishing Ltd. ISBN: 9781783555130, pp. 17-47.

- QUOTE: The whole idea behind the MCP neuron and Rosenblatt's thresholded perceptron model is to use a reductionist approach to mimic how a single neuron in the brain works: it either fires or it doesn't. Thus, Rosenblatt's initial perceptron rule is fairly simple and can be summarized by the following steps:

- 1. Initialize the weights to 0 or small random numbers.

- 2. For each training sample [math]\displaystyle{ x^{(i)} }[/math], perform the following steps:

  - a. Compute the output value [math]\displaystyle{ \hat{y} }[/math].

  - b. Update the weights.
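Step 1 permits all-zero initial weights, and under the rule as stated that choice has a consequence worth checking. The predicted label depends only on the sign of the net input, so when training starts from zero weights, the weights obtained with learning rate η are exactly η times those obtained with η = 1. In other words, η rescales the weight vector without changing any prediction. The sketch below checks this numerically on an illustrative AND dataset. The function names `train_perceptron` and `predict`, the bias weight `w[0]` paired with a constant input x_0 = 1, and the tie rule (a net input of 0 maps to +1) are assumptions. The learning rates 0.25 and 1.0 are powers of two, so the floating-point arithmetic stays exact.

```python
import numpy as np


def train_perceptron(X, y, eta, n_epochs=10):
    """Rosenblatt's rule with all-zero initial weights (step 1), then
    per-sample predict-and-update passes (step 2). w[0] is a bias weight
    paired with a constant input x_0 = 1 (an assumption of this sketch)."""
    Xb = np.hstack([np.ones((X.shape[0], 1)), X])  # prepend x_0 = 1 to each sample
    w = np.zeros(Xb.shape[1])                      # step 1: weights initialized to 0
    for _ in range(n_epochs):
        for xi, target in zip(Xb, y):
            y_hat = 1 if xi @ w >= 0.0 else -1     # compute the output value y_hat
            w += eta * (target - y_hat) * xi       # update all w_j simultaneously
    return w


def predict(X, w):
    """Unit step on the net input w_0 + sum_j w_j * x_j."""
    return np.where(X @ w[1:] + w[0] >= 0.0, 1, -1)


X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([-1, -1, -1, 1])  # logical AND, labels in {-1, +1}

w_small = train_perceptron(X, y, eta=0.25)
w_large = train_perceptron(X, y, eta=1.0)
print(np.array_equal(w_large, 4 * w_small))                        # True
print(np.array_equal(predict(X, w_small), predict(X, w_large)))    # True
```

This is one reason to prefer small random initial weights when the learning rate is meant to influence the outcome of training.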


- Here, the output value is the class label predicted by the unit step function that we defined earlier, and the simultaneous update of each weight [math]\displaystyle{ w_j }[/math] in the weight vector [math]\displaystyle{ \mathbf{w} }[/math] can be more formally written as:

[math]\displaystyle{ w_j:= w_j+ \Delta w_j }[/math]

The value of [math]\displaystyle{ \Delta w_j }[/math], which is used to update the weight [math]\displaystyle{ w_j }[/math], is calculated by the perceptron learning rule:

[math]\displaystyle{ \Delta w_j=\eta \left( y^{(i)}-\hat{y}^{(i)} \right) x_j^{(i)} }[/math]

Where [math]\displaystyle{ \eta }[/math] is the learning rate (a constant between 0.0 and 1.0), [math]\displaystyle{ y^{(i)} }[/math] is the true class label of the i-th training sample, and [math]\displaystyle{ \hat{y}^{(i)} }[/math] is the predicted class label (...)
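A worked instance of the perceptron learning rule, using illustrative numbers that are not from the source: three weights, η = 0.25, and one sample x. When the prediction is correct, y − ŷ = 0 and no weight changes. When it is wrong, y − ŷ = ±2, so every weight moves by ±2ηx_j at the same time. The helper name `perceptron_update` is hypothetical.

```python
def perceptron_update(w, x, y_true, y_pred, eta):
    """Apply w_j := w_j + eta * (y_true - y_pred) * x_j to every weight at once."""
    return [w_j + eta * (y_true - y_pred) * x_j for w_j, x_j in zip(w, x)]


w = [0.5, -0.25, 0.0]
x = [1.0, 2.0, -0.5]
eta = 0.25

# Correct prediction: y - y_hat = 0, so delta_w_j = 0 for every j.
print(perceptron_update(w, x, y_true=1, y_pred=1, eta=eta))   # [0.5, -0.25, 0.0]

# True label +1 predicted as -1: delta_w_j = 0.25 * 2 * x_j = 0.5 * x_j.
print(perceptron_update(w, x, y_true=1, y_pred=-1, eta=eta))  # [1.0, 0.75, -0.25]

# True label -1 predicted as +1: delta_w_j = 0.25 * (-2) * x_j = -0.5 * x_j.
print(perceptron_update(w, x, y_true=-1, y_pred=1, eta=eta))  # [0.0, -1.25, 0.25]
```

In the second case the net input for this sample rises from 0.0 to 2.625, which equals 2η times the squared length of x (0.5 × 5.25). The update pushes the net input toward the true label's side of the threshold.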

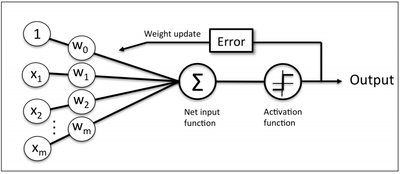

Now, before we jump into the implementation in the next section, let us summarize what we just learned in a simple figure that illustrates the general concept of the perceptron:

The preceding figure illustrates how the perceptron receives the inputs of a sample [math]\displaystyle{ x }[/math] and combines them with the weights [math]\displaystyle{ w }[/math] to compute the net input. The net input is then passed on to the activation function (here: the unit step function), which generates a binary output -1 or +1 — the predicted class label of the sample. During the learning phase, this output is used to calculate the error of the prediction and update the weights.
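The forward pass described in the figure (weighted inputs, net input, unit step, label -1 or +1) can be sketched in plain Python. The unit step function "defined earlier" in the source is not part of this excerpt, so this sketch assumes the common form φ(z) = +1 if z ≥ θ and -1 otherwise. It then shows that folding the threshold into a bias weight w_0 = -θ with a constant input x_0 = 1 gives the same labels. All function names and numbers are hypothetical.

```python
def net_input(x, w):
    """Net input z = sum_j w_j * x_j."""
    return sum(w_j * x_j for w_j, x_j in zip(w, x))


def unit_step(z, theta=0.0):
    """Binary output: +1 if z reaches the threshold theta, else -1."""
    return 1 if z >= theta else -1


def predict_with_threshold(x, w, theta):
    return unit_step(net_input(x, w), theta)


def predict_with_bias(x, w, theta):
    # Fold the threshold into the weights: w_0 = -theta paired with x_0 = 1,
    # so the test z >= theta becomes w_0 * x_0 + z >= 0.
    return unit_step(net_input([1.0] + list(x), [-theta] + list(w)))


w, theta = [0.5, -1.0], 0.25
for x in ([2.0, 0.5], [0.0, 1.0], [0.5, 0.0]):
    # Both forms give the same label for each sample: 1, then -1, then 1.
    print(x, predict_with_threshold(x, w, theta), predict_with_bias(x, w, theta))
```

The third sample sits exactly on the threshold (z = θ = 0.25), so it checks that both forms break the tie the same way.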


1957

- (Rosenblatt, 1957) ⇒ Rosenblatt, F. (1957). "The perceptron, a perceiving and recognizing automaton (Project Para)". Cornell Aeronautical Laboratory.

- PREFACE: The work described in this report was supported as a part of the internal research program of the Cornell Aeronautical Laboratory, Inc. The concepts discussed had their origins in some independent research by the author in the field of physiological psychology, in which the aim has been to formulate a brain analogue useful in analysis. This area of research has been of active interest to the author for five or six years. The perceptron concept is a recent product of this research program; the current effort is aimed at establishing the technical and economic feasibility of the perceptron.

1943

- (McCulloch & Pitts, 1943) ⇒ McCulloch, W. S., & Pitts, W. (1943). "A logical calculus of the ideas immanent in nervous activity". The Bulletin of Mathematical Biophysics, 5(4), 115-133.

- ABSTRACT: Because of the “all-or-none” character of nervous activity, neural events and the relations among them can be treated by means of propositional logic. It is found that the behavior of every net can be described in these terms, with the addition of more complicated logical means for nets containing circles; and that for any logical expression satisfying certain conditions, one can find a net behaving in the fashion it describes. It is shown that many particular choices among possible neurophysiological assumptions are equivalent, in the sense that for every net behaving under one assumption, there exists another net which behaves under the other and gives the same results, although perhaps not in the same time. Various applications of the calculus are discussed.
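The "all-or-none" unit in the abstract can be illustrated as a binary threshold unit. It outputs 1 if the weighted sum of its 0/1 inputs reaches a fixed threshold, and 0 otherwise. The sketch below is a simplification that uses fixed weights of +1 or -1 and integer thresholds. It does not model the paper's own treatment of inhibition and synaptic delay, and the gate names are illustrative. Single units compute AND, OR, and NOT, and a two-layer net of such units computes XOR, which no single threshold unit can represent.

```python
from itertools import product


def mcp_unit(inputs, weights, threshold):
    """All-or-none unit: fires (1) iff the weighted sum of binary inputs reaches threshold."""
    return int(sum(w * x for w, x in zip(weights, inputs)) >= threshold)


def and_gate(a, b):
    return mcp_unit((a, b), (1, 1), threshold=2)


def or_gate(a, b):
    return mcp_unit((a, b), (1, 1), threshold=1)


def not_gate(a):
    return mcp_unit((a,), (-1,), threshold=0)


def xor_net(a, b):
    # XOR is not linearly separable, so it needs a second layer of units.
    return and_gate(or_gate(a, b), not_gate(and_gate(a, b)))


for a, b in product((0, 1), repeat=2):
    print(a, b, and_gate(a, b), or_gate(a, b), xor_net(a, b))
```

Unlike the perceptron, these units have hand-set weights and no learning rule. Rosenblatt's contribution was to make the weights adjustable from data.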