2017 FastQAASimpleandEfficientNeural

- (Weissenborn et al., 2017) ⇒ Dirk Weissenborn, Georg Wiese, and Laura Seiffe. (2017). “FastQA: A Simple and Efficient Neural Architecture for Question Answering.” In: CoRR, abs/1703.04816v1.

Subject Headings: FastQA; FastQAExt; Question Answering Dataset; Reading Comprehension Dataset

Notes

Cited By

- Google Scholar: ~ 61 Citations, Retrieved:2020-12-27.

Quotes

Abstract

Recent development of large-scale question answering (QA) datasets triggered a substantial amount of research into end-to-end neural architectures for QA. Increasingly complex systems have been conceived without comparison to a simpler neural baseline system that would justify their complexity. In this work, we propose a simple heuristic that guided the development of FastQA, an efficient end-to-end neural model for question answering that is very competitive with existing models. We further demonstrate that an extended version (FastQAExt) achieves state-of-the-art results on recent benchmark datasets, namely SQuAD, NewsQA and MsMARCO, outperforming most existing models. However, we show that increasing the complexity of FastQA to FastQAExt does not yield any systematic improvements. We argue that the same holds true for most existing systems that are similar to FastQAExt. A manual analysis reveals that our proposed heuristic explains most predictions of our model, which indicates that modeling a simple heuristic is enough to achieve strong performance on extractive QA datasets. The overall strong performance of FastQA puts results of existing, more complex models into perspective.
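The abstract does not spell out the heuristic. In the full paper, which this page only excerpts, its context-matching part (the answer should be surrounded by question words) is supplied to the model through two word-in-question features per context token: a binary one marking context words that occur in the question, and a weighted one based on soft question/context similarity. The sketch below follows that description only roughly and is illustrative: the function names, the pure-Python list representation, exact string matching for the binary feature, and the fixed vector `v` (standing in for a trainable weight vector) are all assumptions.

```python
import math
from typing import List, Sequence


def wiq_binary(question: Sequence[str], context: Sequence[str]) -> List[float]:
    """Binary word-in-question feature (sketch).

    Returns 1.0 for each context token x_j that also occurs in the question Q,
    else 0.0. Tokens are compared as-is (assumption: no lowercasing or lemmatizing).
    """
    q_set = set(question)
    return [1.0 if x in q_set else 0.0 for x in context]


def wiq_weighted(
    q_vecs: Sequence[Sequence[float]],
    x_vecs: Sequence[Sequence[float]],
    v: Sequence[float],
) -> List[float]:
    """Weighted word-in-question feature (sketch, hypothetical helper).

    For each question word i, a similarity sim[i][j] = sum_k v[k] * x_j[k] * q_i[k]
    is computed against every context token j, normalized with a softmax over j,
    and the normalized scores are summed over all question words.
    """
    out = [0.0] * len(x_vecs)
    for q in q_vecs:
        sims = [sum(vk * xk * qk for vk, xk, qk in zip(v, x, q)) for x in x_vecs]
        m = max(sims)  # subtract the max for a numerically stable softmax
        exps = [math.exp(s - m) for s in sims]
        z = sum(exps)
        for j, e in enumerate(exps):
            out[j] += e / z
    return out
```

For instance, `wiq_binary(["who", "wrote", "hamlet"], ["shakespeare", "wrote", "hamlet", "."])` marks only "wrote" and "hamlet". Because each question word contributes one softmax distribution, the weighted feature always sums to the number of question words $L_Q$.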

1. Introduction

2. FastQA

(...)

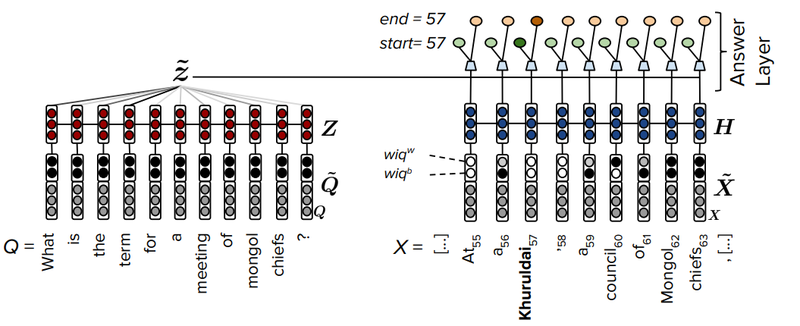

On a high level, the FastQA model consists of three basic layers, namely the embedding, encoding and answer layers, which are described in detail in the following. We denote the hidden dimensionality of the model by $n$, the question tokens by $Q = \left(q_1, \cdots, q_{L_Q} \right)$, and the context tokens by $X = \left(x_1, \cdots, x_{L_X} \right)$. An illustration of the basic architecture is provided in Figure 1.
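With the notation above, the answer layer ultimately selects a span $x_s, \dots, x_e$ of the context $X$. The following is a minimal sketch of span decoding, assuming per-token start and end log-scores over the $L_X$ context tokens are already available. It treats start and end scores independently, which is a common simplification and not FastQA's exact answer layer; the name `best_span` and the `max_len` cap are hypothetical.

```python
from typing import List, Tuple


def best_span(
    start_scores: List[float], end_scores: List[float], max_len: int = 15
) -> Tuple[int, int, float]:
    """Return (s, e, score) maximizing start_scores[s] + end_scores[e].

    Constraints: s <= e < s + max_len. Scores are assumed to be
    log-probabilities over the L_X context tokens, so adding them
    corresponds to multiplying the start and end probabilities.
    """
    if not start_scores or len(start_scores) != len(end_scores):
        raise ValueError("start and end scores must be non-empty and of equal length L_X")
    best = (0, 0, float("-inf"))
    for s, s_score in enumerate(start_scores):
        # Only consider end positions at or after the start, within the length cap.
        for e in range(s, min(s + max_len, len(end_scores))):
            score = s_score + end_scores[e]
            if score > best[2]:
                best = (s, e, score)
    return best
```

For example, `best_span([0.0, -1.0, -5.0], [-5.0, -2.0, 0.0])` selects the span from token 0 to token 2. The length cap keeps decoding at $O(L_X \cdot \text{max\_len})$ rather than quadratic in $L_X$.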


(...)

3. Comparison to Prior Work

4. FastQA Extended

5. Experimental Setup

6. Results

7. Related Work

8. Conclusion

Acknowledgments

References

BibTeX

@article{2017_FastQAASimpleandEfficientNeural,
  author        = {Dirk Weissenborn and Georg Wiese and Laura Seiffe},
  title         = {FastQA: A Simple and Efficient Neural Architecture for Question Answering},
  journal       = {CoRR},
  volume        = {abs/1703.04816v1},
  year          = {2017},
  url           = {http://arxiv.org/abs/1703.04816v1},
  archivePrefix = {arXiv},
  eprint        = {1703.04816v1},
}

| | Author | volume | Date Value | title | type | journal | titleUrl | doi | note | year |
|---|---|---|---|---|---|---|---|---|---|---|
| 2017 FastQAASimpleandEfficientNeural | Dirk Weissenborn, Georg Wiese, Laura Seiffe | | | FastQA: A Simple and Efficient Neural Architecture for Question Answering | | | | | | 2017 |