2017 UnsupervisedMachineTranslationU

- (Lample et al., 2017) ⇒ Guillaume Lample, Alexis Conneau, Ludovic Denoyer, and Marc'Aurelio Ranzato. (2017). “Unsupervised Machine Translation Using Monolingual Corpora Only.” In: arXiv preprint arXiv:1711.00043.

Subject Headings:

Notes

- Version(s) and URL(s):

Cited By

- Google Scholar: ~ 446 Citations Retrieved:2020-07-24.

- Semantic Scholar: ~ 446 Citations Retrieved:2020-07-24.

- MS Academic: ~ 463 Citations Retrieved:2020-07-24.

Quotes

Abstract

Machine translation has recently achieved impressive performance thanks to recent advances in deep learning and the availability of large-scale parallel corpora. There have been numerous attempts to extend these successes to low-resource language pairs, yet requiring tens of thousands of parallel sentences. In this work, we take this research direction to the extreme and investigate whether it is possible to learn to translate even without any parallel data. We propose a model that takes sentences from monolingual corpora in two different languages and maps them into the same latent space. By learning to reconstruct in both languages from this shared feature space, the model effectively learns to translate without using any labeled data. We demonstrate our model on two widely used datasets and two language pairs, reporting BLEU scores of 32.8 and 15.1 on the Multi30k and WMT English-French datasets, without using even a single parallel sentence at training time.

1 Introduction

Thanks to recent advances in deep learning (Sutskever et al., 2014; Bahdanau et al., 2015) and the availability of large-scale parallel corpora, machine translation has now reached impressive performance on several language pairs (Wu et al., 2016). However, these models work very well only when provided with massive amounts of parallel data, in the order of millions of parallel sentences. Unfortunately, parallel corpora are costly to build as they require specialized expertise, and are often nonexistent for low-resource languages. Conversely, monolingual data is much easier to find, and many languages with limited parallel data still possess significant amounts of monolingual data.

There have been several attempts at leveraging monolingual data to improve the quality of machine translation systems in a semi-supervised setting (Munteanu et al., 2004; Irvine, 2013; Irvine & Callison-Burch, 2015; Zheng et al., 2017). Most notably, Sennrich et al. (2015a) proposed a very effective data-augmentation scheme, dubbed “back-translation”, whereby an auxiliary translation system from the target language to the source language is first trained on the available parallel data, and then used to produce translations from a large monolingual corpus on the target side. The pairs composed of these translations with their corresponding ground truth targets are then used as additional training data for the original translation system.

Another way to leverage monolingual data on the target side is to augment the decoder with a language model (Gulcehre et al., 2015). And finally, Cheng et al. (2016); He et al. (2016) have proposed to add an auxiliary auto-encoding task on monolingual data, which ensures that a translated sentence can be translated back to the original one. All these works still rely on several tens of thousands parallel sentences, however.

Previous work on zero-resource machine translation has also relied on labeled information, not from the language pair of interest but from other related language pairs (Firat et al., 2016; Johnson et al., 2016; Chen et al., 2017) or from other modalities (Nakayama & Nishida, 2017; Lee et al., 2017). The only exception is the work by Ravi & Knight (2011); Pourdamghani & Knight (2017), where the machine translation problem is reduced to a deciphering problem. Unfortunately, their method is limited to rather short sentences and it has only been demonstrated on a very simplistic setting comprising the most frequent short sentences, or very closely related languages.

In this paper, we investigate whether it is possible to train a general machine translation system without any form of supervision whatsoever. The only assumption we make is that there exists a monolingual corpus on each language. This set up is interesting for a twofold reason. First, this is applicable whenever we encounter a new language pair for which we have no annotation. Second, it provides a strong lower bound performance on what any good semi-supervised approach is expected to yield.

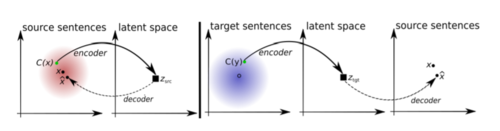

The key idea is to build a common latent space between the two languages (or domains) and to learn to translate by reconstructing in both domains according to two principles: (i) the model has to be able to reconstruct a sentence in a given language from a noisy version of it, as in standard denoising auto-encoders (Vincent et al., 2008). (ii) The model also learns to reconstruct any source sentence given a noisy translation of the same sentence in the target domain, and vice versa. For (ii), the translated sentence is obtained by using a back-translation procedure (Sennrich et al., 2015a), i.e. by using the learned model to translate the source sentence to the target domain. In addition to these reconstruction objectives, we constrain the source and target sentence latent representations to have the same distribution using an adversarial regularization term, whereby the model tries to fool a discriminator which is simultaneously trained to identify the language of a given latent sentence representation (Ganin et al., 2016). This procedure is then iteratively repeated, giving rise to translation models of increasing quality. To keep our approach fully unsupervised, we initialize our algorithm by using a naïve unsupervised translation model based on a word by word translation of sentences with a bilingual lexicon derived from the same monolingual data (Conneau et al., 2017). As a result, and by only using monolingual data, we can encode sentences of both languages into the same feature space, and from there, we can also decode/translate in any of these languages; see Figure 1 for an illustration.

While not being able to compete with supervised approaches using lots of parallel resources, we show in section 4 that our model is able to achieve remarkable performance. For instance, on the WMT dataset we can achieve the same translation quality as a similar machine translation system trained with full supervision on 100,000 sentence pairs. On the Multi30K-Task1 dataset we achieve a BLEU above 22 on all the language pairs, with up to 32.76 on English-French.

Next, in section 2, we describe the model and the training algorithm. We then present experimental results in section 4. Finally, we further discuss related work in section 5 and summarize our findings in section 6.

2 UNSUPERVISED NEURAL MACHINE TRANSLATION

In this section, we first describe the architecture of the translation system, and then we explain how we train it.

2.1 NEURAL MACHINE TRANSLATION MODEL

The translation model we propose is composed of an encoder and a decoder, respectively responsible for encoding source and target sentences to a latent space, and to decode from that latent space to the source or the target domain. We use a single encoder and a single decoder for both domains (Johnson et al., 2016). The only difference when applying these modules to different languages is the choice of lookup tables.

Let us denote by $\mathcal{W}_{S}$ the set of words in the source domain associated with the (learned) word embeddings $\mathcal{Z}^{S}=\left(z_{1}^{s}, \ldots, z_{\left|\mathcal{W}_{S}\right|}^{s}\right)$, and by $\mathcal{W}_{T}$ the set of words in the target domain associated with the embeddings $\mathcal{Z}^{T}=\left(z_{1}^{t}, \ldots, z_{\left|\mathcal{W}_{T}\right|}^{t}\right)$, $\mathcal{Z}$ being the set of all the embeddings. Given an input sentence of $m$ words $\boldsymbol{x}=\left(x_{1}, x_{2}, \ldots, x_{m}\right)$ in a particular language $\ell$, $\ell \in\{\text{src}, \text{tgt}\}$, an encoder $e_{\theta_{\text{enc}}, \mathcal{Z}}(\boldsymbol{x}, \ell)$ computes a sequence of $m$ hidden states $\boldsymbol{z}=\left(z_{1}, z_{2}, \ldots, z_{m}\right)$ by using the corresponding word embeddings, i.e. $\mathcal{Z}^{S}$ if $\ell=\text{src}$ and $\mathcal{Z}^{T}$ if $\ell=\text{tgt}$; the other parameters $\theta_{\text{enc}}$ are instead shared between the source and target languages. For the sake of simplicity, the encoder will be denoted as $e(\boldsymbol{x}, \ell)$ in the following. These hidden states are vectors in $\mathbb{R}^{n}$, $n$ being the dimension of the latent space.

A decoder $d_{\theta_{\text{dec}}, \mathcal{Z}}(\boldsymbol{z}, \ell)$ takes as input $\boldsymbol{z}$ and a language $\ell$, and generates an output sequence $\boldsymbol{y}=\left(y_{1}, y_{2}, \ldots, y_{k}\right)$, where each word $y_{i}$ is in the corresponding vocabulary $\mathcal{W}^{\ell}$. This decoder makes use of the corresponding word embeddings, and it is otherwise parameterized by a vector $\theta_{\text{dec}}$ that does not depend on the output language. It will thus be denoted $d(\boldsymbol{z}, \ell)$ in the following. To generate an output word $y_{i}$, the decoder iteratively takes as input the previously generated word $y_{i-1}$ ($y_{0}$ being a start symbol which is language dependent), updates its internal state, and returns the word that has the highest probability of being the next one. The process is repeated until the decoder generates a stop symbol indicating the end of the sequence.
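The decoding loop described above can be sketched in a few lines. This is only an illustration under stated assumptions: `next_word_probs` is a hypothetical stand-in for the decoder (it maps the words generated so far to a next-word distribution), and the symbols `<fr>` and `</s>` as well as the `max_len` cap are invented for the example rather than taken from the paper.

```python
from typing import Callable, Dict, List


def greedy_decode(
    next_word_probs: Callable[[List[str]], Dict[str, float]],
    start_symbol: str,
    stop_symbol: str = "</s>",
    max_len: int = 50,
) -> List[str]:
    """Generate one word at a time, always keeping the most probable next word."""
    prefix = [start_symbol]  # y_0: language-dependent start symbol
    while len(prefix) <= max_len:  # safety cap (an assumption, not from the paper)
        probs = next_word_probs(prefix)
        word = max(probs, key=probs.get)  # greedy choice
        if word == stop_symbol:
            break
        prefix.append(word)
    return prefix[1:]  # drop the start symbol


def toy_model(prefix: List[str]) -> Dict[str, float]:
    """Hypothetical decoder: the distribution depends only on the last word."""
    table = {
        "<fr>": {"le": 0.7, "chat": 0.2, "</s>": 0.1},
        "le": {"chat": 0.8, "le": 0.1, "</s>": 0.1},
        "chat": {"</s>": 0.9, "le": 0.1},
    }
    return table[prefix[-1]]


print(greedy_decode(toy_model, "<fr>"))  # ['le', 'chat']
```

Changing the start symbol is what would select the output language in this sketch, mirroring the language-dependent $y_{0}$ in the text.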

In this article, we use a sequence-to-sequence model with attention (Bahdanau et al., 2015), without input-feeding. The encoder is a bidirectional-LSTM which returns a sequence of hidden states $\boldsymbol{z}=\left(z_{1}, z_{2}, \ldots, z_{m}\right) .$ At each step, the decoder, which is also an LSTM, takes as input the previous hidden state, the current word and a context vector given by a weighted sum over the encoder states. In all the experiments we consider, both encoder and decoder have 3 layers. The LSTM layers are shared between the source and target encoder, as well as between the source and target decoder. We also share the attention weights between the source and target decoder. The embedding and LSTM hidden state dimensions are all set to $300 .$ Sentences are generated using greedy decoding.

2.2 OVERVIEW OF THE METHOD

We consider a dataset of sentences in the source domain, denoted by $\mathcal{D}_{src}$, and another dataset in the target domain, denoted by $\mathcal{D}_{tgt}$. These datasets do not correspond to each other, in general. We train the encoder and decoder by reconstructing a sentence in a particular domain, given a noisy version of the same sentence in the same or in the other domain.

At a high level, the model starts with an unsupervised naïve translation model obtained by making word-by-word translation of sentences using a parallel dictionary learned in an unsupervised way (Conneau et al., 2017). Then, at each iteration, the encoder and decoder are trained by minimizing an objective function that measures their ability to both reconstruct and translate from a noisy version of an input training sentence. This noisy input is obtained by dropping and swapping words in the case of the auto-encoding task, while it is the result of a translation with the model at the previous iteration in the case of the translation task. In order to promote alignment of the latent distribution of sentences in the source and the target domains, our approach also simultaneously learns a discriminator in an adversarial setting. The newly learned encoder/decoder are then used at the next iteration to generate new translations, until convergence of the algorithm. At test time and despite the lack of parallel data at training time, the encoder and decoder can be composed into a standard machine translation system.

2.3 DENOISING AUTO-ENCODING

Training an autoencoder of sentences is a trivial task, if the sequence-to-sequence model is provided with an attention mechanism like in our work. Without any constraint, the auto-encoder very quickly learns to merely copy every input word one by one. Such a model would also perfectly copy sequences of random words, suggesting that the model does not learn any useful structure in the data. To address this issue, we adopt the same strategy as Denoising Auto-encoders (DAE) (Vincent et al., 2008), and add noise to the input sentences (see Figure 1-left), similarly to Hill et al. (2016). Considering a domain $\ell=\text{src}$ or $\ell=\text{tgt}$, and a stochastic noise model denoted by $C$ which operates on sentences, we define the following objective function:

$$ \mathcal{L}_{\text {auto}}\left(\theta_{\text {enc }}, \theta_{\text {dec }}, \mathcal{Z}, \ell\right)=\mathbb{E}_{x \sim \mathcal{D}_{\ell}, \hat{x} \sim d(e(C(x), \ell), \ell)}[\Delta(\hat{x}, x)] $$

where $\hat{x} \sim d(e(C(x), \ell), \ell)$ means that $\hat{x}$ is a reconstruction of the corrupted version of $x,$ with $x$ sampled from the monolingual dataset $\mathcal{D}_{\ell} .$ In this equation, $\Delta$ is a measure of discrepancy between the two sequences, the sum of token-level cross-entropy losses in our case.
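As a small worked example of the discrepancy $\Delta$, the sketch below sums token-level cross-entropy terms for one sentence. It assumes the decoder's per-step distributions are already available as dictionaries, one per position of the reference sentence; the function name and the data layout are illustrative choices, not part of the paper.

```python
import math
from typing import Dict, List


def sequence_cross_entropy(step_probs: List[Dict[str, float]], target: List[str]) -> float:
    """Sum over positions of -log p(target word at that position)."""
    return -sum(math.log(step_probs[t][word]) for t, word in enumerate(target))


# Two decoding steps over a two-word vocabulary (made-up numbers).
probs = [{"a": 0.5, "b": 0.5}, {"a": 0.25, "b": 0.75}]
loss = sequence_cross_entropy(probs, ["a", "a"])  # -(ln 0.5 + ln 0.25) = ln 8
```

In the denoising objective, `target` would be the clean sentence $x$ while the distributions come from decoding the corrupted input $C(x)$.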

Noise model. $C(x)$ is a randomly sampled noisy version of sentence $x$. In particular, we add two different types of noise to the input sentence. First, we drop every word in the input sentence with a probability $p_{wd}$. Second, we slightly shuffle the input sentence. To do so, we apply a random permutation $\sigma$ to the input sentence, verifying the condition $\forall i \in\{1, n\},|\sigma(i)-i| \leq k$ where $n$ is the length of the input sentence, and $k$ is a tunable parameter.

To generate a random permutation verifying the above condition for a sentence of size $n,$ we generate a random vector $q$ of size $n,$ where $q_{i}=i+U(0, \alpha),$ and $U$ is a draw from the uniform distribution in the specified range. Then, we define $\sigma$ to be the permutation that sorts the array $q .$ In particular, $\alpha<1$ will return the identity, $\alpha=+\infty$ can return any permutation, and $\alpha=k+1$ will return permutations $\sigma$ verifying $\forall i \in\{1, n\},|\sigma(i)-i| \leq k$. Although biased, this method generates permutations similar to the noise observed with word-by-word translation.
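The two noise operations can be sketched as follows. The shuffle follows the recipe in the text ($q_{i}=i+U(0, \alpha)$ with $\alpha=k+1$, then sort by $q$). The function names, the seeded `random.Random` generator, and the fallback that keeps one word when dropout would delete the whole sentence are assumptions added for this illustration.

```python
import random
from typing import List, Optional


def word_dropout(words: List[str], p_wd: float, rng: random.Random) -> List[str]:
    """Drop each word independently with probability p_wd."""
    if not words:
        return []
    kept = [w for w in words if rng.random() >= p_wd]
    # Assumption (not from the paper): never return an empty sentence.
    return kept if kept else [rng.choice(words)]


def local_shuffle(words: List[str], k: int, rng: random.Random) -> List[str]:
    """Permute words so that no word moves more than k positions."""
    alpha = k + 1
    q = [i + rng.uniform(0, alpha) for i in range(len(words))]
    order = sorted(range(len(words)), key=lambda i: q[i])  # permutation sorting q
    return [words[i] for i in order]


def corrupt(words: List[str], p_wd: float = 0.1, k: int = 3,
            seed: Optional[int] = None) -> List[str]:
    """Sample C(x): word dropout followed by a local shuffle."""
    rng = random.Random(seed)
    return local_shuffle(word_dropout(words, p_wd, rng), k, rng)


noisy = corrupt("the cat sat on the mat".split(), seed=0)
```

Because sorting is stable and each $q_{i}$ lies in $[i, i+k+1]$, a word can be overtaken by at most $k$ later words and can overtake at most $k$ earlier ones, which is why the displacement bound holds.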

In our experiments, both the word dropout and the input shuffling strategies turned out to have a critical impact on the results, see also section 4.5, and using both strategies at the same time gave us the best performance. In practice, we found $p_{wd}=0.1$ and $k=3$ to be good parameters.

2.4 CROSS DOMAIN TRAINING

The second objective of our approach is to constrain the model to be able to map an input sentence from the source/target domain $\ell_{1}$ to the target/source domain $\ell_{2}$, which is what we are ultimately interested in at test time. The principle here is to sample a sentence $x \in \mathcal{D}_{\ell_{1}}$, and to generate a corrupted translation of this sentence in $\ell_{2}$. This corrupted version is generated by applying the current translation model denoted $M$ to $x$ such that $y=M(x)$. Then a corrupted version $C(y)$ is sampled (see Figure 1-right). The objective is thus to learn the encoder and the decoder such that they can reconstruct $x$ from $C(y)$. The cross-domain loss can be written as:

$$ \mathcal{L}_{c d}\left(\theta_{\text {enc }}, \theta_{\text {dec }}, \mathcal{Z}, \ell_{1}, \ell_{2}\right)=\mathbb{E}_{x \sim \mathcal{D}_{\ell_{1}}, \hat{x} \sim d\left(e\left(C(M(x)), \ell_{2}\right), \ell_{1}\right)}[\Delta(\hat{x}, x)] $$

where $\Delta$ is again the sum of token-level cross-entropy losses.
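To make the data flow of the cross-domain objective concrete, the sketch below builds one training pair: the model input is $C(M(x))$ in $\ell_{2}$ and the reconstruction target is the original $x$ in $\ell_{1}$. Both `translate` and `corrupt` are passed in as plain callables; the toy dictionary translator and the deterministic "drop the first word" corruption are placeholders chosen for the example, not the paper's components.

```python
from typing import Callable, List, Tuple

Sentence = List[str]


def cross_domain_pair(
    x: Sentence,
    translate: Callable[[Sentence], Sentence],
    corrupt: Callable[[Sentence], Sentence],
) -> Tuple[Sentence, Sentence]:
    """Return (encoder input in l2, reconstruction target in l1)."""
    y = translate(x)       # y = M(x), produced by the current translation model
    return corrupt(y), x   # train d(e(C(y), l2), l1) to output x


# Hypothetical stand-ins for M and C.
toy_dictionary = {"the": "le", "cat": "chat"}
toy_translate = lambda s: [toy_dictionary.get(w, w) for w in s]
toy_corrupt = lambda s: s[1:]

model_input, target = cross_domain_pair(["the", "cat"], toy_translate, toy_corrupt)
# model_input is ['chat'], target is ['the', 'cat']
```

Note that the target side is always a clean, human-written sentence; only the input side is machine-generated and noisy.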

2.5 ADVERSARIAL TRAINING

Intuitively, the decoder of a neural machine translation system works well only when its input is produced by the encoder it was trained with, or at the very least, when that input comes from a distribution very close to the one induced by its encoder. Therefore, we would like our encoder to output features in the same space regardless of the actual language of the input sentence. If such condition is satisfied, our decoder may be able to decode in a certain language regardless of the language of the encoder input sentence.

Note however that the decoder could still produce a bad translation while yielding a valid sentence in the target domain, as constraining the encoder to map two languages in the same feature space does not imply a strict correspondence between sentences. Fortunately, the previously introduced loss for cross-domain training in equation 2 mitigates this concern. Also, recent work on bilingual lexical induction has shown that such a constraint is very effective at the word level, suggesting that it may also work at the sentence level, as long as the two latent representations exhibit strong structure in feature space.

In order to add such a constraint, we train a neural network, which we will refer to as the discriminator, to classify between the encoding of source sentences and the encoding of target sentences (Ganin et al., 2016). The discriminator operates on the output of the encoder, which is a sequence of latent vectors $\left(z_{1}, \ldots, z_{m}\right)$, with $z_{i} \in \mathbb{R}^{n}$, and produces a binary prediction about the language of the encoder input sentence: $p_{D}\left(\ell \mid z_{1}, \ldots, z_{m}\right) \propto \prod_{j=1}^{m} p_{D}\left(\ell \mid z_{j}\right)$, with $p_{D}: \mathbb{R}^{n} \rightarrow[0, 1]$, where 0 corresponds to the source domain, and 1 to the target domain.

The discriminator is trained to predict the language by minimizing the following cross-entropy loss: $\mathcal{L}_{\mathcal{D}}\left(\theta_{D} \mid \theta_{\text{enc}}, \mathcal{Z}\right)=-\mathbb{E}_{\left(x_{i}, \ell_{i}\right)}\left[\log p_{D}\left(\ell_{i} \mid e\left(x_{i}, \ell_{i}\right)\right)\right]$, where $\left(x_{i}, \ell_{i}\right)$ corresponds to sentence and language id pairs uniformly sampled from the two monolingual datasets, $\theta_{D}$ are the parameters of the discriminator, $\theta_{\text{enc}}$ are the parameters of the encoder, and $\mathcal{Z}$ are the encoder word embeddings.

The encoder is trained instead to fool the discriminator:

$$ \mathcal{L}_{a d v}\left(\theta_{\text {enc }}, \mathcal{Z} \mid \theta_{D}\right)=-\mathbb{E}_{\left(x_{i}, \ell_{i}\right)}\left[\log p_{D}\left(\ell_{j} \mid e\left(x_{i}, \ell_{i}\right)\right)\right] $$

with $\ell_{j}=\ell_{1}$ if $\ell_{i}=\ell_{2},$ and vice versa.
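The relation between the discriminator loss and the adversarial loss can be illustrated numerically. The sketch assumes the discriminator has already produced, for each latent vector $z_{j}$, a probability that the sentence is in the target language (strictly between 0 and 1); the function names are illustrative. The sentence-level probability normalises the two per-language products, and the encoder's loss is simply the discriminator's loss with the language label flipped.

```python
import math
from typing import List


def sentence_target_prob(p_tgt_per_state: List[float]) -> float:
    """p_D(tgt | z_1..z_m), proportional to the product of per-state probabilities."""
    log_tgt = sum(math.log(p) for p in p_tgt_per_state)
    log_src = sum(math.log(1.0 - p) for p in p_tgt_per_state)
    # Normalise so that p(src) + p(tgt) = 1.
    return 1.0 / (1.0 + math.exp(log_src - log_tgt))


def discriminator_loss(p_tgt_per_state: List[float], is_target: bool) -> float:
    """Cross-entropy of the true language label."""
    p_tgt = sentence_target_prob(p_tgt_per_state)
    return -math.log(p_tgt if is_target else 1.0 - p_tgt)


def adversarial_loss(p_tgt_per_state: List[float], is_target: bool) -> float:
    """Encoder loss: cross-entropy of the *wrong* language label."""
    return discriminator_loss(p_tgt_per_state, not is_target)


# A target sentence that the discriminator identifies confidently (made-up values).
states = [0.9, 0.8]
d_loss = discriminator_loss(states, is_target=True)
e_loss = adversarial_loss(states, is_target=True)
```

When the discriminator is confident and correct, `d_loss` is small and `e_loss` is large, which pushes the encoder toward representations the discriminator cannot tell apart.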

Final objective function. The final objective function at one iteration of our learning algorithm is thus:

$$ \begin{aligned} \mathcal{L}\left(\theta_{\text{enc}}, \theta_{\text{dec}}, \mathcal{Z}\right)=& \lambda_{\text{auto}}\left[\mathcal{L}_{\text{auto}}\left(\theta_{\text{enc}}, \theta_{\text{dec}}, \mathcal{Z}, \text{src}\right)+\mathcal{L}_{\text{auto}}\left(\theta_{\text{enc}}, \theta_{\text{dec}}, \mathcal{Z}, \text{tgt}\right)\right]+\\ & \lambda_{cd}\left[\mathcal{L}_{cd}\left(\theta_{\text{enc}}, \theta_{\text{dec}}, \mathcal{Z}, \text{src}, \text{tgt}\right)+\mathcal{L}_{cd}\left(\theta_{\text{enc}}, \theta_{\text{dec}}, \mathcal{Z}, \text{tgt}, \text{src}\right)\right]+\\ & \lambda_{adv} \mathcal{L}_{adv}\left(\theta_{\text{enc}}, \mathcal{Z} \mid \theta_{D}\right) \end{aligned} $$

where $\lambda_{\text{auto}}$, $\lambda_{cd}$, and $\lambda_{adv}$ are hyper-parameters weighting the importance of the auto-encoding, cross-domain and adversarial losses. In parallel, the discriminator loss $\mathcal{L}_{\mathcal{D}}$ is minimized to update the discriminator.

3 TRAINING

In this section we describe the overall training algorithm and the unsupervised criterion we used to select hyper-parameters.

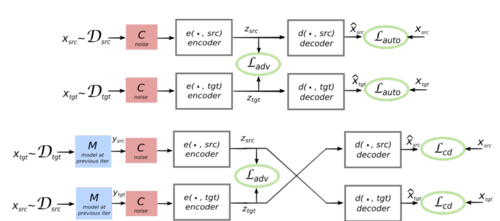

3.1 ITERATIVE TRAINING

The final learning algorithm is described in Algorithm 1 and the general architecture of the model is shown in Figure 2. As explained previously, our model relies on an iterative algorithm which starts from an initial translation model $M^{(1)}$ (line 3). This is used to translate the available monolingual data, as needed by the cross-domain loss function of Equation 2. At each iteration, a new encoder and decoder are trained by minimizing the loss of Equation 4 – line 7 of the algorithm. Then, a new translation model $M^{(t+1)}$ is created by composing the resulting encoder and decoder, and the process repeats.

To jump start the process, $M^{(1)}$ simply makes a word-by-word translation of each sentence using a parallel dictionary learned using the unsupervised method proposed by Conneau et al. (2017), which only leverages monolingual data.

The intuition behind our algorithm is that as long as the initial translation model $M^{(1)}$ retains at least some information of the input sentence, the encoder will map such translation into a representation in feature space that also corresponds to a cleaner version of the input, since the encoder is trained to denoise. At the same time, the decoder is trained to predict noiseless outputs, conditioned on noisy features. Putting these two pieces together will produce less noisy translations, which will enable better back-translations at the next iteration, and so on and so forth.
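The iterative procedure described in this section can be outlined as below. This is a structural sketch only: `train_step` is a hypothetical callback standing in for "train a new encoder and decoder by minimizing the final objective and compose them into new translators", and copying out-of-dictionary words unchanged in the word-by-word model is an assumption made for the example.

```python
from typing import Callable, Dict, List, Tuple

Sentence = List[str]
Translator = Callable[[Sentence], Sentence]
Pairs = List[Tuple[Sentence, Sentence]]


def word_by_word(dictionary: Dict[str, str]) -> Translator:
    """Build M^(1): replace each word by its dictionary entry."""
    # Assumption: words missing from the dictionary are copied unchanged.
    return lambda sentence: [dictionary.get(w, w) for w in sentence]


def iterative_training(
    d_src: List[Sentence],
    d_tgt: List[Sentence],
    src2tgt_dict: Dict[str, str],
    tgt2src_dict: Dict[str, str],
    train_step: Callable[[List[Sentence], List[Sentence], Pairs, Pairs],
                         Tuple[Translator, Translator]],
    n_iters: int,
) -> Tuple[Translator, Translator]:
    """Alternate between back-translating with M^(t) and training M^(t+1)."""
    m_s2t = word_by_word(src2tgt_dict)
    m_t2s = word_by_word(tgt2src_dict)
    for _ in range(n_iters):
        # Translate the monolingual data with the current models.
        pairs_for_src = [(m_s2t(x), x) for x in d_src]  # (input in tgt, target in src)
        pairs_for_tgt = [(m_t2s(x), x) for x in d_tgt]  # (input in src, target in tgt)
        # Hypothetical: train encoder/decoder and return the new translators.
        m_s2t, m_t2s = train_step(d_src, d_tgt, pairs_for_src, pairs_for_tgt)
    return m_s2t, m_t2s
```

The corruption $C$ and the discriminator update would live inside `train_step` in this outline; they are left out here to keep the control flow visible.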

Algorithm 1: Unsupervised Training for Machine Translation

1: procedure TRAINING($\mathcal{D}_{src}, \mathcal{D}_{tgt}, T$)

3.2 UNSUPERVISED MODEL SELECTION CRITERION

In order to select hyper-parameters, we wish to have a criterion correlated with the translation quality. However, we do not have access to parallel sentences to judge how well our model translates, not even at validation time. Therefore, we propose the surrogate criterion which we show correlates well with BLEU (Papineni et al., 2002), the metric we care about at test time.

For all sentences $x$ in a domain $\ell_{1}$, we translate these sentences to the other domain $\ell_{2}$, and then translate the resulting sentences back to $\ell_{1}$. The quality of the model is then evaluated by computing the BLEU score over the original inputs and their reconstructions via this two-step translation process. The performance is then averaged over the two directions, and the selected model is the one with the highest average score.

Given an encoder $e,$ a decoder $d$ and two non-parallel datasets $\mathcal{D}_{s r c}$ and $\mathcal{D}_{t g t},$ we denote $M_{s r c \rightarrow t g t}(x)=d(e(x, s r c), t g t)$ the translation model from $s r c$ to $t g t,$ and $M_{t g t \rightarrow s r c}$ the model in the opposite direction. Our model selection criterion $M S\left(e, d, \mathcal{D}_{s r c}, \mathcal{D}_{\text {tgt }}\right)$ is:

$$ \begin{aligned} M S\left(e, d, \mathcal{D}_{s r c}, \mathcal{D}_{t g t}\right)=& \frac{1}{2} \mathbb{E}_{x \sim \mathcal{D}_{s r c}}\left[\operatorname{BLEU}\left(x, M_{s r c \rightarrow t g t} \circ M_{t g t \rightarrow s r c}(x)\right)\right]+\\ & \frac{1}{2} \mathbb{E}_{x \sim \mathcal{D}_{t g t}}\left[\operatorname{BLEU}\left(x, M_{t g t \rightarrow s r c} \circ M_{s r c \rightarrow t g t}(x)\right)\right] \end{aligned} $$
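A minimal sketch of this round-trip criterion follows. The scoring function is passed in as a parameter; the `unigram_overlap` helper used in the example is a deliberately crude stand-in and is not BLEU. The translators are hypothetical dictionary lookups chosen so the result can be checked by hand.

```python
from typing import Callable, List

Sentence = List[str]
Translator = Callable[[Sentence], Sentence]


def model_selection_score(
    d_src: List[Sentence],
    d_tgt: List[Sentence],
    src2tgt: Translator,
    tgt2src: Translator,
    score: Callable[[Sentence, Sentence], float],
) -> float:
    """Average round-trip reconstruction score over both directions."""
    src_scores = [score(x, tgt2src(src2tgt(x))) for x in d_src]  # src -> tgt -> src
    tgt_scores = [score(x, src2tgt(tgt2src(x))) for x in d_tgt]  # tgt -> src -> tgt
    return 0.5 * sum(src_scores) / len(src_scores) + 0.5 * sum(tgt_scores) / len(tgt_scores)


def unigram_overlap(reference: Sentence, hypothesis: Sentence) -> float:
    """Stand-in metric (NOT BLEU): fraction of hypothesis words found in the reference."""
    if not hypothesis:
        return 0.0
    return sum(w in reference for w in hypothesis) / len(hypothesis)


# Hypothetical lossy translators: "dog" and "cat" both map to "chat".
s2t = lambda s: [{"cat": "chat", "dog": "chat"}.get(w, w) for w in s]
t2s = lambda s: [{"chat": "cat"}.get(w, w) for w in s]

ms = model_selection_score([["cat"], ["dog"]], [["chat"]], s2t, t2s, unigram_overlap)
# src round trips score 1.0 and 0.0, the tgt round trip scores 1.0, so ms = 0.75
```

In practice one would plug in a real BLEU implementation for `score`; only monolingual sentences are needed on both sides, which is what keeps the criterion unsupervised.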

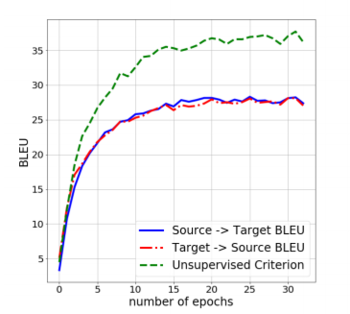

Figure 3 shows a typical example of the correlation between this measure and the final translation model performance (evaluated here using a parallel dataset).

The unsupervised model selection criterion is used both to a) determine when to stop training and b) to select the best hyper-parameter setting across different experiments. In the former case, the Spearman correlation coefficient between the proposed criterion and BLEU on the test set is 0.95 on average. In the latter case, the coefficient is on average 0.75, which is fine but not nearly as good. For instance, the BLEU score on the test set of models selected with the unsupervised criterion is sometimes up to 1 or 2 BLEU points below the score of models selected using a small validation set of 500 parallel sentences.

References

| | Author | volume | Date Value | title | type | journal | titleUrl | doi | note | year |
|---|---|---|---|---|---|---|---|---|---|---|
| 2017 UnsupervisedMachineTranslationU | Guillaume Lample, Alexis Conneau, Ludovic Denoyer, Marc'Aurelio Ranzato | | | Unsupervised Machine Translation Using Monolingual Corpora Only | | | | | | 2017 |