2019 DatatoTextGenerationwithContent

- (Puduppully et al., 2019) ⇒ Ratish Puduppully, Li Dong, and Mirella Lapata. (2019). “Data-to-Text Generation with Content Selection and Planning.” In: Proceedings of the AAAI Conference on Artificial Intelligence.

Subject Headings: Data-to-Text Generation.

Notes

Cited By

- Google Scholar: ~ 50 Citations.

- Semantic Scholar: ~ 50 Citations.

- MS Academic: ~ 35 Citations.

Quotes

Abstract

Recent advances in data-to-text generation have led to the use of large-scale datasets and neural network models which are trained end-to-end, without explicitly modeling what to say and in what order. In this work, we present a neural network architecture which incorporates content selection and planning without sacrificing end-to-end training. We decompose the generation task into two stages. Given a corpus of data records (paired with descriptive documents), we first generate a content plan highlighting which information should be mentioned and in which order and then generate the document while taking the content plan into account. Automatic and human-based evaluation experiments show that our model outperforms strong baselines improving the state-of-the-art on the recently released ROTOWIRE dataset.

1 Introduction

Data-to-text generation broadly refers to the task of automatically producing text from non-linguistic input (Reiter and Dale 2000; Gatt and Krahmer 2018). The input may be in various forms including databases of records, spreadsheets, expert system knowledge bases, simulations of physical systems, and so on. Table 1 shows an example in the form of a database containing statistics on NBA basketball games, and a corresponding game summary.

Traditional methods for data-to-text generation (Kukich 1983; McKeown 1992) implement a pipeline of modules including content planning (selecting specific content from some input and determining the structure of the output text), sentence planning (determining the structure and lexical content of each sentence), and surface realization (converting the sentence plan to a surface string). Recent neural generation systems (Lebret et al. 2016; Mei et al. 2016; Wiseman et al. 2017) do not explicitly model any of these stages; rather, they are trained in an end-to-end fashion using the very successful encoder-decoder architecture (Bahdanau et al. 2015) as their backbone.

Despite producing overall fluent text, neural systems have difficulty capturing long-term structure and generating documents more than a few sentences long. Wiseman et al. (2017) show that neural text generation techniques perform poorly at content selection and struggle to maintain inter-sentential coherence and, more generally, a reasonable ordering of the selected facts in the output text. Additional challenges include avoiding redundancy and being faithful to the input. Interestingly, comparisons against template-based methods show that neural techniques do not fare well on metrics of content selection recall and factual output generation (i.e., they often hallucinate statements which are not supported by the facts in the database).

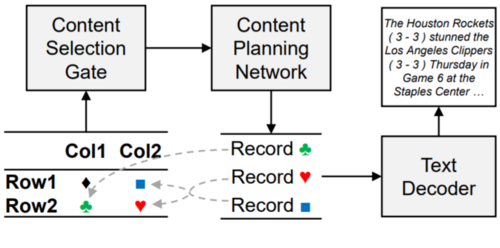

In this paper, we address these shortcomings by explicitly modeling content selection and planning within a neural data-to-text architecture. Our model learns a content plan from the input and conditions on the content plan in order to generate the output document (see Figure 1 for an illustration). An explicit content planning mechanism has at least three advantages for multi-sentence document generation: it represents a high-level organization of the document structure, allowing the decoder to concentrate on the easier tasks of sentence planning and surface realization; it makes the process of data-to-document generation more interpretable by generating an intermediate representation; and it reduces redundancy in the output, since the content plan is less likely to contain the same information in multiple places.


We train our model end-to-end using neural networks and evaluate its performance on ROTOWIRE (Wiseman et al. 2017), a recently released dataset which contains statistics of NBA basketball games paired with human-written summaries (see Table 1). Automatic and human evaluation show that modeling content selection and planning improves generation considerably over competitive baselines.

Table 1: Excerpt of the input records for an NBA game (team statistics, then player statistics).

| TEAM | WIN | LOSS | PTS | FG_PCT | RB | AST | ... |
|---|---|---|---|---|---|---|---|
| Pacers | 4 | 6 | 99 | 42 | 40 | 17 | ... |
| Celtics | 5 | 4 | 105 | 44 | 47 | 22 | ... |

| PLAYER | H/V | AST | RB | PTS | FG | CITY | ... |
|---|---|---|---|---|---|---|---|
| Jeff Teague | H | 4 | 3 | 20 | 4 | Indiana | ... |
| Miles Turner | H | 1 | 8 | 17 | 6 | Indiana | ... |
| Isaiah Thomas | V | 5 | 0 | 23 | 4 | Boston | ... |
| Kelly Olynyk | V | 4 | 6 | 16 | 6 | Boston | ... |
| Amir Johnson | V | 3 | 9 | 14 | 4 | Boston | ... |
| ... | ... | ... | ... | ... | ... | ... | ... |

2 Related Work

The generation literature provides multiple examples of content selection components developed for various domains which are either hand-built (Kukich 1983; McKeown 1992; Reiter and Dale 1997; Duboue and McKeown 2003) or learned from data (Barzilay and Lapata 2005; Duboue and McKeown 2001; 2003; Liang et al. 2009; Angeli et al. 2010; Kim and Mooney 2010; Konstas and Lapata 2013). Likewise, creating summaries of sports games has been a topic of interest since the early beginnings of generation systems (Robin 1994; Tanaka-Ishii et al. 1998).

Earlier work on content planning has relied on generic planners (Dale 1988), planners based on Rhetorical Structure Theory (Hovy 1993), and schemas (McKeown et al. 1997). Content planners are defined by analysing target texts and devising hand-crafted rules. Duboue and McKeown (2001) study ordering constraints for content plans and, in follow-on work (Duboue and McKeown 2002), learn a content planner from an aligned corpus of inputs and human outputs. A few researchers (Mellish et al. 1998; Karamanis 2004) select content plans according to a ranking function.

More recent work focuses on end-to-end systems instead of individual components. However, most models make simplifying assumptions such as generation without any content selection or planning (Belz 2008; Wong and Mooney 2007) or content selection without planning (Konstas and Lapata 2012; Angeli et al. 2010; Kim and Mooney 2010). An exception is Konstas and Lapata (2013), who incorporate content plans represented as grammar rules operating on the document level. Their approach works reasonably well with weather forecasts but does not scale easily to larger databases with richer vocabularies and longer text descriptions. The model relies on the EM algorithm (Dempster, Laird, and Rubin 1977) to learn the weights of the grammar rules, which can be very numerous even when tokens are aligned to database records as a preprocessing step.

Our work is closest to recent neural network models which learn generators from data and accompanying text resources. Most previous approaches generate from Wikipedia infoboxes focusing either on single sentences (Lebret et al. 2016; 2017; Sha et al. 2017; Liu et al. 2017) or short texts (Perez-Beltrachini and Lapata 2018). Mei et al. (2016) use a neural encoder-decoder model to generate weather forecasts and soccer commentaries, while Wiseman et al. (2017) generate NBA game summaries (see Table 1). They introduce a new dataset for data-to-document generation which is sufficiently large for neural network training and adequately challenging for testing the capabilities of document-scale text generation (e.g., the average summary length is 330 words and the average number of input records is 628). Moreover, they propose various automatic evaluation measures for assessing the quality of system output. Our model follows on from Wiseman et al. (2017) addressing the challenges for data-to-text generation identified in their work. We are not aware of any previous neural network-based approaches which incorporate content selection and planning mechanisms and generate multi-sentence documents. Perez-Beltrachini and Lapata (2018) introduce a content selection component (based on multi-instance learning) without content planning, while Liu et al. (2017) propose a sentence planning mechanism which orders the contents of a Wikipedia infobox so as to generate a single sentence.

3 Problem Formulation

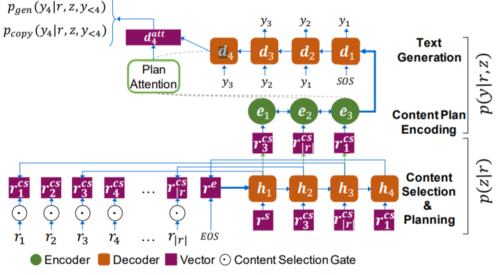

The input to our model is a table of records (see the left-hand side of Table 1). Each record $r_j$ has four features: its type ($r_{j,1}$; e.g., LOSS, CITY), entity ($r_{j,2}$; e.g., Pacers, Miles Turner), value ($r_{j,3}$; e.g., 11, Indiana), and whether a player is on the home or away team ($r_{j,4}$; see column H/V in Table 1), represented as $\{r_{j,k}\}_{k=1}^{4}$. The output $y$ is a document containing words $y = y_1 \cdots y_{|y|}$, where $|y|$ is the document length. Figure 2 shows the overall architecture of our model, which consists of two stages: (a) content selection and planning operates on the input records of a database and produces a content plan specifying which records are to be verbalized in the document and in which order (see Table 2); and (b) text generation produces the output text given the content plan as input; at each decoding step, the generation model attends over vector representations of the records in the content plan.


Let $r = \{r_j\}_{j=1}^{|r|}$ denote a table of input records and $y$ the output text. We model $p(y|r)$ by marginalizing the joint probability of text $y$ and content plan $z$, given input $r$. We further decompose $p(y, z|r)$ into $p(z|r)$, a content selection and planning phase, and $p(y|r, z)$, a text generation phase: $$ p(y|r) = \sum_{z} p(y, z|r) = \sum_{z}p(z|r)p(y|r, z)$$ In the following, we explain how the components $p(z|r)$ and $p(y|r, z)$ are estimated.
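To make the marginalization concrete, the toy computation below sums $p(z|r)\,p(y|r,z)$ over a handful of candidate plans for one fixed table $r$ and one fixed text $y$. The plans and all probabilities are made-up numbers for illustration only. For a real ROTOWIRE table the number of possible plans is exponential in the plan length, so the sum is never enumerated; the training objective described under Training and Inference instead conditions on a single extracted gold plan.

```python
# Toy illustration of p(y|r) = sum_z p(z|r) * p(y|r,z).
# All plans and probabilities are hypothetical numbers for one fixed r and y.
p_plan = {                 # p(z | r) over three candidate plans
    ("TEAM-PTS", "PTS"): 0.6,
    ("PTS", "TEAM-PTS"): 0.3,
    ("PTS",): 0.1,
}
p_text_given_plan = {      # p(y | r, z) for the fixed output text y
    ("TEAM-PTS", "PTS"): 0.05,
    ("PTS", "TEAM-PTS"): 0.02,
    ("PTS",): 0.001,
}

def marginal_text_prob(p_plan, p_text_given_plan):
    """Sum the joint p(y, z | r) = p(z | r) p(y | r, z) over all plans z."""
    return sum(p_plan[z] * p_text_given_plan[z] for z in p_plan)

print(marginal_text_prob(p_plan, p_text_given_plan))  # approximately 0.0361
```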

Record Encoder

The input to our model is a table of unordered records, each represented as features $\{r_{j,k}\}^4 _{k=1}$. Following previous work (Yang et al. 2017; Wiseman et al. 2017), we embed features into vectors, and then use a multilayer perceptron to obtain a vector representation $r_j$ for each record:

$$r_j = \operatorname{ReLU}(W_r[r_{j,1}; r_{j,2}; r_{j,3}; r_{j,4}] + b_r)$$ where $[;]$ indicates vector concatenation, $W_r \in \mathbb{R}^{n\times4n}$ and $b_r \in \mathbb{R}^{n}$ are parameters, and $\operatorname{ReLU}$ is the rectifier activation function.
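A minimal NumPy sketch of the record encoder follows, under stated assumptions. It assumes one embedding table per feature (type, entity, value, H/V), which the text does not specify. The vocabularies and the small size $n = 8$ are hypothetical; the paper uses $n = 600$. Each record's four feature embeddings are concatenated into a $4n$-vector and passed through a single ReLU layer.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 8  # hypothetical small size; the paper reports 600

# Hypothetical toy vocabularies, one per record feature.
vocab = {
    "type": {"PTS": 0, "AST": 1, "TEAM-PTS": 2},
    "entity": {"Isaiah_Thomas": 0, "Celtics": 1},
    "value": {"23": 0, "5": 1, "105": 2},
    "hv": {"H": 0, "V": 1},
}
# Assumption: a separate embedding table per feature, each row an n-dim vector.
emb = {f: rng.normal(size=(len(v), n)) for f, v in vocab.items()}
W_r = rng.normal(size=(n, 4 * n)) * 0.1
b_r = np.zeros(n)

def encode_record(rtype, entity, value, hv):
    """r_j = ReLU(W_r [e(type); e(entity); e(value); e(hv)] + b_r)."""
    x = np.concatenate([
        emb["type"][vocab["type"][rtype]],
        emb["entity"][vocab["entity"][entity]],
        emb["value"][vocab["value"][value]],
        emb["hv"][vocab["hv"][hv]],
    ])                                     # shape (4n,)
    return np.maximum(0.0, W_r @ x + b_r)  # shape (n,)

records = [("PTS", "Isaiah_Thomas", "23", "V"),
           ("AST", "Isaiah_Thomas", "5", "V"),
           ("TEAM-PTS", "Celtics", "105", "V")]
R = np.stack([encode_record(*rec) for rec in records])  # one row per record
print(R.shape)  # (3, 8)
```

Because the table is unordered, each record is encoded independently; interactions between records are introduced only by the content selection gate.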

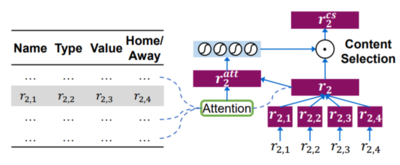

Content Selection Gate

The context of a record can be useful in determining its importance vis-à-vis other records in the table. For example, if a player scores many points, it is likely that other meaningfully related records such as field goals, three-pointers, or rebounds will be mentioned in the output summary. To better capture such dependencies among records, we make use of the content selection gate mechanism shown in Figure 3. We first compute attention scores $\alpha_{j,k}$ over the input table and use them to obtain an attentional vector $\mathbf{r}^{att}_j$ for each record $r_j$: $$\alpha_{j,k} \propto \exp(\mathbf{r}_j^{\top}\mathbf{W}_a\mathbf{r}_k)$$ $$\mathbf{c}_j=\sum_{k\neq j}\alpha_{j,k} \mathbf{r}_k$$ $$\mathbf{r}_j^{att} = \mathbf{W}_g [\mathbf{r}_j; \mathbf{c}_j]$$ where $\mathbf{W}_a \in \mathbb{R}^{n \times n}$ and $\mathbf{W}_g \in \mathbb{R}^{n \times 2n}$ are parameter matrices, and $\sum_{k\neq j}\alpha_{j,k} = 1$. We next apply the content selection gating mechanism to $\mathbf{r}_j$ and obtain the new record representation $\mathbf{r}^{cs}_j$ via: $$\mathbf{g}_{j}=\operatorname{sigmoid}\left(\mathbf{r}_{j}^{att}\right)$$ $$\mathbf{r}_{j}^{cs}=\mathbf{g}_{j} \odot \mathbf{r}_{j}$$ where $\odot$ denotes element-wise multiplication, and the gate $\mathbf{g}_{j} \in [0,1]^{n}$ controls the amount of information flowing from $\mathbf{r}_{j}$. In other words, each element in $\mathbf{r}_{j}$ is weighted by the corresponding element of the content selection gate $\mathbf{g}_{j}$.
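The gate equations translate almost line for line into NumPy. In the sketch below, the record vectors and the weight matrices are random stand-ins rather than trained parameters. The attention for record $j$ is normalized over the other records only ($k \neq j$). Since the ReLU record vectors are non-negative and the sigmoid gate lies in $(0, 1)$, every gated element can only shrink.

```python
import numpy as np

def softmax(x):
    e = np.exp(x - x.max())
    return e / e.sum()

def content_selection_gate(R, W_a, W_g):
    """Gate record vectors R of shape (|r|, n) using attention over the other records.

    alpha_{j,k} ∝ exp(r_j^T W_a r_k) for k != j,  c_j = sum_k alpha_{j,k} r_k,
    r_j^att = W_g [r_j; c_j],  g_j = sigmoid(r_j^att),  r_j^cs = g_j * r_j.
    """
    num = R.shape[0]
    scores = R @ W_a @ R.T                 # scores[j, k] = r_j^T W_a r_k
    R_cs = np.empty_like(R)
    for j in range(num):
        others = [k for k in range(num) if k != j]
        alpha = softmax(scores[j, others])  # sums to 1 over k != j
        c_j = alpha @ R[others]             # context vector, shape (n,)
        r_att = W_g @ np.concatenate([R[j], c_j])
        g_j = 1.0 / (1.0 + np.exp(-r_att))  # sigmoid gate in (0, 1)^n
        R_cs[j] = g_j * R[j]                # element-wise gating
    return R_cs

rng = np.random.default_rng(1)
n, num_records = 8, 5
R = np.abs(rng.normal(size=(num_records, n)))  # stand-in for ReLU record vectors
W_a = rng.normal(size=(n, n)) * 0.1
W_g = rng.normal(size=(n, 2 * n)) * 0.1
R_cs = content_selection_gate(R, W_a, W_g)
print(R_cs.shape)  # (5, 8)
```

The explicit loop mirrors the per-record equations. A batched version would mask the diagonal of `scores` with $-\infty$ before a row-wise softmax.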

Content Planning

In our generation task, the output text is long but follows a canonical structure. Game summaries typically begin by discussing which team won or lost, continue with various statistics involving individual players and their teams (e.g., who performed exceptionally well or under-performed), and finish with any upcoming games. We hypothesize that generation would benefit from an explicit plan specifying both what to say and in which order. Our model learns such content plans from training data. However, notice that ROTOWIRE (see Table 1) and most similar data-to-text datasets do not naturally contain content plans. Fortunately, we can obtain these relatively straightforwardly following an information extraction approach (which we explain in Section 4).

Suffice it to say that plans are extracted by mapping the text in the summaries onto entities in the input table, their values, and types (i.e., relations). A plan is a sequence of pointers with each entry pointing to an input record $\left\{r_{j}\right\}_{j=1}^{|r|}$. An excerpt of a plan is shown in Table 2. The order in the plan corresponds to the sequence in which entities appear in the game summary. Let $z=z_{1} \ldots z_{|z|}$ denote the content planning sequence. Each $z_k$ points to an input record, i.e., $z_{k} \in\left\{r_{j}\right\}_{j=1}^{|r|}$. Given the input records, the probability $p(z|r)$ is decomposed as: $$ p(z \mid r)=\prod_{k=1}^{|z|} p\left(z_{k} \mid z_{<k}, r\right) $$ where $z_{<k}=z_{1} \ldots z_{k-1}$.

Since the output tokens of the content planning stage correspond to positions in the input sequence, we make use of Pointer Networks (Vinyals et al. 2015). These use attention to point to the tokens of the input sequence rather than to create a weighted representation of source encodings. As shown in Figure 2, given $\left\{r_{j}\right\}_{j=1}^{|r|}$, we use an LSTM decoder to generate tokens corresponding to positions in the input. The first hidden state of the decoder is initialized by $\operatorname{avg}\left(\left\{\mathbf{r}_{j}^{cs}\right\}_{j=1}^{|r|}\right)$, i.e., the average of record vectors. At decoding step $k$, let $\mathbf{h}_{k}$ be the hidden state of the LSTM. We model $p\left(z_{k}=r_{j} \mid z_{<k}, r\right)$ as the attention over input records: $$ p\left(z_{k}=r_{j} \mid z_{<k}, r\right) \propto \exp \left(\mathbf{h}_{k}^{\top} \mathbf{W}_{c} \mathbf{r}_{j}^{cs}\right) $$ where the probability is normalized to 1 and $\mathbf{W}_{c}$ are parameters. Once $z_{k}$ points to record $r_{j}$, we use the corresponding vector $\mathbf{r}_{j}^{cs}$ as the input of the next LSTM unit in the decoder.
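The sketch below covers the pointer-network planner together with the factorization of $p(z|r)$. It scores a gold plan with teacher forcing, summing $\log p(z_k \mid z_{<k}, r)$, and it also decodes a plan greedily. To keep it short, it substitutes a plain tanh RNN cell for the paper's LSTM. It uses a hypothetical zero start input and decodes a fixed plan length instead of an end-of-plan symbol. None of these simplifications comes from the paper.

```python
import numpy as np

def log_softmax(x):
    """Numerically stable log-softmax of a 1-D array."""
    m = x.max()
    return x - m - np.log(np.exp(x - m).sum())

def plan_log_prob(R_cs, plan, W_c, W_h, W_x):
    """log p(z|r) = sum_k log p(z_k | z_<k, r), scored with teacher forcing."""
    h = R_cs.mean(axis=0)   # first hidden state: average of the record vectors
    x = np.zeros_like(h)    # hypothetical start-of-plan input vector
    total = 0.0
    for z_k in plan:
        h = np.tanh(W_h @ h + W_x @ x)      # simplified RNN cell (stand-in for the LSTM)
        scores = R_cs @ (W_c.T @ h)         # scores[j] = h_k^T W_c r_j^cs
        total += log_softmax(scores)[z_k]   # log-probability of pointing at the gold record
        x = R_cs[z_k]                       # chosen record vector is the next input
    return total

def greedy_plan(R_cs, length, W_c, W_h, W_x):
    """Greedily point at `length` records (no end-of-plan symbol in this sketch)."""
    h = R_cs.mean(axis=0)
    x = np.zeros_like(h)
    plan = []
    for _ in range(length):
        h = np.tanh(W_h @ h + W_x @ x)
        j = int(np.argmax(R_cs @ (W_c.T @ h)))
        plan.append(j)
        x = R_cs[j]
    return plan

rng = np.random.default_rng(2)
n, num_records = 6, 4
R_cs = np.abs(rng.normal(size=(num_records, n)))   # stand-in gated record vectors
W_c, W_h, W_x = (rng.normal(size=(n, n)) * 0.3 for _ in range(3))
print(plan_log_prob(R_cs, [2, 0, 3], W_c, W_h, W_x))
print(greedy_plan(R_cs, 3, W_c, W_h, W_x))
```

Feeding the chosen record's vector back in as the next input is what lets the planner condition each pointer on the records it has already selected.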

Table 2: Excerpt of the content plan for the game summary in Table 1.

| Value | Entity | Type | H/V |
|---|---|---|---|
| Boston | Celtics | TEAM-CITY | V |
| Celtics | Celtics | TEAM-NAME | V |
| 105 | Celtics | TEAM-PTS | V |
| Indiana | Pacers | TEAM-CITY | H |
| Pacers | Pacers | TEAM-NAME | H |
| 99 | Pacers | TEAM-PTS | H |
| 42 | Pacers | TEAM-FG_PCT | H |
| 22 | Pacers | TEAM-FG3_PCT | H |
| 5 | Celtics | TEAM-WIN | V |
| 4 | Celtics | TEAM-LOSS | V |
| Isaiah | Isaiah_Thomas | FIRST_NAME | V |
| Thomas | Isaiah_Thomas | SECOND_NAME | V |
| 23 | Isaiah_Thomas | PTS | V |
| 5 | Isaiah_Thomas | AST | V |
| 4 | Isaiah_Thomas | FGM | V |
| 13 | Isaiah_Thomas | FGA | V |
| Kelly | Kelly_Olynyk | FIRST_NAME | V |
| Olynyk | Kelly_Olynyk | SECOND_NAME | V |
| 16 | Kelly_Olynyk | PTS | V |
| 6 | Kelly_Olynyk | REB | V |
| 4 | Kelly_Olynyk | AST | V |
| ... | ... | ... | ... |

Text Generation

The probability of output text $y$ conditioned on content plan $z$ and input table $r$ is modeled as: $$ p(y \mid r, z)=\prod_{t=1}^{|y|} p\left(y_{t} \mid y_{<t}, z, r\right) $$ where $y_{<t}=y_{1} \ldots y_{t-1}$. We use the encoder-decoder architecture with an attention mechanism to compute $p(y \mid r, z)$. We first encode content plan $z$ into $\left\{\mathbf{e}_{k}\right\}_{k=1}^{|z|}$ using a bidirectional LSTM. Because the content plan is a sequence of input records, we directly feed the corresponding record vectors $\left\{\mathbf{r}_{j}^{cs}\right\}_{j=1}^{|r|}$ as input to the LSTM units, which share the record encoder with the first stage.

The text decoder is also based on a recurrent neural network with LSTM units. The decoder is initialized with the hidden states of the final step in the encoder. At decoding step $t$, the input of the LSTM unit is the embedding of the previously predicted word $y_{t-1}$. Let $\mathbf{d}_{t}$ be the hidden state of the $t$-th LSTM unit. The probability of predicting $y_{t}$ from the output vocabulary is computed via: $$ \beta_{t, k} \propto \exp \left(\mathbf{d}_{t}^{\top} \mathbf{W}_{b} \mathbf{e}_{k}\right) \tag{1}$$ $$ \mathbf{q}_{t}=\sum_{k} \beta_{t, k} \mathbf{e}_{k} $$ $$ \mathbf{d}_{t}^{att}=\tanh \left(\mathbf{W}_{d}\left[\mathbf{d}_{t} ; \mathbf{q}_{t}\right]\right) $$ $$ p_{gen}\left(y_{t} \mid y_{<t}, z, r\right)=\operatorname{softmax}_{y_{t}}\left(\mathbf{W}_{y} \mathbf{d}_{t}^{att}+\mathbf{b}_{y}\right) \tag{2}$$

where $\sum_{k} \beta_{t, k}=1$; $\mathbf{W}_{b} \in \mathbb{R}^{n \times n}$, $\mathbf{W}_{d} \in \mathbb{R}^{n \times 2 n}$, $\mathbf{W}_{y} \in \mathbb{R}^{\left|\mathcal{V}_{y}\right| \times n}$, and $\mathbf{b}_{y} \in \mathbb{R}^{\left|\mathcal{V}_{y}\right|}$ are parameters; and $\left|\mathcal{V}_{y}\right|$ is the output vocabulary size. We further augment the decoder with a copy mechanism, i.e., the ability to copy words directly from the value portions of records in the content plan (i.e., $\left\{z_{k}\right\}_{k=1}^{|z|}$). We experimented with joint (Gu et al. 2016) and conditional copy methods (Gulcehre et al. 2016). Specifically, we introduce a variable $u_{t} \in\{0,1\}$ for each time step to indicate whether the predicted token $y_{t}$ is copied ($u_{t}=1$) or not ($u_{t}=0$). The probability of generating $y_{t}$ is computed by: $$ p\left(y_{t} \mid y_{<t}, z, r\right)=\sum_{u_{t} \in\{0,1\}} p\left(y_{t}, u_{t} \mid y_{<t}, z, r\right) $$ where $u_t$ is marginalized out.
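A single decoder step of Equations (1) and (2) is sketched below in NumPy, with random stand-in states and weights. One assumption is labeled in the code: $\mathbf{W}_y$ is stored with shape $(|\mathcal{V}_y|, n)$, so that $\mathbf{W}_y \mathbf{d}_t^{att}$ has one entry per vocabulary word. The function returns both the attention weights $\beta_{t,k}$, which the copy mechanisms reuse, and the generation distribution $p_{gen}$.

```python
import numpy as np

def softmax(x):
    e = np.exp(x - x.max())
    return e / e.sum()

def decoder_step(d_t, E, W_b, W_d, W_y, b_y):
    """One step of Equations (1)-(2).

    d_t : (n,) decoder hidden state
    E   : (|z|, n) plan encodings e_k
    Returns beta_t over plan entries and p_gen over the output vocabulary.
    """
    beta = softmax(E @ (W_b.T @ d_t))                 # beta_{t,k} ∝ exp(d_t^T W_b e_k)
    q_t = beta @ E                                    # q_t = sum_k beta_{t,k} e_k
    d_att = np.tanh(W_d @ np.concatenate([d_t, q_t])) # attentional hidden state, (n,)
    p_gen = softmax(W_y @ d_att + b_y)                # assumption: W_y has shape (|V_y|, n)
    return beta, p_gen

rng = np.random.default_rng(3)
n, plan_len, vocab_size = 6, 5, 10
d_t = rng.normal(size=n)
E = rng.normal(size=(plan_len, n))
W_b = rng.normal(size=(n, n))
W_d = rng.normal(size=(n, 2 * n))
W_y = rng.normal(size=(vocab_size, n))
b_y = np.zeros(vocab_size)
beta, p_gen = decoder_step(d_t, E, W_b, W_d, W_y, b_y)
print(beta.round(3), p_gen.argmax())
```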

Joint Copy The probability of copying from record values and generating from the vocabulary is globally normalized: $$ p\left(y_{t}, u_{t} \mid y_{<t}, z, r\right) \propto \left\{\begin{array}{ll} \sum_{y_{t} \leftarrow z_{k}} \exp \left(\mathbf{d}_{t}^{\top} \mathbf{W}_{b} \mathbf{e}_{k}\right) & u_{t}=1 \\ \exp \left(\mathbf{W}_{y} \mathbf{d}_{t}^{att}+\mathbf{b}_{y}\right) & u_{t}=0 \end{array}\right. $$

where $y_{t} \leftarrow z_{k}$ indicates that $y_{t}$ can be copied from $z_{k}$, $\mathbf{W}_{b}$ is shared as in Equation (1), and $\mathbf{W}_{y}, \mathbf{b}_{y}$ are shared as in Equation (2).
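In the joint copy variant, a single normalizer is shared by the copy scores and the vocabulary scores, as the following NumPy sketch shows. The vocabulary, plan values, and weights are hypothetical stand-ins. A word that is both in the vocabulary and among the plan's record values receives probability mass from both events, which marginalizes out $u_t$. For numerical stability, the sketch subtracts the maximum score before exponentiating; this does not change the normalized result.

```python
import numpy as np

def joint_copy_probs(d_t, d_att, E, plan_values, vocab, W_b, W_y, b_y):
    """Joint copy: copy and generate scores share one softmax normalizer.

    plan_values[k] is the value string of plan record z_k; vocab maps word -> id.
    Returns a dict word -> p(y_t = word) with u_t summed out.
    """
    copy_logits = E @ (W_b.T @ d_t)          # d_t^T W_b e_k, one per plan entry
    gen_logits = W_y @ d_att + b_y           # W_y d_t^att + b_y, one per vocab word
    m = max(copy_logits.max(), gen_logits.max())
    copy_scores = np.exp(copy_logits - m)    # shift by m for stability
    gen_scores = np.exp(gen_logits - m)
    Z = copy_scores.sum() + gen_scores.sum() # global normalizer over both events
    probs = {word: gen_scores[idx] / Z for word, idx in vocab.items()}  # u_t = 0
    for k, value in enumerate(plan_values):  # u_t = 1, summed over z_k with y_t <- z_k
        probs[value] = probs.get(value, 0.0) + copy_scores[k] / Z
    return probs

rng = np.random.default_rng(4)
n = 6
vocab = {"the": 0, "scored": 1, "points": 2, "23": 3}   # hypothetical vocabulary
plan_values = ["Boston", "23", "5"]                     # values of plan records
E = rng.normal(size=(len(plan_values), n))
d_t, d_att = rng.normal(size=n), rng.normal(size=n)
W_b = rng.normal(size=(n, n))
W_y = rng.normal(size=(len(vocab), n))
b_y = np.zeros(len(vocab))
probs = joint_copy_probs(d_t, d_att, E, plan_values, vocab, W_b, W_y, b_y)
print(sorted(probs))
```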

Conditional Copy The variable $u_t$ is first computed as a switch gate and is then used to obtain the output probability: $$ p\left(u_{t}=1 \mid y_{<t}, z, r\right)=\operatorname{sigmoid}\left(\mathbf{w}_{u} \cdot \mathbf{d}_{t}+b_{u}\right) $$ $$ p\left(y_{t}, u_{t} \mid y_{<t}, z, r\right)= \left\{\begin{array}{ll} p\left(u_{t} \mid y_{<t}, z, r\right) \sum_{y_{t} \leftarrow z_{k}} \beta_{t, k} & u_{t}=1 \\ p\left(u_{t} \mid y_{<t}, z, r\right) p_{gen}\left(y_{t} \mid y_{<t}, z, r\right) & u_{t}=0 \end{array}\right. $$

where $\beta_{t, k}$ and $p_{gen}\left(y_{t} \mid y_{<t}, z, r\right)$ are computed as in Equations (1) and (2), and $\mathbf{w}_{u} \in \mathbb{R}^{n}$, $b_{u} \in \mathbb{R}$ are parameters. Following Gulcehre et al. (2016) and Wiseman et al. (2017), if $y_{t}$ appears in the content plan during training, we assume that $y_{t}$ is copied (i.e., $u_{t}=1$).
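The conditional copy probability is a two-way mixture, which the plain-Python sketch below computes. The switch $p(u_t = 1)$ weights the attention mass on plan entries whose value equals the word, and $1 - p(u_t = 1)$ weights $p_{gen}$. All numbers and names are hypothetical. The `copy_label` helper sketches the training-time heuristic quoted above, which sets $u_t = 1$ whenever the word appears among the plan's values.

```python
import math

def switch_prob(w_u, d_t, b_u):
    """p(u_t = 1) = sigmoid(w_u . d_t + b_u)."""
    s = sum(a * b for a, b in zip(w_u, d_t)) + b_u
    return 1.0 / (1.0 + math.exp(-s))

def conditional_copy_prob(word, p_copy, beta, plan_values, p_gen, vocab):
    """p(y_t = word) = p(u=1) * sum_{k: word <- z_k} beta_k + p(u=0) * p_gen(word)."""
    copy_mass = sum(b for b, v in zip(beta, plan_values) if v == word)
    gen_mass = p_gen[vocab[word]] if word in vocab else 0.0  # OOV words cannot be generated
    return p_copy * copy_mass + (1.0 - p_copy) * gen_mass

def copy_label(word, plan_values):
    """Training heuristic: treat y_t as copied if it appears in the content plan."""
    return 1 if word in plan_values else 0

beta = [0.5, 0.3, 0.2]                  # attention over three plan entries
plan_values = ["23", "5", "23"]         # hypothetical record values of z_1..z_3
vocab = {"scored": 0, "23": 1, "points": 2}
p_gen = [0.6, 0.1, 0.3]
p_copy = 0.8
print(conditional_copy_prob("23", p_copy, beta, plan_values, p_gen, vocab))  # ≈ 0.58
```

Here "23" collects copy mass from two plan entries (0.5 + 0.2) as well as a small generation probability, which matches the sum over $y_t \leftarrow z_k$ in the equation.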

Training and Inference

Our model is trained to maximize the log-likelihood of the gold content plan given table records $r$ and the gold output text given the content plan and table records: $$\max \sum_{(r, z, y) \in \mathcal{D}} \log p(z \mid r)+\log p(y \mid r, z)$$ where $\mathcal{D}$ represents training examples (input records, plans, and game summaries). During inference, the output for input $r$ is predicted by: $$ \hat{z}=\underset{z^{\prime}}{\arg \max } p\left(z^{\prime} \mid r\right) $$ $$ \hat{y}=\underset{y^{\prime}}{\arg \max } p\left(y^{\prime} \mid r, \hat{z}\right) $$

where $z^{\prime}$ and $y^{\prime}$ represent content plan and output text candidates, respectively. For each stage, we utilize beam search to approximately obtain the best results.
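The text says only that beam search approximates the two argmax steps, with a beam size of 5 per the training configuration. The generic sketch below could serve either stage, over pointer indices or over words. It assumes a hypothetical `step_logprobs(prefix)` callback returning next-token log-probabilities and applies no length normalization; neither detail comes from the paper. The toy distribution shows why a beam helps: greedy search commits to "a" and ends with probability 0.3, while a beam of 2 recovers "b" with probability 0.4.

```python
import math

def beam_search(step_logprobs, start, eos, beam_size=5, max_len=20):
    """Approximate argmax over sequences; step_logprobs(prefix) -> {token: log p}."""
    beams = [([start], 0.0)]
    finished = []
    for _ in range(max_len):
        candidates = []
        for prefix, score in beams:
            for tok, lp in step_logprobs(prefix).items():
                candidates.append((prefix + [tok], score + lp))
        candidates.sort(key=lambda c: c[1], reverse=True)  # stable for ties
        beams = []
        for seq, score in candidates[:beam_size]:
            (finished if seq[-1] == eos else beams).append((seq, score))
        if not beams:
            break
    finished.extend(beams)  # keep unfinished hypotheses if max_len is reached
    return max(finished, key=lambda c: c[1])

def toy_step(prefix):
    """Hypothetical next-token distributions keyed by prefix."""
    table = {
        ("<s>",): {"a": 0.6, "b": 0.4},
        ("<s>", "a"): {"</s>": 0.5, "c": 0.5},
        ("<s>", "a", "c"): {"</s>": 1.0},
        ("<s>", "b"): {"</s>": 1.0},
    }
    return {t: math.log(p) for t, p in table[tuple(prefix)].items()}

print(beam_search(toy_step, "<s>", "</s>", beam_size=1)[0])  # ['<s>', 'a', '</s>']
print(beam_search(toy_step, "<s>", "</s>", beam_size=2)[0])  # ['<s>', 'b', '</s>']
```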

4 Experimental Setup

Data We trained and evaluated our model on ROTOWIRE (Wiseman et al. 2017), a dataset of basketball game summaries, paired with corresponding box- and line-score tables. The summaries are professionally written, relatively well structured and long (337 words on average). The number of record types is 39, the average number of records is 628, the vocabulary size is 11.3K words and token count is 1.6M. The dataset is ideally suited for document-scale generation. We followed the data partitions introduced in Wiseman et al. (2017): we trained on 3,398 summaries, tested on 728, and used 727 for validation.

Content Plan Extraction We extracted content plans from the ROTOWIRE game summaries following an information extraction (IE) approach. Specifically, we used the IE system introduced in Wiseman et al. (2017) which identifies candidate entity (i.e., player, team, and city) and value (i.e., number or string) pairs that appear in the text, and then predicts the type (aka relation) of each candidate pair. For instance, in the document in Table 1, the IE system might identify the pair “Jeff Teague, 20” and then predict that their relation is “PTS”, extracting the record (Jeff Teague, 20, PTS). Wiseman et al. (2017) train an IE system on ROTOWIRE by determining word spans which could represent entities (i.e., by matching them against players, teams or cities in the database) and numbers. They then consider each entity-number pair in the same sentence, and if there is a record in the database with matching entities and values, the pair is assigned the corresponding record type or otherwise given the label “none” to indicate unrelated pairs.

We adopted their IE system architecture, which predicts relations by ensembling 3 convolutional models and 3 bidirectional LSTM models. We trained this system on the training portion of the ROTOWIRE corpus. On held-out data it achieved 94% accuracy and recalled approximately 80% of the relations licensed by the records. Given the output of the IE system, a content plan simply consists of (entity, value, record type, H/V) tuples in their order of appearance in a game summary (the content plan for the summary in Table 1 is shown in Table 2). Player names are pre-processed to indicate the individual's first name and surname (see Isaiah and Thomas in Table 2); team records are also pre-processed to indicate the name of the team's city and the team itself (see Boston and Celtics in Table 2).
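The following plain-Python sketch illustrates the two bookkeeping steps around the IE system. The first is the distant labeling used to create its training data: an (entity, value) pair found in a sentence receives the type of a matching database record, or "none" if no record matches. The second maps the IE output, kept in order of appearance, to record indices to form a plan. This is not the trained CNN/LSTM ensemble. The function names are hypothetical, and taking the first match when several records share an entity and value is a simplification assumed here.

```python
def label_pairs(sentence_pairs, records):
    """Distant labeling: (entity, value) -> matching record type, else "none".

    records: (entity, value, type, hv) tuples from the game's tables.
    Assumption: if several records match, the first one's type is used.
    """
    index = {}
    for entity, value, rtype, _hv in records:
        index.setdefault((entity, value), []).append(rtype)
    return [index.get(pair, ["none"])[0] for pair in sentence_pairs]

def build_plan(extracted, records):
    """Map IE tuples (in order of appearance) to indices of input records."""
    position = {rec: i for i, rec in enumerate(records)}
    return [position[t] for t in extracted if t in position]

records = [
    ("Celtics", "105", "TEAM-PTS", "V"),
    ("Pacers", "99", "TEAM-PTS", "H"),
    ("Isaiah_Thomas", "23", "PTS", "V"),
    ("Isaiah_Thomas", "5", "AST", "V"),
    ("Kelly_Olynyk", "16", "PTS", "V"),
]
print(label_pairs([("Isaiah_Thomas", "23"), ("Isaiah_Thomas", "99")], records))
# ['PTS', 'none']
extracted = [("Celtics", "105", "TEAM-PTS", "V"),
             ("Pacers", "99", "TEAM-PTS", "H"),
             ("Kelly_Olynyk", "16", "PTS", "V")]
print(build_plan(extracted, records))  # [0, 1, 4]
```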

Training Configuration We validated model hyperparameters on the development set. We did not tune the dimensions of word embeddings and LSTM hidden layers; we used the same value of 600 reported in Wiseman et al. (2017). We used one-layer pointer networks during content planning and two-layer LSTMs during text generation. Input feeding (Luong et al. 2015) was employed for the text decoder. We applied dropout (Zaremba et al. 2014) at a rate of 0.3. Models were trained for 25 epochs with the Adagrad optimizer (Duchi et al. 2011); the initial learning rate was 0.15, learning rate decay was selected from {0.5, 0.97}, and batch size was 5. For text decoding, we made use of BPTT (Mikolov et al. 2010) and set the truncation size to 100. We set the beam size to 5 during inference. All models are implemented in OpenNMT-py (Klein et al. 2017).

5 Results

Automatic Evaluation We evaluated model output using the metrics defined in Wiseman et al. (2017). The idea is to employ a fairly accurate IE system (see the description in Section 4) on the gold and automatic summaries and compare whether the identified relations align or diverge.

Let $\hat{y}$ be the gold output, and $y$ the system output. Content selection (CS) measures how well (in terms of precision and recall) the records extracted from $y$ match those found in $\hat{y}$. Relation generation (RG) measures the factuality of the generation system as the proportion of records extracted from $y$ which are also found in $r$ (in terms of precision and number of unique relations). Content ordering (CO) measures how well the system orders the records it has chosen and is computed as the normalized Damerau-Levenshtein Distance between the sequences of records extracted from $y$ and $\hat{y}$. In addition to these metrics, we report BLEU (Papineni et al. 2002), with human-written game summaries as reference.
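The three IE-based metrics reduce to simple set and sequence comparisons once records have been extracted, as the plain-Python sketch below shows. Several details are assumptions rather than facts from the text: CS is computed over sets of unique records, and CO uses the restricted Damerau-Levenshtein (optimal string alignment) distance. CO is also reported as 1 minus the distance normalized by the longer sequence's length, so that higher is better, as the DLD% columns suggest. The reference implementation of Wiseman et al. (2017) may differ in these details.

```python
def cs_precision_recall(gen_records, gold_records):
    """CS: precision/recall of records from y against records from the gold text (as sets)."""
    gen, gold = set(gen_records), set(gold_records)
    overlap = len(gen & gold)
    precision = overlap / len(gen) if gen else 0.0
    recall = overlap / len(gold) if gold else 0.0
    return precision, recall

def rg_precision(gen_records, table_records):
    """RG: fraction of records extracted from y that are supported by the table r."""
    table = set(table_records)
    return sum(rec in table for rec in gen_records) / len(gen_records) if gen_records else 0.0

def osa_distance(a, b):
    """Restricted Damerau-Levenshtein (optimal string alignment) distance."""
    d = [[0] * (len(b) + 1) for _ in range(len(a) + 1)]
    for i in range(len(a) + 1):
        d[i][0] = i
    for j in range(len(b) + 1):
        d[0][j] = j
    for i in range(1, len(a) + 1):
        for j in range(1, len(b) + 1):
            cost = 0 if a[i - 1] == b[j - 1] else 1
            d[i][j] = min(d[i - 1][j] + 1, d[i][j - 1] + 1, d[i - 1][j - 1] + cost)
            if i > 1 and j > 1 and a[i - 1] == b[j - 2] and a[i - 2] == b[j - 1]:
                d[i][j] = min(d[i][j], d[i - 2][j - 2] + 1)  # adjacent transposition
    return d[len(a)][len(b)]

def content_ordering(gen_seq, gold_seq):
    """CO: 1 - distance / length of the longer sequence (higher is better)."""
    longest = max(len(gen_seq), len(gold_seq))
    return 1.0 - osa_distance(gen_seq, gold_seq) / longest if longest else 1.0

print(cs_precision_recall(["a", "b"], ["b", "c", "d"]))  # (0.5, 0.333...)
print(content_ordering(["A", "B"], ["B", "A"]))          # 0.5
```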

Our results on the development set are summarized in Table 3. We compare our Neural Content Planning model (NCP for short) against the two encoder-decoder (ED) models presented in Wiseman et al. (2017) with joint copy (JC) and conditional copy (CC), respectively. In addition to our own re-implementation of these models, we include the best scores reported in Wiseman et al. (2017) which were obtained with an encoder-decoder model enhanced with conditional copy (WS-2017). Table 3 also shows results when NCP uses oracle content plans (OR) as input. In addition, we report the performance of a template-based generator (Wiseman et al. 2017) which creates a document consisting of eight template sentences: an introductory sentence (who won/lost), six player-specific sentences (based on the six highest-scoring players in the game), and a conclusion sentence.

Table 3: Automatic evaluation on the ROTOWIRE development set.

| Model | RG # | RG P% | CS P% | CS R% | CO DLD% | BLEU |
|---|---|---|---|---|---|---|
| TEMPL | 54.29 | 99.92 | 26.61 | 59.16 | 14.42 | 8.51 |
| WS-2017 | 23.95 | 75.10 | 28.11 | 35.86 | 15.33 | 14.57 |
| ED+JC | 22.98 | 76.07 | 27.70 | 33.29 | 14.36 | 13.22 |
| ED+CC | 21.94 | 75.08 | 27.96 | 32.71 | 15.03 | 13.31 |
| NCP+JC | 33.37 | 87.40 | 32.20 | 48.56 | 17.98 | 14.92 |
| NCP+CC | 33.88 | 87.51 | 33.52 | 51.21 | 18.57 | 16.19 |
| NCP+OR | 21.59 | 89.21 | 88.52 | 85.84 | 78.51 | 24.11 |

Table 4: Ablation results on the development set.

| Model | RG # | RG P% | CS P% | CS R% | CO DLD% | BLEU |
|---|---|---|---|---|---|---|
| ED+CC | 21.94 | 75.08 | 27.96 | 32.71 | 15.03 | 13.31 |
| CS+CC | 24.93 | 80.55 | 28.63 | 35.23 | 15.12 | 13.52 |
| CP+CC | 33.73 | 84.85 | 29.57 | 44.72 | 15.84 | 14.45 |
| NCP+CC | 33.88 | 87.51 | 33.52 | 51.21 | 18.57 | 16.19 |
| NCP | 34.46 | — | 38.00 | 53.72 | 20.27 | — |

Table 5: Automatic evaluation on the ROTOWIRE test set.

| Model | RG # | RG P% | CS P% | CS R% | CO DLD% | BLEU |
|---|---|---|---|---|---|---|
| TEMPL | 54.23 | 99.94 | 26.99 | 58.16 | 14.92 | 8.46 |
| WS-2017 | 23.72 | 74.80 | 29.49 | 36.18 | 15.42 | 14.19 |
| NCP+JC | 34.09 | 87.19 | 32.02 | 47.29 | 17.15 | 14.89 |
| NCP+CC | 34.28 | 87.47 | 34.18 | 51.22 | 18.58 | 16.50 |

As can be seen, NCP improves upon vanilla encoder-decoder models (ED+JC, ED+CC), irrespective of the copy mechanism being employed. In fact, NCP achieves comparable scores with either the joint or the conditional copy mechanism, which indicates that it is the content planner which brings performance improvements. Overall, NCP+CC achieves the best content selection precision, content ordering, and BLEU scores among the non-oracle systems. Compared to the best reported system in Wiseman et al. (2017), we achieve an absolute improvement of approximately 12% in terms of relation generation precision; content selection precision also improves by 5% and recall by 15%, content ordering increases by 3%, and BLEU by 1.5 points. The results of the oracle system (NCP+OR) show that content selection and ordering do indeed correlate with the quality of the content plan and that any improvements in our planning component would result in better output. As far as the template-based system is concerned, we observe that it obtains low BLEU and CS precision but scores high on CS recall and RG metrics. This is not surprising, as the template system is provided with domain knowledge which our model does not have, and thus represents an upper bound on content selection and relation generation. We also measured the degree to which the game summaries generated by our model contain redundant information, as the proportion of non-duplicate records extracted from the summary by the IE system: 84.5% of the records in NCP+CC are non-duplicates, compared to 72.9% for Wiseman et al. (2017), showing that our model is less repetitive.
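The absolute gains quoted above can be checked directly against the development-set rows of Table 3 (NCP+CC versus WS-2017). The differences come to 12.41 (RG P%), 5.41 (CS P%), 15.35 (CS R%), 3.24 (CO), and 1.62 (BLEU) points. The redundancy measure is sketched as the share of unique records among all extracted records; this reading of "proportion of non-duplicate records" is an assumption.

```python
# Development-set scores copied from Table 3.
ncp_cc = {"RG P%": 87.51, "CS P%": 33.52, "CS R%": 51.21, "CO DLD%": 18.57, "BLEU": 16.19}
ws_2017 = {"RG P%": 75.10, "CS P%": 28.11, "CS R%": 35.86, "CO DLD%": 15.33, "BLEU": 14.57}

deltas = {metric: ncp_cc[metric] - ws_2017[metric] for metric in ncp_cc}
for metric, delta in deltas.items():
    print(f"{metric}: {delta:+.2f}")

def non_duplicate_ratio(records):
    """Assumed redundancy measure: unique extracted records / all extracted records."""
    return len(set(records)) / len(records) if records else 1.0

print(non_duplicate_ratio([("Thomas", "23", "PTS"), ("Thomas", "23", "PTS"), ("Olynyk", "16", "PTS")]))
```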

We further conducted an ablation study with the conditional copy variant of our model (NCP+CC) to establish whether improvements are due to better content selection (CS) and/or content planning (CP). We see in Table 4 that content selection and planning individually contribute to performance improvements over the baseline (ED+CC), and accuracy further increases when both components are taken into account. In addition, we evaluated these components on their own (independently of text generation) by comparing the output of the planner (see the $p(z|r)$ block in Figure 2) against gold content plans obtained using the IE system (see row NCP in Table 4). Compared to the full system (NCP+CC), content selection precision and recall are higher (by 4.5% and 2%, respectively), as is content ordering (by 1.8%). In another study, we used the CS and CO metrics to measure how well the generated text follows the content plan produced by the planner (instead of arbitrarily adding or removing information). We found that NCP+CC generates game summaries which follow the content plan closely: CS precision is higher than 85%, CS recall is higher than 93%, and CO is higher than 84%. This reinforces our claim that higher accuracies in the content selection and planning phase will result in further improvements in text generation.

The test set results in Table 5 follow a pattern similar to the development set. NCP achieves higher accuracy in all metrics including relation generation, content selection, content ordering, and BLEU compared to Wiseman et al. (2017). We provide examples of system output in Figure 4 and the supplementary material.

Human-Based Evaluation We conducted two human evaluation experiments using the Amazon Mechanical Turk (AMT) crowdsourcing platform. The first study assessed relation generation by examining whether improvements in relation generation attested by automatic evaluation metrics are indeed corroborated by human judgments. We compared our best performing model (NCP+CC) with gold reference summaries, a template system, and the best model of Wiseman et al. (2017). AMT workers were presented with a specific NBA game's box score and line score, and four (randomly selected) sentences from the summary. They were asked to identify supporting and contradicting facts mentioned in each sentence. We randomly selected 30 games from the test set. Each sentence was rated by three workers.


The left two columns in Table 6 contain the average number of supporting and contradicting facts per sentence as determined by the crowdworkers, for each model. The template-based system has the highest number of supporting facts, even compared to the human gold standard. TEMPL does not perform any content selection; it includes a large number of facts from the database, and since it does not perform any generation either, it exhibits few contradictions. Compared to WS-2017 and the Gold summaries, NCP+CC displays a larger number of supporting facts. All models are significantly different in the number of supporting facts (#Supp) from TEMPL (using a one-way ANOVA with post-hoc Tukey HSD tests). NCP+CC is significantly different from WS-2017 and Gold. With respect to contradicting facts (#Cont), Gold and TEMPL are not significantly different from each other but are significantly different from the neural systems (WS-2017, NCP+CC).

In the second experiment, we assessed the generation quality of our model. We elicited judgments for the same 30 games used in the first study. For each game, participants were asked to compare a human-written summary, NCP with conditional copy (NCP+CC), Wiseman et al.'s (2017) best model, and the template system. Our study used Best-Worst Scaling (BWS; Louviere, Flynn, and Marley 2015), a technique shown to be less labor-intensive and to provide more reliable results than rating scales (Kiritchenko and Mohammad 2017). We arranged every 4-tuple of competing summaries into 6 pairs. Every pair was shown to three crowdworkers, who were asked to choose which summary was best and which was worst according to three criteria: Grammaticality (is the summary fluent and grammatical?), Coherence (is the summary easy to read? does it follow a natural ordering of facts?), and Conciseness (does the summary avoid redundant information and repetitions?). The score of a system for each criterion is computed as the difference between the percentage of times the system was selected as the best and the percentage of times it was selected as the worst (Orme 2009). The scores range from -100 (absolutely worst) to +100 (absolutely best).
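The Best-Worst Scaling score is simple arithmetic over the collected judgments, as the sketch below illustrates. It assumes that percentages are taken relative to the number of times each system was shown, and it uses a hypothetical set of three pairwise judgments rather than the study's data. A system chosen as best every time it appears scores +100, and one always chosen as worst scores -100.

```python
from collections import Counter

def bws_scores(judgments):
    """Best-Worst Scaling: %best - %worst per system, in [-100, 100].

    judgments: (systems_shown, best, worst) tuples.
    Assumption: percentages are relative to how often each system was shown.
    """
    shown, best, worst = Counter(), Counter(), Counter()
    for systems_shown, b, w in judgments:
        shown.update(systems_shown)
        best[b] += 1
        worst[w] += 1
    return {s: 100.0 * (best[s] - worst[s]) / shown[s] for s in shown}

# Hypothetical judgments over pairs of summaries.
judgments = [
    (("Gold", "NCP+CC"), "Gold", "NCP+CC"),
    (("Gold", "TEMPL"), "Gold", "TEMPL"),
    (("NCP+CC", "TEMPL"), "NCP+CC", "TEMPL"),
]
print(bws_scores(judgments))  # {'Gold': 100.0, 'NCP+CC': 0.0, 'TEMPL': -100.0}
```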

The results of the second study are summarized in Table 6. Gold summaries were perceived as significantly better than the automatic systems across all criteria (again using a one-way ANOVA with post-hoc Tukey HSD tests). NCP+CC was perceived as significantly more grammatical than WS-2017, but not than TEMPL, which does not suffer from fluency errors since it does not perform any generation. NCP+CC was perceived as significantly more coherent than TEMPL and WS-2017. The template fares poorly on coherence: its output is stilted and exhibits no variability (see top block in Table 4). With regard to conciseness, the neural systems are significantly worse than TEMPL, while NCP+CC is significantly better than WS-2017. By design, the template cannot repeat information, since there is no redundancy in the sentences chosen to verbalize the summary.

Taken together, our results show that content planning improves data-to-text generation across metrics and systems. We find that NCP+CC performs best overall; however, there is a significant gap between automatically generated summaries and human-authored ones.

6 Conclusions

We presented a data-to-text generation model which is enhanced with content selection and planning modules. Experimental results (based on automatic metrics and judgment elicitation studies) demonstrate that generation quality improves both in terms of the number of relevant facts contained in the output text and the order in which these are presented. Positive side-effects of content planning are additional improvements in the grammaticality and conciseness of the generated text. In the future, we would like to learn more detail-oriented plans involving inference over multiple facts and entities. We would also like to verify our approach across domains and languages.

References

2018

- (Gatt & Krahmer, 2018) ⇒ Gatt, A., and Krahmer, E. 2018. Survey of the state of the art in natural language generation: Core tasks, applications and evaluation. JAIR 61:65–170.

2017a

- (Chisholm et al., 2017) ⇒ Chisholm, A.; Radford, W.; and Hachey, B. 2017. Learning to generate one-sentence biographies from wikidata. In EACL, 633–642.

2017b

- (Kiritchenko & Mohammad, 2017) ⇒ Kiritchenko, S., and Mohammad, S. 2017. Best-worst scaling more reliable than rating scales: A case study on sentiment intensity annotation. In ACL, volume 2, 465–470.

2017c

- (Klein et al., 2017) ⇒ Klein, G.; Kim, Y.; Deng, Y.; Senellart, J.; and Rush, A. M. 2017. OpenNMT: Open-source toolkit for neural machine translation. arXiv preprint arXiv:1701.02810.

2017d

- (Liu et al., 2017) ⇒ Liu, T.; Wang, K.; Sha, L.; Chang, B.; and Sui, Z. 2017. Table-to-text generation by structure-aware seq2seq learning. arXiv preprint arXiv:1711.09724.

2016a

- (Gu et al., 2016) ⇒ Gu, J.; Lu, Z.; Li, H.; and Li, V. O. 2016. Incorporating copying mechanism in sequence-to-sequence learning. In ACL, 1631–1640.

2016b

- (Gulcehre et al., 2016) ⇒ Gulcehre, C.; Ahn, S.; Nallapati, R.; Zhou, B.; and Bengio, Y. 2016. Pointing the unknown words. In ACL, 140–149.

2016c

- (Lebret et al., 2016) ⇒ Lebret, R.; Grangier, D.; and Auli, M. 2016. Neural text generation from structured data with application to the biography domain. In EMNLP, 1203–1213.

2015a

- (Bahdanau et al., 2015) ⇒ Bahdanau, D.; Cho, K.; and Bengio, Y. 2015. Neural machine translation by jointly learning to align and translate. In ICLR.

2015b

- (Louviere et al., 2015) ⇒ Louviere, J. J.; Flynn, T. N.; and Marley, A. A. J. 2015. Best-worst scaling: Theory, methods and applications. Cambridge University Press.

2015c

- (Luong et al., 2015) ⇒ Luong, T.; Pham, H.; and Manning, C. D. 2015. Effective approaches to attention-based neural machine translation. In EMNLP, 1412–1421.

2013

- (Konstas & Lapata, 2013) ⇒ Konstas, I., and Lapata, M. 2013. A global model for concept-to-text generation. J. Artif. Int. Res. 48(1):305–346.

2012

- (Konstas & Lapata, 2012) ⇒ Konstas, I., and Lapata, M. 2012. Unsupervised concept-to-text generation with hypergraphs. In NAACL, 752–761.

2011

- (Duchi et al., 2011) ⇒ Duchi, J.; Hazan, E.; and Singer, Y. 2011. Adaptive subgradient methods for online learning and stochastic optimization. Journal of Machine Learning Research 12(Jul):2121–2159.

2010a

- (Angeli et al., 2010) ⇒ Angeli, G.; Liang, P.; and Klein, D. 2010. A simple domain-independent probabilistic approach to generation. In EMNLP, 502–512.

2010b

- (Kim & Mooney, 2010) ⇒ Kim, J., and Mooney, R. 2010. Generative alignment and semantic parsing for learning from ambiguous supervision. In Coling 2010: Posters, 543–551.

2009

- (Liang et al., 2009) ⇒ Liang, P.; Jordan, M.; and Klein, D. 2009. Learning semantic correspondences with less supervision. In ACL, 91–99.

2008

- (Belz, 2008) ⇒ Belz, A. 2008. Automatic generation of weather forecast texts using comprehensive probabilistic generation-space models. Nat. Lang. Eng. 14(4):431–455.

2005

- (Barzilay & Lapata, 2005) ⇒ Barzilay, R., and Lapata, M. 2005. Collective content selection for concept-to-text generation. In EMNLP.

2004

- (Karamanis, 2004) ⇒ Karamanis, N. 2004. Entity coherence for descriptive text structuring. Ph.D. Dissertation, School of Informatics, University of Edinburgh.

2003

- (Duboue & McKeown, 2003) ⇒ Duboue, P. A., and McKeown, K. R. 2003. Statistical acquisition of content selection rules for natural language generation.

2002

- (Duboue & McKeown, 2002) ⇒ Duboue, P., and McKeown, K. 2002. Content planner construction via evolutionary algorithms and a corpus-based fitness function. In Proceedings of the International Natural Language Generation Conference, 89–96.

2001

- (Duboue & McKeown, 2001) ⇒ Duboue, P. A., and McKeown, K. R. 2001. Empirically estimating order constraints for content planning in generation. In ACL, 172–179.

1997

- (McKeown et al., 1997) ⇒ McKeown, K. R.; Pan, S.; Shaw, J.; Jordan, D. A.; and Allen, B. A. 1997. Language generation for multimedia healthcare briefings. In ANLP, 277–282.

1993

- (Hovy, 1993) ⇒ Hovy, E. H. 1993. Automated discourse generation using discourse structure relations. Artificial Intelligence 63(1-2):341–385.

1992

- (McKeown, 1992) ⇒ McKeown, K. 1992. Text generation. Cambridge University Press.

- (Mei et al., 2016) ⇒ Mei, H.; Bansal, M.; and Walter, M. R. 2016. What to talk about and how? Selective generation using LSTMs with coarse-to-fine alignment. In NAACL, 720–730.

1988

- (Dale, 1988) ⇒ Dale, R. 1988. Generating referring expressions in a domain of objects and processes. Ph.D. Dissertation, University of Edinburgh.

1983

- (Kukich, 1983) ⇒ Kukich, K. 1983. Design of a knowledge-based report generator. In ACL.

1977

- (Dempster et al., 1977) ⇒ Dempster, A. P.; Laird, N. M.; and Rubin, D. B. 1977. Maximum likelihood from incomplete data via the EM algorithm. Journal of the Royal Statistical Society. Series B (Methodological) 1–38.

| | Author | title | year |
|---|---|---|---|
| 2019 DatatoTextGenerationwithContent | Mirella Lapata, Li Dong, Ratish Puduppully | Data-to-Text Generation with Content Selection and Planning | 2019 |