Hierarchical Recurrent Encoder-Decoder (HRED) Neural Network Training Algorithm

A Hierarchical Recurrent Encoder-Decoder (HRED) Neural Network Training Algorithm is a recurrent neural network training algorithm that trains a hierarchical recurrent encoder-decoder neural network.

- Example(s):

- Counter-Example(s):

- See: Long Short-Term Memory, Recurrent Neural Network, Convolutional Neural Network, Gating Mechanism, Encoder-Decoder Neural Network.

References

2015

- (Sordoni et al., 2015) ⇒ Alessandro Sordoni, Yoshua Bengio, Hossein Vahabi, Christina Lioma, Jakob Grue Simonsen, and Jian-Yun Nie. (2015). “A Hierarchical Recurrent Encoder-Decoder for Generative Context-Aware Query Suggestion.” In: Proceedings of the 24th ACM International Conference on Information and Knowledge Management (CIKM 2015). DOI:10.1145/2806416.2806493. arXiv:1507.02221.

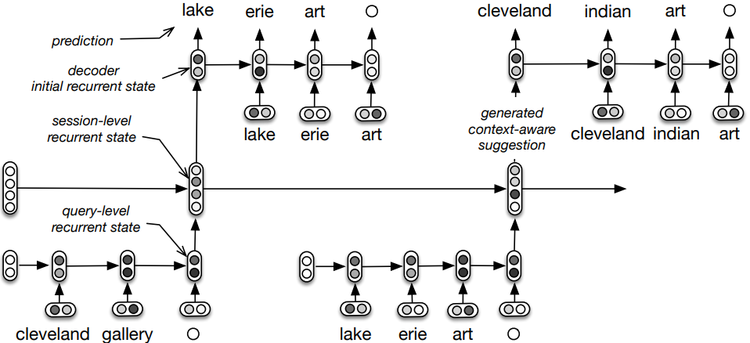

- QUOTE: Our hierarchical recurrent encoder-decoder (HRED) is pictured in Figure 3. Given a query in the session, the model encodes the information seen up to that position and tries to predict the following query. The process is iterated throughout all the queries in the session. In the forward pass, the model computes the query-level encodings, the session-level recurrent states and the log-likelihood of each query in the session given the previous ones. In the backward pass, the gradients are computed and the parameters are updated.
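
The forward pass described in the quote can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: it swaps in plain tanh recurrent cells where the paper uses gated units, and all sizes, parameter names (`Wq`, `Ws`, `D0`, ...), and token ids are hypothetical. The backward pass is omitted; in practice an automatic-differentiation framework would compute the gradients.

```python
import numpy as np

rng = np.random.default_rng(0)
# Hypothetical sizes: vocabulary, embedding, query-level state, session-level state.
V, E, Hq, Hs = 12, 8, 16, 16

def init(*shape):
    return rng.normal(scale=0.1, size=shape)

params = {
    "emb": init(V, E),
    "Wq": init(E, Hq), "Uq": init(Hq, Hq),   # query-level encoder
    "Ws": init(Hq, Hs), "Us": init(Hs, Hs),  # session-level encoder
    "D0": init(Hs, Hq),                      # session state -> initial decoder state
    "Wd": init(E, Hq), "Ud": init(Hq, Hq),   # decoder recurrence
    "Wo": init(Hq, V),                       # decoder output projection
}

def rnn_step(x, h, W, U):
    # Simplification: a plain tanh cell stands in for a gated recurrent unit.
    return np.tanh(x @ W + h @ U)

def encode_query(query, p):
    # Query-level encoding: final recurrent state after reading every token.
    h = np.zeros(Hq)
    for w in query:
        h = rnn_step(p["emb"][w], h, p["Wq"], p["Uq"])
    return h

def log_softmax(z):
    z = z - z.max()
    return z - np.log(np.exp(z).sum())

def query_log_likelihood(query, s, p, bos=0):
    # Decode the query token by token, conditioned on the session state s.
    d = np.tanh(s @ p["D0"])
    prev, ll = bos, 0.0
    for w in query:
        d = rnn_step(p["emb"][prev], d, p["Wd"], p["Ud"])
        ll += log_softmax(d @ p["Wo"])[w]
        prev = w
    return ll

def session_forward(session, p):
    # For each query: score it given the queries seen so far,
    # then fold its encoding into the session-level recurrent state.
    s = np.zeros(Hs)
    lls = []
    for query in session:
        lls.append(query_log_likelihood(query, s, p))
        s = rnn_step(encode_query(query, p), s, p["Ws"], p["Us"])
    return lls

# A toy session of three queries; token id 1 is a hypothetical end-of-query marker.
session = [[3, 4, 1], [3, 5, 6, 1], [7, 1]]
log_likelihoods = session_forward(session, params)
session_objective = sum(log_likelihoods)  # quantity to maximize during training
```

Here the first query is scored against an all-zero session state, and each later query is scored against a state that summarizes every preceding query, which is what makes the predictions context-aware.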
