Neural Turing Machine (NTM)

A Neural Turing Machine (NTM) is a Memory-Augmented Neural Network that combines a neural network controller (feed-forward or recurrent) with an external memory matrix.

- Context:

- It includes a neural network controller coupled to auxiliary memory resources, which it interacts with through attentional mechanisms.

- Example(s):

- Counter-Example(s):

- an Internal Memory-based Neural Network,

- a Neural Machine Translation (NMT) Network,

- a Hierarchical Attention Network,

- a Gated Convolutional Neural Network with Segment-level Attention Mechanism (SAM-GCNN),

- a Convolutional Neural Network with Segment-level Attention Mechanism (SAM-CNN),

- a Bidirectional Recurrent Neural Network with Attention Mechanism,

- a Sparse Access Memory Neural Network (SAM-ANN).

- See: Turing Machine, Programmable Computer, Auxiliary Memory, Gradient Descent, Long Short-Term Memory, Differentiable Neural Computer, Attention Mechanism.

References

2019

- (Wikipedia, 2019) ⇒ https://en.wikipedia.org/wiki/Neural_Turing_machine Retrieved:2019-1-13.

- A Neural Turing machine (NTM) is a recurrent neural network model published by Alex Graves et al. in 2014.[1] NTMs combine the fuzzy pattern matching capabilities of neural networks with the algorithmic power of programmable computers. An NTM has a neural network controller coupled to external memory resources, which it interacts with through attentional mechanisms. The memory interactions are differentiable end-to-end, making it possible to optimize them using gradient descent.[2] An NTM with a long short-term memory (LSTM) network controller can infer simple algorithms such as copying, sorting, and associative recall from input and output examples. They can infer algorithms from input and output examples alone.

The authors of the original NTM paper did not publish the source code for their implementation. The first stable open-source implementation of a Neural Turing Machine was published in 2018 at the 27th International Conference on Artificial Neural Networks, receiving a best-paper award.[3][4][5] Other open source implementations of NTMs exist but are not stable for production use.

- The developers either report that the gradients of their implementation sometimes become NaN during training for unknown reasons, causing training to fail; report slow convergence; or do not report the speed of learning of their implementation at all.

- Differentiable neural computers are an outgrowth of neural Turing machines, with attention mechanisms that control where the memory is active, and improved performance.

- ↑ Graves et al. (2014)

- ↑ "Deep Minds: An Interview with Google's Alex Graves & Koray Kavukcuoglu". Retrieved May 17, 2016.

- ↑ Collier & Beel (2018)

- ↑ "MarkPKCollier/NeuralTuringMachine". GitHub. Retrieved 2018-10-20.

- ↑ Beel, Joeran (2018-10-20). "Best-Paper Award for our Publication "Implementing Neural Turing Machines" at the 27th International Conference on Artificial Neural Networks | Prof. Joeran Beel (TCD Dublin)". Trinity College Dublin, School of Computer Science and Statistics Blog. Retrieved 2018-10-20.

2018

- (Collier & Beel, 2018) ⇒ Mark Collier, and Joeran Beel. (2018). "Implementing Neural Turing Machines" (PDF). In: Proceedings of 27th International Conference on Artificial Neural Networks (ICANN). ISBN:978-3-030-01424-7. DOI:10.1007/978-3-030-01424-7_10. arXiv:1807.08518

- QUOTE: Neural Turing Machines (NTMs) [4] are one instance of several new neural network architectures [4, 5, 11] classified as Memory Augmented Neural Networks (MANNs). MANNs' defining attribute is the existence of an external memory unit. This contrasts with gated recurrent neural networks such as Long Short-Term Memory Cells (LSTMs) [7] whose memory is an internal vector maintained over time (...)

Neural Turing Machines consist of a controller network, which can be a feed-forward neural network or a recurrent neural network, and an external memory unit, which is an [math]\displaystyle{ N \times W }[/math] memory matrix, where [math]\displaystyle{ N }[/math] represents the number of memory locations and [math]\displaystyle{ W }[/math] the dimension of each memory cell. Whether the controller is a recurrent neural network or not, the entire architecture is recurrent as the contents of the memory matrix are maintained over time.

The controller has read and write heads which access the memory matrix. The effect of a read or write operation on a particular memory cell is weighted by a soft attentional mechanism.
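
The soft read described above can be sketched in a few lines of plain Python. This is a minimal illustration, not code from Collier & Beel's implementation: the function name `soft_read` and the toy values are assumptions made here, and the weighted-sum form follows the read operation given in Graves et al. (2014).

```python
def soft_read(memory, weights):
    """Return the attention-weighted sum of memory rows.

    memory  : list of N rows, each a list of W floats (the N x W matrix)
    weights : list of N non-negative floats that sum to 1 (soft attention)
    """
    if len(memory) != len(weights):
        raise ValueError("need exactly one weight per memory location")
    width = len(memory[0])
    read_vector = [0.0] * width
    for row, w in zip(memory, weights):
        for j in range(width):
            # Every location contributes in proportion to its weight.
            read_vector[j] += w * row[j]
    return read_vector


# Toy memory with N = 3 locations and W = 2 values per cell (assumed values).
memory = [[1.0, 0.0],
          [0.0, 1.0],
          [2.0, 2.0]]

# A sharply focused weighting reads a single location...
focused = soft_read(memory, [0.0, 1.0, 0.0])
# ...while a spread-out weighting blends several locations.
blended = soft_read(memory, [0.5, 0.5, 0.0])
```

Because the read vector is a smooth function of the weights, gradients can flow through the choice of where to read, which a hard index lookup would not allow.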

2016

- (Olah & Carter, 2016) ⇒ Chris Olah, and Shan Carter. (2016). “Attention and Augmented Recurrent Neural Networks.” In: Distill. doi:10.23915/distill.00001

- QUOTE: Neural Turing Machines [2] combine a RNN with an external memory bank. Since vectors are the natural language of neural networks, the memory is an array of vectors:

2016

- (Gulcehre et al., 2016) ⇒ Caglar Gulcehre, Sarath Chandar, Kyunghyun Cho, and Yoshua Bengio. (2016). “Dynamic Neural Turing Machine with Soft and Hard Addressing Schemes.”

- QUOTE: The proposed dynamic neural Turing machine (D-NTM) extends the neural Turing machine (NTM, (Graves et al., 2014)) which has a modular design. The D-NTM consists of two main modules: a controller, and a memory. The controller, which is often implemented as a recurrent neural network, issues a command to the memory so as to read, write to and erase a subset of memory cells.
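
As a rough illustration of the write and erase commands mentioned in the quote, the sketch below uses the erase-then-add update from the original NTM paper (Graves et al., 2014), where each row becomes M(i) * (1 - w(i) * e) + w(i) * a. It does not reproduce the D-NTM's own soft and hard addressing schemes, and the name `soft_write` and the toy values are assumptions made for this example.

```python
def soft_write(memory, weights, erase, add):
    """Return a new memory after an erase-then-add write.

    memory  : list of N rows, each a list of W floats
    weights : list of N write weights in [0, 1]
    erase   : list of W erase strengths in [0, 1]
    add     : list of W values to add

    Row i becomes row_i * (1 - w_i * erase) + w_i * add, element-wise,
    so rows whose weight is 0 are left unchanged.
    """
    new_memory = []
    for row, w in zip(memory, weights):
        new_row = [m * (1.0 - w * e) + w * a
                   for m, e, a in zip(row, erase, add)]
        new_memory.append(new_row)
    return new_memory


# Toy example (assumed values): write only to location 0,
# fully erasing its first component and adding 5.0 there.
memory = [[1.0, 1.0], [2.0, 2.0], [3.0, 3.0]]
weights = [1.0, 0.0, 0.0]
erase = [1.0, 0.0]
add = [5.0, 0.0]

updated = soft_write(memory, weights, erase, add)
```

With fractional weights the same update touches several rows at once, each by a proportionally smaller amount, which is what "a subset of memory cells" looks like under soft addressing.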

2014a

- (Graves et al., 2014) ⇒ Alex Graves, Greg Wayne, and Ivo Danihelka. (2014). “Neural Turing Machines.” In: arXiv preprint arXiv:1410.5401.

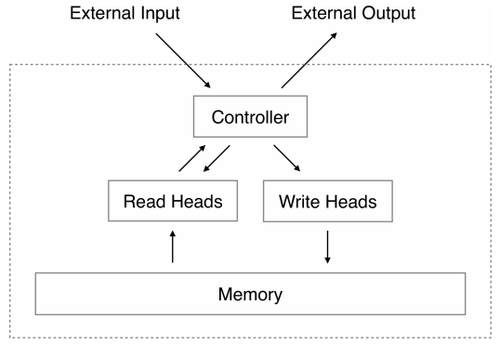

- QUOTE: A Neural Turing Machine (NTM) architecture contains two basic components: a neural network controller and a memory bank. Figure 1 presents a high-level diagram of the NTM architecture. Like most neural networks, the controller interacts with the external world via input and output vectors. Unlike a standard network, it also interacts with a memory matrix using selective read and write operations. By analogy to the Turing machine we refer to the network outputs that parametrise these operations as “heads”.

Figure 1: Neural Turing Machine Architecture. During each update cycle, the controller network receives inputs from an external environment and emits outputs in response. It also reads to and writes from a memory matrix via a set of parallel read and write heads. The dashed line indicates the division between the NTM circuit and the outside world.
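
One way a head can make its operations "selective" is the content-based addressing described in the same paper: the head emits a key vector and a key strength, and each memory row is weighted by a softmax over its scaled cosine similarity to the key. The sketch below is a minimal plain-Python version under that reading. The function names, the epsilon guard, and the toy values are assumptions made here, and the paper's location-based steps (interpolation, shifting, sharpening) are omitted.

```python
import math


def cosine_similarity(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    # The small epsilon (an assumption) avoids division by zero
    # when a memory row or the key is all zeros.
    return dot / (norm_u * norm_v + 1e-8)


def content_weights(memory, key, beta):
    """Softmax over beta-scaled cosine similarities to the key.

    memory : list of N rows, each a list of W floats
    key    : list of W floats emitted by the head
    beta   : positive key strength; larger values sharpen the focus
    """
    scores = [beta * cosine_similarity(key, row) for row in memory]
    max_score = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - max_score) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Toy memory (assumed values); the key matches row 0 exactly.
memory = [[1.0, 0.0],
          [0.0, 1.0],
          [1.0, 1.0]]
weights = content_weights(memory, key=[1.0, 0.0], beta=10.0)
```

The resulting weights sum to 1 and put most of their mass on the row most similar to the key, with the partially similar row 2 ranked above the orthogonal row 1.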

2014b

- (Graves et al., 2014b) ⇒ http://www.robots.ox.ac.uk/~tvg/publications/talks/NeuralTuringMachines.pdf

- QUOTE: (...)

- First application of Machine Learning to logical flow and external memory

- Extend the capabilities of neural networks by coupling them to external memory

- Analogous to TM coupling a finite state machine to infinite tape

- RNNs have been shown to be Turing-complete, Siegelmann et al. ’95.

- Unlike TM, NTM is completely differentiable
