Stacked Recurrent Neural Network

A Stacked Recurrent Neural Network is a Stacked Neural Network composed of multiple recurrent neural network layers, in which the hidden-state sequence of each layer serves as the input to the layer above it.

- Example(s):

- Counter-Example(s):

- See: Encoder-Decoder Neural Network, Deep Neural Network, Neural Network Training System, Natural Language Processing System, Convolutional Neural Network, Stacked Ensemble-based Learning Task, Attention Mechanism.

References

2014

- (Lambert, 2014) ⇒ John Lambert (2014). "Stacked RNNs for Encoder-Decoder Networks: Accurate Machine Understanding of Images".

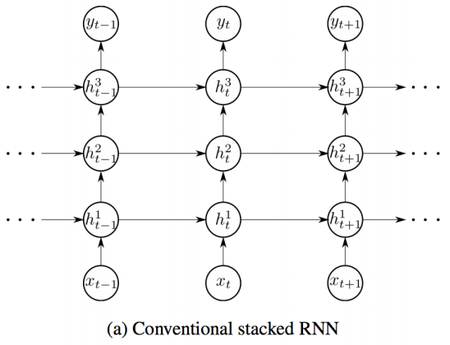

- QUOTE: Pascanu et al. continue the work of Hihi and Bengio (1996) in Pascanu et al., 2013. They define the Stacked-RNN as follows:

$$h_{t}^{(l)}=f_{h}^{(l)}\left(h_{t}^{(l-1)}, h_{t-1}^{(l)}\right)=\phi_{h}\left(W_{l}^{T} h_{t-1}^{(l)}+U_{l}^{T} h_{t}^{(l-1)}\right)$$

- where, $h_{t}^{(l)}$ is the hidden state of the $l$-th level at time $t$. When $l = 1$, the state is computed using $x_t$ instead of $h_{t}^{(l-1)}$. The hidden states of all the levels are recursively computed from the bottom level $l= 1$.
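
The recurrence quoted above can be illustrated with a minimal pure-Python sketch. It is not taken from either cited paper. The function names (`matvec_t`, `stacked_rnn_forward`), the choice of `tanh` for $\phi_h$, the zero initial hidden states, and the omission of bias terms are all assumptions made for illustration.

```python
import math


def matvec_t(W, v):
    """Return W^T v, where W is a list of rows with len(W) == len(v)."""
    n_out = len(W[0])
    return [sum(W[i][j] * v[i] for i in range(len(v))) for j in range(n_out)]


def stacked_rnn_forward(xs, Ws, Us, phi=math.tanh):
    """Compute h_t^(l) = phi(W_l^T h_{t-1}^(l) + U_l^T h_t^(l-1)) for all t, l.

    xs    : list of input vectors x_t
    Ws[l] : recurrent matrix of layer l, shape (hidden_l, hidden_l)
    Us[l] : input matrix of layer l, shape (input size of layer l, hidden_l)
    Returns states, where states[t][l] is the hidden vector of layer l at step t.
    """
    # Assumption: every layer starts from a zero hidden state.
    prev = [[0.0] * len(W[0]) for W in Ws]
    states = []
    for x_t in xs:
        below = x_t  # the bottom layer (l = 1) reads x_t instead of h_t^(l-1)
        current = []
        for W, U, h_prev in zip(Ws, Us, prev):
            recurrent = matvec_t(W, h_prev)  # W_l^T h_{t-1}^(l)
            bottom_up = matvec_t(U, below)   # U_l^T h_t^(l-1)
            h = [phi(r + b) for r, b in zip(recurrent, bottom_up)]
            current.append(h)
            below = h  # this layer's state feeds the layer above
        states.append(current)
        prev = current
    return states


# Usage: two layers with hidden size 1, over a two-step scalar input sequence.
example_states = stacked_rnn_forward(
    xs=[[0.5], [0.0]],
    Ws=[[[1.0]], [[0.0]]],
    Us=[[[1.0]], [[1.0]]],
)
```

The inner loop runs from the bottom layer upward at each time step, matching the statement that the hidden states of all levels are computed recursively from level $l = 1$.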


2013

- (Pascanu et al., 2013) ⇒ Razvan Pascanu, Caglar Gulcehre, Kyunghyun Cho, and Yoshua Bengio. "How to Construct Deep Recurrent Neural Networks". Preprint: arXiv:1312.6026.

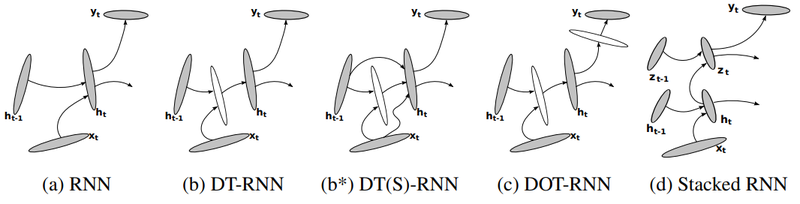

- QUOTE: We compare the conventional recurrent neural network (RNN), deep transition RNN with shortcut connections in the transition MLP (DT(S)-RNN), deep output/transition RNN with shortcut connections in the hidden to hidden transition MLP (DOT(S)-RNN) and stacked RNN (sRNN). See Fig. 2 (a)–(d) for the illustrations of these models.


1996

- (Hihi & Bengio, 1996) ⇒ Salah El Hihi, and Yoshua Bengio (1996). "Hierarchical Recurrent Neural Networks for Long-Term Dependencies". In: Advances in Neural Information Processing Systems 8 (NIPS 95).