2016 DEFEXTASemiSupervisedDefinition

- (Espinosa-Anke et al., 2016) ⇒ Luis Espinosa-Anke, Roberto Carlini, Horacio Saggion, and Francesco Ronzano. (2016). “DEFEXT: A Semi Supervised Definition Extraction Tool.” In: GLOBALEX 2016 Lexicographic Resources for Human Language Technology Workshop Programme.

Subject Headings: DefExt, Definition Extraction System.

Notes

Cited By

- Google Scholar: ~ 5 Citations Retrieved:2020-10-11.

2018

- (Anke & Schockaert, 2018) ⇒ Luis Espinosa Anke, and Steven Schockaert. (2018). “Syntactically Aware Neural Architectures for Definition Extraction.” In: Proceedings of the 2018 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (NAACL-HLT 2018) Volume 2 (Short Papers).

- QUOTE: ... We run our best performing model over a subset of the ACL-ARC anthology (Bird et al., 2008), specifically the subcorpus described in (Espinosa-Anke et al., 2016a), which removed noisy sentences as produced by the pdf to text conversion. …

2017

- (Esteche et al., 2017) ⇒ Jennifer Esteche, Romina Romero, Luis Chiruzzo, and Aiala Rosá. (2017). “Automatic Definition Extraction and Crossword Generation From Spanish News Text.” In: CLEI Electronic Journal, 20(2).

- QUOTE: ... In (Espinosa-Anke et al., 2016), the authors propose the use of machine learning techniques, implementing a sequential labeling algorithm based on Conditional Random Fields and a bootstrapping approach that enables the model to gradually learn linguistic particularities of the corpus. This semi supervised definition extraction tool achieved a precision of 78%, proving the advantages of using machine learning to improve definition extraction. …

Quotes

Keywords

Abstract

We present DefExt, an easy-to-use semi-supervised Definition Extraction Tool. DefExt is designed to extract from a target corpus those textual fragments where a term is explicitly mentioned together with its core features, i.e. its definition. It is built on a Conditional Random Fields-based sequential labeling algorithm and a bootstrapping approach. Bootstrapping enables the model to gradually become more aware of the idiosyncrasies of the target corpus. In this paper we describe the main components of the toolkit as well as experimental results stemming from both automatic and manual evaluation. We release DefExt as open source along with the necessary files to run it on any Unix machine. We also provide access to training and test data for immediate use.

1. Introduction

Definitions are the source of knowledge to consult when the meaning of a term is sought, but manually constructing and updating glossaries is a costly task that requires the cooperative effort of domain experts (Navigli and Velardi, 2010). Exploiting lexicographic information in the form of definitions has proven useful not only for Glossary Building (Muresan and Klavans, 2002; Park et al., 2002) and Question Answering (Cui et al., 2005; Saggion and Gaizauskas, 2004), but also, more recently, in tasks like Hypernym Extraction (Espinosa-Anke et al., 2015b), Taxonomy Learning (Velardi et al., 2013; Espinosa-Anke et al., 2016) and Knowledge Base Generation (Delli Bovi et al., 2015). Definition Extraction (DE), i.e. the task of automatically extracting definitions from naturally occurring text, can be approached by exploiting lexico-syntactic patterns (Rebeyrolle and Tanguy, 2000; Sarmento et al., 2006; Storrer and Wellinghoff, 2006), in a supervised machine learning setting (Navigli et al., 2010; Jin et al., 2013; Espinosa-Anke and Saggion, 2014; Espinosa-Anke et al., 2015a), or by leveraging bootstrapping algorithms (Reiplinger et al., 2012; De Benedictis et al., 2013).

In this paper, we extend our most recent contribution to DE by releasing DEFEXT [1], a toolkit based on the experiments described in (Espinosa-Anke et al., 2015c), which combines sentence-level machine learning DE with a bootstrapping approach. First, we summarize the foundational components of DEFEXT (Section 2). Next, we summarize the contribution from which it stems (Espinosa-Anke et al., 2015c) as well as its main conclusion, namely that our approach effectively generates a model that gradually adapts to a target domain (Section 3.1). Furthermore, we introduce an additional evaluation in which, after bootstrapping over a subset of the ACL Anthology, we present human experts in NLP with definitions and distractors and ask them to judge whether each sentence includes definitional knowledge (Section 3.2). Finally, we briefly describe the released toolkit along with the accompanying enriched corpora, which enable immediate use (Section 3.3).

2. Data Modelling

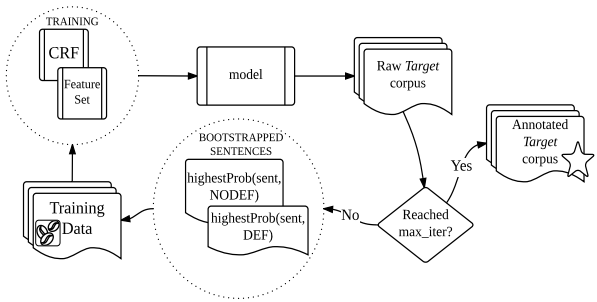

DEFEXT is a weakly supervised DE system based on Conditional Random Fields (CRF). Starting from a set of manually validated definitions and distractors, it trains a seed model and iteratively enriches it with high-confidence instances, i.e. sentences that are very likely definitions and sentences that are very likely not definitions (such as text fragments expressing a personal opinion). What differentiates DEFEXT from a purely supervised system is its iterative architecture (see Figure 1): it gradually identifies more appropriate definitions, with larger coverage on non-standard text than a system trained only on WordNet glosses or Wikipedia definitions (experimental results supporting this claim are briefly discussed in Section 3).

2.1. Corpora

We release DEFEXT along with two automatically annotated datasets in column format, where each line represents a word and each column a feature value, with the last column reserved for the token's label, which in our setting is DEF or NODEF. Sentence boundaries are encoded as a blank line (i.e. a double line break).
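A minimal sketch of reading data in this column format, assuming tab-separated columns (as Section 3.3 states) and that every token of a sentence carries that sentence's DEF/NODEF label. The function names `read_column_corpus` and `sentence_label` are hypothetical and not part of DEFEXT.

```python
from typing import List, Tuple

Token = Tuple[List[str], str]  # (feature values, label)


def read_column_corpus(text: str) -> List[List[Token]]:
    """Parse column-format data into sentences.

    Each non-blank line is one token: tab-separated feature values with the
    label (DEF or NODEF) in the last column. A blank line ends a sentence.
    """
    sentences: List[List[Token]] = []
    current: List[Token] = []
    for line in text.splitlines():
        if not line.strip():          # blank line = sentence boundary
            if current:
                sentences.append(current)
                current = []
            continue
        *features, label = line.split("\t")
        current.append((features, label))
    if current:                       # last sentence may lack a trailing blank line
        sentences.append(current)
    return sentences


def sentence_label(sentence: List[Token]) -> str:
    """All tokens share the sentence label, so read it from the first token."""
    return sentence[0][1]


sample = "The\tDT\tDEF\nAbwehr\tNNP\tDEF\n\nI\tPRP\tNODEF\nagree\tVBP\tNODEF\n"
corpus = read_column_corpus(sample)
print(len(corpus), [sentence_label(s) for s in corpus])  # 2 ['DEF', 'NODEF']
```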

These corpora are enriched versions of (1) the Word Class Lattices corpus (Navigli et al., 2010) (WCLd), and (2) a subset of the ACL Anthology Reference Corpus (Bird et al., 2008) (ACL-ARCd). Table 1 reports their size in tokens and sentences, as well as the definitional distribution (in the case of WCLd).

| | WCLd | ACL-ARCd |
|---|---|---|
| Sentences | 3,707 | 241,383 |
| Tokens | 103,037 | 6,709,314 |
| Definitions | 2,059 | NA |
| Distractors | 1,644 | NA |

2.2. Feature Extraction

The task of DE is modelled as a sentence classification problem, i.e. a sentence may or may not contain a definition. We chose CRF as the learning algorithm for two reasons. First, its sequential nature allows us to encode fine-grained features at the word level while also considering the context of each word. Second, it has proven useful in previous work on DE (Jin et al., 2013). The features used for modelling the data are based on linguistic, lexicographic and statistical information, as follows:

- Linguistic Features: Surface and lemmatized words, part-of-speech, chunking information (NPs) and syntactic dependencies.

- Lexicographic Information: A feature that looks at noun phrases and whether they appear in potential definiendum (D) or definiens (d) position[2], as illustrated in the following example:

  The⟨o-D⟩ Abwehr⟨b-D⟩ was⟨o-d⟩ a⟨o-d⟩ German⟨b-d⟩ intelligence⟨i-d⟩ organization⟨i-d⟩ from⟨o-d⟩ 1921⟨o-d⟩ to⟨o-d⟩ 1944⟨o-d⟩.

- Statistical Features: These features are designed to capture the degree of termhood of a word, its frequency in generic or domain-specific corpora, or evidence of its salience in definitional knowledge. They are:

  - termhood: This metric estimates how likely a candidate token is to be a terminological unit by comparing its frequency in general and domain-specific corpora (Kit and Liu, 2008). It is obtained as follows:

[math]\displaystyle{ \text { Termhood }(w)=\dfrac{r_{D}(w)}{\left|V_{D}\right|}-\frac{r_{B}(w)}{\left|V_{B}\right|} }[/math]

where $r_D(w)$ is the frequency-wise ranking of word $w$ in a domain corpus (in our case, WCLd), and $r_B(w)$ is the frequency-wise ranking of the same word in a general corpus, namely the Brown corpus (Francis and Kucera, 1979). The denominators refer to the token-level size of each corpus. If word $w$ only appears in the general corpus, we set Termhood(w) to $-\infty$, and to $\infty$ in the opposite case.
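The termhood computation can be sketched as follows. Two choices here are assumptions, not taken from the text: ranks are assigned in ascending order of frequency (the rarest type gets rank 1, the most frequent the highest rank), with ties broken alphabetically; and a word absent from both corpora gets 0. As described above, ranks are normalized by the token count of each corpus. The helper names are hypothetical.

```python
import math
from collections import Counter
from typing import Dict, List


def ascending_ranks(tokens: List[str]) -> Dict[str, int]:
    """Frequency-wise ranks: the rarest type gets rank 1, the most frequent
    type the highest rank. Ties are broken alphabetically (an assumption)."""
    freq = Counter(tokens)
    ordered = sorted(freq, key=lambda w: (freq[w], w))
    return {w: i + 1 for i, w in enumerate(ordered)}


def termhood(word: str, domain: List[str], general: List[str]) -> float:
    """Rank difference between a domain corpus D and a general corpus B."""
    r_d, r_b = ascending_ranks(domain), ascending_ranks(general)
    if word in r_d and word not in r_b:
        return math.inf               # only seen in the domain corpus
    if word in r_b and word not in r_d:
        return -math.inf              # only seen in the general corpus
    if word not in r_d:
        return 0.0                    # unseen in both: hypothetical default
    # denominators: token-level size of each corpus, as stated in the text
    return r_d[word] / len(domain) - r_b[word] / len(general)


domain = "model crf model is model crf".split()
general = "the is the model the is".split()
print(round(termhood("model", domain, general), 4))  # 0.3333
print(round(termhood("is", domain, general), 4))     # -0.1667
```

In a real setting the two rank tables would be computed once per corpus rather than on every call; they are recomputed here only to keep the sketch short.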

  - tf-gen: Frequency of the current word in the general-domain corpus $B$ (Brown Corpus).

  - tf-dom: Frequency of the current word in the domain-specific corpus $D$ (WCLd).

  - tfidf: Tf-idf of the current word over the training set, where each sentence is considered a separate document.

  - def_prom: Definitional Prominence describes the probability of a word $w$ appearing in a definitional sentence ($s = def$). It is computed from the word's frequency in definitions and non-definitions in WCLd as follows:

[math]\displaystyle{ \text { DefProm }(w)=\dfrac{\text { DF }}{\mid \text { Defs } \mid}-\frac{\text { NF }}{\mid \text { Nodefs } \mid} }[/math]

where $\mathrm{DF}=\sum_{i=0}^{i=n}\left(s_{i}=\text { def } \wedge w \in s_{i}\right)$ and $\mathrm{NF}=\sum_{i=0}^{i=n}\left(s_{i}=\text { nodef } \wedge w \in s_{i}\right)$. As with the termhood feature, if a word $w$ is only found in definitional sentences, we set DefProm(w) to $\infty$, and to $-\infty$ if it is only seen in non-definitional sentences.
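A minimal sketch of DefProm, reading DF and NF as the number of definitional and non-definitional sentences that contain $w$, exactly as the sums above count them. Returning 0 for a word seen in neither class is a hypothetical default the text does not cover; the function name is also hypothetical.

```python
import math
from typing import List, Tuple

Sentence = Tuple[List[str], str]  # (tokens, "DEF" or "NODEF")


def def_prom(word: str, sentences: List[Sentence]) -> float:
    """Definitional Prominence: share of definitions containing `word`
    minus share of non-definitions containing it."""
    defs = [set(toks) for toks, label in sentences if label == "DEF"]
    nodefs = [set(toks) for toks, label in sentences if label == "NODEF"]
    df = sum(word in s for s in defs)      # DF: definitions containing w
    nf = sum(word in s for s in nodefs)    # NF: non-definitions containing w
    if df and not nf:
        return math.inf                    # only in definitions
    if nf and not df:
        return -math.inf                   # only in non-definitions
    if not df:
        return 0.0                         # unseen word: hypothetical default
    return df / len(defs) - nf / len(nodefs)


corpus = [
    ("a crf is a model".split(), "DEF"),
    ("an hmm is a model".split(), "DEF"),
    ("i think it is good".split(), "NODEF"),
    ("we use a crf".split(), "NODEF"),
]
for w in ["is", "crf", "model", "think"]:
    print(w, def_prom(w, corpus))  # is 0.5, crf 0.0, model inf, think -inf
```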

  - D_prom: Definiendum Prominence, on the other hand, models our intuition that a word appearing more often in potential definiendum position may be a definitional keyword. It is computed as follows:

[math]\displaystyle{ \mathrm{DP}(\mathrm{w})=\dfrac{\displaystyle\sum_{i=0}^{i=n} w_{i} \in \operatorname{term}_{D}}{|D T|} }[/math]

where $\operatorname{term}_D$ is a noun phrase (i.e. a term candidate) appearing in potential definiendum position, and $|DT|$ is the size of the corpus of candidate terms in potential definiendum position.
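The lexicographic tags and Definiendum Prominence can be sketched together. This is a sketch under stated assumptions: POS and NP chunk tags (b/i/o) are taken as given (no chunker is implemented); the D/d position uses a hypothetical heuristic (tokens before the first verb are in definiendum position, the verb and everything after it in definiens position), which reproduces the D/d split of the Abwehr example; and $|DT|$ is taken to be the total number of tokens inside candidate terms in D position. All function names are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Tuple


def position_tags(pos_tags: List[str], chunk_tags: List[str]) -> List[str]:
    """Attach a potential definiendum (D) / definiens (d) position to each
    NP chunk tag (b/i/o). Hypothetical heuristic: tokens before the first
    verb (Penn tag starting with 'VB') are in D position, the rest in d."""
    tags, seen_verb = [], False
    for pos, chunk in zip(pos_tags, chunk_tags):
        seen_verb = seen_verb or pos.startswith("VB")
        tags.append(f"{chunk}-{'d' if seen_verb else 'D'}")
    return tags


def definiendum_prominence(corpus: List[List[Tuple[str, str]]]) -> Dict[str, float]:
    """DP(w): occurrences of w inside candidate terms in definiendum position
    (tags b-D / i-D), divided by |DT|, taken here as the total number of such
    tokens (an assumption about how |DT| is counted)."""
    counts = Counter(tok for sent in corpus for tok, tag in sent
                     if tag in ("b-D", "i-D"))
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()} if total else {}


words = "The Abwehr was a German intelligence organization from 1921 to 1944".split()
pos = ["DT", "NNP", "VBD", "DT", "JJ", "NN", "NN", "IN", "CD", "TO", "CD"]
chunks = ["o", "b", "o", "o", "b", "i", "i", "o", "o", "o", "o"]
tags = position_tags(pos, chunks)
print(list(zip(words, tags))[:3])  # [('The', 'o-D'), ('Abwehr', 'b-D'), ('was', 'o-d')]

second = [("Discourse", "b-D"), ("refers", "o-d"), ("to", "o-d")]
print(definiendum_prominence([list(zip(words, tags)), second]))
```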

These features are used to train a CRF model. DEFEXT is built on top of the CRF toolkit CRF++[3], which allows selecting the features to be considered at each iteration, as well as the context window.
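CRF++ reads the features to use, and the context window over which they apply, from a template file: in a unigram template such as `U00:%x[-1,0]`, `%x[row,col]` refers to column `col` of the token `row` positions away from the current one, and a line containing only `B` adds a bigram template over output labels. A minimal generator for such a file might look like this; the column indices and window size DEFEXT actually uses are not given here, so the values below are placeholders, and `make_template` is a hypothetical helper.

```python
from typing import List


def make_template(columns: List[int], window: int, bigram: bool = True) -> str:
    """Build CRF++ unigram templates U<n>:%x[offset,col] for every feature
    column and every offset in [-window, window]; optionally append 'B'."""
    lines = []
    n = 0
    for col in columns:
        for offset in range(-window, window + 1):
            lines.append(f"U{n:02d}:%x[{offset},{col}]")
            n += 1
    if bigram:
        lines.append("B")  # bigram template over output labels
    return "\n".join(lines) + "\n"


# placeholder choice: surface form (column 0) and part-of-speech (column 2), window of 1
print(make_template([0, 2], 1), end="")
```

The resulting file would then be passed to CRF++ as `crf_learn template train.data model`. The label column should never appear in a template, since it is what the model predicts.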

2.3. Bootstrapping

DEFEXT implements a bootstrapping approach inspired by the well-known Yarowsky algorithm for Word Sense Disambiguation (Yarowsky, 1995). It works as follows. Given a small set of labeled seed examples (in our case, WCLd), a large target dataset of cases to be classified (ACL-ARCd), and a learning algorithm (CRF), a model is first trained on the seeds and used to classify the whole target dataset. Instances classified with high confidence are appended to the training data, and the process is repeated until convergence or until a maximum number of iterations is reached. We apply this methodology to definition bootstrapping: at each iteration, we extract the highest-confidence definition and the highest-confidence non-definition from the target corpus, retrain, and classify again. The maximum number of iterations can be set by the user as an input parameter. Only the latest version of each corpus is kept on disk.
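The loop described above can be sketched as follows. It is a sketch, not DEFEXT's code: the CRF is replaced by a pluggable `train` function returning a scorer (higher means more likely DEF), and the toy `train_keyword_scorer` below is a hypothetical stand-in based on word counts. Only the maximum-iteration and exhausted-pool stopping conditions are modelled; convergence detection and on-disk storage are not.

```python
from collections import Counter
from typing import Callable, List, Tuple

Labeled = Tuple[str, str]          # (sentence, "DEF" or "NODEF")
Scorer = Callable[[str], float]    # confidence that a sentence is DEF


def bootstrap(seed: List[Labeled], target: List[str],
              train: Callable[[List[Labeled]], Scorer],
              max_iter: int) -> Tuple[List[Labeled], List[str]]:
    """Yarowsky-style self-training: at each iteration, retrain, classify the
    remaining target sentences, and move the most confident definition and
    the most confident non-definition into the training data."""
    training, pool = list(seed), list(target)
    for _ in range(max_iter):
        if len(pool) < 2:                 # need one DEF and one NODEF candidate
            break
        score = train(training)
        ranked = sorted(pool, key=score)  # ascending confidence of DEF
        best_nodef, best_def = ranked[0], ranked[-1]
        training += [(best_def, "DEF"), (best_nodef, "NODEF")]
        pool.remove(best_def)
        pool.remove(best_nodef)
    return training, pool


def train_keyword_scorer(labeled: List[Labeled]) -> Scorer:
    """Toy stand-in for the CRF (NOT what DEFEXT uses): a smoothed ratio of
    word counts seen in definitions versus non-definitions."""
    pos, neg = Counter(), Counter()
    for sent, label in labeled:
        (pos if label == "DEF" else neg).update(sent.split())

    def score(sent: str) -> float:
        p = sum(pos[w] for w in sent.split())
        n = sum(neg[w] for w in sent.split())
        return (p + 1) / (p + n + 2)

    return score


seed = [("a crf is a model", "DEF"), ("i think this is great", "NODEF")]
target = ["an hmm is a model", "i think so", "we ran it"]
training, rest = bootstrap(seed, target, train_keyword_scorer, max_iter=5)
print(training[2:], rest)
```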

3. Experiments and Evaluation

As the bootstrapping process advances, the trained models gradually become more aware of the linguistic particularities of the genre and register of the target corpus. This allows them to capture definition fragments with syntactic structures that may not exist in the original seeds. In this section, we summarize the main conclusions drawn from experiments on two held-out test datasets, namely the W00 corpus (Jin et al., 2013) and the MSR-NLP corpus (Espinosa-Anke et al., 2015c)[4]. The former is a manually annotated subset of the ACL Anthology, which shows high domain specificity as well as considerable variability in how a term is introduced and defined (e.g. by means of a comparison, or by placing the defined term at the end of the sentence). The latter was compiled manually from a set of abstracts available at the Microsoft Research website[5], where the first sentence of each abstract is tagged as a definition. This corpus shows less linguistic variability, and its definitions are, in the vast majority of cases, highly canonical. Table 2[6] shows one sample definition from each corpus.

| CORPUS | DEFINITION |
|---|---|
| WCLd | The Abwehr was a German intelligence organization from 1921 to 1944 |
| W00 | Discourse refers to any form of language-based communication involving multiple sentences or utterances. |
| ACL-ARCd | In computational linguistics, word sense disambiguation (WSD) is an open problem of natural language processing, which governs the process of identifying which sense of a word. |
| MSR-NLP | User interface is a way by which a user communicates with a computer through a particular software application. |

3.1. Definition Extraction

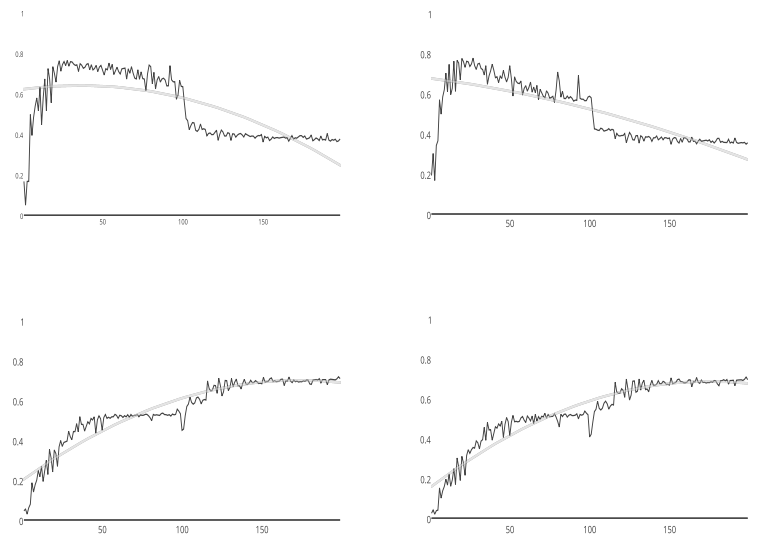

Starting with the WCLd corpus as seed data and the ACL-ARCd collection for bootstrapping, we performed 200 iterations and, at every iteration, computed sentence-level Precision, Recall and F-Score on both the W00 and the MSR-NLP corpora. At iteration 100, we recalculated the statistical features over the bootstrapped training data (which by then included 200 additional sentences, 100 of each label). The trends for both corpora, shown in Figure 2, indicate that the model gets better at classifying definitional knowledge in corpora with greater variability, as its performance on W00 suggests, while its performance on standard language decreases. The best iterations and their corresponding scores for both datasets are shown in Table 3.

| Corpus | Iteration | P | R | F |
|---|---|---|---|---|
| MSR-NLP | 20 | 78.2 | 76.7 | 77.44 |
| W00 | 198 | 62.47 | 82.01 | 71.85 |
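Sentence-level Precision, Recall and F-Score can be computed as below, assuming DEF is the positive class and F is the harmonic mean of P and R (the text does not spell out either choice). As a check, the harmonic mean of the MSR-NLP precision and recall in Table 3 gives its reported F of 77.44. Function names are hypothetical.

```python
from typing import List, Tuple


def prf(gold: List[str], pred: List[str], positive: str = "DEF") -> Tuple[float, float, float]:
    """Sentence-level precision, recall and F1 for the positive class."""
    pairs = list(zip(gold, pred))
    tp = sum(g == positive and p == positive for g, p in pairs)
    fp = sum(g != positive and p == positive for g, p in pairs)
    fn = sum(g == positive and p != positive for g, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def f1_from(p: float, r: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * p * r / (p + r)


print(round(f1_from(78.2, 76.7), 2))  # 77.44 (MSR-NLP row of Table 3)
```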

3.2. Human Evaluation

In this additional experiment, we assessed the quality of the definitions extracted during the bootstrapping process. To this end, we performed 100 iterations over the ACL-ARCd corpus and presented NLP experts with 200 sentences (100 candidate definitions and 100 distractors) in shuffled order. Since the ACL-ARC corpus was obtained by converting PDF papers to text, it contains a considerable amount of noise derived from diverse formatting, equations, tables, and so on. All sentences, however, were presented in their original form, noise included, because in many cases we found that even noise could give the reader an idea of the context in which the sentence appeared (e.g. whether it is followed by a formula, or whether it points to a figure or table). The experiment was completed by two judges with extensive familiarity with the NLP domain and its terminology. Evaluators were allowed to leave the answer field blank if a sentence was unreadable due to noise.

In this experiment, DEFEXT reached an average Precision (over the scores of both judges) of 0.50 over the whole dataset, and of 0.65 when only the sentences that the evaluators did not consider noise are taken into account. On average, evaluators found 23 sentences unreadable.

3.3. Technical Details

As mentioned earlier, DEFEXT is a bootstrapping wrapper around the CRF toolkit CRF++, which requires input data to be preprocessed in a column-based format. Specifically, each sentence is encoded as a matrix, where each word is a row and each feature a column, with columns separated by tabs. Usually, the word's surface form or lemma is in the first or second column, followed by other features such as part-of-speech, syntactic dependency or corpus-based features.

The last column in the dataset is the sentence label, which in DEFEXT is either DEF or NODEF, since it is designed as a sentence classification system.
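A minimal sketch of serializing one preprocessed sentence into this format: one tab-separated line per token, features first, the sentence label repeated as the last column of every token line, and a blank line closing the sentence. The helper name `to_crfpp_rows` and the example feature columns (surface form, lemma, POS) are hypothetical.

```python
from typing import List


def to_crfpp_rows(features_per_token: List[List[str]], label: str) -> str:
    """Serialize one sentence: one tab-separated line per token (surface form
    first, then other features) ending with the sentence label; a blank line
    marks the end of the sentence."""
    if label not in ("DEF", "NODEF"):
        raise ValueError(f"unexpected label: {label!r}")
    lines = ["\t".join([*feats, label]) for feats in features_per_token]
    return "\n".join(lines) + "\n\n"


block = to_crfpp_rows([["The", "the", "DT"], ["Abwehr", "abwehr", "NNP"]], "DEF")
print(block, end="")
```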

Once the training and target data are preprocessed accordingly, DEFEXT can simply be invoked on any Unix machine. Further implementation details and command-line arguments can be found in the toolkit's documentation, as well as in comments throughout the code.

4. Discussion and Conclusion

We have presented DEFEXT, a system for weakly supervised DE at sentence level. We have summarized the most salient features of the algorithm by referring to experiments that took the NLP domain as a use case (Espinosa-Anke et al., 2015c), and complemented them with an additional human evaluation. We have also covered the main requirements for it to function properly, such as data format and command-line arguments. No external Python libraries are required; the only prerequisite is to have CRF++ installed. We hope the research community in lexicography, computational lexicography and corpus linguistics finds this tool useful for automating term and definition extraction, for example as support for glossary generation or hypernymic (is-a) relation extraction.

Footnotes

- ↑ https://bitbucket.org/luisespinosa/defext

- ↑ The genus et differentia model of a definition, which traces back to Aristotelian times, distinguishes between the definiendum, the term that is being defined, and the definiens, i.e. the cluster of words that describe the core characteristics of the term.

- ↑ https://taku910.github.io/crfpp/

- ↑ Available at http://taln.upf.edu/MSR-NLP RANLP2015

- ↑ http://academic.research.microsoft.com

- ↑ Note that, for clarity, we have removed from the examples any metainformation present in the original datasets.

References

2016

- (Espinosa-Anke et al., 2016) ⇒ Luis Espinosa-Anke, Horacio Saggion, Francesco Ronzano, and Roberto Navigli (2016, February). "Extasem! Extending, Taxonomizing and Semantifying Domain Terminologies". In: Proceedings of the 30th conference on artificial intelligence (AAAI’16).

2015a

- (Espinosa-Anke et al., 2015a) ⇒ Espinosa-Anke, L., Saggion, H., and Delli Bovi, C. (2015a). Definition extraction using sense-based embeddings. In: International Workshop on Embeddings and Semantics, SEPLN.

2015b

- (Espinosa-Anke et al., 2015b) ⇒ Espinosa-Anke, L., Saggion, H., and Ronzano, F. (2015b). Hypernym extraction: Combining machine learning and dependency grammar. In: CICLing 2015, Cairo, Egypt. Springer-Verlag. To appear.

2015c

- (Delli Bovi et al., 2015) ⇒ Delli Bovi, C., Telesca, L., and Navigli, R. (2015). Large-scale information extraction from textual definitions through deep syntactic and semantic analysis. Transactions of the Association for Computational Linguistics, 3:529–543.

2015d

- (Espinosa-Anke et al., 2015c) ⇒ Espinosa-Anke, L., Saggion, H., and Ronzano, F. (2015c). Weakly supervised definition extraction. In: Proceedings of RANLP 2015.

2014

- (Espinosa-Anke & Saggion, 2014) ⇒ Espinosa-Anke, L. and Saggion, H. (2014). Applying dependency relations to definition extraction. In: Natural Language Processing and Information Systems, pages 63–74. Springer.

2013a

- (De Benedictis et al., 2013) ⇒ De Benedictis, F., Faralli, S., Navigli, R., et al. (2013). GlossBoot: Bootstrapping multilingual domain glossaries from the web. In: ACL (1), pages 528–538.

2013b

- (Jin et al., 2013) ⇒ Jin, Y., Kan, M.-Y., Ng, J.-P., and He, X. (2013). Mining scientific terms and their definitions: A study of the ACL anthology. In: Proceedings of the 2013 Conference on Empirical Methods in Natural Language Processing, pages 780–790, Seattle, Washington, USA, October. Association for Computational Linguistics.

2013c

- (Velardi et al., 2013) ⇒ Paola Velardi, Stefano Faralli and Roberto Navigli (2013). "OntoLearn Reloaded: A Graph-Based Algorithm for Taxonomy Induction". In: Computational Linguistics, 39(3), 665-707.

2012

- (Reiplinger et al., 2012) ⇒ Reiplinger, M., Schäfer, U., and Wolska, M. (2012). Extracting glossary sentences from scholarly articles: A comparative evaluation of pattern bootstrapping and deep analysis. In: Proceedings of the ACL-2012 Special Workshop on Rediscovering 50 Years of Discoveries, pages 55–65, Jeju Island, Korea, July. Association for Computational Linguistics.

2010a

- (Navigli & Velardi, 2010) ⇒ Navigli, R. and Velardi, P. (2010). Learning word-class lattices for definition and hypernym extraction. In: ACL, pages 1318–1327.

2010b

- (Navigli et al., 2010) ⇒ Navigli, R., Velardi, P., and Ruiz-Martínez, J. M. (2010). An annotated dataset for extracting definitions and hypernyms from the web. In: Proceedings of LREC’10, Valletta, Malta, May.

2008a

- (Bird et al., 2008) ⇒ Bird, S., Dale, R., Dorr, B., Gibson, B., Joseph, M., Kan, M.-Y., Lee, D., Powley, B., Radev, D., and Tan, Y. F. (2008). The ACL Anthology Reference Corpus: A reference dataset for bibliographic research in computational linguistics. In: Proceedings of the Sixth International Conference on Language Resources and Evaluation (LREC08), Marrakech, Morocco, May. European Language Resources Association (ELRA). ACL Anthology Identifier: L08-1005.

2008b

- (Kit & Liu, 2008) ⇒ Kit, C. and Liu, X. (2008). Measuring mono-word termhood by rank difference via corpus comparison. Terminology, 14(2):204–229.

2006a

- (Sarmento et al., 2006) ⇒ Sarmento, L., Maia, B., Santos, D., Pinto, A., and Cabral, L. (2006). Corpografo V3: From Terminological Aid to Semi-automatic Knowledge Engineering. In: 5th International Conference on Language Resources and Evaluation (LREC’06), Geneva.

2006b

- (Storrer & Wellinghoff, 2006) ⇒ Storrer, A. and Wellinghoff, S. (2006). Automated detection and annotation of term definitions in German text corpora. In: Conference on Language Resources and Evaluation (LREC).

2005

- (Cui et al., 2005) ⇒ Cui, H., Kan, M.-Y., and Chua, T.-S. (2005). Generic soft pattern models for definitional question answering. In: Proceedings of the 28th annual international ACM SIGIR conference on Research and development in information retrieval, pages 384–391. ACM.

2004

- (Saggion & Gaizauskas, 2004) ⇒ Saggion, H. and Gaizauskas, R. (2004). Mining on-line sources for definition knowledge. In: 17th FLAIRS, Miami Beach, Florida.

2002a

- (Muresan & Klavans, 2002) ⇒ Muresan, S. and Klavans, J. (2002). A method for automatically building and evaluating dictionary resources. In: Proceedings of the Language Resources and Evaluation Conference (LREC).

2002b

- (Park et al., 2002) ⇒ Park, Y., Byrd, R. J., and Boguraev, B. K. (2002). Automatic Glossary Extraction: Beyond Terminology Identification. In: Proceedings of the 19th International Conference on Computational Linguistics, pages 1–7, Morristown, NJ, USA. Association for Computational Linguistics.

2000

- (Rebeyrolle & Tanguy, 2000) ⇒ Rebeyrolle, J. and Tanguy, L. (2000). Repérage automatique de structures linguistiques en corpus : le cas des énoncés définitoires. Cahiers de Grammaire, 25:153–174.

1995

- (Yarowsky, 1995) ⇒ Yarowsky, D. (1995). Unsupervised word sense disambiguation rivaling supervised methods. In: Proceedings of the 33rd annual meeting on Association for Computational Linguistics, pages 189–196. Association for Computational Linguistics.

1979

- (Francis & Kucera, 1979) ⇒ Francis, W. N. and Kucera, H. (1979). Brown corpus manual. Brown University.

BibTeX

@article{2016_DEFEXTASemiSupervisedDefinition,

author = {Luis Espinosa Anke and

Roberto Carlini and

Horacio Saggion and

Francesco Ronzano},

title = {DefExt: A Semi Supervised Definition Extraction Tool},

journal = {CoRR},

volume = {abs/1606.02514},

year = {2016},

url = {http://arxiv.org/abs/1606.02514},

archivePrefix = {arXiv},

eprint = {1606.02514},

}

| Page | Author | title | year |
|---|---|---|---|
| 2016 DEFEXTASemiSupervisedDefinition | Horacio Saggion, Luis Espinosa-Anke, Roberto Carlini, Francesco Ronzano | DEFEXT: A Semi Supervised Definition Extraction Tool | 2016 |