2015 TheUnreasonableEffectivenessofC

- (Goldberg, 2015) ⇒ Yoav Goldberg. (2015). “The Unreasonable Effectiveness of Character-level Language Models (and Why RNNs Are Still Cool).” In: Jupyter Notebook - Nbviewer.

Subject Headings: Character-level LM, Unsmoothed Maximum-Likelihood Character-level LM, LM-based NLG, Python-based Character-Level Maximum Entropy LM System.

Notes

- It analyzes the effectiveness of (Karpathy, 2015).

- PDF Version: KarparthyUNREASONABLY-EFFECTIVE-RNN-15.pdf

Cited By

- Google Scholar: ~ 108 Citations.

Quotes

Introduction

RNNs, LSTMs and Deep Learning are all the rage, and a recent blog post by Andrej Karpathy is doing a great job explaining what these models are and how to train them. It also provides some very impressive results of what they are capable of. This is a great post, and if you are interested in natural language, machine learning or neural networks you should definitely read it.

Go read it now, then come back here.

You're back? Good. Impressive stuff, huh? How could the network learn to imitate the input like that? Indeed. I was quite impressed as well.

However, it feels to me that most readers of the post are impressed for the wrong reasons. This is because they are not familiar with unsmoothed maximum-likelihood character-level language models and their unreasonable effectiveness at generating rather convincing natural language outputs.

In what follows I will briefly describe these character-level maximum-likelihood language models, which are much less magical than RNNs and LSTMs, and show that they too can produce rather convincing Shakespearean prose. I will also show about 30 lines of Python code that take care of both training the model and generating the output. Compared to this baseline, the RNNs may seem somewhat less impressive. So why was I impressed? I will explain this too, below.

Unsmoothed Maximum Likelihood Character Level Language Model

The name is quite long, but the idea is very simple. We want a model whose job is to guess the next character based on the previous $n$ letters. For example, having seen "ello", the next character is likely to be either a comma or a space (if we assume it is the end of the word "hello"), or the letter "w" if we believe we are in the middle of the word "mellow". Humans are quite good at this, but of course seeing a larger history makes things easier (if we were to see 5 letters instead of 4, the choice between space and "w" would have been much easier).

We will call $n$, the number of letters we base our guess on, the order of the language model.

RNNs and LSTMs can potentially learn an infinite-order language model (they guess the next character based on a "state" which supposedly encodes all the previous history). Here we will restrict ourselves to a fixed-order language model.

So, we are seeing $n$ letters and need to guess the $(n+1)$th one. We are also given a large-ish amount of text (say, all of Shakespeare's works) that we can use. How would we go about solving this task?

Mathematically, we would like to learn a function $P(c|h)$. Here, $c$ is a character, $h$ is an $n$-letter history, and $P(c|h)$ stands for how likely it is to see $c$ after we've seen $h$.

Perhaps the simplest approach would be to just count and divide (a.k.a. maximum-likelihood estimates). We count the number of times each letter $c'$ appeared after $h$, and divide by the total number of letters appearing after $h$. The unsmoothed part means that if we did not see a given letter following $h$, we will just give it a probability of zero.
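In symbols, writing $\mathrm{count}(h, c)$ for the number of times $c$ follows $h$ in the training text (notation introduced here for illustration), the count-and-divide estimate is:

$$P(c \mid h) = \frac{\mathrm{count}(h, c)}{\sum_{c'} \mathrm{count}(h, c')}$$

Any $c$ with $\mathrm{count}(h, c) = 0$ gets $P(c \mid h) = 0$, which is exactly what "unsmoothed" means here.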

And that's all there is to it.

Training Code

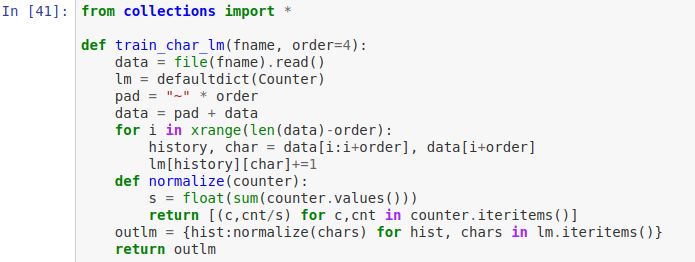

Here is the code for training the model. fname is the file to read the characters from, and order is the history size to consult. Note that we pad the data with leading ~ characters so that we also learn how to start.
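The training code survives above only as an image. Below is a minimal Python 3 sketch that follows the same description. The function name and arguments are taken from the train_char_lm call below. The return format, a dict that maps each history to a list of (character, probability) pairs, is an assumption.

```python
from collections import Counter, defaultdict


def train_char_lm(fname, order=4):
    """Unsmoothed maximum-likelihood character LM of the given order (sketch).

    Returns a dict mapping each `order`-character history to a list of
    (character, probability) pairs, estimated by counting and dividing.
    """
    with open(fname, encoding="utf-8") as f:
        data = f.read()
    # Pad with leading "~" so the model also learns how the text starts.
    data = "~" * order + data
    counts = defaultdict(Counter)
    for i in range(len(data) - order):
        history, char = data[i:i + order], data[i + order]
        counts[history][char] += 1

    def normalize(counter):
        # Count and divide. Letters never seen after a history get no entry,
        # i.e. probability zero (the "unsmoothed" part).
        total = sum(counter.values())
        return [(c, n / total) for c, n in counter.items()]

    return {hist: normalize(chars) for hist, chars in counts.items()}
```

On the text "hello mellow", for example, the history "ello" is followed once by a space and once by "w". Each of those continuations therefore gets probability 0.5.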

Let's train it on Andrej's Shakespeare text:

Input [42]: !wget http://cs.stanford.edu/people/karpathy/char-rnn/shakespeare_input.txt

Input [43]: lm = train_char_lm("shakespeare_input.txt", order=4)

Ok. Now let's do some queries:

Input [44]: lm['ello']

Output [44]: ....
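The generation step mentioned in the introduction does not appear in this extract. The sketch below is a minimal Python 3 version. It assumes the model maps each history to a list of (character, probability) pairs. For each step it samples the next letter from P(c|h) by walking the cumulative distribution, then slides the order-letter window forward. The names generate_letter and generate_text are illustrative and may not match the notebook's own.

```python
import random


def generate_letter(lm, history, order):
    """Sample the next character from P(c | last `order` characters)."""
    dist = lm[history[-order:]]
    x = random.random()
    for c, p in dist:
        x -= p
        if x <= 0:
            return c
    return dist[-1][0]  # guard against floating-point round-off


def generate_text(lm, order, nletters=1000):
    """Generate up to `nletters` characters, starting from the "~" padding.

    Assumes order >= 1.
    """
    history = "~" * order
    out = []
    for _ in range(nletters):
        if history[-order:] not in lm:
            break  # dead end: this history was never followed by anything in training
        c = generate_letter(lm, history, order)
        history = history[-order:] + c
        out.append(c)
    return "".join(out)
```

You could run something like print(generate_text(lm, 4)) with the order-4 model from Input [43]. Generation starts from the ~ padding, so the output opens the way the training file opens. The early stop is an added safeguard. The last order letters of the training file may never be followed by anything, and in that case their history has no distribution.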

Generated Shakespeare from different order models

Order 2:

Order 4:

Order 7:

How about 10?

This works pretty well

With an order of 4, we already get quite reasonable results. Increasing the order to 7 (~word and a half of history) or 10 (~two short words of history) gets us quite passable Shakespearean text. I'd say it is on par with the examples in Andrej's post. And how simple and un-mystical the model is!

So why am I impressed with the RNNs after all?

Generating English a character at a time: not so impressive in my view. The RNN only needs to learn to condition on the previous n letters, for a rather small n, and that's it.

However, the code-generation example is very impressive. Why? Because of the context awareness. Note that in all of the posted examples, the code is well indented, the braces and brackets are correctly nested, and even the comments start and end correctly. This is not something that can be achieved by simply looking at the previous n letters.
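The following small illustration makes the limitation concrete. The strings are made up, and history_key and open_brackets are hypothetical helpers. Of the two prefixes, only the first leaves a ( open, yet both end in the same four characters. Any order-4 model must therefore predict the same next-character distribution for both.

```python
def history_key(text, order):
    """The only information a fixed-order model conditions on."""
    return text[-order:]


def open_brackets(text):
    """Number of '(' not yet closed: what correct code generation needs."""
    depth = 0
    for ch in text:
        if ch == "(":
            depth += 1
        elif ch == ")" and depth > 0:
            depth -= 1
    return depth


a = "if (x > 0 && ready"  # a '(' is still open
b = "if x > 0 && ready"   # no open '('
order = 4
print(history_key(a, order) == history_key(b, order))  # True: same conditioning history
print(open_brackets(a), open_brackets(b))               # 1 0: different correct continuations
```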

If the examples are not cherry-picked, and the output is generally that nice, then the LSTM did learn something not trivial at all.

Just for the fun of it, let's see what our simple language model does with the Linux kernel code:

Order 10 is pretty much junk. At order 15 things sort of make sense, but we jump abruptly between the and by order 20 we are doing quite nicely, though we are still far from keeping good indentation and brackets.

How could we? We do not have the memory, and these things are not modeled at all. We could quite easily enrich our model to also keep track of brackets and indentation, for example by adding information such as "have I seen ( but not )" to the conditioning history. However, this requires extra work and non-trivial human reasoning, and it will make the model significantly more complex.
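Here is a hedged sketch of that kind of enrichment. It conditions on the last order characters plus the current number of unclosed ( brackets. The function name is hypothetical, and tracking only parentheses is an illustrative simplification. The count-and-divide estimate is unchanged, and only the conditioning key grows.

```python
from collections import Counter, defaultdict


def train_bracket_aware_lm(data, order=4):
    """Count-and-divide LM conditioned on (history, open-paren depth).

    Hypothetical extension: the number of unclosed '(' is added to the
    conditioning context so the model can learn when ')' is allowed.
    """
    data = "~" * order + data
    counts = defaultdict(Counter)
    depth = 0  # unclosed '(' in everything before the current character
    for i in range(len(data) - order):
        history, char = data[i:i + order], data[i + order]
        counts[(history, depth)][char] += 1
        # Update depth after consuming `char`, so the next context reflects it.
        if char == "(":
            depth += 1
        elif char == ")" and depth > 0:
            depth -= 1

    def normalize(counter):
        total = sum(counter.values())
        return [(c, n / total) for c, n in counter.items()]

    return {key: normalize(ctr) for key, ctr in counts.items()}
```

With order 2, the text "(ab)ab." separates the two occurrences of "ab". Inside the bracket, "ab" is followed by ")" with probability 1. Outside it, "ab" is followed by "." with probability 1. A plain order-2 model would give each continuation probability 0.5. Handling braces, comments and indentation as well would take several more hand-designed features like this one.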

The LSTM, on the other hand, seems to have just learned it on its own. And that's impressive.

References

- (Karpathy, 2015) ⇒ Andrej Karpathy. (2015). “The Unreasonable Effectiveness of Recurrent Neural Networks.” In: Blog post 2015-05-21.


| | Author | title | year |
|---|---|---|---|
| 2015 TheUnreasonableEffectivenessofC | Yoav Goldberg, Andrej Karpathy (1986-) | The Unreasonable Effectiveness of Character-level Language Models (and Why RNNs Are Still Cool) | 2015 |