2016 ADecomposableAttentionModelforN

- (Parikh et al., 2016) ⇒ Ankur P. Parikh, Oscar Täckström, Dipanjan Das, and Jakob Uszkoreit. (2016). “A Decomposable Attention Model for Natural Language Inference.” In: Proceedings of the 2016 Conference on Empirical Methods in Natural Language Processing (EMNLP 2016). arXiv:1606.01933

Subject Headings: Attention Mechanism, Textual Entailment Recognition.

Notes

Cited By

- Google Scholar: ~ 401 Citations

- Semantic Scholar: ~ 397 Citations

Quotes

Abstract

We propose a simple neural architecture for natural language inference. Our approach uses attention to decompose the problem into subproblems that can be solved separately, thus making it trivially parallelizable. On the Stanford Natural Language Inference (SNLI) dataset, we obtain state-of-the-art results with almost an order of magnitude fewer parameters than previous work and without relying on any word-order information. Adding intra-sentence attention that takes a minimum amount of order into account yields further improvements.

1 Introduction

Natural language inference (NLI) refers to the problem of determining entailment and contradiction relationships between a premise and a hypothesis. NLI is a central problem in language understanding (Katz, 1972; Bos and Markert, 2005; van Benthem, 2008; MacCartney and Manning, 2009) and recently the large SNLI corpus of 570K sentence pairs was created for this task (Bowman et al., 2015). We present a new model for NLI and leverage this corpus for comparison with prior work.

A large body of work based on neural networks for text similarity tasks including NLI has been published in recent years (Hu et al., 2014; Rocktäschel et al., 2016; Wang and Jiang, 2016; Yin et al., 2016, inter alia). The dominating trend in these models is to build complex, deep text representation models, for example, with convolutional networks (LeCun et al., 1990, CNNs henceforth) or long short-term memory networks (Hochreiter and Schmidhuber, 1997, LSTMs henceforth) with the goal of deeper sentence comprehension. While these approaches have yielded impressive results, they are often computationally very expensive, and result in models having millions of parameters (excluding embeddings).

Here, we take a different approach, arguing that for natural language inference it can often suffice to simply align bits of local text substructure and then aggregate this information. For example, consider the following sentences:

- Bob is in his room, but because of the thunder and lightning outside, he cannot sleep.

- Bob is awake.

- It is sunny outside.

The first sentence is complex in structure and it is challenging to construct a compact representation that expresses its entire meaning. However, it is fairly easy to conclude that the second sentence follows from the first one, by simply aligning “Bob” with “Bob” and “cannot sleep” with “awake” and recognizing that these are synonyms. Similarly, one can conclude that “It is sunny outside” contradicts the first sentence, by aligning “thunder” and “lightning” with “sunny” and recognizing that these are most likely incompatible.

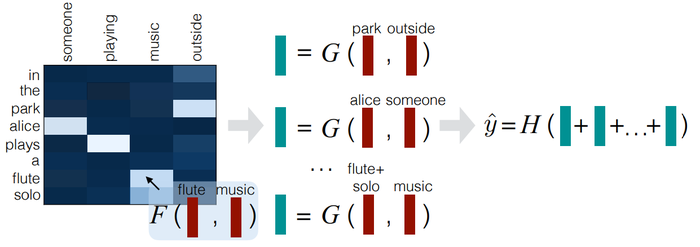

We leverage this intuition to build a simpler and more lightweight approach to NLI within a neural framework; with considerably fewer parameters, our model outperforms more complex existing neural architectures. In contrast to existing approaches, our approach only relies on alignment and is fully computationally decomposable with respect to the input text. An overview of our approach is given in Figure 1. Given two sentences, where each word is represented by an embedding vector, we first create a soft alignment matrix using neural attention (Bahdanau et al., 2015). We then use the (soft) alignment to decompose the task into subproblems that are solved separately. Finally, the results of these subproblems are merged to produce the final classification. In addition, we optionally apply intra-sentence attention (Cheng et al., 2016) to endow the model with a richer encoding of substructures prior to the alignment step. Asymptotically our approach does the same total work as a vanilla LSTM encoder, while being trivially parallelizable across sentence length, which can allow for considerable speedups in low-latency settings. Empirical results on the SNLI corpus show that our approach achieves state-of-the-art results, while using almost an order of magnitude fewer parameters compared to complex LSTM-based approaches.
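The three steps described above (soft alignment, separately solved subproblems, and a final merge) can be illustrated with a minimal sketch. It is not the authors' implementation: the weights are random and untrained, single bias-free ReLU layers stand in for the feed-forward networks, and the names `F`, `G`, `H`, `decomposable_attention`, and all dimensions are assumptions chosen for illustration. Only the Python standard library is used.

```python
import math
import random

def relu_layer(x, W):
    """One bias-free ReLU layer; W is a list of weight rows (an assumed stand-in)."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in W]

def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def weighted_sum(weights, vectors):
    """Soft-aligned phrase: the vectors averaged under the attention weights."""
    dim = len(vectors[0])
    return [sum(w * v[k] for w, v in zip(weights, vectors)) for k in range(dim)]

def vec_sum(vectors):
    return [sum(col) for col in zip(*vectors)]

def decomposable_attention(a, b, F, G, H):
    """a, b: lists of word embeddings for premise and hypothesis."""
    # Attend: unnormalized alignment scores e[i][j] between word i of a and word j of b.
    fa = [relu_layer(x, F) for x in a]
    fb = [relu_layer(x, F) for x in b]
    e = [[sum(p * q for p, q in zip(fi, fj)) for fj in fb] for fi in fa]
    beta = [weighted_sum(softmax(row), b) for row in e]         # part of b aligned to each a_i
    alpha = [weighted_sum(softmax(col), a) for col in zip(*e)]  # part of a aligned to each b_j
    # Compare: each word and its aligned phrase form an independent subproblem.
    v1 = [relu_layer(x + bt, G) for x, bt in zip(a, beta)]
    v2 = [relu_layer(x + al, G) for x, al in zip(b, alpha)]
    # Aggregate: sum over words, then classify into three classes.
    pooled = vec_sum(v1) + vec_sum(v2)
    logits = [sum(w * p for w, p in zip(row, pooled)) for row in H]
    return softmax(logits), e

def random_matrix(rows, cols, rng):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

rng = random.Random(0)
d, h = 4, 5  # hypothetical embedding and hidden sizes
premise = [[rng.gauss(0, 1) for _ in range(d)] for _ in range(6)]
hypothesis = [[rng.gauss(0, 1) for _ in range(d)] for _ in range(3)]
F = random_matrix(h, d, rng)
G = random_matrix(h, 2 * d, rng)
H = random_matrix(3, 2 * h, rng)

probs, e = decomposable_attention(premise, hypothesis, F, G, H)
# Reversing the premise leaves the prediction unchanged (up to rounding),
# since nothing in this sketch depends on word order.
probs_reversed, _ = decomposable_attention(premise[::-1], hypothesis, F, G, H)
print(probs, probs_reversed)
```

Because every row of `e` and every compare step depends only on one word and its aligned phrase, the list comprehensions could be evaluated in parallel across sentence positions, which is the sense in which the approach is decomposable.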

2 Related Work

3 Approach

3.1 Attend

3.2 Compare

3.3 Aggregate

3.4 Intra-Sentence Attention (Optional)

4 Computational Complexity

5 Experiments

5.1 Implementation Details

5.2 Results

6 Conclusion

Acknowledgements

References


| | Author | volume | Date Value | title | type | journal | titleUrl | doi | note | year |
|---|---|---|---|---|---|---|---|---|---|---|
| 2016 ADecomposableAttentionModelforN | Dipanjan Das, Jakob Uszkoreit, Ankur P. Parikh, Oscar Täckström | | | A Decomposable Attention Model for Natural Language Inference | | | | | | 2016 |