2019 MultiTaskDeepNeuralNetworksforN

- (Liu, He et al., 2019) ⇒ Xiaodong Liu, Pengcheng He, Weizhu Chen, and Jianfeng Gao. (2019). “Multi-Task Deep Neural Networks for Natural Language Understanding.” In: Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics (ACL). DOI:10.18653/v1/P19-1441

Subject Headings: Neural Natural Language Processing System, Multi-Task Deep Neural Network.

Notes

- Ground AI: https://www.groundai.com/project/multi-task-deep-neural-networks-for-natural-language-understanding/

- ACL Anthology: P19-1441

- ArXiv: 1901.11504

Cited By

- Google Scholar: ~61 Citations (Retrieved: 2019-10-27).

- Semantic Scholar: ~79 Citations (Retrieved: 2019-10-27).

Quotes

Abstract

In this paper, we present a Multi-Task Deep Neural Network (MT-DNN) for learning representations across multiple natural language understanding (NLU) tasks. MT-DNN not only leverages large amounts of cross-task data, but also benefits from a regularization effect that leads to more general representations in order to adapt to new tasks and domains. MT-DNN extends the model proposed in Liu et al. (2015) by incorporating a pre-trained bidirectional transformer language model, known as BERT (Devlin et al., 2018). MT-DNN obtains new state-of-the-art results on ten NLU tasks, including SNLI, SciTail, and eight out of nine GLUE tasks, pushing the GLUE benchmark to 82.2% (1.8% absolute improvement). We also demonstrate using the SNLI and SciTail datasets that the representations learned by MT-DNN allow domain adaptation with substantially fewer in-domain labels than the pre-trained BERT representations. Our code and pre-trained models will be made publicly available.

1 Introduction

2 Tasks

3 The Proposed MT-DNN Model

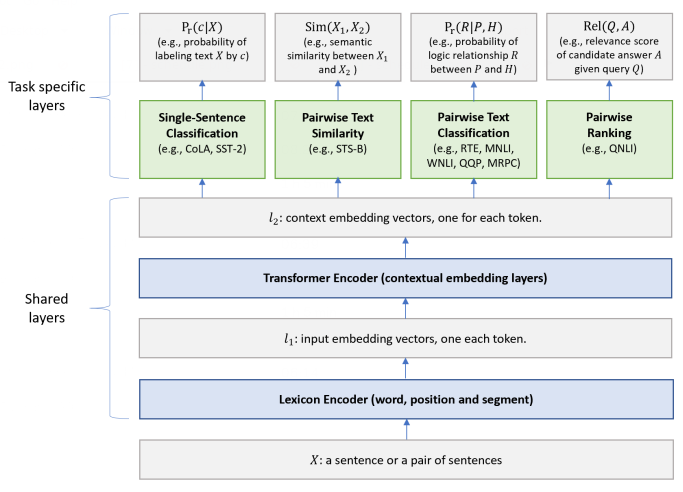

The architecture of the MT-DNN model is shown in Figure 1. The lower layers are shared across all tasks, while the top layers represent task-specific outputs. The input $X$, which is a word sequence (either a sentence or a pair of sentences packed together) is first represented as a sequence of embedding vectors, one for each word, in $\ell_1$. Then the transformer encoder captures the contextual information for each word via self-attention, and generates a sequence of contextual embeddings in $\ell_2$. This is the shared semantic representation that is trained by our multi-task objectives. In what follows, we elaborate on the model in detail.

Figure 1: Architecture of the MT-DNN model for representation learning. The lower layers are shared across all tasks while the top layers are task-specific. The input $X$ (either a sentence or a pair of sentences) is first represented as a sequence of embedding vectors, one for each word, in $\ell_1$. Then the Transformer encoder captures the contextual information for each word and generates the shared contextual embedding vectors in $\ell_2$. Finally, for each task, additional task-specific layers generate task-specific representations, followed by operations necessary for classification, similarity scoring, or relevance ranking.
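The shared-versus-task-specific split described above can be illustrated with a small toy sketch. It is not the authors' implementation: the dimension `DIM`, the class names, the random untrained weights, the single attention layer standing in for the full Transformer encoder, and the use of the first (`[CLS]`) position as the sequence summary are all assumptions made for illustration.

```python
import math
import random

random.seed(0)
DIM = 8  # hypothetical embedding size, chosen only for this toy example


def rand_matrix(rows, cols):
    """Small random weight matrix (untrained, for illustration only)."""
    return [[random.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]


def matvec(matrix, vector):
    """Multiply a rows x cols matrix by a vector of length cols."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def softmax(scores):
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


class SharedEncoder:
    """Shared layers: embedding lookup (l_1) and one self-attention layer (l_2)."""

    def __init__(self, vocab):
        self.embed = {tok: [random.uniform(-1, 1) for _ in range(DIM)] for tok in vocab}
        self.wq, self.wk, self.wv = (rand_matrix(DIM, DIM) for _ in range(3))

    def __call__(self, tokens):
        l1 = [self.embed[t] for t in tokens]  # one embedding vector per word
        q = [matvec(self.wq, x) for x in l1]
        k = [matvec(self.wk, x) for x in l1]
        v = [matvec(self.wv, x) for x in l1]
        l2 = []
        for qi in q:  # each position attends over every position
            scores = [sum(a * b for a, b in zip(qi, kj)) / math.sqrt(DIM) for kj in k]
            attn = softmax(scores)
            l2.append([sum(w * vj[d] for w, vj in zip(attn, v)) for d in range(DIM)])
        return l2  # contextual embeddings shared by all tasks


class ClassificationHead:
    """Task-specific layer: class probabilities from the first position."""

    def __init__(self, num_classes):
        self.w = rand_matrix(num_classes, DIM)

    def __call__(self, l2):
        return softmax(matvec(self.w, l2[0]))


class SimilarityHead:
    """Task-specific layer: a single real-valued similarity score."""

    def __init__(self):
        self.w = rand_matrix(1, DIM)

    def __call__(self, l2):
        return matvec(self.w, l2[0])[0]


vocab = ["[CLS]", "[SEP]", "a", "man", "sleeps", "person", "rests"]
encoder = SharedEncoder(vocab)
heads = {"classification": ClassificationHead(3), "similarity": SimilarityHead()}

# A sentence pair packed into one input sequence.
tokens = ["[CLS]", "a", "man", "sleeps", "[SEP]", "a", "person", "rests", "[SEP]"]
shared = encoder(tokens)  # computed once, reused by every head
outputs = {name: head(shared) for name, head in heads.items()}
print(outputs)
```

Every head consumes the same `shared` representation, so in a trained model a gradient from any task would update the encoder weights; only the head weights are private to a task.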

(...)

3.1 The Training Procedure

4 Experiments

4.1 Datasets

4.2 Implementation details

4.3 GLUE Results

4.4 SNLI and SciTail Results

4.5 Domain Adaptation Results

5 Conclusion

6 Acknowledgements

References


| | Author | volume | Date Value | title | type | journal | titleUrl | doi | note | year |
|---|---|---|---|---|---|---|---|---|---|---|
| 2019 MultiTaskDeepNeuralNetworksforN | Weizhu Chen, Jianfeng Gao, Xiaodong Liu, Pengcheng He | | | Multi-Task Deep Neural Networks for Natural Language Understanding | | | | | | 2019 |