Bidirectional Encoder Representations from Transformers (BERT) Network Architecture

A Bidirectional Encoder Representations from Transformers (BERT) Network Architecture is a transformer-based encoder-only architecture that is based on a Multi-layer Bidirectional Transformer Encoder Neural Network.

- Context:

- It can be instantiated in a BERT-based Language Model.

- …

- Example(s):

- …

- Counter-Example(s):

- See: Bidirectional Neural Network, Unsupervised Machine Learning System, Seq2Seq Network.

References

2019a

- (Devlin et al., 2019) ⇒ Jacob Devlin, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova. (2019). “BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding.” In: Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (NAACL-HLT 2019), Volume 1 (Long and Short Papers). DOI:10.18653/v1/N19-1423. arXiv:1810.04805

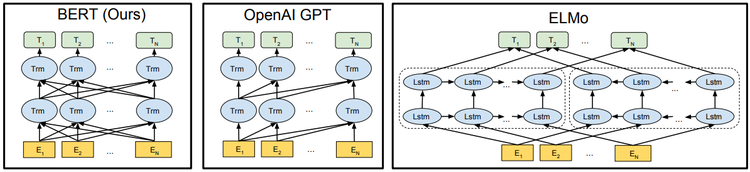

- QUOTE: We introduce a new language representation model called BERT, which stands for Bidirectional Encoder Representations from Transformers. Unlike recent language representation models, BERT is designed to pre-train deep bidirectional representations by jointly conditioning on both left and right context in all layers (...)

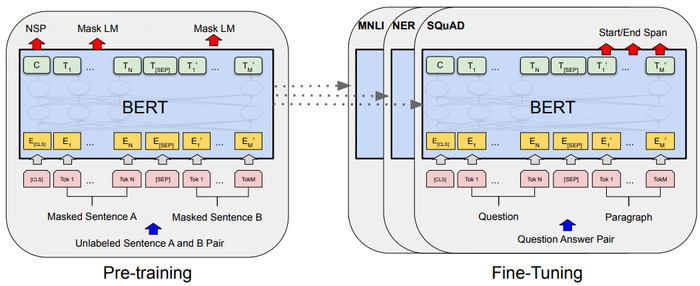

A distinctive feature of BERT is its unified architecture across different tasks. There is minimal difference between the pre-trained architecture and the final downstream architecture.

Model Architecture: BERT’s model architecture is a multi-layer bidirectional Transformer encoder (...)
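As an illustration of what "bidirectional" means in the excerpt above, the following minimal sketch contrasts the self-attention visibility pattern of a bidirectional encoder with that of a left-to-right model. The function names `bidirectional_mask` and `left_to_right_mask` are hypothetical helpers introduced here, not code from the paper, and a real implementation would apply such a mask inside each attention layer.

```python
from typing import List


def bidirectional_mask(n: int) -> List[List[int]]:
    """Visibility mask for a bidirectional encoder over n tokens.

    Entry [i][j] == 1 means position i may attend to position j.
    Every position sees both its left and its right context.
    """
    return [[1] * n for _ in range(n)]


def left_to_right_mask(n: int) -> List[List[int]]:
    """Visibility mask for a left-to-right (causal) model, for contrast.

    Position i may attend only to positions j <= i.
    """
    return [[1 if j <= i else 0 for j in range(n)] for i in range(n)]


if __name__ == "__main__":
    for row in bidirectional_mask(3):
        print(row)
    for row in left_to_right_mask(3):
        print(row)
```

In the bidirectional case every row is all ones, so the representation of each token can depend on the whole sequence in every layer.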

Input/Output Representations: To make BERT handle a variety of down-stream tasks, our input representation is able to unambiguously represent both a single sentence and a pair of sentences (e.g., ⟨Question, Answer⟩) in one token sequence (...)

Figure 1: Overall pre-training and fine-tuning procedures for BERT. Apart from output layers, the same architectures are used in both pre-training and fine-tuning. The same pre-trained model parameters are used to initialize models for different down-stream tasks. During fine-tuning, all parameters are fine-tuned. [CLS] is a special symbol added in front of every input example, and [SEP] is a special separator token (e.g. separating questions/answers).
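The input packing described in the excerpt can be sketched as follows. This is a minimal illustration, not the reference implementation: `pack_inputs` is a hypothetical helper, the tokens are assumed to be already split (BERT itself uses WordPiece tokenization), and the segment ids follow the sentence A / sentence B distinction described in Devlin et al. (2019) rather than anything shown in the quoted passage.

```python
from typing import List, Optional, Tuple

CLS = "[CLS]"  # special symbol added in front of every input example
SEP = "[SEP]"  # special separator token


def pack_inputs(
    tokens_a: List[str],
    tokens_b: Optional[List[str]] = None,
) -> Tuple[List[str], List[int]]:
    """Pack a single sentence or a sentence pair into one token sequence.

    Returns the packed tokens and a parallel list of segment ids
    (0 for the first segment, 1 for the second).
    """
    tokens = [CLS] + tokens_a + [SEP]
    segment_ids = [0] * len(tokens)
    if tokens_b is not None:
        tokens += tokens_b + [SEP]
        segment_ids += [1] * (len(tokens_b) + 1)
    return tokens, segment_ids


if __name__ == "__main__":
    question = ["who", "wrote", "it"]
    answer = ["devlin"]
    print(pack_inputs(question))
    print(pack_inputs(question, answer))
```

Because a single sentence and a sentence pair share one format, the same encoder can be reused across tasks, with only the output layer changing.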

...

…

…