Neural Network Depth

A Neural Network Depth is a network topology metric that counts the number of hidden layers in a neural network.

- Example(s):

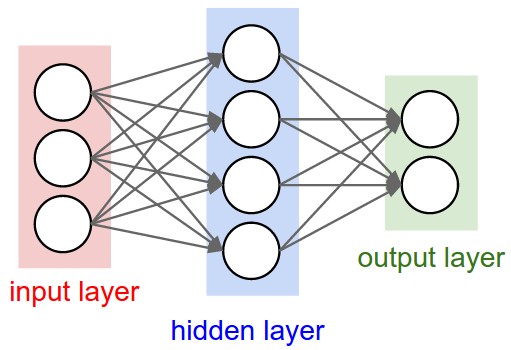

- A neural network that is 1 layer deep:

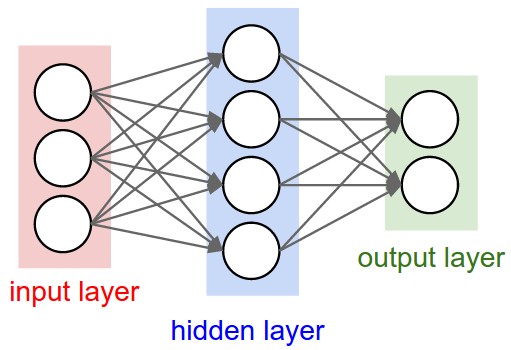

- A neural network that is 3 layers deep:

- A Deep Learning Neural Network can be more than 10 layers deep.

- …


- Counter-Example(s)

- See: Neural Network Topology; Neural Network Layer; Model Structure; Neural Network Size.
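As a minimal sketch of the definition above, assume a fully connected feed-forward network described only by a list of layer sizes, with the input layer first and the output layer last. The function names `hidden_depth` and `layer_count` are hypothetical and do not come from any library. The second function follows the naming convention used in the CS231n quote under References, which counts every layer except the input.

```python
def hidden_depth(layer_sizes):
    """Depth as the number of hidden layers (input and output layers excluded)."""
    if len(layer_sizes) < 2:
        raise ValueError("need at least an input layer and an output layer")
    return len(layer_sizes) - 2


def layer_count(layer_sizes):
    """Layer count excluding the input layer (hidden layers plus the output layer)."""
    if len(layer_sizes) < 2:
        raise ValueError("need at least an input layer and an output layer")
    return len(layer_sizes) - 1


# Assumed example: 3 inputs, one hidden layer of 4 units, 2 outputs.
small_net = [3, 4, 2]
print(hidden_depth(small_net), layer_count(small_net))
```

Under these assumptions the two conventions always differ by exactly one, so it helps to state which one is meant when reporting depth.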

References

2017

- (CS231n, 2017) ⇒ http://cs231n.github.io/neural-networks-1/#layers Retrieved: 2017-12-31

- QUOTE: Sizing neural networks. The two metrics that people commonly use to measure the size of neural networks are the number of neurons, or more commonly the number of parameters. Working with the two example networks in the above picture:

- The first network (left) has $4 + 2 = 6$ neurons (not counting the inputs), $[3 \times 4] + [4 \times 2] = 20$ weights and $4 + 2 = 6$ biases, for a total of 26 learnable parameters.


- The second network (right) has $4 + 4 + 1 = 9$ neurons, $[3 \times 4] + [4 \times 4] + [4 \times 1] = 12 + 16 + 4 = 32$ weights and $4 + 4 + 1 = 9$ biases, for a total of 41 learnable parameters.

To give you some context, modern Convolutional Networks contain on orders of 100 million parameters and are usually made up of approximately 10-20 layers (hence deep learning).


Left: A 2-layer Neural Network (one hidden layer of 4 neurons (or units) and one output layer with 2 neurons), and three inputs. Right: A 3-layer neural network with three inputs, two hidden layers of 4 neurons each and one output layer. Notice that in both cases there are connections (synapses) between neurons across layers, but not within a layer.
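
The arithmetic in the quote can be reproduced with a short sketch. It assumes fully connected layers with one bias per non-input neuron, as in the quoted example. The function name `count_parameters` and the list-of-layer-sizes representation are illustrative assumptions, not part of the CS231n material.

```python
def count_parameters(layer_sizes):
    """Count neurons, weights, biases and learnable parameters.

    layer_sizes lists the units per layer, input layer first. Inputs are not
    counted as neurons, matching the convention in the quote.
    """
    neurons = sum(layer_sizes[1:])
    # Each pair of consecutive layers is fully connected: fan_in * fan_out weights.
    weights = sum(a * b for a, b in zip(layer_sizes, layer_sizes[1:]))
    biases = neurons  # one bias per non-input neuron
    return {
        "neurons": neurons,
        "weights": weights,
        "biases": biases,
        "parameters": weights + biases,
    }


left = count_parameters([3, 4, 2])      # the 2-layer network in the caption
right = count_parameters([3, 4, 4, 1])  # the 3-layer network in the caption
print(left["parameters"], right["parameters"])  # 26 41
```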
