Neural Network with Attention Mechanism

A Neural Network with Attention Mechanism is a Memory-based Neural Network that includes an attention mechanism.

- AKA: Attention-based Neural Network, Attentional Neural Network.

- Example(s):

- a Bidirectional Recurrent Neural Network with Attention Mechanism,

- a Hierarchical Attention Network,

- a Neural Machine Translation Network with Attention Mechanism,

- a Pointer Network (Ptr-Net),

- a Pointer-Generator Network,

- a Sequence-to-Sequence Neural Network with Attention Mechanism,

- a Transformer Neural Network.

- …

- Counter-Example(s):

- See: Neural Memory Cell, Long Short-Term Memory, Recurrent Neural Network, Convolutional Neural Network, Gating Mechanism.

References

2018a

- (Brown et al., 2018) ⇒ Andy Brown, Aaron Tuor, Brian Hutchinson, and Nicole Nichols. (2018). “Recurrent Neural Network Attention Mechanisms for Interpretable System Log Anomaly Detection.” In: Proceedings of the First Workshop on Machine Learning for Computing Systems (MLCS'18). ISBN:978-1-4503-5865-1 doi:10.1145/3217871.3217872

- QUOTE: In recent work [4, 16, 22], researchers have augmented LSTM language models with attention mechanisms in order to add capacity for modeling long term syntactic dependencies. Yogatama et al. [22] characterize attention as a differentiable random access memory. They compare attention language models with differentiable stack based memory [6] (which provides a bias for hierarchical structure), demonstrating the superiority of stack based memory on a verb agreement task with multiple attractors. Daniluk et al. [4] explore three additive attention mechanisms [2] with successive partitioning of the output of the LSTM; splitting the output into separate key, value, and prediction vectors performed best, likely due to removing the need for a single vector to encode information for multiple steps in the computation. In contrast we augment our language models with dot product attention [11, 18], but also use separate vectors for the components of our attention mechanisms.
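The split into separate key, value, and prediction vectors described in the quote can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function names (`split_kvp`, `attend`), the equal three-way split, and the choice to use the current step's key part as the query are all assumptions made for the example.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def split_kvp(output):
    """Split one recurrent output vector into equal key, value, and prediction parts."""
    n = len(output) // 3
    return output[:n], output[n:2 * n], output[2 * n:3 * n]

def attend(past_outputs, current_output):
    """Dot-product attention over past outputs.

    Assumption: the key part of the current output acts as the query.
    Returns (weights, context, prediction_part).
    """
    query, _, prediction = split_kvp(current_output)
    keys, values = [], []
    for output in past_outputs:
        key, value, _ = split_kvp(output)
        keys.append(key)
        values.append(value)
    # One dot-product score per past step, normalized into attention weights.
    scores = [sum(q * k for q, k in zip(query, key)) for key in keys]
    weights = softmax(scores)
    # The context is the attention-weighted sum of the value parts only.
    dim = len(values[0])
    context = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, context, prediction
```

Because keys are only used for scoring and values only for the weighted sum, no single slice of the output has to serve both roles, which is the motivation the quote gives for the split.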

2018b

- (Yogatama et al., 2018) ⇒ Dani Yogatama, Yishu Miao, Gabor Melis, Wang Ling, Adhiguna Kuncoro, Chris Dyer, and Phil Blunsom. (2018). “Memory Architectures in Recurrent Neural Network Language Models.” In: Proceedings of 6th International Conference on Learning Representations.

- QUOTE: We compare how a recurrent neural network uses a stack memory, a sequential memory cell (i.e., an LSTM memory cell), and a random access memory (i.e., an attention mechanism) for language modeling. Experiments on the Penn Treebank and Wikitext-2 datasets (§3.2) show that both the stack model and the attention-based model outperform the LSTM model with a comparable (or even larger) number of parameters, and that the stack model eliminates the need to tune window size to achieve the best perplexity.(...)

Random access memory. One common approach to retrieve information from the distant past more reliably is to augment the model with a random access memory block via an attention based method.

2017

- (See et al., 2017) ⇒ Abigail See, Peter J. Liu, and Christopher D. Manning. (2017). “Get To The Point: Summarization with Pointer-Generator Networks.” In: Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers). DOI:10.18653/v1/P17-1099.

- QUOTE: The pointer network (Vinyals et al., 2015) is a sequence-to-sequence model that uses the soft attention distribution of Bahdanau et al. (2015) to produce an output sequence consisting of elements from the input sequence. The pointer network has been used to create hybrid approaches for NMT (Gulcehre et al., 2016), language modeling (Merity et al., 2016), and summarization (Gu et al., 2016; Gulcehre et al., 2016; Miao and Blunsom, 2016; Nallapati et al., 2016; Zeng et al., 2016).
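The idea that a pointer network reuses the attention distribution as its output distribution over input positions can be sketched as below. The additive scoring form (a vector `v` applied to `tanh(W1 e_j + W2 d)`) follows the usual presentation of pointer networks; the function names and the tiny hand-set weights in any usage are hypothetical, chosen only for illustration.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]

def pointer_distribution(encoder_states, decoder_state, W1, W2, v):
    """Return a probability distribution over input positions.

    Each input position j gets the score v . tanh(W1 e_j + W2 d), and the
    softmax over these scores is used directly as the output distribution.
    """
    projected_decoder = matvec(W2, decoder_state)
    scores = []
    for state in encoder_states:
        projected_encoder = matvec(W1, state)
        hidden = [math.tanh(a + b) for a, b in zip(projected_encoder, projected_decoder)]
        scores.append(sum(vi * hi for vi, hi in zip(v, hidden)))
    return softmax(scores)

def point(input_tokens, distribution):
    """Select the input element with the highest pointer probability."""
    best = max(range(len(distribution)), key=distribution.__getitem__)
    return input_tokens[best]
```

Unlike a standard decoder, there is no separate output vocabulary here: the only possible outputs are elements of the input sequence, so the output space grows and shrinks with the input length.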

2017a

- (Vaswani et al., 2017) ⇒ Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N Gomez, Łukasz Kaiser, and Illia Polosukhin. (2017). “Attention is all You Need.” In: Advances in Neural Information Processing Systems.

- QUOTE: The dominant sequence transduction models are based on complex recurrent or convolutional neural networks in an encoder and decoder configuration. The best performing such models also connect the encoder and decoder through an attention mechanism. We propose a novel, simple network architecture based solely on an attention mechanism, dispensing with recurrence and convolutions entirely. (...)

Self-attention, sometimes called intra-attention is an attention mechanism relating different positions of a single sequence in order to compute a representation of the sequence. Self-attention has been used successfully in a variety of tasks including reading comprehension, abstractive summarization, textual entailment and learning task independent sentence representations [4, 22, 23, 19]. End-to-end memory networks are based on a recurrent attention mechanism instead of sequence aligned recurrence and have been shown to perform well on simple-language question answering and language modeling tasks [28].

To the best of our knowledge, however, the Transformer is the first transduction model relying entirely on self-attention to compute representations of its input and output without using sequence aligned RNNs or convolution.
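Self-attention as described above, where every position of one sequence attends to every position of that same sequence, can be sketched with scaled dot-product attention, `softmax(Q K^T / sqrt(d_k)) V`. That formula comes from the same paper but not from the quoted passage; the single-head setup, the helper names, and the projection matrices `Wq`, `Wk`, `Wv` are assumptions for this example.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def matmul(A, B):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def transpose(M):
    """Transpose a matrix given as a list of rows."""
    return [list(col) for col in zip(*M)]

def self_attention(X, Wq, Wk, Wv):
    """Single-head scaled dot-product self-attention.

    X holds one row per sequence position. Queries, keys, and values are all
    projections of the same sequence X, which is what makes this *self*-attention.
    Returns (outputs, weights), with one row per position in each.
    """
    Q, K, V = matmul(X, Wq), matmul(X, Wk), matmul(X, Wv)
    d_k = len(K[0])
    scores = matmul(Q, transpose(K))
    # Scale by sqrt(d_k) before the softmax, then normalize each row.
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, V), weights
```

Each output row is a weighted mix of all value rows, so every position can draw on any other position in one step, without recurrence or convolution.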

2017b

- (Synced Review, 2017) ⇒ Synced (2017). “A Brief Overview of Attention Mechanism.” In: Medium - Synced Review Blog Post.

- QUOTE: ... Attention is simply a vector, often the outputs of dense layer using softmax function. Before Attention mechanism, translation relies on reading a complete sentence and compress all information into a fixed-length vector, as you can imagine, a sentence with hundreds of words represented by several words will surely lead to information loss, inadequate translation, etc. However, attention partially fixes this problem. It allows machine translator to look over all the information the original sentence holds, then generate the proper word according to current word it works on and the context. It can even allow translator to zoom in or out (focus on local or global features).

2016a

- (Yang et al., 2016) ⇒ Zichao Yang, Diyi Yang, Chris Dyer, Xiaodong He, Alex Smola, and Eduard Hovy. (2016). “Hierarchical Attention Networks for Document Classification.” In: Proceedings of the 2016 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies.

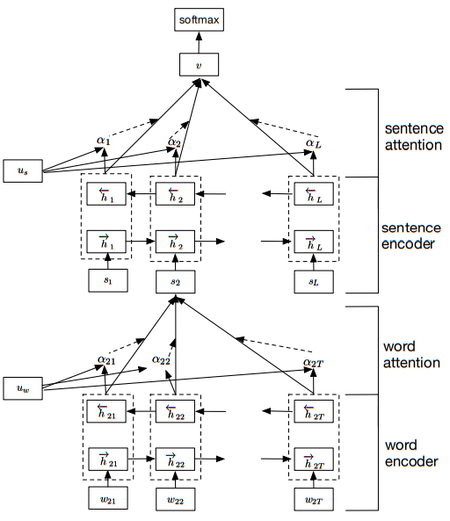

- QUOTE: The overall architecture of the Hierarchical Attention Network (HAN) is shown in Fig. 2. It consists of several parts: a word sequence encoder, a word-level attention layer, a sentence encoder and a sentence-level attention layer. (...)
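The two attention layers named in the quote can be sketched as the same pooling step applied twice: once over the words of each sentence, then over the resulting sentence vectors. This is a simplified illustration that omits the word and sentence encoders and feeds vectors to the attention layers directly. The scoring form (a `tanh` projection compared against a learned context vector) follows the usual description of the HAN attention layer; the parameter names and helper functions are hypothetical.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_pool(states, W, b, context):
    """Pool a list of vectors into one vector with attention.

    u_i = tanh(W h_i + b), alpha = softmax(u_i . context), result = sum_i alpha_i h_i.
    Returns (pooled_vector, alphas).
    """
    scores = []
    for h in states:
        u = [math.tanh(sum(w * x for w, x in zip(row, h)) + bias)
             for row, bias in zip(W, b)]
        scores.append(sum(ui * ci for ui, ci in zip(u, context)))
    alphas = softmax(scores)
    dim = len(states[0])
    pooled = [sum(a * h[k] for a, h in zip(alphas, states)) for k in range(dim)]
    return pooled, alphas

def document_vector(document, word_params, sentence_params):
    """Apply word-level attention per sentence, then sentence-level attention.

    `document` is a list of sentences, each a list of word vectors.
    Encoders are omitted here (an assumption made to keep the sketch short).
    Returns (document_vector, sentence_alphas).
    """
    sentence_vectors = [attention_pool(sentence, *word_params)[0]
                        for sentence in document]
    return attention_pool(sentence_vectors, *sentence_params)
```

The word-level and sentence-level layers have separate parameters, so the model can weight words within a sentence independently of how it weights sentences within the document.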

2016c

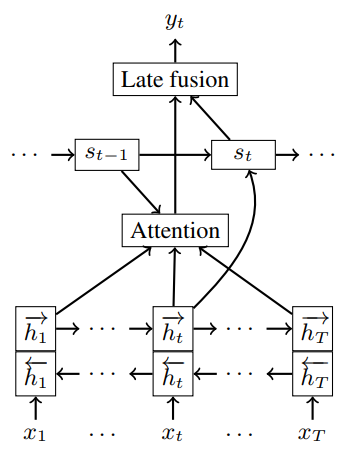

- (Tilk & Alumae, 2016) ⇒ Ottokar Tilk, and Tanel Alumae. (2016). “Bidirectional Recurrent Neural Network with Attention Mechanism for Punctuation Restoration.” In: Proceedings of Interspeech 2016. doi:10.21437/Interspeech.2016

- QUOTE: We incorporated an attention mechanism [25] into our model to further increase its capacity of finding relevant parts of the context for punctuation decisions. For example the model might focus on words that indicate a question, but may be relatively far from the current word, to nudge the model towards ending the sentence with a question mark instead of a period.

To fuse together the model state at current input word and the output from the attention mechanism we use a late fusion approach [28] adapted from LSTM to GRU. This allows the attention model output to directly interact with the recurrent layer state while not interfering with its memory.

2015a

- (Bahdanau et al., 2015) ⇒ Dzmitry Bahdanau, Kyunghyun Cho, and Yoshua Bengio. (2015). “Neural Machine Translation by Jointly Learning to Align and Translate.” In: Proceedings of the Third International Conference on Learning Representations (ICLR-2015).

- QUOTE: ... The models proposed recently for neural machine translation often belong to a family of encoder-decoders and consists of an encoder that encodes a source sentence into a fixed-length vector from which a decoder generates a translation. In this paper, we conjecture that the use of a fixed-length vector is a bottleneck in improving the performance of this basic encoder-decoder architecture, and propose to extend this by allowing a model to automatically (soft-) search for parts of a source sentence that are relevant to predicting a target word, without having to form these parts as a hard segment explicitly.

2015b

- (Luong, Pham et al., 2015) ⇒ Minh-Thang Luong, Hieu Pham, and Christopher D. Manning. (2015). “Effective Approaches to Attention-based Neural Machine Translation.” In: Proceedings of Conference on Empirical Methods in Natural Language Processing (EMNLP-2015).

- QUOTE: In parallel, the concept of “attention” has gained popularity recently in training neural networks, allowing models to learn alignments between different modalities, e.g., between image objects and agent actions in the dynamic control problem (Mnih et al., 2014), between speech frames and text in the speech recognition task (Chorowski et al., 2014), or between visual features of a picture and its text description in the image caption generation task (Xu et al., 2015).

2015c

- (Rush et al., 2015) ⇒ Alexander M. Rush, Sumit Chopra, and Jason Weston. (2015). “A Neural Attention Model for Abstractive Sentence Summarization.” In: Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing (EMNLP-2015).

2015d

- (Vinyals et al., 2015) ⇒ Oriol Vinyals, Meire Fortunato, and Navdeep Jaitly. (2015). “Pointer Networks.” In: Proceedings of the Advances in Neural Information Processing Systems 28: Annual Conference on Neural Information Processing Systems (NIPS 2015).