Regression Tree Learning Algorithm: Difference between revisions

m (Text replacement - "]] Category" to " [[Category") |

m (Text replacement - "<P> [[" to "<P> [[") |

||

| Line 40: | Line 40: | ||

=== 1999 === | === 1999 === | ||

* ([[1999_InductiveLearningofTreebasedReg|Torgo, 1999]]) ⇒ [[Luis Torgo]]. ([[1999]]). “[http://www.dcc.fc.up.pt/~ltorgo/PhD/ Inductive Learning of Tree-based Regression Models]." Ph.D. Thesis, Thesis, Faculty of Sciences, University of Porto | * ([[1999_InductiveLearningofTreebasedReg|Torgo, 1999]]) ⇒ [[Luis Torgo]]. ([[1999]]). “[http://www.dcc.fc.up.pt/~ltorgo/PhD/ Inductive Learning of Tree-based Regression Models]." Ph.D. Thesis, Thesis, Faculty of Sciences, University of Porto | ||

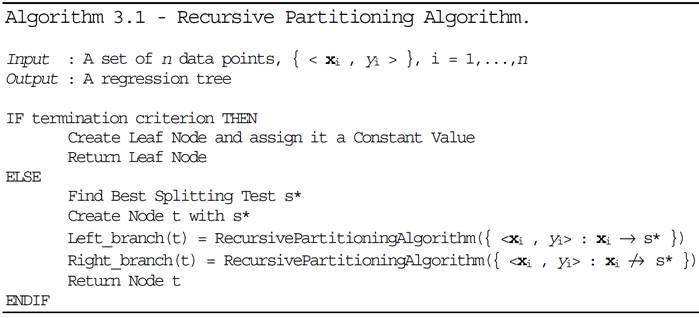

** QUOTE: [[Regression tree]]s are constructed using a [[recursive partitioning (RP) algorithm]]. This [[algorithm]] builds a [[Decision Treet|tree]] by [[recursively splitting]] the [[training sample]] into smaller [[subset]]s. We give below a [[high level description]] of the [[algorithm]]. The [[RP algorithm]] receives as [[input]] a [[set]] of <math>n</math> [[data point]]s, <math>D_t=\{\langle \mathbf{x_i},y_i\rangle\}^{n_t}_{i=1}</math>, and if certain [[termination criteria]] are not met it generates a [[test node]] <math>t</math>, whose [[Decision Treet Branch|branches]] are obtained by applying the same [[algorithm]] with two [[subset]]s of the [[input]] [[data point]]s. These [[subset]]s consist of the cases that logically entail the [[split test]] <math>s^*</math> in the [[node]] <math>t</math>, <math> D_{t_L}=\{\langle \mathbf{x_i},y_i\rangle\} \in D_t : \mathbf{x_i} \to s^*\} </math>, and the remaining cases, <math> D_{t_R}=\{\langle \mathbf{x_i},y_i\rangle\} \in D_t : \mathbf{x_i} \not \to s^*\}</math>. At each node the best [[split test]] is chosen according to some local [[criterion]], which means that this is a [[greedy hill-climbing algorithm]]. <P> | ** QUOTE: [[Regression tree]]s are constructed using a [[recursive partitioning (RP) algorithm]]. This [[algorithm]] builds a [[Decision Treet|tree]] by [[recursively splitting]] the [[training sample]] into smaller [[subset]]s. We give below a [[high level description]] of the [[algorithm]]. The [[RP algorithm]] receives as [[input]] a [[set]] of <math>n</math> [[data point]]s, <math>D_t=\{\langle \mathbf{x_i},y_i\rangle\}^{n_t}_{i=1}</math>, and if certain [[termination criteria]] are not met it generates a [[test node]] <math>t</math>, whose [[Decision Treet Branch|branches]] are obtained by applying the same [[algorithm]] with two [[subset]]s of the [[input]] [[data point]]s. 
These [[subset]]s consist of the cases that logically entail the [[split test]] <math>s^*</math> in the [[node]] <math>t</math>, <math> D_{t_L}=\{\langle \mathbf{x_i},y_i\rangle\} \in D_t : \mathbf{x_i} \to s^*\} </math>, and the remaining cases, <math> D_{t_R}=\{\langle \mathbf{x_i},y_i\rangle\} \in D_t : \mathbf{x_i} \not \to s^*\}</math>. At each node the best [[split test]] is chosen according to some local [[criterion]], which means that this is a [[greedy hill-climbing algorithm]]. <P> [[File:1999_InductiveLearningofTreebasedReg_Algorithm3.1.jpg]]<P> The [[algorithm]] has three main components: | ||

*** A way to select a [[split test]] (the [[splitting rule]]). | *** A way to select a [[split test]] (the [[splitting rule]]). | ||

*** A [[rule]] to determine when a [[tree node]] is [[terminal]] ([[termination criterion]]). | *** A [[rule]] to determine when a [[tree node]] is [[terminal]] ([[termination criterion]]). | ||

Revision as of 01:50, 27 February 2024

A Regression Tree Learning Algorithm is a decision tree learning algorithm that is a model-based regression algorithm.

- Context:

- It can (typically) apply a Regression Tree Splitting Criterion (such as minimization of the squared error).

- It can be implemented by a Regression Tree Learning System (to solve a regression tree learning task that requires a regression tree).

- It can be supported by a Regression Tree Pruning Algorithm, such as a Regression Tree Post-Pruning Algorithm or a Regression Tree Pre-Pruning Algorithm.

- It can be constructed by using a Recursive Partitioning Algorithm.

- Example(s):

- A Least Squares Regression Tree Algorithm.

- A First-Order Regression Tree Algorithm.

- The one implemented by sklearn.tree.DecisionTreeRegressor.

- The one proposed in (Hothorn et al., 2006).

- …

- Counter-Example(s):

- See: Regression Algorithm, Kernel-based Classification Algorithm.
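The squared-error splitting criterion mentioned in the Context list can be illustrated with a minimal sketch. It assumes a single numeric predictor and binary tests of the form x <= threshold; the helper names `sse` and `best_threshold` are hypothetical and are not taken from any cited system.

```python
def sse(values):
    """Sum of squared deviations from the mean (0.0 for an empty list)."""
    if not values:
        return 0.0
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values)


def best_threshold(xs, ys):
    """Return (threshold, error) minimizing the summed SSE of both sides.

    Candidate thresholds are midpoints between consecutive distinct x values.
    Returns (None, sse(ys)) when no candidate improves on leaving the sample unsplit.
    """
    pairs = sorted(zip(xs, ys))
    best = (None, sse(ys))
    for i in range(1, len(pairs)):
        lo, hi = pairs[i - 1][0], pairs[i][0]
        if lo == hi:
            continue  # identical x values cannot be separated by a threshold
        left = [y for _, y in pairs[:i]]
        right = [y for _, y in pairs[i:]]
        error = sse(left) + sse(right)
        if error < best[1]:
            best = ((lo + hi) / 2, error)
    return best


# Illustrative data (made up): two clearly separated groups of target values.
xs = [1.0, 2.0, 3.0, 10.0, 11.0, 12.0]
ys = [1.0, 1.2, 0.8, 5.0, 5.2, 4.8]
threshold, error = best_threshold(xs, ys)
print(threshold, error)
```

The exhaustive scan over midpoints is the simplest choice for a sketch; it recomputes the error from scratch for every candidate, which is quadratic in the sample size.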

References

2017a

- (Wikipedia, 2017) ⇒ https://en.wikipedia.org/wiki/Decision_tree_learning Retrieved:2017-10-15.

- Decision tree learning uses a decision tree (as a predictive model) to go from observations about an item (represented in the branches) to conclusions about the item's target value (represented in the leaves). It is one of the predictive modelling approaches used in statistics, data mining and machine learning. Tree models where the target variable can take a discrete set of values are called classification trees; in these tree structures, leaves represent class labels and branches represent conjunctions of features that lead to those class labels. Decision trees where the target variable can take continuous values (typically real numbers) are called regression trees.

In decision analysis, a decision tree can be used to visually and explicitly represent decisions and decision making. In data mining, a decision tree describes data (but the resulting classification tree can be an input for decision making). This page deals with decision trees in data mining.


2017b

- (Torgo, 2017) ⇒ Luís Torgo. (2017). "Regression Trees". In: Encyclopedia of Machine Learning and Data Mining, pp. 1080-1083.

- QUOTE: Regression trees are supervised learning methods that address multiple regression problems. They provide a tree-based approximation [math]\displaystyle{ \hat{f} }[/math], of an unknown regression function [math]\displaystyle{ Y = f(\mathbf{x}) + \epsilon }[/math] with [math]\displaystyle{ Y \in \mathcal{R} }[/math] and [math]\displaystyle{ \epsilon \approx N (0, \sigma^2) }[/math], based on a given sample of data [math]\displaystyle{ D=\{\langle x_i^1,\cdots, x_i^p,y_i\rangle\}^n_{i=1} }[/math]. The obtained models consist of a hierarchy of logical tests on the values of any of the [math]\displaystyle{ p }[/math] predictor variables. The terminal nodes of these trees, known as the leaves, contain the numerical predictions of the model for the target variable [math]\displaystyle{ Y }[/math].
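The "hierarchy of logical tests" with numeric predictions in the leaves can be sketched as a nested dictionary that is walked from the root to a leaf. The dictionary layout, the `predict` helper, and the thresholds and leaf values below are illustrative assumptions, not part of the quoted source.

```python
# Internal nodes hold a test "x[feature] <= threshold"; leaves hold a numeric value.
tree = {
    "feature": 0,
    "threshold": 6.5,
    "left": {"value": 1.0},
    "right": {
        "feature": 1,
        "threshold": 0.5,
        "left": {"value": 4.0},
        "right": {"value": 6.0},
    },
}


def predict(node, x):
    """Follow the logical tests from the root until a leaf is reached."""
    while "value" not in node:
        branch = "left" if x[node["feature"]] <= node["threshold"] else "right"
        node = node[branch]
    return node["value"]


print(predict(tree, [2.0, 0.9]))  # first test holds, so the left leaf is used
print(predict(tree, [8.0, 0.2]))  # first test fails, second test holds
```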

2013

- (Melli, 2013-07-24) ⇒ Gabor Melli. (2013). “Regression Tree Learning Techniques and Systems." Informal Presentation.

- QUOTE: a trained predictor tree that is a regressed point estimation function (where each leaf node and typically also internal nodes makes a point estimate).

2006

- (Hothorn et al., 2006) ⇒ Torsten Hothorn, Kurt Hornik, and Achim Zeileis. (2006). “Unbiased Recursive Partitioning: A Conditional Inference Framework.” In: Journal of Computational and Graphical Statistics, 15(3). doi:10.1198/106186006X133933

- QUOTE: Recursive binary partitioning is a popular tool for regression analysis. Two fundamental problems of exhaustive search procedures usually applied to fit such models have been known for a long time: overfitting and a selection bias towards covariates with many possible splits or missing values. While pruning procedures are able to solve the overfitting problem, the variable selection bias still seriously affects the interpretability of tree-structured regression models.

1999

- (Torgo, 1999) ⇒ Luis Torgo. (1999). “Inductive Learning of Tree-based Regression Models." Ph.D. Thesis, Faculty of Sciences, University of Porto.

- QUOTE: Regression trees are constructed using a recursive partitioning (RP) algorithm. This algorithm builds a tree by recursively splitting the training sample into smaller subsets. We give below a high level description of the algorithm. The RP algorithm receives as input a set of [math]\displaystyle{ n_t }[/math] data points, [math]\displaystyle{ D_t=\{\langle \mathbf{x_i},y_i\rangle\}^{n_t}_{i=1} }[/math], and if certain termination criteria are not met it generates a test node [math]\displaystyle{ t }[/math], whose branches are obtained by applying the same algorithm with two subsets of the input data points. These subsets consist of the cases that logically entail the split test [math]\displaystyle{ s^* }[/math] in the node [math]\displaystyle{ t }[/math], [math]\displaystyle{ D_{t_L}=\{\langle \mathbf{x_i},y_i\rangle \in D_t : \mathbf{x_i} \to s^*\} }[/math], and the remaining cases, [math]\displaystyle{ D_{t_R}=\{\langle \mathbf{x_i},y_i\rangle \in D_t : \mathbf{x_i} \not\to s^*\} }[/math]. At each node the best split test is chosen according to some local criterion, which means that this is a greedy hill-climbing algorithm.

The algorithm has three main components:

- A way to select a split test (the splitting rule).

- A rule to determine when a tree node is terminal (termination criterion).

- A rule for assigning a value to each terminal node.
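The three components listed above can be put together in a minimal recursive partitioning sketch. It is not Torgo's Algorithm 3.1: it assumes numeric predictors, a least-squares splitting rule, a depth and sample-size termination criterion, and the mean target as the leaf value. The function names and the `max_depth` and `min_samples` parameters are hypothetical.

```python
def mean(values):
    return sum(values) / len(values)


def sse(values):
    """Sum of squared deviations from the mean (0.0 for an empty list)."""
    if not values:
        return 0.0
    m = mean(values)
    return sum((v - m) ** 2 for v in values)


def select_split(rows, targets):
    """Splitting rule: the (feature, threshold) with the lowest summed SSE.

    Returns None when no candidate test strictly reduces the error.
    """
    best, best_error = None, sse(targets)
    for feature in range(len(rows[0])):
        values = sorted(set(row[feature] for row in rows))
        for lo, hi in zip(values, values[1:]):
            threshold = (lo + hi) / 2
            left = [y for row, y in zip(rows, targets) if row[feature] <= threshold]
            right = [y for row, y in zip(rows, targets) if row[feature] > threshold]
            error = sse(left) + sse(right)
            if error < best_error:
                best, best_error = (feature, threshold), error
    return best


def grow(rows, targets, depth=0, max_depth=3, min_samples=2):
    """Greedy recursive partitioning of the sample (rows, targets)."""
    # Termination criterion: depth limit or too few cases to split.
    if depth >= max_depth or len(targets) < min_samples:
        return {"value": mean(targets)}  # leaf value rule: mean target
    split = select_split(rows, targets)
    if split is None:
        return {"value": mean(targets)}
    feature, threshold = split
    left = [i for i, row in enumerate(rows) if row[feature] <= threshold]
    right = [i for i, row in enumerate(rows) if row[feature] > threshold]
    return {
        "feature": feature,
        "threshold": threshold,
        "left": grow([rows[i] for i in left], [targets[i] for i in left],
                     depth + 1, max_depth, min_samples),
        "right": grow([rows[i] for i in right], [targets[i] for i in right],
                      depth + 1, max_depth, min_samples),
    }


# Illustrative data (made up): one predictor, two flat regions.
rows = [[1.0], [2.0], [3.0], [10.0], [11.0], [12.0]]
targets = [1.0, 1.0, 1.0, 5.0, 5.0, 5.0]
tree = grow(rows, targets)
print(tree)
```

Because each split is chosen only from the error at the current node and is never revisited, the sketch shows the greedy behaviour described in the quote.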


1997

- (Wang & Witten, 1997) ⇒ Yong Wang, and Ian H. Witten. (1997). “Inducing Model Trees for Continuous Classes.” In: Proceedings of the European Conference on Machine Learning.

- ABSTRACT: Many problems encountered when applying machine learning in practice involve predicting a “class” that takes on a continuous numeric value, yet few machine learning schemes are able to do this. This paper describes a “rational reconstruction” of M5, a method developed by Quinlan (1992) for inducing trees of regression models. In order to accommodate data typically encountered in practice it is necessary to deal effectively with enumerated attributes and with missing values, and techniques devised by Breiman et al. (1984) are adapted for this purpose. The resulting system seems to outperform M5 based on the scanty published data that is available.

1992

- (Quinlan, 1992) ⇒ J. Ross Quinlan. (1992). “Learning with Continuous Classes.” In: Proceedings of the 5th Australian Joint Conference on Artificial Intelligence.

- ABSTRACT: Some empirical learning tasks are concerned with predicting values rather than the more familiar categories. This paper describes a new system, m5, that constructs tree-based piecewise linear models. Four case studies are presented in which m5 is compared to other methods.
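A "tree-based piecewise linear model" can be illustrated by fitting a separate least-squares line on each side of a single split. This is only a sketch of the idea, not the m5 procedure: it assumes one numeric predictor, a threshold supplied by the caller, and at least one case on each side. The names `fit_line`, `fit_piecewise` and `predict` are hypothetical.

```python
def fit_line(xs, ys):
    """Ordinary least-squares line; returns (slope, intercept)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        return 0.0, mean_y  # constant predictor: fall back to the mean target
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def fit_piecewise(xs, ys, threshold):
    """One split with a linear model in each leaf instead of a constant."""
    left = [(x, y) for x, y in zip(xs, ys) if x <= threshold]
    right = [(x, y) for x, y in zip(xs, ys) if x > threshold]
    return {
        "threshold": threshold,
        "left": fit_line([x for x, _ in left], [y for _, y in left]),
        "right": fit_line([x for x, _ in right], [y for _, y in right]),
    }


def predict(model, x):
    side = "left" if x <= model["threshold"] else "right"
    slope, intercept = model[side]
    return slope * x + intercept


# Illustrative data (made up): y = 2x up to x = 2, then y = 10 - x.
xs = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
ys = [0.0, 2.0, 4.0, 7.0, 6.0, 5.0]
model = fit_piecewise(xs, ys, threshold=2.5)
print(predict(model, 1.5), predict(model, 4.5))
```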

1984

- (Breiman et al., 1984) ⇒ Leo Breiman, Jerome H. Friedman, Charles J. Stone, and R. A. Olshen. (1984). “Classification and Regression Trees." Chapman & Hall. ISBN:0412048418

- QUOTE: The methodology used to construct tree structured rules is the focus of this monograph. Unlike many other statistical procedures, which moved from pencil and paper to calculators, this text's use of trees was unthinkable before computers. Both the practical and theoretical sides have been developed in the authors' study of tree methods. Classification and Regression Trees reflects these two sides, covering the use of trees as a data analysis method, and in a more mathematical framework, proving some of their fundamental properties.