2019 Code2vecLearningDistributedRepr

- (Alon et al., 2019) ⇒ Uri Alon, Meital Zilberstein, Omer Levy, and Eran Yahav. (2019). “code2vec: Learning Distributed Representations of Code.” In: Proceedings of the ACM on Programming Languages (POPL), Volume 3.

Subject Headings: Code2vec; Automatic Code Summarization Task.

Notes

- Article Version(s):

- Online Resource(s):

Cited By

- Google Scholar: ~ 257 Citations, Retrieved: 2021-02-21.

Quotes

Abstract

We present a neural model for representing snippets of code as continuous distributed vectors ("code embeddings"). The main idea is to represent a code snippet as a single fixed-length code vector, which can be used to predict semantic properties of the snippet. This is performed by decomposing code into a collection of paths in its abstract syntax tree, and learning the atomic representation of each path simultaneously with learning how to aggregate a set of them. We demonstrate the effectiveness of our approach by using it to predict a method's name from the vector representation of its body. We evaluate our approach by training a model on a dataset of 14M methods. We show that code vectors trained on this dataset can predict method names from files that were completely unobserved during training. Furthermore, we show that our model learns useful method name vectors that capture semantic similarities, combinations, and analogies. Compared with previous techniques over the same dataset, our approach obtains a relative improvement of over 75%, being the first to successfully predict method names based on a large, cross-project corpus. Our trained model, visualizations and vector similarities are available as an interactive online demo at http://code2vec.org. The code, data, and trained models are available at https://github.com/tech-srl/code2vec.

1. Introduction

Distributed representations of words (such as “word2vec”; Mikolov et al. 2013a, b; Pennington et al. 2014), sentences, paragraphs, and documents (such as “doc2vec”; Le and Mikolov 2014) played a key role in unlocking the potential of neural networks for natural language processing (NLP) tasks (Bengio et al. 2003; Collobert and Weston 2008; Glorot et al. 2011; Socher et al. 2011; Turian et al. 2010; Turney 2006). Methods for learning distributed representations produce low-dimensional vector representations for objects, referred to as embeddings. In these vectors, the “meaning” of an element is distributed across multiple vector components, such that semantically similar objects are mapped to close vectors.

Goal: The goal of this paper is to learn code embeddings, continuous vectors for representing snippets of code. By learning code embeddings, our long-term goal is to enable the application of neural techniques to a wide range of programming-languages tasks. In this paper, we use the motivating task of semantic labeling of code snippets.

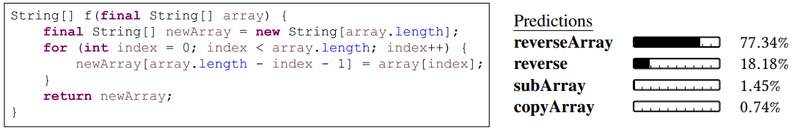

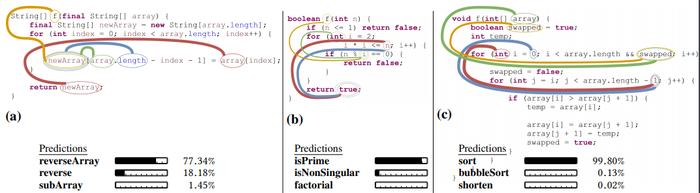

Motivating task: semantic labeling of code snippets. Consider the method in Figure 1. The method contains only low-level assignments to arrays, but a human reading the code may (correctly) label it as performing the reverse operation. Our goal is to predict such labels automatically. The right hand side of Figure 1 shows the labels predicted automatically using our approach. The most likely prediction (77.34%) is reverseArray. Section 6 provides additional examples.


Intuitively, this problem is hard because it requires learning a correspondence between the entire content of a method and a semantic label. That is, it requires aggregating possibly hundreds of expressions and statements from the method body into a single, descriptive label.

Our Approach

We present a novel framework for predicting program properties using neural networks. Our main contribution is a neural network that learns code embeddings - continuous distributed vector representations for code. The code embeddings allow us to model the correspondence between code snippets and labels in a natural and effective manner.

Our neural network architecture uses a representation of code snippets that leverages the structured nature of source code, and learns to aggregate multiple syntactic paths into a single vector. This ability is fundamental for the application of deep learning in programming languages. By analogy, word embeddings in natural language processing (NLP) started a revolution of application of deep learning for NLP tasks.

The input to our model is a code snippet and a corresponding tag, label, caption, or name. This label expresses the semantic property that we wish the network to model, for example: a tag that should be assigned to the snippet, or the name of the method, class, or project that the snippet was taken from. Let $C$ be the code snippet and $\mathcal{L}$ be the corresponding label or tag. Our underlying hypothesis is that the distribution of labels can be inferred from syntactic paths in $C$. Our model therefore attempts to learn the label distribution, conditioned on the code: $P \left(\mathcal{L}|C\right)$.

We demonstrate the effectiveness of our approach for the task of predicting a method's name given its body. This problem is important as good method names make code easier to understand and maintain. A good name for a method provides a high-level summary of its purpose. Ideally, “If you have a good method name, you don’t need to look at the body.” (Fowler and Beck 1999). Choosing good names can be especially critical for methods that are part of public APIs, as poor method names can doom a project to irrelevance (Allamanis et al. 2015a; Høst and Østvold 2009).

Capturing Semantic Similarity between Names

During the process of learning code vectors, a parallel vocabulary of vectors of the labels is learned. When using our model to predict method names, the method-name vectors provide surprising semantic similarities and analogies. For example, vector(equals) + vector(toLowerCase) results in a vector that is closest to vector(equalsIgnoreCase).

Similar to the famous NLP example of: vec(“king”) − vec(“man”) + vec(“woman”) ≈ vec(“queen”) (Mikolov et al. 2013c), our model learns analogies that are relevant to source code, such as: “receive is to send as download is to: upload”. Table 1 shows additional examples, and Section 6.4 provides a detailed discussion.

| A | ≈B | A | ≈B |
|---|---|---|---|
| size | getSize, length, getCount, getLength | executeQuery | executeSql, runQuery, getResultSet |
| active | isActive, setActive, getIsActive, enabled | actionPerformed | itemStateChanged, mouseClicked, keyPressed |
| done | end, stop, terminate | toString | getName, getDescription, getDisplayName |
| toJson | serialize, toJsonString, getJson, asJson | equal | eq, notEqual, greaterOrEqual, lessOrEqual |
| run | execute, call, init, start | error | fatalError, warning, warn |
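The name similarities and combinations described above rest on simple vector arithmetic followed by a nearest-neighbor search. The following is a minimal sketch of that idea, not the authors' implementation: the three-dimensional vectors are hand-picked, hypothetical values chosen only so that the example works, and cosine similarity is assumed as the closeness measure.

```python
import math

# Hypothetical, hand-picked name vectors (real learned vectors have
# hundreds of dimensions); used only to illustrate the arithmetic.
name_vectors = {
    "equals":           [1.0, 0.0, 0.0],
    "toLowerCase":      [0.0, 1.0, 0.0],
    "equalsIgnoreCase": [0.9, 0.9, 0.1],
    "getSize":          [0.0, 0.1, 1.0],
}

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def nearest(query, vectors, exclude=()):
    """Return the name whose vector is most similar to the query vector."""
    candidates = {n: v for n, v in vectors.items() if n not in exclude}
    return max(candidates, key=lambda n: cosine(query, candidates[n]))

# vector(equals) + vector(toLowerCase)
query = [a + b for a, b in zip(name_vectors["equals"],
                               name_vectors["toLowerCase"])]
closest = nearest(query, name_vectors, exclude=("equals", "toLowerCase"))
print(closest)
```

With these toy values the nearest remaining name to the sum is equalsIgnoreCase; with learned vectors the result depends entirely on the trained model.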

1.1 Applications

Embedding a code snippet as a vector has a variety of machine-learning based applications, since machine-learning algorithms usually take vectors as their inputs. Such direct applications, that we examine in this paper, are:

- (1) Automatic code review - suggesting better method names when the name given by the developer doesn’t match the method's functionality, thus preventing naming bugs, improving the readability and maintenance of code, and easing the use of public APIs. This application was previously shown to be of significant importance (Allamanis et al. 2015a; Fowler and Beck 1999; Høst and Østvold 2009).

- (2) Retrieval and API discovery - semantic similarities enable search in “the problem domain” instead of search “in the solution domain”. For example, a developer might look for a serialize method, while the equivalent method of the class is named toJson as serialization is performed via json. An automatic tool that looks for the most similar vector to the requested name among the available methods will find toJson (Table 1). Such semantic similarities are difficult to find without our approach. Further, an automatic tool which uses our vectors can easily notice that a programmer is using the method equals right after toLowerCase and suggest to use equalsIgnoreCase instead (Table 6).

The code vectors we produce can be used as input to any machine learning pipeline that performs tasks such as code retrieval, captioning, classification and tagging, or as a metric for measuring similarity between snippets of code for ranking and clone detection. The novelty of our approach is in its ability to produce vectors that capture properties of snippets of code, such that similar snippets (according to any desired criteria) are assigned with similar vectors. This ability unlocks a variety of applications for working with machine-learning algorithms on code, since machine learning algorithms usually take vectors as their input, just as word2vec unlocked a wide range of applications and improved almost every NLP application.

We deliberately picked the difficult task of method name prediction, for which prior results were low (Allamanis et al. 2015a, 2016; Alon et al. 2018) as an evaluation benchmark. Succeeding in this challenging task implies good performance in other tasks such as: predicting whether or not a program performs I/O, predicting the required dependencies of a program, and predicting whether a program is a suspected malware. We show that even for this challenging benchmark, our technique provides dramatic improvement over previous works.

1.2 Challenges: Representation and Attention

Assigning a semantic label to a code snippet (such as a name to a method) is an example for a class of problems that require a compact semantic descriptor of a snippet. The question is how to represent code snippets in a way that captures some semantic information, is reusable across programs, and can be used to predict properties such as a label for the snippet. This leads to two challenges:

- Representing a snippet in a way that enables learning across programs;

- Learning which parts in the representation are relevant to prediction of the desired property, and learning what is the order of importance of each part.

Representation

NLP methods typically treat text as a linear sequence of tokens. Indeed, many existing approaches also represent source code as a token stream (Allamanis et al. 2014, 2016; Allamanis and Sutton 2013; Hindle et al. 2012; Movshovitz-Attias and Cohen 2013; White et al. 2015). However, as observed previously (Alon et al. 2018; Bielik et al. 2016; Raychev et al. 2015), programming languages can greatly benefit from representations that leverage the structured nature of their syntax.

We note that there is a tradeoff between the degree of program-analysis required to extract the representation, and the learning effort that follows. Performing no program-analysis at all, and learning from the program's surface text, incurs a significant learning effort that is often prohibitive in the amounts of data required. Intuitively, this is because the learning model has to re-learn the syntax and semantics of the programming language from the data. On the other end of the spectrum, performing a deep program-analysis to extract the representation may make the learned model language-specific (and even task-specific).

Following previous works (Alon et al. 2018; Raychev et al. 2015), we use paths in the program's abstract syntax tree (AST) as our representation. By representing a code snippet using its syntactic paths, we can capture regularities that reflect common code patterns. We find that this representation significantly lowers the learning effort (compared to learning over program text), and is still scalable and general such that it can be applied to a wide range of problems and large amounts of code.

We represent a given code snippet as a bag (multiset) of its extracted paths. The challenge is then how to aggregate a bag of contexts, and which paths to focus on for making a prediction.

Attention

Intuitively, the problem is to learn a correspondence between a bag of path-contexts and a label. Representing each bag of path-contexts monolithically is going to suffer from sparsity – even similar methods will not have the exact same bag of path-contexts. We therefore need a compositional mechanism that can aggregate a bag of path-contexts such that bags that yield the same label are mapped to close vectors. Such a compositional mechanism would be able to generalize and represent new unseen bags by leveraging the fact that during training it observed the individual path-contexts and their components (paths, values, etc.) as parts of other bags.

To address this challenge, which is the focus of this paper, we use a novel attention network architecture. Attention models have gained much popularity recently, mainly for neural machine translation (NMT) (Bahdanau et al. 2014; Luong et al. 2015; Vaswani et al. 2017), reading comprehension (Levy et al. 2017; Seo et al. 2016), image captioning (Xu et al. 2015), and more (Ba et al. 2014; Bahdanau et al. 2016; Chorowski et al. 2015; Mnih et al. 2014).

In our model, neural attention learns how much focus (“attention”) should be given to each element in a bag of path-contexts. It allows us to precisely aggregate the information captured in each individual path-context into a single vector that captures information about the entire code snippet. As we show in Section 6.4, our model is relatively interpretable: the weights allocated by our attention mechanism can be visualized to understand the relative importance of each path-context in a prediction. The attention mechanism is learned simultaneously with the embeddings, optimizing both the atomic representations of paths and the ability to compose multiple contexts into a single code vector.

Soft and Hard Attention

The terms “soft” and “hard” attention were proposed for the task of image caption generation by Xu et al. (2015). Applied in our setting, soft-attention means that weights are distributed “softly” over all path-contexts in a code snippet, while hard-attention refers to selection of a single path-context to focus on at a time. The use of soft-attention over syntactic paths is the main insight that gives this work much better results than previous works. We compare our model with an equivalent model that uses hard-attention in Section 6.2, and show that soft-attention is more efficient for modeling code.
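The difference between the two attention styles can be sketched in a few lines. The example below is illustrative only: the context vectors and scores are made-up values, softmax normalization is assumed for the soft variant, and the function names are hypothetical. A plain average is included as the "no attention" case for comparison.

```python
import math

def softmax(scores):
    """Normalize raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def weighted_sum(vectors, weights):
    """Combine vectors component-wise using the given weights."""
    dim = len(vectors[0])
    return [sum(w * v[k] for w, v in zip(weights, vectors))
            for k in range(dim)]

def soft_attention(vectors, scores):
    """Every context contributes, in proportion to its softmax weight."""
    return weighted_sum(vectors, softmax(scores))

def hard_attention(vectors, scores):
    """Only the single highest-scoring context is kept."""
    best = max(range(len(scores)), key=lambda i: scores[i])
    return list(vectors[best])

def no_attention(vectors):
    """Plain average: every context gets the same weight."""
    n = len(vectors)
    return weighted_sum(vectors, [1.0 / n] * n)

# Made-up context vectors and attention scores.
contexts = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
scores = [2.0, 0.0, 1.0]

soft = soft_attention(contexts, scores)
hard = hard_attention(contexts, scores)
flat = no_attention(contexts)
print(soft, hard, flat)
```

In the soft variant the lower-scored contexts still influence the result, whereas the hard variant discards everything except the top-scoring context.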

1.3 Existing Techniques

The problem of predicting program properties by learning from big code has seen tremendous interest and progress in recent years (Allamanis et al. 2014; Allamanis and Sutton 2013; Bielik et al. 2016; Hindle et al. 2012; Raychev et al. 2016). The ability to predict semantic properties of a program without running it, and with little or no semantic analysis at all, has a wide range of applications: predicting names for program entities (Allamanis et al. 2015a; Alon et al. 2018; Raychev et al. 2015), code completion (Mishne et al. 2012; Raychev et al. 2014), code summarization (Allamanis et al. 2016), code generation (Amodio et al. 2017; Lu et al. 2017; Maddison and Tarlow 2014; Murali et al. 2017), and more (see Allamanis et al. 2017; Vechev and Yahav 2016 for a survey).

A recent work (Alon et al. 2018) used syntactic paths with Conditional Random Fields (CRFs) for the task of predicting method names in Java. Our work achieves significantly better results for the same task on the same dataset: F1 score of 58.4 vs. 49.9 (a relative improvement of 17%), while training 5× faster thanks to our ability to use a GPU, which cannot be used in their model. Further, their approach can only perform predictions for the exact task that it was trained for, while our approach produces code vectors that, once trained for a single task, are useful for other tasks as well. In Section 5 we discuss more conceptual advantages compared to the model of Alon et al. (2018), which are generalization ability and a reduction from polynomial to linear space complexity.
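The quoted relative improvement follows directly from the two F1 scores:

$ \frac{58.4-49.9}{49.9}=\frac{8.5}{49.9} \approx 0.17 $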

Distributed representations of code identifiers were first suggested by Allamanis et al. (2015a), and used to predict variable, method, and class names based on token context features. Allamanis et al. (2016) were also the first to consider the problem of predicting method names. Their technique used a Convolutional Neural Network (CNN) where locality in the model is based on textual locality in source code. While their technique works well when training and prediction are performed within the scope of the same project, they report poor results when used across different projects (as we reproduce in Section 6.1). Thus, the problem of predicting method names based on a large corpus has remained an open problem until now. To the best of our knowledge, our technique is the first to train an effective cross-project model for predicting method names.

1.4 Contributions

The main contributions of this paper are:

- A path-based attention model for learning vectors for arbitrary-sized snippets of code. This model allows us to embed a program, which is a discrete object, into a continuous space, such that it can be fed into a deep learning pipeline for various tasks.

- As a benchmark for our approach, we perform a quantitative evaluation for predicting cross-project method names, trained on more than 14M methods of real-world data, and compared with previous works. Experiments show that our approach achieves significantly better results than previous works which used Long Short-Term Memory networks (LSTMs), CNNs and CRFs.

- A qualitative evaluation that interprets the attention that the model has learned to give to the different path-contexts when making predictions.

- A collection of method name embeddings, which often assign semantically similar names to similar vectors, and even allow computing analogies using simple vector arithmetic.

- An analysis that shows the significant advantages in terms of generalization ability and space complexity of our model, compared to previous non-neural works such as Alon et al. (2018) and Raychev et al. (2015).

2. Overview

In this section we demonstrate how our model assigns different vectors to similar snippets of code, in a way that captures the subtle differences between them. The vectors are useful for making a prediction about each snippet, even though none of these snippets has been exactly observed in the training data.

The main idea of our approach is to extract syntactic paths from within a code snippet, represent them as a bag of distributed vector representations, and use an attention mechanism to compute a learned weighted average of the path vectors in order to produce a single code vector. Finally, this code vector can be used for various tasks, such as to predict a likely name for the whole snippet.

2.1 Motivating Example

Since method names are usually descriptive and accurate labels for code snippets, we demonstrate our approach for the task of learning code vectors for method bodies, and predicting the method name given the body. In general, the same approach can be applied to any snippet of code that has a corresponding label.

Consider the three Java methods of Figure 2. These methods share a similar syntactic structure: they all (i) have a single parameter named target, (ii) iterate over a field named elements, and (iii) have an if condition inside the loop body. The main differences are that the method of Fig. 2a returns true when elements contains target and false otherwise; the method of Fig. 2b returns the element of elements whose hashCode equals target; and the method of Fig. 2c returns the index of target in elements. Despite their shared characteristics, our model captures the subtle differences and predicts the descriptive method names: contains, get, and indexOf respectively.


Path Extraction

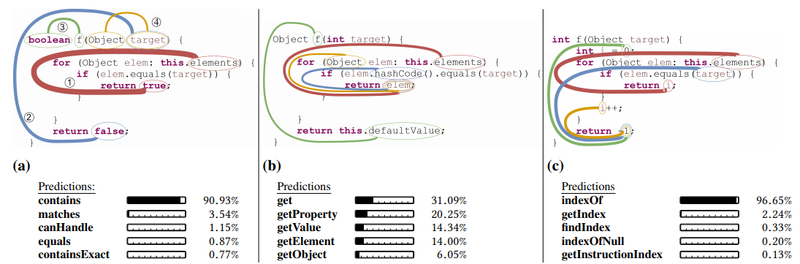

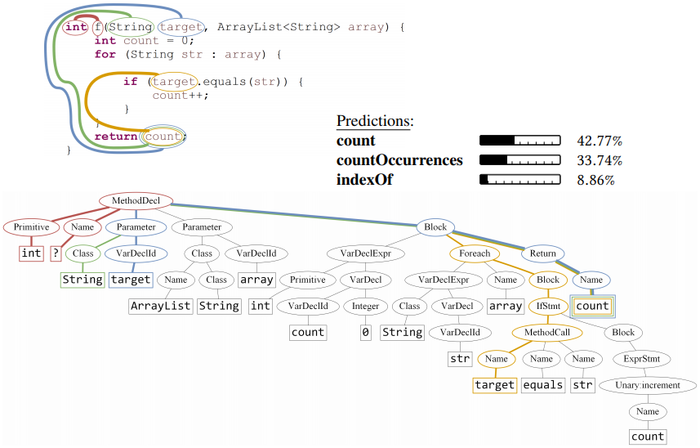

First, each query method in the training corpus is parsed to construct an AST. Then, the AST is traversed and syntactic paths between AST leaves are extracted. Each path is represented as a sequence of AST nodes, linked by up and down arrows, which symbolize the up or down link between adjacent nodes in the tree. The path composition is kept with the values of the AST leaves it is connecting, as a tuple which we refer to as a path-context. These terms are defined formally in Section 3. Figure 3 portrays the top-four path-contexts that were given the most attention by the model, on the AST of the method from Figure 2a, such that the width of each path is proportional to the attention it was given by the model during this prediction.


Distributed Representation of Contexts

Each of the path and leaf-values of a path-context is mapped to its corresponding real-valued vector representation, or its embedding. Then, the three vectors of each context are concatenated to a single vector that represents that path-context. During training, the values of the embeddings are learned jointly with the attention parameter and the rest of the network parameters.

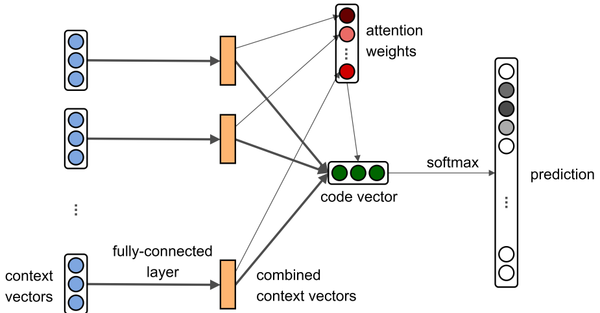

Path-attention network. The Path-Attention network aggregates multiple path-context embeddings into a single vector that represents the whole method body. Attention is the mechanism that learns to score each path-context, such that higher attention is reflected in a higher score. These multiple embeddings are aggregated using the attention scores into a single code vector. The network then predicts the probability for each target method name given the code vector. The network architecture is described in Section 4.

Path-Attention Interpretation

While it is usually difficult or impossible to interpret specific values of vector components in neural networks, it is possible and interesting to observe the attention scores that each path-context was given by the network. Each code snippet in Figure 2 and Figure 3 highlights the top-four path-contexts that were given the most weight (attention) by the model in each example. The widths of the paths are proportional to the attention score that each of these path-contexts was given. The model has learned how much weight to give every possible path on its own, as part of training on millions of examples. For example, it can be seen in Figure 3 that the red ➀ path-context, which spans from the field elements to the return value true was given the highest attention. For comparison, the blue ➁ path-context, which spans from the parameter target to the return value false was given a lower attention.

Consider the red ➀ path-context of Figure 2a and Figure 3. As we explain in Section 3, this path is represented as:

(elements, Name$\uparrow$FieldAccess$\uparrow$Foreach$\downarrow$Block$\downarrow$IfStmt$\downarrow$Block$\downarrow$Return$\downarrow$BooleanExpr, true)

Inspecting this path node-by-node reveals that this single path captures the main functionality of the method: the method iterates over a field called elements, and for each of its values it checks an if condition; if the condition is true, the method returns true. Since we use soft-attention, the final prediction takes into account other paths as well, such as paths that describe the if condition itself, but it can be understood why the model gave this path the highest attention.

Figure 2 also shows the top-5 suggestions from the model for each method. As can be seen in all of the three examples, in many cases most of the top suggestions are very similar to each other and all of them are descriptive regarding the method. Observing the top-5 suggestions in Figure 2a shows that two of them (contains and containsExact) are very accurate, but it can also be imagined how a method called matches would share similar characteristics: a method called matches is also likely to have an if condition inside a for loop, and to return true if the condition is true.

Another interesting observation is that the orange ➃ path-context of Figure 2a which spans from Object to target was given a lower attention than other path-contexts in the same method, but higher attention than the same path-context in Figure 2c. This demonstrates how attention is not constant, but given with respect to the other path-contexts in the code.

Comparison with N-grams

The method in Figure 2a shows the four path-contexts that were given the most attention during the prediction of the method name contains. Out of them, the orange ➃ path-context spans between two consecutive tokens: Object and target. This might create the (false) impression that representing this method as a bag-of-bigrams could be as expressive as syntactic paths. However, as can be seen in Figure 3, the orange ➃ path goes through an AST node of type Parameter, which uniquely distinguishes it from, for example, a local variable declaration of the same name and type. In contrast, a bigram model will represent the expression Object target equally whether target is a method parameter or a local variable. This shows that a model using a syntactic representation of a code snippet can distinguish between two snippets of code that other representations cannot, and by aggregating all the contexts using attention, all these subtle differences contribute to the prediction.
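The contrast with bigrams can be made concrete with a small sketch. The two occurrences below are hypothetical: the token pair is the one discussed above, but the paths are hand-written stand-ins (only the Parameter node is taken from the text; the other node labels are assumptions).

```python
# Two hypothetical occurrences of the tokens `Object target`:
# once as a method parameter, once as a local variable declaration.
as_parameter = {
    "tokens": ["Object", "target"],
    "path": ("Type", "↑", "Parameter", "↓", "Name"),
}
as_local = {
    "tokens": ["Object", "target"],
    "path": ("Type", "↑", "VariableDeclaration", "↓", "Name"),
}

def bigrams(tokens):
    """Adjacent token pairs, as a bag-of-bigrams model would see them."""
    return list(zip(tokens, tokens[1:]))

def path_context(occurrence):
    """Triple of (start token, syntactic path, end token)."""
    first, last = occurrence["tokens"][0], occurrence["tokens"][-1]
    return (first, occurrence["path"], last)

same_bigrams = bigrams(as_parameter["tokens"]) == bigrams(as_local["tokens"])
same_contexts = path_context(as_parameter) == path_context(as_local)
print(same_bigrams, same_contexts)
```

The bigram view cannot tell the two occurrences apart, while the path-contexts differ in the node the path passes through.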

Key Aspects

The illustrated examples highlight several key aspects of our approach:

- A code snippet can be efficiently represented as a bag of path-contexts.

- Using a single context is not enough to make an accurate prediction. An attention-based neural network can identify the importance of multiple path-contexts, and aggregate them accordingly to make a prediction.

- Subtle differences between code snippets are easily distinguished by our model, even if the code snippets have a similar syntactic structure and share many common tokens and n-grams.

- Large corpus, cross-project prediction of method names is possible using this model.

- Although our model is based on a neural network, the model is human-interpretable and provides interesting observations.

3. Background - Representing Code Using AST Paths

In this section, we briefly describe the representation of a code snippet as a set of syntactic paths in its abstract syntax tree (AST). This representation is based on the general-purpose approach for representing program elements by Alon et al. (2018). The main difference in this definition is that we define this representation to handle whole snippets of code, rather than a single program element (such as a single variable), and use it as input to our path-attention neural network.

We start by defining an AST, a path and a path-context.

Definition 1 (Abstract Syntax Tree). An Abstract Syntax Tree (AST) for a code snippet $\mathcal{C}$ is a tuple $\langle N, T, X, s, \delta, \phi\rangle$ where $N$ is a set of nonterminal nodes, $T$ is a set of terminal nodes, $X$ is a set of values, $s \in N$ is the root node, $\delta: N \rightarrow(N \cup T)^{*}$ is a function that maps a nonterminal node to a list of its children, and $\phi : T \rightarrow X$ is a function that maps a terminal node to an associated value. Every node except the root appears exactly once in all the lists of children.

Next, we define AST paths. For convenience, in the rest of this section we assume that all definitions refer to a single AST $\langle N, T, X, s, \delta, \phi\rangle$.

An AST path is a path between nodes in the AST, starting from one terminal, ending in another terminal, passing through an intermediate nonterminal in the path which is a common ancestor of both terminals. More formally:

Definition 2 (AST path). An AST-path of length $k$ is a sequence of the form: $n_{1} d_{1} \ldots n_{k} d_{k} n_{k+1}$, where $n_{1}, n_{k+1} \in T$ are terminals, for $i \in [2..k]$: $n_{i} \in N$ are nonterminals, and for $i \in [1..k]$: $d_{i} \in\{\uparrow, \downarrow\}$ are movement directions (either up or down in the tree). If $d_{i}=\uparrow$, then $n_{i} \in \delta\left(n_{i+1}\right)$; if $d_{i}=\downarrow$, then $n_{i+1} \in \delta\left(n_{i}\right)$. For an AST-path $p$, we use $start(p)$ to denote $n_{1}$, the starting terminal of $p$, and $end(p)$ to denote $n_{k+1}$, its final terminal.

Using this definition we define a path-context as a tuple of an AST path and the values associated with its terminals:

Definition 3 (Path-context). Given an AST path $p$, its path-context is a triplet $\left\langle x_{s}, p, x_{t}\right\rangle$ where $x_{s}=\phi(start(p))$ and $x_{t}=\phi(end(p))$ are the values associated with the start and end terminals of $p$. That is, a path-context describes two actual tokens with the syntactic path between them.

Example 3.1. A possible path-context that represents the statement: “x = 7;” would be: $\langle x, (NameExpr \uparrow AssignExpr \downarrow IntegerLiteralExpr), 7 \rangle$

Practically, to limit the size of the training data and reduce sparsity, it is possible to limit the paths by different aspects. Following earlier works, we limit the paths by maximum length — the maximal value of $k$, and limit the maximum width — the maximal difference in child index between two child nodes of the same intermediate node. These values are determined empirically as hyperparameters of our model.
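The definitions of this section, together with the length and width limits, can be sketched as a small extraction routine. This is an illustrative sketch rather than the authors' extractor: the `Node` class, the function names, the node labels of the toy AST for the statement `x = 7;` of Example 3.1, and the default limits are all assumptions made for the example. For brevity it emits one direction per pair of terminals.

```python
from itertools import combinations

class Node:
    """Toy AST node; only terminals (no children) carry a value."""
    def __init__(self, label, children=(), value=None):
        self.label = label
        self.children = list(children)
        self.value = value

def terminals_with_trails(node, trail=()):
    """Yield (terminal, trail); a trail lists (node, child index taken)
    from the root down to the terminal itself."""
    if not node.children:
        yield node, trail + ((node, None),)
        return
    for idx, child in enumerate(node.children):
        yield from terminals_with_trails(child, trail + ((node, idx),))

def ast_path(trail_a, trail_b):
    """Path between two distinct terminals via their lowest common
    ancestor. Returns (path string, length k, width)."""
    lca = 0
    while trail_a[lca + 1][0] is trail_b[lca + 1][0]:
        lca += 1
    up = [n.label for n, _ in reversed(trail_a[lca + 1:])]
    down = [n.label for n, _ in trail_b[lca + 1:]]
    top = trail_a[lca][0].label
    length = len(up) + len(down)  # number of arrows in the path
    width = abs(trail_a[lca][1] - trail_b[lca][1])  # child-index gap
    path = "↑".join(up + [top]) + "↓" + "↓".join(down)
    return path, length, width

def extract_path_contexts(root, max_length=8, max_width=2):
    """Collect (x_s, path, x_t) triples within the given limits."""
    leaves = list(terminals_with_trails(root))
    contexts = []
    for (a, trail_a), (b, trail_b) in combinations(leaves, 2):
        path, length, width = ast_path(trail_a, trail_b)
        if length <= max_length and width <= max_width:
            contexts.append((a.value, path, b.value))
    return contexts

# Toy AST for the statement `x = 7;` (node labels are assumptions).
tree = Node("ExpressionStmt", [
    Node("AssignExpr", [
        Node("NameExpr", value="x"),
        Node("IntegerLiteralExpr", value="7"),
    ]),
])

contexts = extract_path_contexts(tree)
print(contexts)
```

Tightening `max_length` or `max_width` drops longer or wider paths, which is how the limits reduce both the number of extracted path-contexts and the sparsity of the path vocabulary.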

4. Model

In this section we describe our model in detail. Section 4.1 describes the way the input source code is represented; Section 4.2 describes the architecture of the neural network; Section 4.3 describes the training process; and Section 4.4 describes the way the trained model is used for prediction. Finally, Section 4.5 discusses some of the model design choices, and compares the architecture to prior art.

High-level View

At a high level, the key point is that a code snippet is composed of a bag of contexts, and each context is represented by a vector whose values are learned. The values of this vector capture two distinct goals: (i) the semantic meaning of this context, and (ii) the amount of attention this context should get.

The problem is as follows: given an arbitrarily large number of context vectors, we need to aggregate them into a single vector. Two trivial approaches would be to learn the most important one of them, or to use them all by vector-averaging them. These alternatives will be discussed in Section 6.2, and the results of implementing these two alternatives are shown in Table 4 (“hard-attention” and “no-attention”) to yield poor results.

The main insight in this work is that all context vectors need to be used, but the model should be allowed to learn how much focus to give each vector. This is done by learning how to average context vectors in a weighted manner. The weighted average is obtained by weighting each vector by a factor of its dot product with another global attention vector. The vector of each context and the global attention vector are trained and learned simultaneously using the standard neural approach of backpropagation. Once trained, the neural network is simply a pure mathematical function, which uses algebraic operators to output a code vector given a set of contexts.
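The weighted average described in this paragraph can be written out as follows. This is a sketch under stated assumptions: the weights are assumed to be normalized with a softmax over the dot products, and the symbols $\tilde{c}_{i}$ (the vector of the $i$-th context), $\alpha_{i}$ (its weight), $n$ (the number of contexts), and $\mathbf{v}$ (the resulting code vector) are introduced here for illustration; $\mathbf{a}$ is the global attention vector.

$ \alpha_{i}=\frac{\exp \left(\tilde{c}_{i}^{\top} \cdot \mathbf{a}\right)}{\sum_{j=1}^{n} \exp \left(\tilde{c}_{j}^{\top} \cdot \mathbf{a}\right)}, \qquad \mathbf{v}=\sum_{i=1}^{n} \alpha_{i} \cdot \tilde{c}_{i} $

Because the weights $\alpha_{i}$ are positive and sum to 1, $\mathbf{v}$ is a weighted average of the context vectors, and both $\tilde{c}_{i}$ and $\mathbf{a}$ receive gradients during backpropagation.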

4.1 Code as a Bag of Path-Contexts

Our path-attention model receives as input a code snippet in some programming language and a parser for that language.

Representing a Snippet of Code

We denote by $Rep$ the representation function (also known as a feature function) which transforms a code snippet into a mathematical object that can be used in a learning model. Given a code snippet $\mathcal{C}$ and its AST $\langle N, T, X, s, \delta, \phi\rangle,$ we denote by $TPairs$ the set of all pairs of AST terminal nodes (excluding pairs that contain a node and itself):

$TPairs \left(\mathcal{C}\right)=\{\left(term_{i},term_{j}\right)\mid term_{i}, term_{j}\; \in\; termNodes\left(\mathcal{C}\right) \wedge i \neq j \}$

where $termNodes$ is a mapping between a code snippet and the set of terminal nodes in its AST. We represent $\mathcal{C}$ as the set of path-contexts that can be derived from it:

$Rep(\mathcal{C})=\left\{\begin{array}{l|l} \left(x_{s}, p, x_{t}\right) & \begin{array}{l} \exists\left(term_{s}, term_{t}\right) \in TPairs\left(\mathcal{C}\right): \\ x_{s}=\phi\left(term_{s}\right) \wedge x_{t}=\phi\left(term_{t}\right) \\ \wedge start(p)=term_{s} \wedge end(p)=term_{t} \end{array} \end{array}\right\}$

that is, $\mathcal{C}$ is represented as the set of triplets $\left\langle x_{s}, p, x_{t}\right\rangle$ such that $x_{s}$ and $x_{t}$ are values of AST terminals, and $p$ is the AST path that connects them. For example, the representation of the code snippet from Figure 2a contains, among others, the four AST paths of Figure 3.
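The definition of $Rep$ can be illustrated with a small sketch. It assumes a hypothetical toy `Node` class rather than a real parser, and it prints a path as the node kinds on the way up to the lowest common ancestor and then down to the target terminal; the node kinds and the example tree are illustrative only and are not taken from the paper's figures.

```python
from itertools import permutations

UP, DOWN = " \u2191 ", " \u2193 "  # up and down arrows used to print a path


class Node:
    """Toy AST node (hypothetical); `value` is set only on terminals (leaves)."""

    def __init__(self, kind, children=(), value=None):
        self.kind = kind
        self.children = list(children)
        self.value = value
        self.parent = None
        for child in self.children:
            child.parent = self


def term_nodes(node):
    """Terminals (leaves) of the tree, left to right."""
    if not node.children:
        return [node]
    return [leaf for child in node.children for leaf in term_nodes(child)]


def ancestors(node):
    """[node, parent, ..., root]."""
    chain = []
    while node is not None:
        chain.append(node)
        node = node.parent
    return chain


def path_between(src, dst):
    """Node kinds from src up to the lowest common ancestor, then down to dst."""
    up, down = ancestors(src), ancestors(dst)
    down_index = {id(n): i for i, n in enumerate(down)}
    # Index (in `up`) of the first ancestor of src that is also an ancestor of dst.
    lca_up = next(i for i, n in enumerate(up) if id(n) in down_index)
    lca_down = down_index[id(up[lca_up])]
    rising = [n.kind for n in up[:lca_up + 1]]
    falling = [n.kind for n in reversed(down[:lca_down])]
    return UP.join(rising) + "".join(DOWN + kind for kind in falling)


def rep(root):
    """Path-contexts (x_s, p, x_t) for every ordered pair of distinct terminals."""
    return {(s.value, path_between(s, t), t.value)
            for s, t in permutations(term_nodes(root), 2)}


# Toy tree for something like `if (x) return y;` (node kinds are illustrative).
tree = Node("IfStmt", [Node("Name", value="x"),
                       Node("Return", [Node("Name", value="y")])])
contexts = rep(tree)
```

With two terminals the sketch yields two path-contexts, one per direction, which matches the $i \neq j$ condition in $TPairs$.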

4.2 Path-Attention Model

Overall, the model learns the following components: embeddings for paths and names (matrices $path\_vocab$ and $value\_vocab$), a fully connected layer (matrix $W$), an attention vector ($\mathbf{a}$), and embeddings for the tags ($tags\_vocab$). We describe our model from left to right (Fig. 4). We define two embedding vocabularies: $value\_vocab$ and $path\_vocab$, which are matrices in which every row corresponds to an embedding associated with a certain object:

$ \begin{array}{l} value\_vocab \in \mathbb{R}^{|X| \times d} \\ path\_vocab \in \mathbb{R}^{|P| \times d} \end{array} $

where as before, $X$ is the set of values of AST terminals that were observed during training, and $P$ is the set of AST paths. Looking up an embedding is simply picking the appropriate row of the matrix. For example, if we consider Figure 2a again, $value\_vocab$ contains rows for each token value such as boolean, target and Object. $path\_vocab$ contains rows which are mapped to each of the AST paths of Figure 3 (without the token values), such as the red ➀ path: $Name \;\uparrow\; FieldAccess\; \uparrow\; Foreach \downarrow\; Block \;\downarrow\; IfStmt \;\downarrow\; Block\;\downarrow\; Return\; \downarrow\; BooleanExpr.$ The values of these matrices are initialized randomly and are learned simultaneously with the network during training.

The width of these embedding matrices is the embedding size $d \in \mathbb{N}$, the dimensionality hyperparameter. $d$ is determined empirically, limited by the training time, model complexity and the GPU memory, and typically ranges between 100 and 500. For convenience, we refer to the embeddings of both the paths and the values as vectors of the same size $d$, but in general they can be of different sizes.

A bag of path-contexts $\mathcal{B} = \{b_1, \ldots, b_n\}$ that were extracted from a given code snippet is fed into the network. Let $b_i = \langle x_s, p_j, x_t\rangle$ be one of these path-contexts, such that $x_s, x_t \in X$ are values of terminals and $p_j \in P$ is their connecting path. Each component of a path-context is looked up and mapped to its corresponding embedding. The three embeddings of each path-context are concatenated into a single context vector $\mathbf{c}_i \in \mathbb{R}^{3d}$ that represents that path-context:

| $\boldsymbol{c}_{\boldsymbol{i}}=embedding\left(\left\langle x_{s}, p_{j}, x_{t}\right\rangle\right)=\left[value\_vocab_{s} ; path\_vocab_{j} ; value\_vocab_{t}\right] \in \mathbb{R}^{3 d}$ | (1) |

For example, for the red➀ path-context from Figure 3, its context vector would be the concatenation of the vectors of elements, the red➀ path, and true.
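The lookup-and-concatenate step of Eq. (1) can be sketched as follows. The sketch assumes dictionaries keyed by token or path in place of matrix rows, a toy size `d = 4`, and hypothetical path identifiers `p1` and `p2`; the random values merely stand in for the random initialisation.

```python
import random

random.seed(0)
d = 4  # toy embedding size chosen for illustration


def random_table(keys, dim):
    """One randomly initialised embedding of length `dim` per key."""
    return {key: [random.uniform(-1.0, 1.0) for _ in range(dim)] for key in keys}


value_vocab = random_table(["elements", "true", "target"], d)
path_vocab = random_table(["p1", "p2"], d)  # hypothetical path identifiers


def context_vector(path_context):
    """Concatenate the source-value, path and target-value embeddings (Eq. 1)."""
    x_s, p, x_t = path_context
    return value_vocab[x_s] + path_vocab[p] + value_vocab[x_t]


c = context_vector(("elements", "p1", "true"))
```

The resulting list has length $3d$, with the first, middle and last thirds being the embeddings of the source value, the path and the target value.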

Fully Connected Layer

Since every context vector $\mathbf{c_i}$ is formed by a concatenation of three independent vectors, a fully connected layer learns to combine its components. This is done separately for each context vector, using the same learned combination function. This allows the model to give a different attention to every combination of paths and values. This combination allows the model the expressivity of giving a certain path a high attention when observed with certain values, and a low attention when the exact same path is observed with other values.

Here, $\tilde{\boldsymbol{c}}_{\boldsymbol{i}}$ is the output of the fully connected layer, which we refer to as a combined context vector, computed for a path-context $b_i$. The computation of this layer can be described simply as

$\tilde{\boldsymbol{c}}_{\boldsymbol{i}}=\tanh \left(W \cdot \boldsymbol{c}_{\boldsymbol{i}}\right)$

where $W \in \mathbb{R}^{d\times 3d}$ is a learned weights matrix and $tanh$ is the hyperbolic tangent function. The height of the weights matrix $W$ determines the size of $\tilde{\boldsymbol{c}}_{\boldsymbol{i}}$, and for convenience is the same size $d$ as before, but in general it can be of a different size, which in turn determines the size of the target vector. $tanh$ is applied element-wise; it is a commonly used monotonic nonlinear activation function whose outputs lie in the range $(-1, 1)$, and it increases the expressiveness of the model.

That is, the fully connected layer “compresses” a context vector of size $3d$ into a combined context vector of size d by multiplying it with a weights matrix, and then applies the tanh function to each element of the vector separately.
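A minimal sketch of this compression step, assuming plain Python lists for the matrix and vectors and hand-picked toy numbers (in the model, $W$ is learned):

```python
import math


def fully_connected(W, c):
    """Combined context vector tanh(W . c); tanh is applied per element."""
    return [math.tanh(sum(w * x for w, x in zip(row, c))) for row in W]


d = 2
c = [0.5, -1.0, 0.0, 2.0, 1.0, -0.5]   # context vector of size 3d
W = [[1.0, 0.0, 0.0, 0.0, 0.0, 0.0],   # d rows, 3d columns (toy values)
     [0.0, 0.0, 0.0, 1.0, 1.0, 0.0]]
c_tilde = fully_connected(W, c)        # combined context vector of size d
```

Each output element is a different learned mixture of the source value, path and target value components, squashed into $(-1, 1)$.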

Aggregating Multiple Contexts into a Single Vector Representation with Attention

The attention mechanism computes a weighted average over the combined context vectors, and its main job is to compute a scalar weight for each of them. An attention vector $\mathbf{a} \in \mathbb{R}^d$ is initialized randomly and learned simultaneously with the network. Given the combined context vectors $\{\tilde{\boldsymbol{c}}_{\boldsymbol{1}},\ldots, \tilde{\boldsymbol{c}}_{\boldsymbol{n}} \}$, the attention weight $\alpha_i$ of each $\tilde{\boldsymbol{c}}_{\boldsymbol{i}}$ is computed as the normalized inner product between the combined context vector and the global attention vector $\mathbf{a}$:

$\text { attention weight } \alpha_{i}=\dfrac{\exp \left(\tilde{\boldsymbol{c}}_{i}^{T} \cdot \boldsymbol{a}\right)}{\displaystyle \sum_{j=1}^{n} \exp \left(\tilde{\boldsymbol{c}}_{j}^{T} \cdot \boldsymbol{a}\right)}$

The exponents in the equations are used only to make the attention weights positive, and they are divided by their sum to have a sum of 1, as a standard softmax function.

The aggregated code vector $\mathbf{v} \in \mathbb{R}^d$, which represents the whole code snippet, is a linear combination of the combined context vectors $\{\tilde{\boldsymbol{c}}_{\boldsymbol{1}},\ldots, \tilde{\boldsymbol{c}}_{\boldsymbol{n}} \}$ factored by their attention weights:

| $\text { code vector } \boldsymbol{v}=\displaystyle \sum_{i=1}^{n} \alpha_{i} \cdot \tilde{\boldsymbol{c}}_{\boldsymbol{i}}$ | (2) |

that is, the attention weights are non-negative and their sum is 1, and they are used as the factors of the combined context vectors $\tilde{\boldsymbol{c}}_{\boldsymbol{i}}$. Thus, attention can be viewed as a weighted average, where the weights are learned and calculated with respect to the other members in the bag of path-contexts.
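The attention weights and Eq. (2) can be sketched in a few lines. The vectors below are toy values; subtracting the maximum score before exponentiating is a common numerical-stability step added here as an assumption, and it does not change the resulting weights.

```python
import math


def attention_weights(combined, a):
    """Softmax over the dot products of each combined context vector with a."""
    scores = [sum(ci * ai for ci, ai in zip(c, a)) for c in combined]
    top = max(scores)  # stability shift; cancels out in the ratio
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def code_vector(combined, a):
    """Attention-weighted sum of the combined context vectors (Eq. 2)."""
    alphas = attention_weights(combined, a)
    return [sum(alpha * c[k] for alpha, c in zip(alphas, combined))
            for k in range(len(a))]


combined = [[1.0, 0.0], [0.0, 1.0]]               # two toy combined context vectors
uniform = code_vector(combined, [0.0, 0.0])       # zero attention vector: plain average
focused = attention_weights(combined, [1.0, 0.0])  # favours the first vector
```

With a zero attention vector all scores tie, so the code vector reduces to the ordinary average; a non-zero $\mathbf{a}$ shifts weight towards the vectors it aligns with.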

Prediction

Prediction of the tag is performed using the code vector. We define a tags vocabulary which is learned as part of training:

$tags\_vocab \in \mathbb{R}^{|Y| \times d}$

where $Y$ is the set of tag values found in the training corpus. As before, the embedding of $tag_i$ is row $i$ of $tags\_vocab$. For example, looking at Figure 2a again, $tags\_vocab$ contains rows for each of contains, matches and canHandle. The predicted distribution of the model $q(y)$ is computed as the (softmax-normalized) dot product between the code vector $v$ and each of the tag embeddings:

$\text { for } y_{i} \in Y: q\left(y_{i}\right)=\dfrac{\exp \left(\boldsymbol{v}^{T} \cdot tags\_vocab_{i}\right)}{\displaystyle \sum_{y_{j} \in Y} \exp \left(\boldsymbol{v}^{T} \cdot tags\_vocab_{j}\right)}$

that is, the probability that a specific tag $y_i$ should be assigned to the given code snippet $\mathcal{C}$ is the normalized dot product between the vector of $y_i$ and the code vector $v$.
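As a rough illustration of the predicted distribution, the sketch below uses a dictionary of toy two-dimensional tag embeddings (the numbers are made up; only the tag names come from the example above) and a toy code vector.

```python
import math


def predict_distribution(v, tags_vocab):
    """q(y_i): softmax over the dot products of v with each tag embedding."""
    scores = {tag: sum(x * y for x, y in zip(v, emb))
              for tag, emb in tags_vocab.items()}
    top = max(scores.values())  # stability shift; cancels out in the ratio
    exps = {tag: math.exp(s - top) for tag, s in scores.items()}
    total = sum(exps.values())
    return {tag: e / total for tag, e in exps.items()}


tags_vocab = {               # toy embeddings, not learned values
    "contains": [2.0, 0.0],
    "matches": [0.0, 1.0],
    "canHandle": [-1.0, 0.5],
}
v = [1.0, 0.0]               # toy code vector
q = predict_distribution(v, tags_vocab)
best_tag = max(q, key=q.get)
```

Here the dot products are 2, 0 and -1, so the highest probability goes to `contains`.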

4.3 Training

For training the network we use cross-entropy loss (Rubinstein 1999, 2001) between the predicted distribution $q$ and the “true” distribution $p$. Since $p$ is a distribution that assigns a value of 1 to the actual tag in the training example and 0 otherwise, the cross-entropy loss for a single example is equivalent to the negative log-likelihood of the true label, and can be expressed as:

$\mathcal{H}(p \| q)=-\displaystyle \sum_{y \in Y} p(y) \log q(y)=-\log q\left(y_{true}\right)$

where $y_{true}$ is the actual tag that was seen in the example. That is, the loss is the negative logarithm of $q\left(y_{true}\right)$, the probability that the model assigns to $y_{true}$. As $q\left(y_{true}\right)$ tends to 1, the loss approaches zero. The further $q\left(y_{true}\right)$ goes below 1, the greater the loss becomes. Thus, minimizing this loss is equivalent to maximizing the log-likelihood that the model assigns to the true labels $y_{true}$.
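The reduction of the cross-entropy to a single negative logarithm can be checked numerically. The distributions below are made-up toy values.

```python
import math


def cross_entropy(p, q):
    """-sum_y p(y) log q(y); terms with p(y) = 0 contribute nothing."""
    return -sum(p_y * math.log(q[y]) for y, p_y in p.items() if p_y > 0)


q = {"contains": 0.7, "matches": 0.2, "canHandle": 0.1}   # toy prediction
p = {"contains": 1.0, "matches": 0.0, "canHandle": 0.0}   # one-hot true label
loss = cross_entropy(p, q)

# A more confident correct prediction gives a smaller loss.
q_better = {"contains": 0.99, "matches": 0.005, "canHandle": 0.005}
loss_better = cross_entropy(p, q_better)
```

Because $p$ is one-hot, only the term for the true tag survives, so `loss` equals $-\log 0.7$.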

Training the network is performed using any gradient descent based algorithm, and the standard approach of backpropagating the training error through each of the learned parameters (i.e., taking the derivative of the loss with respect to each of the learned parameters and updating the learned parameter's value by a small “step” towards the direction that minimizes the loss).

4.4 Using the Trained Network

A trained network can be used for two main purposes: (i) Use the code vector $v$ itself in a down-stream task, and (ii) Use the network to predict tags for new, unseen code.

Using the Code Vector

Unseen code can be fed into the trained network exactly as in the training step, up to the computation of the code vector (Eq. (2)). This code embedding can now be used in another deep learning pipeline for various tasks such as finding similar programs, code search, refactoring suggestion, and code summarization.

Predicting Tags and Names

The network can also be used to predict tags and names for unseen code. In this case we also compute the code vector $v$ using the weights and parameters that were learned during training, and prediction is done by finding the closest target tag:

$prediction\left(\mathcal{C}\right)=\underset{y_{i} \in Y}{\operatorname{argmax}}\; q_{v_{\mathcal{C}}}\left(y_{i}\right)$

where $q_{v_{\mathcal{C}}}$ is the predicted distribution of the model, given the code vector $v_{\mathcal{C}}$.

Scenario-Dependent Variants

For simplicity, we describe a network that predicts a single label, but the same architecture can be adapted for slightly different scenarios. For example, in a multitagging scenario (Tsoumakas and Katakis 2006), each code snippet contains multiple true tags as in StackOverflow questions. Another example is predicting a sequence of target words such as method documentation. In the latter case, the attention vector should be used to re-compute the attention weights after each predicted token, given the previous prediction, as commonly done in neural machine translation (Bahdanau et al. 2014; Luong et al. 2015).

4.5 Design Decisions

Bag of contexts

We represent a snippet of code as an unordered bag of path-contexts. This choice reflects our hypothesis that the existence of path-contexts in a method body is more significant than their internal location or order.

An alternative representation is to sort path-contexts according to a predefined order (e.g., order of their occurrence). However, unlike natural language, there is no predetermined location in a method where the main attention should be focused. An important path-context can appear anywhere in a method body (and span throughout the method body).

Working with Syntactic-only Context

The main contribution of this work is its ability to aggregate multiple contexts into a fixed-length vector in a weighted manner, and use the vector to make a prediction. In general, our proposed model is not bound to any specific representation of the input program, and can be applied in a similar way to a “bag of contexts” in which the contexts are designed for a specific task, or contexts that were produced using semantic analysis. Specifically, we chose to use a syntactic representation that is similar to Alon et al. (2018) because it was shown to be useful as a representation for modelling programming languages in machine learning models, and more expressive than n-grams and manually-designed features.

An alternative approach is to include semantic relations as context. Such an approach was taken by Allamanis et al. (2018), who presented a Gated Graph Neural Network in which program elements are graph nodes and semantic relations such as ComputedFrom and LastWrite are edges in the graph. In their work, these semantic relations were chosen and implemented for a specific programming language and specific tasks. In our work, we wished to explore how far a syntactic-only approach can go. Using semantic knowledge has many advantages and potentially contains information that is not clearly expressed in a syntactic-only observation, but comes at a cost: (i) an expert is required to choose and design the semantic analyses; (ii) generalizing to new languages is much more difficult, as the semantic analyses need to be implemented differently for every language; and (iii) the designed analyses might not easily generalize to other tasks. In contrast, in our syntactic approach (i) neither expert knowledge of the language nor manual feature design is required; (ii) generalizing to other languages is performed by simply replacing the parser and extracting paths from the new language's AST using the same traversal algorithm; and (iii) the same syntactic paths generalize surprisingly well to other tasks (as was shown by Alon et al. 2018).

Large Corpus, Simple Model

Similarly to the approach of Mikolov et al. (2013a) for word representations, we found that it is more efficient to use a simpler model with a large amount of data, rather than a complex model and a small corpus.

Some previous works decomposed the target predictions. Allamanis et al. (2015a, 2016) decomposed method names into smaller “sub-tokens” and used the continuous prediction approach to compose a full name. Iyer et al. (2016) decomposed StackOverflow titles into single words and predicted them word-by-word. In theory, this approach could be used to predict new compositions of names that were not observed in the training corpus, referred to as neologisms (Allamanis et al. 2015a). However, when scaling to millions of examples this approach might become cumbersome and fail to train well due to hardware and time limitations. As shown in Section 6.1, our model yields significantly better results than previous models that used this approach.

Another disadvantage of subtoken-by-subtoken learning is that it requires a time-consuming beam search during prediction. This results in a prediction rate (the number of predictions that the model is able to make per second) that is orders of magnitude slower. An empirical comparison of the prediction rate of our model and the models of Allamanis et al. (2016) and Iyer et al. (2016) shows that our model achieves a roughly 200 times higher prediction rate than Iyer et al. (2016) and 10,000 times higher than Allamanis et al. (2016) (Section 6.1).

OoV Prediction

The main potential advantage of the models of Allamanis et al. (2016) and Iyer et al. (2016) over our model is the subtoken-by-subtoken prediction, which allows them to predict a neologism, and the copy mechanism used by Allamanis et al. (2016) which allows it to use out-of-vocabulary (OoV) words in the prediction.

An analysis of our test data shows that the top-10 most frequent method names, such as toString, hashCode and equals, which are typically easy to predict, appear in less than 6% of the test examples. The 13% least occurring names are rare names, which did not appear as a whole in the training data, and are difficult or impossible to predict exactly even with a neologism or copy mechanism, such as: imageFormatExceptionShouldProduceNotSuccessOperationResultWithMessage. Therefore, our goal is to focus our efforts on the remaining examples.

Even though the upper bound of accuracy of models which incorporate neologism or copy mechanisms is hypothetically higher than ours, the actual contribution of these abilities is minor. Empirically, when trained and evaluated on the same corpora as our model, less than 3% of the predictions of each of these baselines were actually neologisms or OoV. Further, out of all the cases in which the baseline suggested a neologism or OoV, more predictions could have been exact matches using an already-seen target name, rather than composing a neologism or OoV.

Although it is possible to incorporate these mechanisms in our model as well, we chose to predict complete names due to the high cost of training and prediction time and the relatively negligible contribution of these mechanisms.

Granularity of Path Decomposition

An alternative approach could decompose the representation of a path to granularity of single nodes, and learn to represent a whole path node-by-node using a recurrent neural network (RNN). This would possibly require less space, but will require more time to train and predict, as training of RNNs is usually more time consuming and not clearly better.

Further, a statistical analysis of our corpus shows that more than 95% of the paths in the test set were already seen in the training set. Accordingly, in the trade-off between time and space we chose a slightly less expressive, more memory-consuming, but fast-to-train approach. This choice leads to results that are 95% as good as our final results in only 6 hours of training, while significantly improving over previous works. Despite our choice of time over space, training our model on millions of examples fits in the memory of common GPUs.

5. Distributed Vs. Symbolic Representations

We compare our model, which uses distributed representations, with Conditional Random Fields (CRFs) as an example of a model that uses symbolic representations (Alon et al. 2018 and Raychev et al. 2015). Distributed representations refer to representations of elements that are discrete in their nature (e.g. words and names) as vectors or matrices, such that the meaning of an element is distributed across multiple components. This contrasts with symbolic representations, where each element is uniquely represented with exactly one component (Allamanis et al. 2017). Distributed representations have recently become extremely common in machine learning and NLP because they generalize better, while often requiring fewer parameters.

In general, CRFs can also use distributed representations (Artieres et al. 2010; Durrett and Klein 2015), but for the purpose of this discussion, “CRFs” refers to CRFs with symbolic representations as used by Alon et al. 2018 and Raychev et al. 2015.

Generalization Ability

Using CRFs for predicting program properties was found to be powerful (Alon et al. 2018; Raychev et al. 2015), but limited to modeling only combinations of values that were seen in the training data. In their works, in order to score the likelihood of a combination of values, the trained model keeps a scalar parameter for every combination of three components that was observed in the training corpus: variable name, another identifier, and the relation between them. When an unseen combination is observed in test data, a model of this kind cannot generalize and evaluate its likelihood, even if each of the individual values was observed during training.

In contrast, the main advantage of distributed representations in this aspect is the ability to compute the likelihood of every combination of observed values. Instead of keeping a parameter for every observed combination of values, our model keeps a small constant number ($d$) of learned parameters for each atomic value, and uses algebraic operations to compute the likelihood of their combination.

Trading Polynomial Complexity with Linear

Using symbolic representations can be very costly in terms of the number of required parameters. Using CRFs in our problem, which models the probability of a label given a bag of path-contexts, would require using ternary factors, which require keeping a parameter for every observed combination of four components: terminal value, path, another terminal value, and the target code label (a ternary factor which is determined by the path, with its three parameters). A CRF would thus have a space complexity of $O \left( \vert X\vert^2 \cdot \vert P \vert \cdot \vert Y \vert \right)$ , where $X$ is the set of terminal values, $P$ is the set of paths, and $Y$ is the set of labels.

In contrast, the number of parameters in our model is $O \left(d \cdot \left(|X|+|P|+|Y|\right)\right)$, where $d$ is a relatively small constant (128 in our final model): we keep a vector of size $d$ for every atomic terminal value or path, and use algebraic operations to compute the vector that represents the whole code snippet. Thus, distributed representations allow us to trade polynomial complexity for linear complexity. This is extremely important in these settings, because $|X|$, $|Y|$ and $|P|$ are on the order of millions (the number of observed values, paths and labels). In fact, using distributed representations of symbols and relations in neural networks allows us to keep fewer parameters than CRFs, and at the same time compute a score for every possible combination of observed values, paths and target labels, instead of only observed combinations.
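To get a feel for the gap between the two bounds, the sketch below plugs in illustrative sizes of one million each for $|X|$, $|P|$ and $|Y|$. These sizes are an assumption made only for the arithmetic (the text says only that they are on the order of millions); the symbolic figure is the worst-case bound from the complexity expression, not a measured parameter count, and the distributed figure ignores the comparatively tiny $W$ and $\mathbf{a}$.

```python
def symbolic_bound(num_values, num_paths, num_labels):
    """Worst-case count behind O(|X|^2 * |P| * |Y|)."""
    return num_values ** 2 * num_paths * num_labels


def distributed_count(d, num_values, num_paths, num_labels):
    """Embedding parameters behind O(d * (|X| + |P| + |Y|))."""
    return d * (num_values + num_paths + num_labels)


million = 10 ** 6  # illustrative vocabulary size, not a figure from the paper
crf_bound = symbolic_bound(million, million, million)
ours = distributed_count(128, million, million, million)
```

Under these assumed sizes the symbolic bound is $10^{24}$ while the embedding tables hold 384 million parameters.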

Practically, we reproduced the experiments of Alon et al. 2018 of modeling the task of predicting method names with CRFs using ternary factors. In addition to yielding an F1 score of 49.9, which our model improves on by 17% in relative terms, the CRF model required 104% more parameters, and about 10 times more memory.

6. Evaluation

The main contribution of our method is in its ability to aggregate an arbitrary sized snippet of code into a fixed-size vector in a way that captures its semantics. Since Java methods are usually short, focused, have a single responsibility and a descriptive name, a natural benchmark of our approach would consider a method body as a code snippet, and use the produced code vector to predict the method name. Succeeding in this task would suggest that the code vector has indeed accurately captured the functionality and semantic role of the method.

Our evaluation aims to answer the following questions:

- How useful is our model in predicting method names, and how well does it measure in comparison to other recent approaches (Section 6.1)?

- What is the contribution of the attention mechanism to the model? How well would it perform using hard-attention instead, or without attention at all (Section 6.2)?

- What is the contribution of each of the path-context components to the model (Section 6.3)?

- Is it actually able to predict names of complex methods, or only of trivial ones (Section 6.4)?

- What are the properties of the learned vectors? Which semantic patterns do they encode (Section 6.4)?

Training Process

In our experiments we took the top 1M paths that occurred the most in the training set. We use the Adam optimization algorithm (Kingma and Ba 2014), an adaptive gradient descent method commonly used in deep learning. We use dropout (Srivastava et al. 2014) of 0.25 on the context vectors. The values of all the parameters are initialized using the initialization heuristic of Glorot and Bengio (2010). When training on a single Tesla K80 GPU, we achieve a training throughput of more than 1000 methods per second. Therefore, a single training epoch takes about 3 hours, and it takes about 1.5 days to completely train a model. Training on newer GPUs doubles or quadruples the speed. Although the attention mechanism has the ability to aggregate an arbitrary number of inputs, we randomly sampled up to k = 200 path-contexts from each training example. The value k = 200 seemed to be enough to “cover” each method, since increasing to k = 300 did not seem to improve the results.
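The capping of each training example at k path-contexts could be sketched as below. This assumes uniform sampling without replacement via the standard library; the text does not spell out the exact sampling procedure, and the function name is hypothetical.

```python
import random


def sample_path_contexts(contexts, k=200, rng=random):
    """Keep every context if there are at most k, else a uniform sample of k."""
    contexts = list(contexts)
    if len(contexts) <= k:
        return contexts
    return rng.sample(contexts, k)


rng = random.Random(0)                               # seeded for repeatability
large = [("x%d" % i, "p", "y") for i in range(500)]  # toy method with 500 contexts
small = large[:50]
sampled_large = sample_path_contexts(large, k=200, rng=rng)
sampled_small = sample_path_contexts(small, k=200, rng=rng)
```

Methods with fewer than k path-contexts are passed through unchanged, so only long methods are subsampled.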

Data Sets

We are interested in evaluating the ability of the approach to generalize across projects. We used a data set of 10,072 Java GitHub repositories, originally introduced by Alon et al. 2018. Following recent work which found a large amount of code duplication in GitHub (Lopes et al. 2017), Alon et al. 2018 used the top-ranked and most popular projects, in which duplication was observed to be less of a problem (Lopes et al. 2017 measured duplication across all the code in GitHub), and they filtered out migrated projects and forks of the same project. While it is possible that some duplications are left between the training and test set, in this case the compared baselines could have benefited from them as well. In this dataset, the files from all the projects are shuffled and split into 14,162,842 training, 415,046 validation and 413,915 test methods.

We trained our model on the training set and tuned hyperparameters on the validation set to maximize the F1 score. The number of training epochs is tuned on the validation set using early stopping. Finally, we report results on the unseen test set. A summary of the amount of data used is shown in Table 2.

| | Number of methods | Number of files | Size (GB) |
|---|---|---|---|
| Training | 14,162,842 | 1,712,819 | 66 |
| Validation | 415,046 | 50,000 | 2.3 |
| Test | 413,915 | 50,000 | 2.3 |
| Sampled Test | 7,454 | 1,000 | 0.04 |

Evaluation Metric

Ideally, we would like to manually evaluate the results, but given that manual evaluation is very difficult to scale, we adopted the measure used by previous works (Allamanis et al. 2015a, 2016; Alon et al. 2018), which measured precision, recall, and F1 score over subtokens, case-insensitive. This is based on the idea that the quality of a method name prediction is mostly dependent on the sub-words that were used to compose it. For example, for a method called countLines, a prediction of linesCount is considered an exact match, a prediction of count has full precision but low recall, and a prediction of countBlankLines has full recall but low precision. An unknown sub-token in the test label (“UNK”) is counted as a false negative, therefore automatically hurting recall.

While there are alternative metrics in the literature, such as accuracy and BLEU score, they are problematic because accuracy counts even mostly-correct predictions as completely incorrect, and BLEU score tends to favor short predictions, which are usually uninformative (Callison-Burch et al. 2006). We provide a qualitative evaluation including a manual inspection of examples in Section 6.4.
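The subtoken-based measure can be illustrated on the countLines examples. This is a sketch under stated assumptions: the camelCase splitting regular expression is hypothetical, sub-tokens are compared as sets, and scores are computed per prediction, whereas the exact aggregation over a whole test set used by the cited works is not described in this section.

```python
import re


def subtokens(name):
    """Split a camelCase name into lower-cased sub-tokens."""
    parts = re.findall(r"[A-Z]?[a-z0-9]+|[A-Z]+(?![a-z])", name)
    return [part.lower() for part in parts]


def subtoken_scores(predicted, actual):
    """Case-insensitive, order-insensitive precision, recall and F1."""
    pred, gold = set(subtokens(predicted)), set(subtokens(actual))
    true_pos = len(pred & gold)
    precision = true_pos / len(pred) if pred else 0.0
    recall = true_pos / len(gold) if gold else 0.0
    if precision + recall == 0.0:
        return precision, recall, 0.0
    return precision, recall, 2 * precision * recall / (precision + recall)


exact = subtoken_scores("linesCount", "countLines")
too_short = subtoken_scores("count", "countLines")
too_long = subtoken_scores("countBlankLines", "countLines")
```

The three results mirror the cases in the text: a reordered name scores as an exact match, `count` has full precision and recall of one half, and `countBlankLines` has full recall and precision of two thirds.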

6.1 Quantitative Evaluation

We compare our model to two other recently-proposed models that address similar tasks:

CNN + attention. — proposed by Allamanis et al. 2016 for prediction of method names using CNNs and attention. This baseline was evaluated on a random sample of the test set due to its slow prediction rate (Table 3). We note that the results reported here are lower than the original results reported in their paper, because we consider the task of learning a single model that is able to predict names for a method from any possible project. We do not make the restrictive assumption of having a per-project model, able to predict only names within that project. The results we report for CNN + attention are when evaluating their technique in this realistic setting. In contrast, the numbers reported in their original work are for the simplified setting of predicting names within the scope of a single project.

| Model | Precision (sampled test set, 7454 methods) | Recall (sampled test set) | F1 (sampled test set) | Precision (full test set, 413915 methods) | Recall (full test set) | F1 (full test set) | Prediction rate (examples / sec) |
|---|---|---|---|---|---|---|---|
| CNN+Attention Allamanis et al. 2016 | 47.3 | 29.4 | 33.9 | − | − | − | 0.1 |
| LSTM+Attention Iyer et al. 2016 | 27.5 | 21.5 | 24.1 | 33.7 | 22.0 | 26.6 | 5 |
| Paths+CRFs Alon et al. 2018 | − | − | − | 53.6 | 46.6 | 49.9 | 10 |
| PathAttention (this work) | 63.3 | 56.2 | 59.5 | 63.1 | 54.4 | 58.4 | 1000 |

LSTM + attention. — proposed by Iyer et al. 2016, originally for translation between StackOverflow questions in English and snippets of code that were posted as answers and vice-versa, using an encoder-decoder architecture based on LSTMs and attention. Originally, they demonstrated their approach for C# and SQL. We used a Java lexer instead of the original C#, and pedantically modified it to be equivalent. We re-trained their model with the target language being the methods' names, split into sub-tokens. Note that this model was designed for a slightly different task than ours, of translation between source code snippets and natural language descriptions, and not specifically for prediction of method names.

Paths + CRFs. — proposed by Alon et al. 2018, using a similar syntactic path representation as this work, with CRFs as the learning algorithm. We evaluate our model on the dataset they introduced, and achieve a significant improvement in results, training time and prediction time.

Each baseline was trained on the same training data as our model. We used their default hyperparameters, except for the embedding and LSTM size of the LSTM + attention model, which were reduced from 400 to 100, to allow it to scale to our enormous training set while complying with the GPU's memory constraints. The alternative was to reduce the amount of training data, which achieved worse results.

Performance

Table 3 shows the precision, recall, and F1 score of each model. The model of Alon et al. 2018 seems to perform better than that of Allamanis et al. 2016 and Iyer et al. 2016, while our model achieves significantly better precision and recall than all of them.

Short and Long Methods

The reported results are based on evaluation on all the test data. Additionally evaluating the performance of our model with respect to the length of a test method, we observe similar results across method lengths, with natural descent as length increases. For example, the F1 score of one-line methods is around 65; for two-to-ten lines 59; and for eleven-lines and further 52, while the average method length is 7 lines. We used all the methods in the dataset, regardless of their size. This shows the robustness of our model to the length of the methods. Short methods have shorter names and their logic is usually simpler, while long methods benefit from more context for prediction, but their names are usually longer, more diverse and sparse, for example: generateTreeSetHashSetSpoofingSetInteger which has 17 lines of code.

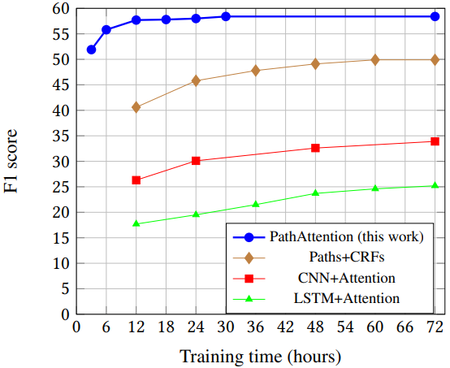

Speed

Fig. 5 shows the test F1 score over training time for each of the evaluated models. In just 3 hours, our model achieves results that are 88% as good as its final results, and in 6 hours results that are 95% as good, with both being substantially higher than the best results of the baseline models. Our model achieves its best results after 30 hours.

Table 3 shows the approximate prediction rate of the different models. The syntactic preprocessing time of our model is negligible but is included in the calculation. As shown, due to their complexity and expensive beam search on prediction, the other models are several orders of magnitude slower than ours, limiting their applicability.

Data Efficiency

The results reported in Table 3 were obtained using our full and large training corpus, to demonstrate the ability of our approach to leverage enormous amounts of training data in a relatively short training time. However, in order to investigate the data efficiency of our model, we also performed experiments using smaller training corpora which are not reported in detail here. With 20% of the amount of data, the F1 score of our model drops by only 50%. With 5% of the data, the F1 score drops only to 30% of our top results. We do not focus on this series of experiments here, since our model can process more than a thousand examples per second, so there is no significant practical point in deliberately limiting the size of the training corpus.

6.2 Evaluation of Alternative Designs

We experiment with alternative model designs, in order to understand the contribution of each network component.

Attention. As we refer to our approach as soft-attention, we examine two other approaches which are the extreme alternatives to our approach:

- (1) No-attention — in which every path-context is given an equal weight: the model uses the ordinary average of the path-contexts rather than learning a weighted average.

- (2) Hard-attention — in which instead of placing the attention “softly” over the path-contexts, all the attention is given to a single path-context, i.e., the network learns to select a single most important path-context at a time.

A new model was trained for each of these alternative designs. However, training hard-attention neural networks is difficult, because the gradient of the argmax function is zero almost everywhere. Therefore, we experimented with an additional approach: train-soft, predict-hard, in which training is performed using soft-attention (as in our ordinary model), and prediction is performed using hard-attention. Table 4 shows the results of all the compared alternative designs. As seen, hard-attention achieves the lowest results. We conclude that when predicting method names, or in general describing code snippets, it is more beneficial to use all the contexts with equal weights than to focus on the single most important one. Train-soft, predict-hard improves over hard training and achieves results similar to no-attention. As soft-attention achieves higher scores than all of the alternatives, both in training and in prediction, this experiment shows its contribution as a “sweet spot” between no-attention and hard-attention.

| Model Design | Precision | Recall | F1 |
|---|---|---|---|
| No-attention | 54.4 | 45.3 | 49.4 |
| Hard-attention | 42.1 | 35.4 | 38.5 |
| Train-soft, predict-hard | 52.7 | 45.9 | 49.1 |
| Soft-attention | 63.1 | 54.4 | 58.4 |
| Element-wise soft-attention | 63.7 | 55.4 | 59.3 |
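
The three aggregation schemes compared above differ only in how the weights over path-contexts are chosen. The following is a minimal NumPy sketch of that difference, not the authors' implementation: the function name `aggregate`, the `mode` strings, and the random toy inputs are assumptions made for illustration.

```python
import numpy as np

def aggregate(contexts, a, mode="soft"):
    """Aggregate n combined context vectors (shape n x d) into one code vector.

    `a` is a single attention vector of shape (d,). The mode names are
    illustrative labels for the designs described in the text.
    """
    n = contexts.shape[0]
    scores = contexts @ a                      # one score per path-context
    if mode == "none":                         # no-attention: ordinary average
        weights = np.full(n, 1.0 / n)
    elif mode == "hard":                       # hard-attention: single best context
        weights = np.zeros(n)
        weights[np.argmax(scores)] = 1.0
    elif mode == "soft":                       # soft-attention: softmax over scores
        exp = np.exp(scores - scores.max())    # subtract max for numerical stability
        weights = exp / exp.sum()
    else:
        raise ValueError(f"unknown mode: {mode}")
    return weights @ contexts, weights

# Toy inputs (random, not real path-context vectors).
rng = np.random.default_rng(0)
contexts = rng.normal(size=(5, 4))
a = rng.normal(size=4)
for mode in ("none", "hard", "soft"):
    code_vector, weights = aggregate(contexts, a, mode)
    print(mode, np.round(weights, 3))
```

In this sketch, train-soft, predict-hard would correspond to learning `a` with `mode="soft"` and calling `aggregate` with `mode="hard"` at prediction time.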

Removing the Fully-Connected Layer

To understand the contribution of each component of our model, we experiment with removing the fully connected layer (described in Section 4.2). In this experiment, soft-attention is applied directly on the context-vectors instead of the combined context-vectors. This experiment resulted in the same final F1 score as our regular model. Even though its training rate (training examples per second) was faster, it took more actual training time to achieve the same results. For example, reaching results that are 95% as good as the final results took 12 hours instead of 6, and reaching the final results took a few more hours than with our standard model.

Element-wise Soft-attention

We also experimented with element-wise soft-attention. In this design, instead of using a single attention vector $\mathbf{a} \in \R^d$ to compute the attention for the whole combined context vector $\tilde{\mathbf{c}}_i$, there are $d$ attention vectors $\mathbf{a}_1, \ldots, \mathbf{a}_d \in \R^d$, and each of them is used to compute the attention for a different element. Therefore, the attention weight for element $j$ of a combined context vector $\tilde{\mathbf{c}}_i$ is: $\alpha_{i_{j}}=\dfrac{\exp \left(\tilde{\mathbf{c}}_{i}^{T} \cdot \mathbf{a}_{j}\right)}{\displaystyle \sum_{k=1}^{n} \exp \left(\tilde{\mathbf{c}}_{k}^{T} \cdot \mathbf{a}_{j}\right)}$. This variation allows the model to compute a different attention score for each element in the combined context vector, instead of computing the same attention score for the whole combined context vector. This model achieved an F1 score of 59.3 (on the full test set), which is even higher than our standard soft-attention model, but since this model gives different attention to different elements within the same context vector, it is more difficult to interpret. Thus, this is an alternative model that gives slightly better results at the cost of interpretability and slower training.
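
The weight formula above can be sketched in a few lines of NumPy. This is an illustrative sketch only: the function name `elementwise_soft_attention` and the layout of `A` (row $j$ holds $\mathbf{a}_j$) are assumptions, and so is the final aggregation step, which takes element $j$ of the code vector to be the $\alpha_{i_j}$-weighted sum of element $j$ over all contexts (the text gives only the weights).

```python
import numpy as np

def elementwise_soft_attention(contexts, A):
    """Element-wise soft-attention over n combined context vectors.

    contexts: array of shape (n, d), row i is the combined context vector c_i.
    A:        array of shape (d, d), row j is the attention vector a_j
              (this layout is an assumption of the sketch).
    Returns (code_vector of shape (d,), alpha of shape (n, d)).
    """
    scores = contexts @ A.T                             # scores[i, j] = c_i . a_j
    scores = scores - scores.max(axis=0, keepdims=True) # stabilise the softmax
    exp = np.exp(scores)
    alpha = exp / exp.sum(axis=0, keepdims=True)        # softmax over contexts, per element j
    # Assumed aggregation: element j is weighted by its own attention column.
    code_vector = (alpha * contexts).sum(axis=0)
    return code_vector, alpha
```

When every row of `A` is the same vector $\mathbf{a}$, all columns of `alpha` coincide and the sketch reduces to ordinary soft-attention with a single attention vector.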

6.3 Data Ablation Study

The contribution of each path-context element. To understand the contribution of each component of a path-context, we evaluate our best model on the same test set in the same settings, except that one or more input locations are “hidden” and replaced with a constant “UNK” symbol, such that the model cannot use these elements for prediction. With the “full” representation denoted as $\langle x_s, p, x_t\rangle$, the following experiments were performed:

- “only-values” - using only the values of the terminals for prediction, without paths, and therefore representing each path-context as: $\langle x_s , \_\_ , x_t\rangle$.

- “no-values” - using only the path: $\langle \_\_,p, \_\_\rangle$, without identifiers and keywords.

- “value-path” - allowing the model to use a path and one of its values: $\langle x_s ,p, \_\_\rangle$.

- “one-value” - using only one of the values: $\langle x_s , \_\_, \_\_ \rangle$.
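
The hiding step used in these experiments can be sketched as a simple masking function. This is a minimal illustration, not the authors' evaluation code: the `ABLATIONS` table, the function name `mask_path_context`, and the example path string are hypothetical.

```python
UNK = "UNK"  # constant symbol that replaces a hidden element

# Which of (x_s, p, x_t) stay visible in each setting listed above.
ABLATIONS = {
    "full":        (True,  True,  True),
    "only-values": (True,  False, True),
    "no-values":   (False, True,  False),
    "value-path":  (True,  True,  False),
    "one-value":   (True,  False, False),
}

def mask_path_context(path_context, setting):
    """Replace the hidden elements of a (x_s, p, x_t) triple with UNK."""
    keep = ABLATIONS[setting]
    return tuple(elem if visible else UNK
                 for elem, visible in zip(path_context, keep))

# Hypothetical path-context; the path string format is made up for the example.
example = ("x", "Name^Assign_Literal", "7")
for setting in ABLATIONS:
    print(setting, mask_path_context(example, setting))
```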

The results of these experiments are presented in Table 5. Interestingly, the “full” representation ($\langle x_s, p, x_t\rangle$) achieves better results than the sum of “only-values” and “no-values”, without each of them alone “covering” for the other. This shows the importance of using both paths and keywords, and letting the attention mechanism learn how to combine them in every example. The lower results of “only-values” (compared to the full representation) show the importance of using syntactic paths. As shown in the table, dropping identifiers and keywords hurts the model more than dropping paths, but combining both of them achieves significantly better results. “only-values” gets better results than “no-values”, and “one-value” gets the worst results.

| Path-context input | | Precision | Recall | F1 |
|---|---|---|---|---|
| Full: | $\langle x_s, p, x_t \rangle$ | 63.1 | 54.4 | 58.4 |
| Only-values: | $\langle x_s, \_\_, x_t \rangle$ | 44.9 | 37.1 | 40.6 |
| No-values: | $\langle \_\_, p, \_\_\rangle$ | 12.0 | 12.6 | 12.3 |
| Value-path: | $\langle x_s, p, \_\_\rangle$ | 31.5 | 30.1 | 30.7 |
| One-value: | $\langle x_s, \_\_, \_\_\rangle$ | 10.6 | 10.4 | 10.7 |

The low results of “no-values” suggest that predicting names for methods with obfuscated names is a much more difficult task. In this scenario, it might be more beneficial to predict variable names as a first step using a model that was trained specifically for this task, and then predict a method name given the predicted variable names.

6.4 Qualitative Evaluation

6.4.1 Interpreting Attention

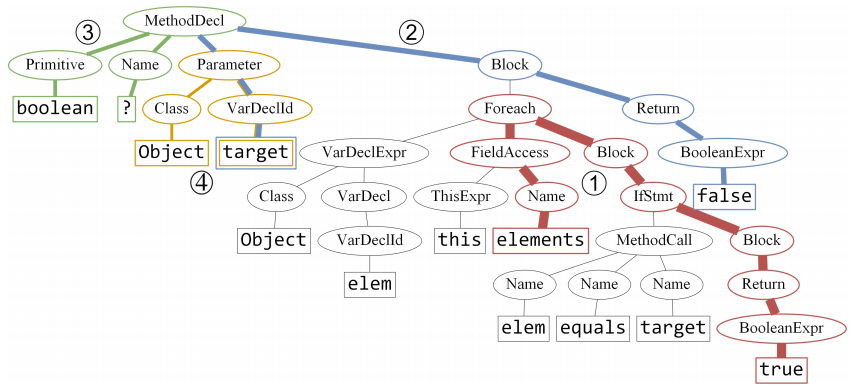

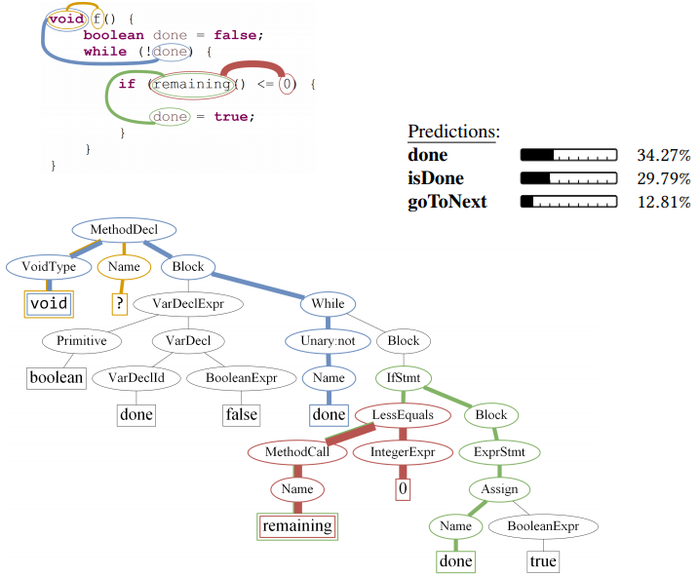

Despite the “black-box” reputation of neural networks, our model is partially interpretable thanks to the attention mechanism, which allows us to visualize the distribution of weights over the bag of path-contexts. Figure 6 illustrates a few predictions, along with the path-contexts that were given the most attention in each method. The width of each of the visualized paths is proportional to the attention weight that it was allocated. We note that in these figures the path is represented only as a connecting line between tokens, while in fact it contains rich syntactic information which is not expressed properly in the figures. Figure 7 and Figure 8 portray the paths on the AST.


The examples of Figure 6 are particularly interesting since the top names are accurate and descriptive (reverseArray and reverse; isPrime; sort and bubbleSort) but do not appear explicitly in the method bodies. The method bodies, and specifically the most attended path-contexts, describe lower-level operations. Suggesting a descriptive name for each of these methods is difficult and might take time even for a trained human programmer. The average method length in our dataset of real-world projects is 7 lines, and the examples presented in this section are longer than this average length.

Figure 7 and Figure 8 show additional predictions of our model, along with the path-contexts that were given the most attention in each example. The path-contexts are portrayed both on the code and on the AST. An interactive demo of method name predictions and name vector similarities can be found at: http://code2vec.org. When manually examining the predictions of custom inputs, it is important to note that a machine learning model learns to predict names for examples that are likely to be observed “in the wild”. Thus, it can be misled by confusing adversarial examples that are unlikely to be found in real code.

6.4.2 Semantic Properties of the Learned Embeddings

Surprisingly, the learned method name vectors encode many semantic similarities and even analogies that can be represented as linear additions and subtractions. When simply looking for the closest vector (in terms of cosine distance) to a given method name vector, the resulting neighbors usually contain semantically similar names; e.g. size is most similar to getSize, length, getCount, and getLength. Table 1 shows additional examples of name similarities.

When looking for a vector that is close to two other vectors, we often find names that are semantic combinations of the two other names. Specifically, we can look for the vector $\mathbf{v}$ that maximizes the similarity to two vectors $\mathbf{a}$ and $\mathbf{b}$:

| [math]\displaystyle{ \operatorname{argmax}_{\boldsymbol{v} \in V}(\operatorname{sim}(\boldsymbol{a}, \boldsymbol{v}) \circledast \operatorname{sim}(\boldsymbol{b}, \boldsymbol{v})) }[/math] | (3) |

where $\circledast$ is an arithmetic operator used to combine two similarities, and $V$ is a vocabulary of learned name vectors, $tags\_vocab$ in our case. When measuring similarity using cosine distance, Equation (3) can be written as:

| [math]\displaystyle{ \operatorname{argmax}_{\boldsymbol{v} \in V}(\cos (\boldsymbol{a}, \boldsymbol{v}) \circledast \cos (\boldsymbol{b}, \boldsymbol{v})) }[/math] | (4) |

Neither vec(equals) nor vec(toLowerCase) is the closest vector to vec(equalsIgnoreCase) individually. However, assigning $\mathbf{a}$ = vec(equals), $\mathbf{b}$ = vec(toLowerCase) and using “$+$” as the operator $\circledast$ results in the vector of equalsIgnoreCase as the vector that maximizes Equation (4) for $\mathbf{v}$.

Previous work in NLP has suggested a variety of methods for combining similarities (Levy and Goldberg 2014a) for the task of natural language analogy recovery. Specifically, when using “$+$” as the operator $\circledast$, as done by Mikolov et al. 2013b, and denoting $\hat{\boldsymbol{u}}$ as the unit vector of a vector $\mathbf{u}$, Equation (4) can be simplified to:

| [math]\displaystyle{ \operatorname{argmax}_{\boldsymbol{v} \in V}\left((\hat{\boldsymbol{a}}+\hat{\boldsymbol{b}}) \cdot \hat{\boldsymbol{v}}\right) }[/math] | (5) |

since the cosine similarity between two vectors equals the dot product of their unit vectors. In particular, this can be used as a simpler way to find the above combination of method name similarities:

$vec\left(\mathrm{equals}\right) + vec \left(\mathrm{toLowerCase}\right) \approx vec \left(\mathrm{equalsIgnoreCase}\right)$

This implies that the model has learned that equalsIgnoreCase is the most similar name to equals and toLowerCase combined. Table 6 shows some of these examples.

| $A$ | $+B$ | $\approx C$ |
|---|---|---|
| get | value | getValue |
| get | instance | getInstance |
| getRequest | addBody | postRequest |
| setHeaders | setRequestBody | createHttpPost |
| remove | add | update |
| decode | fromBytes | deserialize |
| encode | toBytes | serialize |
| equals | toLowerCase | equalsIgnoreCase |
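
The combination search with “$+$” as the operator amounts to maximizing the dot product between the sum of the two unit vectors and each candidate's unit vector. The sketch below illustrates this on hand-made toy vectors, which are not learned embeddings. The names `unit`, `combine`, and `toy_vocab` are assumptions for the example.

```python
import numpy as np

def unit(u):
    """Return u scaled to unit length."""
    u = np.asarray(u, dtype=float)
    return u / np.linalg.norm(u)

def combine(a, b, vocab):
    """Return the name v in vocab that maximizes (a_hat + b_hat) . v_hat."""
    query = unit(a) + unit(b)
    return max(vocab, key=lambda name: float(query @ unit(vocab[name])))

# Hand-made toy vectors chosen so the example is easy to follow;
# real name vectors would come from a trained model.
toy_vocab = {
    "equals":           [1.0, 0.0, 0.0],
    "toLowerCase":      [0.0, 1.0, 0.0],
    "equalsIgnoreCase": [1.0, 1.0, 0.0],
    "hashCode":         [0.0, 0.0, 1.0],
}

result = combine(toy_vocab["equals"], toy_vocab["toLowerCase"], toy_vocab)
print(result)  # equalsIgnoreCase
```

Because each cosine is a dot product of unit vectors, the score computed by `combine` equals the sum of the two cosine similarities for every candidate.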

Similarly to the way that syntactic and semantic word analogies were found using vector calculation in NLP by Mikolov et al. 2013a, c, the method name vectors that were learned by our model also express similar syntactic and semantic analogies. For example, $vec \left(\mathrm{download}\right)- vec \left(\mathrm{receive}\right)+vec \left(\mathrm{send}\right)$ results in a vector whose closest neighbor is the vector for upload. This analogy can be read as: “receive is to send as download is to: upload”. More examples are shown in Table 7.

| A: | B | C: | D |
|---|---|---|---|
| open: | connect | close: | disconnect |
| key: | keys | value: | values |
| lower: | toLowerCase | upper: | toUpperCase |
| down: | onMouseDown | up: | onMouseUp |
| warning: | getWarningCount | error: | getErrorCount |
| value: | containsValue | key: | containsKey |
| start: | activate | end: | deactivate |
| receive: | download | send: | upload |
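
An analogy query of the form “A is to B as C is to ?” can be sketched as a nearest-neighbor search around vec(B) - vec(A) + vec(C). The example below uses two-dimensional toy vectors constructed by hand so that the arithmetic is visible. The function name `analogy`, the vectors, and the choice to exclude the three query names from the candidates are all assumptions of this sketch.

```python
import numpy as np

def analogy(a, b, c, names, matrix):
    """Return the name closest (by cosine) to vec(b) - vec(a) + vec(c).

    The three query names are excluded from the candidates, a common
    convention that is assumed here rather than taken from the text.
    """
    idx = {name: i for i, name in enumerate(names)}
    target = matrix[idx[b]] - matrix[idx[a]] + matrix[idx[c]]
    unit_rows = matrix / np.linalg.norm(matrix, axis=1, keepdims=True)
    sims = unit_rows @ (target / np.linalg.norm(target))
    for name in (a, b, c):
        sims[idx[name]] = -np.inf
    return names[int(np.argmax(sims))]

# Toy vectors: first axis is direction (receive vs. send),
# second axis marks the file-transfer names.
names = ["receive", "send", "download", "upload", "connect"]
matrix = np.array([[-1.0, 0.0],
                   [ 1.0, 0.0],
                   [-1.0, 1.0],
                   [ 1.0, 1.0],
                   [ 0.0, -1.0]])

print(analogy("receive", "download", "send", names, matrix))  # upload
```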

7. Limitations of Our Model

In this section we discuss some of the limitations of our model and raise potential future research directions.

Closed Labels Vocabulary

One of the major limiting factors is the closed label space we use as the target: our model is able to predict only labels that were observed as-is at training time. This works very well for the vast majority of targets (that repeat across multiple programs), but as the targets become very specific and diverse (e.g., findUserInfoByUserIdAndKey) the model is unable to compose such names and usually catches only the main idea (for example: findUserInfo). Overall, on a general dataset, our model outperforms the baselines by a large margin even though the baselines are technically able to produce complex names.

Sparsity and Data-hunger

There are three main sources of sparsity in our model:

- Terminal values are represented as whole symbols - e.g., each of newArray and oldArray is a unique symbol that has an embedding of its own, even though they share most of their characters (Array).

- AST paths are represented as monolithic symbols - two paths that share most of their AST nodes but differ in only a single node are represented as distinct paths which are assigned with distinct embeddings.

- Target nodes are whole symbols, even if they are composed of more common smaller symbols.

These sources of sparsity make the model consume a lot of trained parameters to keep an embedding for each observed value. The large number of trained parameters results in large GPU memory consumption at training time, increases the size of the stored model (about 1.4 GB), and requires a lot of training data. Further, this sparseness potentially hurts the results, because modelling source code with a finer granularity of atomic units might have allowed the model to represent more unseen contexts as compositions of smaller atomic units, and would have increased the repetitiousness of atomic units across examples. In the model described in this paper, paths, terminal values, or target values that were not observed at training time cannot be represented. To address these limitations we train the model on a huge dataset of 14M examples, but the model might not perform as well using smaller datasets. Although it requires a lot of GPU memory, training our model on millions of examples fits in the memory of a Tesla K80 GPU, which is relatively old.
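
To make the whole-symbol limitation concrete, the sketch below contrasts a whole-symbol vocabulary with a hypothetical subtoken vocabulary. Subtoken splitting is not part of the model described in this paper. It is shown only as one possible finer granularity, and the function name `split_subtokens`, the camelCase regular expression, and the example identifiers are assumptions.

```python
import re

def split_subtokens(identifier):
    """Split a camelCase identifier into lowercase subtokens."""
    parts = re.findall(r"[A-Z]?[a-z0-9]+|[A-Z]+(?![a-z])", identifier)
    return [p.lower() for p in parts]

# Hypothetical identifiers observed at training time, and one unseen identifier.
seen = ["newArray", "oldArray", "newList"]
unseen = "oldList"

whole_vocab = set(seen)
subtoken_vocab = {s for name in seen for s in split_subtokens(name)}

# As a whole symbol the unseen identifier has no embedding ...
print(unseen in whole_vocab)                                       # False
# ... but every one of its subtokens was observed in training.
print(all(s in subtoken_vocab for s in split_subtokens(unseen)))   # True
```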